A library of solvers that leverage neuromorphic hardware for constrained optimization. Lava-Optimization is part of the Lava Framework.

Neuromorphic Constrained Optimization Library

About the Project

Constrained optimization searches for the values of input variables that minimize or maximize a given objective function, while the variables are subject to constraints. This kind of problem is ubiquitous throughout scientific domains and industries. Constrained optimization is a promising application for neuromorphic computing as it naturally aligns with the dynamics of spiking neural networks. When individual neurons represent states of variables, the neuronal connections can directly encode constraints between the variables: in its simplest form, recurrent inhibitory synapses connect neurons that represent mutually exclusive variable states, while recurrent excitatory synapses link neurons representing reinforcing states. Implemented on massively parallel neuromorphic hardware, such a spiking neural network can simultaneously evaluate conflicts and cost functions involving many variables, and update all variables accordingly. This allows a quick convergence towards an optimal state. In addition, the fine-scale timing dynamics of SNNs allow them to readily escape from local minima.

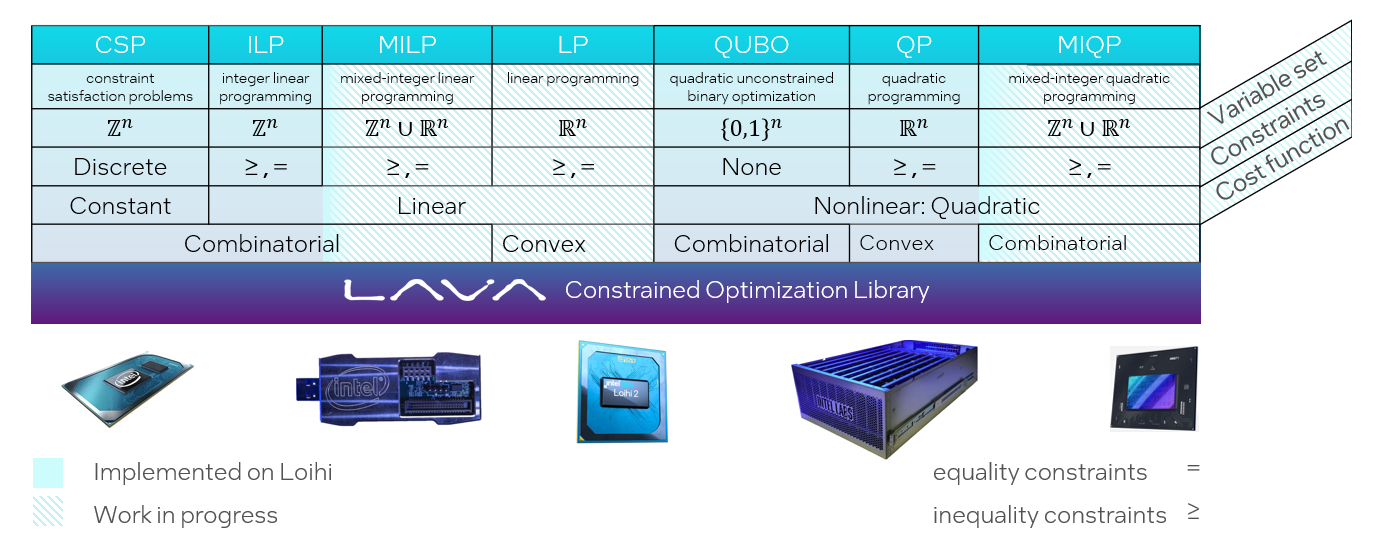
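The encoding described above, where inhibitory synapses connect neurons that represent mutually exclusive variable states, can be sketched in plain NumPy. This is a minimal illustration under stated assumptions, not the library's implementation: the helper names `build_inhibition_matrix` and `count_conflicts`, the weight value of -1, and the toy "neighbors must differ" constraint are all hypothetical.

```python
import numpy as np


def build_inhibition_matrix(num_vars, num_values, must_differ):
    """Build a symmetric weight matrix with one neuron per (variable, value).

    Neuron index = var * num_values + value. A weight of -1 (an assumed,
    illustrative strength) marks an inhibitory connection between two
    neurons whose states are mutually exclusive.
    """
    n = num_vars * num_values
    weights = np.zeros((n, n), dtype=int)

    # A variable can hold only one value at a time: neurons of the same
    # variable inhibit each other.
    for var in range(num_vars):
        for a in range(num_values):
            for b in range(num_values):
                if a != b:
                    weights[var * num_values + a, var * num_values + b] = -1

    # Constrained pairs must take different values: neurons that encode the
    # same value on both variables inhibit each other.
    for u, v in must_differ:
        for value in range(num_values):
            i, j = u * num_values + value, v * num_values + value
            weights[i, j] = -1
            weights[j, i] = -1
    return weights


def count_conflicts(weights, active):
    """Count inhibitory connections between simultaneously active neurons."""
    # Each conflicting pair appears twice in the symmetric matrix.
    return int(-(active @ weights @ active) // 2)


# Three variables with two possible values each; neighbors 0-1 and 1-2 must differ.
W = build_inhibition_matrix(num_vars=3, num_values=2, must_differ=[(0, 1), (1, 2)])
valid = np.array([1, 0, 0, 1, 1, 0])      # values (0, 1, 0): no conflict
invalid = np.array([1, 0, 1, 0, 1, 0])    # values (0, 0, 0): two conflicts
print(count_conflicts(W, valid), count_conflicts(W, invalid))
```

In a spiking network the conflict count is never computed explicitly; the inhibitory weights simply suppress conflicting neurons in parallel. The sketch only shows how the constraint structure maps onto connectivity.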

This Lava repository currently supports solvers for the following constrained optimization problems:

- Quadratic Programming (QP)

- Quadratic Unconstrained Binary Optimization (QUBO)

As development continues, the library will support more constrained optimization problems that are relevant for robotics and operations research. The planned development order below is chosen so that new solvers build on the capabilities of existing ones:

- Constraint Satisfaction Problems (CSP) [problem interface already available]

- Integer Linear Programming (ILP)

- Mixed-Integer Linear Programming (MILP)

- Mixed-Integer Quadratic Programming (MIQP)

- Linear Programming (LP)

Taxonomy of Optimization Problems

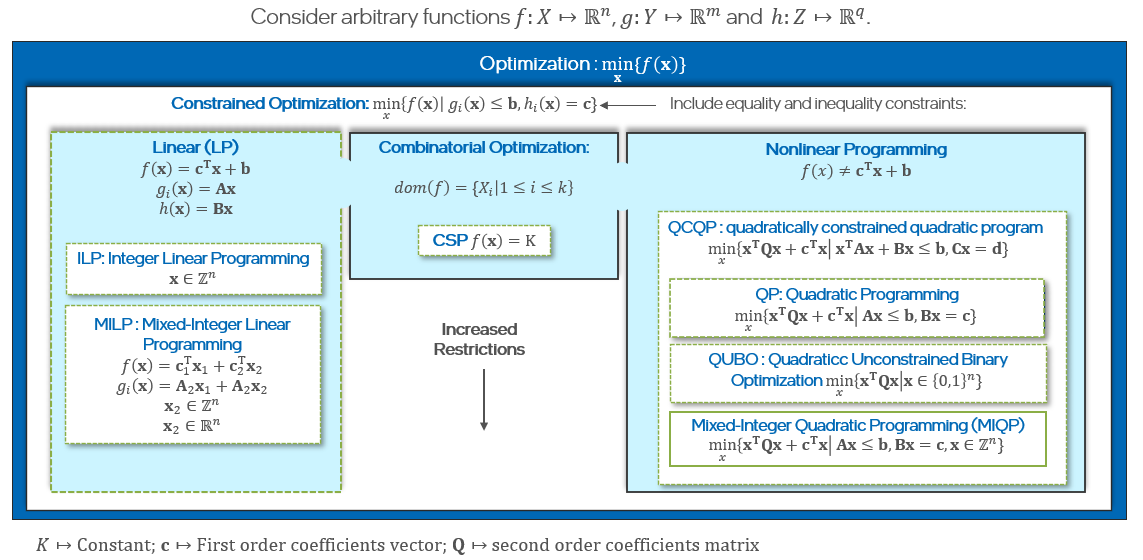

More formally, the general form of a constrained optimization problem is:

$$ \displaystyle{\min_{x} \lbrace f(x) | g_i(x) \leq b, h_i(x) = c.\rbrace} $$

Here, $f(x)$ is the objective function to be optimized, while the functions $g_i(x)$ and $h_i(x)$ restrict valid solutions to regions of the state space that satisfy the respective inequality and equality constraints. The vector $x$ can be continuous, discrete, or a mixture of both. We can then construct the following taxonomy of optimization problems according to the characteristics of the variable domain and of $f$, $g$, and $h$:

In the long run, lava-optimization aims to offer support to solve all of the problems in the figure with a neuromorphic backend.

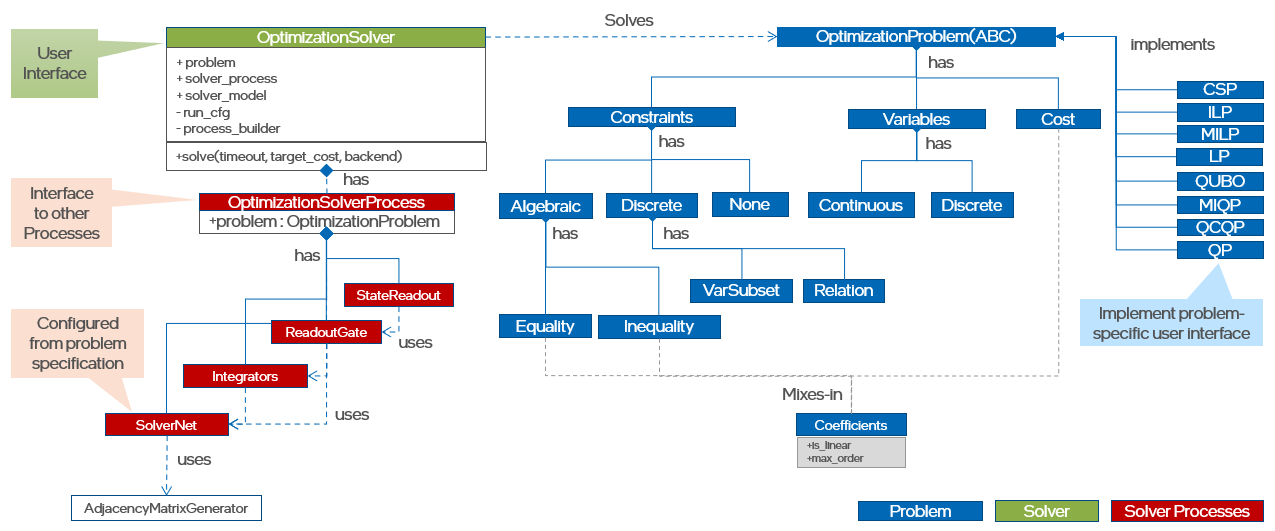
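As concrete instances of the general form above, the two currently supported problem classes can be written as follows. This is a sketch under assumed conventions: the symbols $Q$, $p$, $A$, and $k$ are chosen to match the argument names used in the examples later in this document, and the factor $\tfrac{1}{2}$ is the common textbook convention rather than something this document states.

$$ \text{QP:} \quad \min_{x \in \mathbb{R}^n} \; \tfrac{1}{2} x^\top Q x + p^\top x \quad \text{subject to} \quad A x \leq k $$

$$ \text{QUBO:} \quad \min_{x \in \lbrace 0, 1 \rbrace^n} \; x^\top Q x $$

In the QP, $f$ is quadratic, the $g_i$ are linear, and $x$ is continuous. In QUBO there are no $g_i$ or $h_i$ at all; the only restriction is the binary domain of $x$.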

OptimizationSolver and OptimizationProblem Classes

The figure below shows the general architecture of the library. We harness the general definition of constrained optimization problems to create OptimizationProblem instances by composing Constraints, Variables, and Cost classes, which describe the characteristics of every problem subclass. For example, a quadratic program (QP) is described by linear equality and inequality constraints, variables on the continuous domain, and a quadratic cost function. A constraint satisfaction problem (CSP), in contrast, is described by discrete constraints, each defined by a subset of variables and a binary relation specifying the mutually allowed values of those discrete variables, and has a constant cost function, since its only goal is to satisfy the constraints.

An API for every problem class can be created by inheriting from OptimizationProblem and composing particular flavors of Constraints, Variables, and Cost.

An OptimizationProblem instance is the valid input for instantiating the generic OptimizationSolver class. In this way, the OptimizationSolver interface stays fixed, while the OptimizationProblem allows the greatest flexibility for creating new APIs. Under the hood, the OptimizationSolver understands the composite structure of the OptimizationProblem and in turn composes the required solver components and Lava processes.
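The composition idea can be illustrated with a small, self-contained sketch. All class names below (`DiscreteVariables`, `RelationConstraint`, `ToyProblem`, `ToyBruteForceSolver`) are hypothetical stand-ins and not lava-optimization classes, and the brute-force search replaces the neuromorphic solver purely for illustration. The example models a tiny CSP as described above: discrete variables, a binary relation listing allowed value pairs, and a constant cost.

```python
from dataclasses import dataclass
from itertools import product
from typing import Callable, Optional, Sequence, Tuple


@dataclass(frozen=True)
class DiscreteVariables:
    """Hypothetical Variables flavor: one finite domain per variable."""
    domains: Sequence[Sequence[int]]


@dataclass(frozen=True)
class RelationConstraint:
    """Hypothetical Constraints flavor: allowed value pairs for two variables."""
    var_subset: Tuple[int, int]
    allowed: frozenset

    def is_satisfied(self, assignment: Tuple[int, ...]) -> bool:
        i, j = self.var_subset
        return (assignment[i], assignment[j]) in self.allowed


@dataclass(frozen=True)
class ToyProblem:
    """A problem is just the composition of variables, constraints, and cost."""
    variables: DiscreteVariables
    constraints: Sequence[RelationConstraint]
    cost: Callable[[Tuple[int, ...]], float]


class ToyBruteForceSolver:
    """Fixed solver interface: it accepts any ToyProblem and inspects its parts."""

    def __init__(self, problem: ToyProblem):
        self.problem = problem

    def solve(self) -> Optional[Tuple[int, ...]]:
        best, best_cost = None, float("inf")
        for assignment in product(*self.problem.variables.domains):
            if all(c.is_satisfied(assignment) for c in self.problem.constraints):
                cost = self.problem.cost(assignment)
                if cost < best_cost:
                    best, best_cost = assignment, cost
        return best


# CSP: two variables in {0, 1, 2} that must satisfy x0 < x1; constant cost.
csp = ToyProblem(
    variables=DiscreteVariables(domains=[(0, 1, 2), (0, 1, 2)]),
    constraints=[RelationConstraint((0, 1), frozenset({(0, 1), (0, 2), (1, 2)}))],
    cost=lambda assignment: 0.0,
)
solution = ToyBruteForceSolver(csp).solve()
print(solution)
```

Swapping in a different variable, constraint, or cost flavor changes the problem class without touching the solver's interface, which is the design choice the paragraph above describes.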

Tutorials

Quadratic Programming

Quadratic Unconstrained Binary Optimization

Examples

Solving QP problems

```python
import numpy as np
from lava.lib.optimization.problems.problems import QP
from lava.lib.optimization.solvers.generic.solver import (
    SolverConfig,
    OptimizationSolver,
)

# Define QP problem
Q = np.array([[100, 0, 0], [0, 15, 0], [0, 0, 5]])
p = np.array([[1, 2, 1]]).T
A = -np.array([[1, 2, 2], [2, 100, 3]])
k = -np.array([[-50, 50]]).T

qp = QP(Q, p, A, k)

# Define hyper-parameters
hyperparameters = {
    "neuron_model": "qp-lp_pipg",
    "alpha_mantissa": 160,
    "alpha_exponent": -8,
    "beta_mantissa": 7,
    "beta_exponent": -10,
    "decay_schedule_parameters": (100, 100, 0),
    "growth_schedule_parameters": (3, 2),
}

# Solve using the generic OptimizationSolver
solver = OptimizationSolver(problem=qp)
config = SolverConfig(timeout=400, hyperparameters=hyperparameters, backend="Loihi2")
solver.solve(config=config)
```
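The problem data above can be sanity-checked on a CPU before running on neuromorphic hardware. The sketch below assumes the QP has the form "minimize ½ xᵀQx + pᵀx subject to Ax ≤ k"; that convention is an assumption and is not confirmed by this document. The helpers `qp_cost` and `is_feasible` are hypothetical and use only NumPy.

```python
import numpy as np

# Same problem data as in the example above.
Q = np.array([[100, 0, 0], [0, 15, 0], [0, 0, 5]])
p = np.array([[1, 2, 1]]).T
A = -np.array([[1, 2, 2], [2, 100, 3]])
k = -np.array([[-50, 50]]).T


def qp_cost(x):
    """Evaluate 0.5 * x^T Q x + p^T x (assumed cost convention)."""
    x = np.asarray(x, dtype=float)
    return float(0.5 * x @ Q @ x + p.ravel() @ x)


def is_feasible(x, tol=1e-9):
    """Check A x <= k element-wise (assumed constraint convention)."""
    x = np.asarray(x, dtype=float)
    return bool(np.all(A @ x <= k.ravel() + tol))


candidate = [0.0, 0.5, 0.0]
print(is_feasible([0.0, 0.0, 0.0]))  # the origin violates the second constraint
print(is_feasible(candidate), qp_cost(candidate))
```

A check like this is useful for judging whether a solution returned by a solver is plausible; it does not replace the solver itself.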

Solving QUBO

```python
import numpy as np
from lava.lib.optimization.problems.problems import QUBO
from lava.lib.optimization.solvers.generic.solver import (
    SolverConfig,
    OptimizationSolver,
)

# Define QUBO problem
q = np.array([[-5, 2, 4, 0],
              [ 2,-3, 1, 0],
              [ 4, 1,-8, 5],
              [ 0, 0, 5,-6]])
qubo = QUBO(q)

# Solve using generic OptimizationSolver
solver = OptimizationSolver(problem=qubo)
config = SolverConfig(timeout=3000, target_cost=-50, backend="Loihi2")
solution = solver.solve(config=config)
```
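For a problem this small, the optimum can be found by exhaustive enumeration, which gives a reference value to compare a solver result against. The sketch below assumes the QUBO cost is xᵀqx with x ∈ {0, 1}ⁿ (an assumption about the convention, not stated in this document); `brute_force_qubo` is a hypothetical helper that needs only NumPy.

```python
from itertools import product

import numpy as np

# Same matrix as in the example above.
q = np.array([[-5, 2, 4, 0],
              [2, -3, 1, 0],
              [4, 1, -8, 5],
              [0, 0, 5, -6]])


def brute_force_qubo(q):
    """Enumerate all 2^n binary vectors and return the one minimizing x^T q x."""
    n = q.shape[0]
    best_x, best_cost = None, float("inf")
    for bits in product((0, 1), repeat=n):
        x = np.array(bits)
        cost = int(x @ q @ x)
        if cost < best_cost:
            best_x, best_cost = x, cost
    return best_x, best_cost


best_x, best_cost = brute_force_qubo(q)
print(best_x, best_cost)  # prints: [1 0 0 1] -11
```

Under this cost convention the minimum is -11, so the `target_cost=-50` in the example above cannot be reached and the solver would presumably run until its timeout. Enumeration scales as 2ⁿ and is only practical for very small n.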

Getting Started

Requirements

- A working installation of Lava, which is installed automatically by the Poetry steps below. For custom installs, see the Lava installation tutorial.

Installation

[Linux/macOS]

```bash
cd $HOME
git clone git@github.com:lava-nc/lava-optimization.git
cd lava-optimization
curl -sSL https://install.python-poetry.org | python3 -
poetry config virtualenvs.in-project true
poetry install
source .venv/bin/activate
pytest
```

[Windows]

```powershell
# Commands using PowerShell
cd $HOME
git clone git@github.com:lava-nc/lava-optimization.git
cd lava-optimization
python3 -m venv .venv
.venv\Scripts\activate
pip install -U pip
curl -sSL https://install.python-poetry.org | python3 -
poetry config virtualenvs.in-project true
poetry install
pytest
```

[Alternative] Installing Lava via Conda

If you use the Conda package manager, you can install the lava-optimization package via:

```bash
conda install lava-optimization -c conda-forge
```

Alternatively, with Intel's builds of NumPy and SciPy:

```bash
conda create -n lava-optimization python=3.9 -c intel
conda activate lava-optimization
conda install -n lava-optimization -c intel numpy scipy
conda install -n lava-optimization -c conda-forge lava-optimization --freeze-installed
```
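After either installation route, a quick import check can confirm that the packages are visible to the active Python environment. This is a minimal sketch; `can_import` is a hypothetical helper, and the module path `lava.lib.optimization` is taken from the import statements in the examples above.

```python
import importlib


def can_import(module_name):
    """Return True if the module can be imported in the current environment."""
    try:
        importlib.import_module(module_name)
    except ImportError:
        return False
    return True


for name in ("numpy", "lava", "lava.lib.optimization"):
    status = "ok" if can_import(name) else "missing"
    print(f"{name}: {status}")
```

If any entry is reported as missing, the virtual environment or Conda environment is probably not activated.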

Download files

File details

Details for the file lava_optimization-0.5.0.tar.gz.

File metadata

- Download URL: lava_optimization-0.5.0.tar.gz

- Upload date:

- Size: 4.0 MB

- Tags: Source

- Uploaded using Trusted Publishing? Yes

- Uploaded via: twine/5.1.0 CPython/3.12.5

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 3aecfff7a86e86a32a4da7676b271477d03967ecd0fdabd1121f48de31475315 |
| MD5 | b7de54093e23cfe5b468782d3074d16f |
| BLAKE2b-256 | f9ba958def4f6f152563d28350b446d812cb2778304348652b174b6244ba0352 |

File details

Details for the file lava_optimization-0.5.0-py3-none-any.whl.

File metadata

- Download URL: lava_optimization-0.5.0-py3-none-any.whl

- Upload date:

- Size: 189.1 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? Yes

- Uploaded via: twine/5.1.0 CPython/3.12.5

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | af10f3f22b976597d2269548e7a40527b62e916c45df69742518f479571a7613 |
| MD5 | 60f3cd8a3ac92c1296c2ea9e1e5c8698 |
| BLAKE2b-256 | d8eaf1c32c40ca6d5e79fa487cded1342bc41a032f5117dc06c260dd96322066 |
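A downloaded file can be checked against the SHA256 digests listed above using Python's standard `hashlib` module. The sketch below is one way to do this; the helper names `sha256_of_file` and `matches_published_hash` are hypothetical, and the file path in the usage comment assumes the archive sits in the current directory.

```python
import hashlib


def sha256_of_file(path, chunk_size=1 << 20):
    """Compute the SHA256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_hash(path, expected_hex):
    """Compare a file's SHA256 digest with a published hex digest."""
    return sha256_of_file(path) == expected_hex.strip().lower()


# Usage (assumes the source archive was downloaded to the current directory):
# expected = "3aecfff7a86e86a32a4da7676b271477d03967ecd0fdabd1121f48de31475315"
# print(matches_published_hash("lava_optimization-0.5.0.tar.gz", expected))
```

Reading in chunks keeps memory use constant regardless of file size.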