A construct for AWS Glue DataBrew with CI/CD


cdk-databrew-cicd

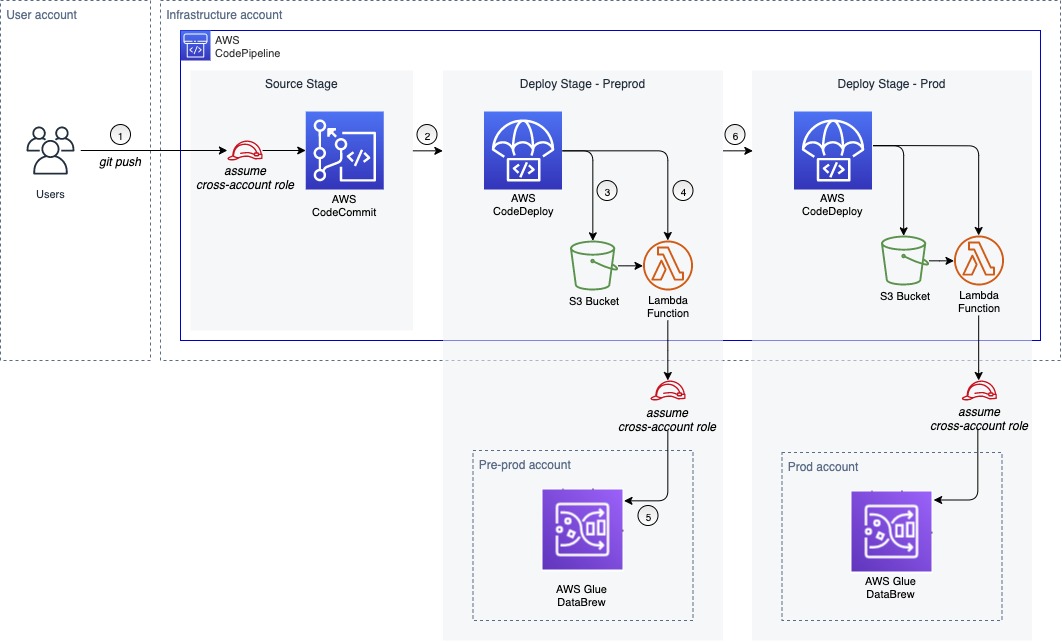

This construct creates a CodePipeline pipeline in which users push a DataBrew recipe into the CodeCommit repository, and the recipe is then deployed automatically, in order, to a pre-production AWS account and then to a production AWS account.
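The promotion order can be modeled in a few lines. This is a sketch, not the construct's implementation: it assumes each account's deployment step reports success or failure, and that a failure stops the run before the next stage, which is how CodePipeline treats a failed stage. `promote_recipe` and the `deploy` callback are hypothetical names used only for illustration.

```python
from typing import Callable, List, Tuple

# Stage order described above: pre-production first, then production.
STAGES: List[str] = ["preproduction", "production"]


def promote_recipe(recipe_name: str,
                   deploy: Callable[[str, str], bool]) -> List[Tuple[str, bool]]:
    """Deploy a recipe to each stage in order, stopping at the first failure.

    `deploy(stage, recipe_name)` is a hypothetical stand-in for the
    per-account deployment step; it returns True on success.
    """
    results: List[Tuple[str, bool]] = []
    for stage in STAGES:
        ok = deploy(stage, recipe_name)
        results.append((stage, ok))
        if not ok:
            # A failed stage halts the run, so later stages are never reached.
            break
    return results
```

For example, a deploy callback that fails only in pre-production yields `[("preproduction", False)]`, and production is never attempted.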

| npm (JS/TS) | PyPI (Python) | Maven (Java) | Go | NuGet |
|---|---|---|---|---|
| Link | Link | Link | Link | Link |


Serverless Architecture

Introduction

The architecture was introduced by Romi Boimer and Gaurav Wadhawan and was posted on the AWS Blog as Set up CI/CD pipelines for AWS Glue DataBrew using AWS Developer Tools. I converted the architecture into a CDK construct for four programming languages. Before deploying the construct, make sure you've set up a proper IAM role in both the pre-production and the production AWS accounts. You can create these roles either manually or with the `IamRole` construct provided by this library.

```typescript
import { IamRole } from 'cdk-databrew-cicd';

new IamRole(this, 'AccountIamRole', {
  environment: 'preproduction', // or 'production'
  accountID: 'ACCOUNT_ID',
  // roleName: 'OPTIONAL'
});
```
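The pipeline examples below pass role ARNs, not bare account IDs. The sketch here shows how those ARNs are assembled, assuming the role was created with the default name pattern `<environment>-Databrew-Cicd-Role` that the examples use (this default is inferred from the examples, not confirmed from the `IamRole` source). `build_role_arn` is a hypothetical helper; inside a CDK stack you would use `cdk.Aws.PARTITION` instead of a literal partition.

```python
import re
from typing import Optional

# Environments accepted by the IamRole construct's `environment` prop.
VALID_ENVIRONMENTS = ("preproduction", "production")


def build_role_arn(account_id: str, environment: str, partition: str = "aws",
                   role_name: Optional[str] = None) -> str:
    """Return the IAM role ARN to pass to DataBrewCodePipeline.

    Assumption: without an explicit role_name, the role is named
    "<environment>-Databrew-Cicd-Role", as in the README examples.
    """
    if not re.fullmatch(r"\d{12}", account_id):
        raise ValueError(f"AWS account IDs are 12 digits, got {account_id!r}")
    if environment not in VALID_ENVIRONMENTS:
        raise ValueError(f"environment must be one of {VALID_ENVIRONMENTS}")
    name = role_name or f"{environment}-Databrew-Cicd-Role"
    return f"arn:{partition}:iam::{account_id}:role/{name}"
```

If you set `roleName` on `IamRole`, pass the same value as `role_name` so the ARN matches the role that actually exists.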

Example

TypeScript

You could also refer to here.

```bash
$ cdk init --language typescript
$ yarn add cdk-databrew-cicd
```

```typescript
import * as cdk from '@aws-cdk/core';
import { DataBrewCodePipeline } from 'cdk-databrew-cicd';

class TypescriptStack extends cdk.Stack {
  constructor(scope: cdk.Construct, id: string, props?: cdk.StackProps) {
    super(scope, id, props);

    const preproductionAccountId = 'PREPRODUCTION_ACCOUNT_ID';
    const productionAccountId = 'PRODUCTION_ACCOUNT_ID';

    const dataBrewPipeline = new DataBrewCodePipeline(this, 'DataBrewCicdPipeline', {
      preproductionIamRoleArn: `arn:${cdk.Aws.PARTITION}:iam::${preproductionAccountId}:role/preproduction-Databrew-Cicd-Role`,
      productionIamRoleArn: `arn:${cdk.Aws.PARTITION}:iam::${productionAccountId}:role/production-Databrew-Cicd-Role`,
      // bucketName: 'OPTIONAL',
      // repoName: 'OPTIONAL',
      // branchName: 'OPTIONAL',
      // pipelineName: 'OPTIONAL'
    });

    new cdk.CfnOutput(this, 'OPreproductionLambdaArn', { value: dataBrewPipeline.preproductionFunctionArn });
    new cdk.CfnOutput(this, 'OProductionLambdaArn', { value: dataBrewPipeline.productionFunctionArn });
    new cdk.CfnOutput(this, 'OCodeCommitRepoArn', { value: dataBrewPipeline.codeCommitRepoArn });
    new cdk.CfnOutput(this, 'OCodePipelineArn', { value: dataBrewPipeline.codePipelineArn });
  }
}

const app = new cdk.App();
new TypescriptStack(app, 'TypescriptStack', {
  stackName: 'DataBrew-CICD'
});
```
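The four `CfnOutput` values are handy for scripting after deployment, for example when running `cdk deploy --outputs-file outputs.json`. The sketch below assumes that file is a JSON object keyed by stack, where each stack maps output logical IDs (`OPreproductionLambdaArn` and so on) to values; check in your own file which key identifies the stack (for example `DataBrew-CICD`). `read_pipeline_outputs` is a hypothetical helper.

```python
import json
from typing import Dict

# Output IDs defined in the stack examples in this README.
EXPECTED_OUTPUTS = (
    "OPreproductionLambdaArn",
    "OProductionLambdaArn",
    "OCodeCommitRepoArn",
    "OCodePipelineArn",
)


def read_pipeline_outputs(outputs_json: str, stack_key: str) -> Dict[str, str]:
    """Pick the four pipeline outputs for one stack out of an outputs file."""
    stacks = json.loads(outputs_json)
    outputs = stacks[stack_key]
    missing = [key for key in EXPECTED_OUTPUTS if key not in outputs]
    if missing:
        raise KeyError(f"outputs missing from {stack_key}: {missing}")
    # Ignore any unrelated outputs the stack may also define.
    return {key: outputs[key] for key in EXPECTED_OUTPUTS}
```

In practice you would pass the contents of the outputs file, e.g. `read_pipeline_outputs(open("outputs.json").read(), "DataBrew-CICD")`.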

Python

You could also refer to here.

```bash
# upgrading related Python packages
$ python -m ensurepip --upgrade
$ python -m pip install --upgrade pip
$ python -m pip install --upgrade virtualenv
# initialize a CDK Python project
$ cdk init --language python
# make packages installed locally instead of globally
$ source .venv/bin/activate
$ cat <<EOL > requirements.txt
aws-cdk.core
cdk-databrew-cicd
EOL
$ python -m pip install -r requirements.txt
```

```python
from aws_cdk import core as cdk
from cdk_databrew_cicd import DataBrewCodePipeline


class PythonStack(cdk.Stack):

    def __init__(self, scope: cdk.Construct, construct_id: str, **kwargs) -> None:
        super().__init__(scope, construct_id, **kwargs)

        preproduction_account_id = "PREPRODUCTION_ACCOUNT_ID"
        production_account_id = "PRODUCTION_ACCOUNT_ID"

        databrew_pipeline = DataBrewCodePipeline(self,
            "DataBrewCicdPipeline",
            preproduction_iam_role_arn=f"arn:{cdk.Aws.PARTITION}:iam::{preproduction_account_id}:role/preproduction-Databrew-Cicd-Role",
            production_iam_role_arn=f"arn:{cdk.Aws.PARTITION}:iam::{production_account_id}:role/production-Databrew-Cicd-Role",
            # bucket_name="OPTIONAL",
            # repo_name="OPTIONAL",
            # branch_name="OPTIONAL",
            # pipeline_name="OPTIONAL"
        )

        cdk.CfnOutput(self, 'OPreproductionLambdaArn', value=databrew_pipeline.preproduction_function_arn)
        cdk.CfnOutput(self, 'OProductionLambdaArn', value=databrew_pipeline.production_function_arn)
        cdk.CfnOutput(self, 'OCodeCommitRepoArn', value=databrew_pipeline.code_commit_repo_arn)
        cdk.CfnOutput(self, 'OCodePipelineArn', value=databrew_pipeline.code_pipeline_arn)
```

```bash
$ deactivate
```

Java

You could also refer to here.

```bash
$ cdk init --language java
$ mvn package
```

```xml
<!-- ... -->
<properties>
    <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
    <cdk.version>1.107.0</cdk.version>
    <construct.version>0.1.4</construct.version>
    <junit.version>5.7.1</junit.version>
</properties>
<!-- ... -->
<dependencies>
    <!-- AWS Cloud Development Kit -->
    <dependency>
        <groupId>software.amazon.awscdk</groupId>
        <artifactId>core</artifactId>
        <version>${cdk.version}</version>
    </dependency>
    <dependency>
        <groupId>io.github.hsiehshujeng</groupId>
        <artifactId>cdk-databrew-cicd</artifactId>
        <version>${construct.version}</version>
    </dependency>
    <!-- ... -->
</dependencies>
```

```java
package com.myorg;

import software.amazon.awscdk.core.Aws;
import software.amazon.awscdk.core.CfnOutput;
import software.amazon.awscdk.core.CfnOutputProps;
import software.amazon.awscdk.core.Construct;
import software.amazon.awscdk.core.Stack;
import software.amazon.awscdk.core.StackProps;
import io.github.hsiehshujeng.cdk.databrew.cicd.DataBrewCodePipeline;
import io.github.hsiehshujeng.cdk.databrew.cicd.DataBrewCodePipelineProps;

public class JavaStack extends Stack {
    public JavaStack(final Construct scope, final String id) {
        this(scope, id, null);
    }

    public JavaStack(final Construct scope, final String id, final StackProps props) {
        super(scope, id, props);

        String preproductionAccountId = "PREPRODUCTION_ACCOUNT_ID";
        String productionAccountId = "PRODUCTION_ACCOUNT_ID";

        DataBrewCodePipeline databrewPipeline = new DataBrewCodePipeline(this, "DataBrewCicdPipeline",
                DataBrewCodePipelineProps.builder()
                        // The props expect IAM role ARNs, not bare account IDs.
                        .preproductionIamRoleArn("arn:" + Aws.PARTITION + ":iam::" + preproductionAccountId
                                + ":role/preproduction-Databrew-Cicd-Role")
                        .productionIamRoleArn("arn:" + Aws.PARTITION + ":iam::" + productionAccountId
                                + ":role/production-Databrew-Cicd-Role")
                        // .bucketName("OPTIONAL")
                        // .repoName("OPTIONAL")
                        // .branchName("OPTIONAL")
                        // .pipelineName("OPTIONAL")
                        .build());

        new CfnOutput(this, "OPreproductionLambdaArn",
                CfnOutputProps.builder()
                        .value(databrewPipeline.getPreproductionFunctionArn())
                        .build());
        new CfnOutput(this, "OProductionLambdaArn",
                CfnOutputProps.builder()
                        .value(databrewPipeline.getProductionFunctionArn())
                        .build());
        new CfnOutput(this, "OCodeCommitRepoArn",
                CfnOutputProps.builder()
                        .value(databrewPipeline.getCodeCommitRepoArn())
                        .build());
        new CfnOutput(this, "OCodePipelineArn",
                CfnOutputProps.builder()
                        .value(databrewPipeline.getCodePipelineArn())
                        .build());
    }
}
```

C#

You could also refer to here.

```bash
$ cdk init --language csharp
$ dotnet add src/Csharp package Databrew.Cicd --version 0.1.4
```

```csharp
using Amazon.CDK;
using ScottHsieh.Cdk;

namespace Csharp
{
    public class CsharpStack : Stack
    {
        internal CsharpStack(Construct scope, string id, IStackProps props = null) : base(scope, id, props)
        {
            var preproductionAccountId = "PREPRODUCTION_ACCOUNT_ID";
            var productionAccountId = "PRODUCTION_ACCOUNT_ID";

            var dataBrewPipeline = new DataBrewCodePipeline(this, "DataBrewCicdPipeline", new DataBrewCodePipelineProps
            {
                PreproductionIamRoleArn = $"arn:{Aws.PARTITION}:iam::{preproductionAccountId}:role/preproduction-Databrew-Cicd-Role",
                ProductionIamRoleArn = $"arn:{Aws.PARTITION}:iam::{productionAccountId}:role/production-Databrew-Cicd-Role",
                // BucketName = "OPTIONAL",
                // RepoName = "OPTIONAL",
                // BranchName = "OPTIONAL",
                // PipelineName = "OPTIONAL"
            });

            new CfnOutput(this, "OPreproductionLambdaArn", new CfnOutputProps
            {
                Value = dataBrewPipeline.PreproductionFunctionArn
            });
            new CfnOutput(this, "OProductionLambdaArn", new CfnOutputProps
            {
                Value = dataBrewPipeline.ProductionFunctionArn
            });
            new CfnOutput(this, "OCodeCommitRepoArn", new CfnOutputProps
            {
                Value = dataBrewPipeline.CodeCommitRepoArn
            });
            new CfnOutput(this, "OCodePipelineArn", new CfnOutputProps
            {
                Value = dataBrewPipeline.CodePipelineArn
            });
        }
    }
}
```

Steps after Stack Creation

CodeCommit

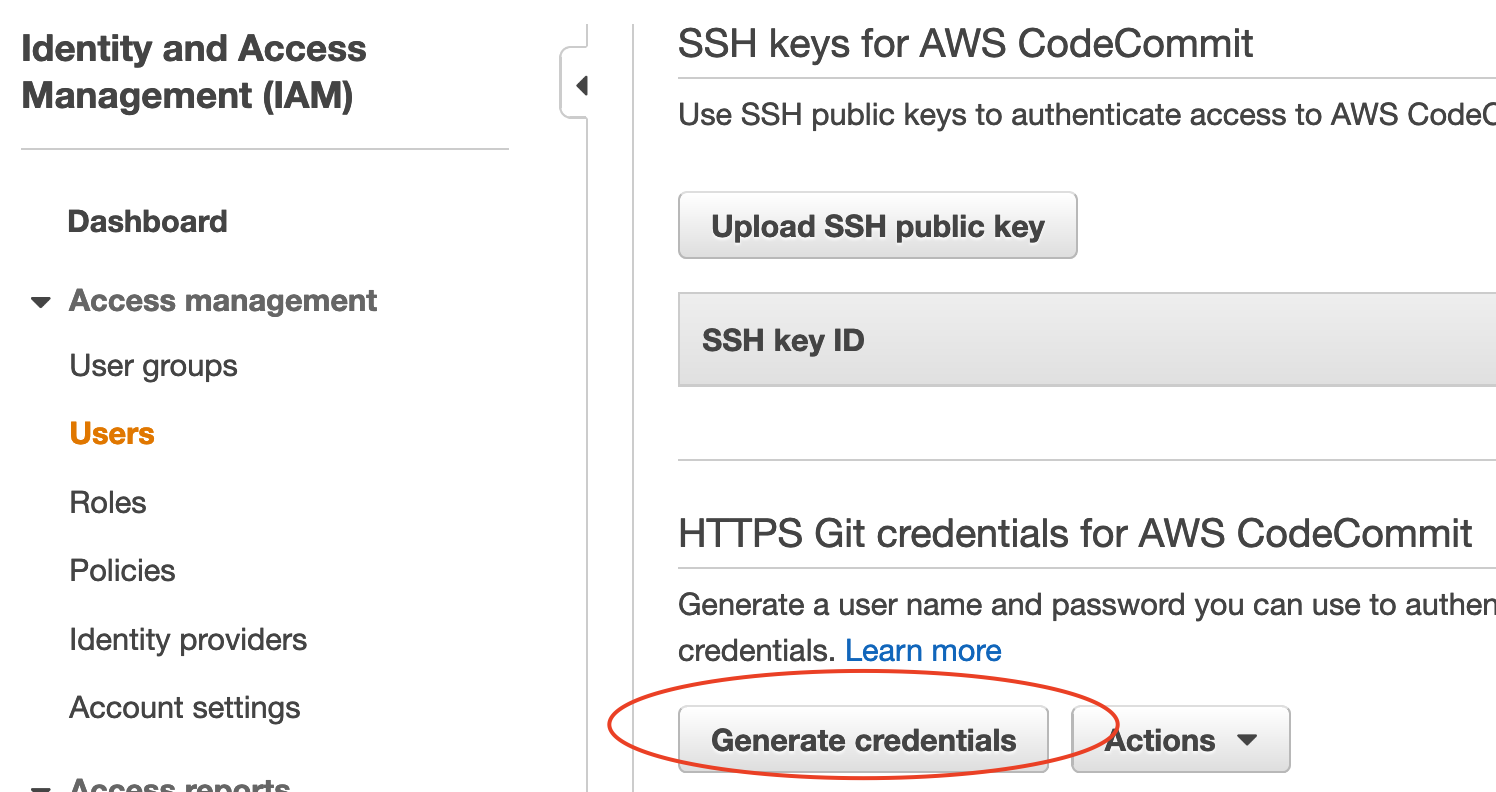

- Create HTTPS Git credentials for AWS CodeCommit with the IAM user that you're going to use.

- After the stack has been deployed via CDK, run through the steps in the README.md of the CodeCommit repository. A successful clone should look like the following (assuming you have installed git-remote-codecommit):

  ```bash
  $ git clone codecommit://scott.codecommit@DataBrew-Recipes-Repo
  Cloning into 'DataBrew-Recipes-Repo'...
  remote: Counting objects: 6, done.
  Unpacking objects: 100% (6/6), 2.03 KiB | 138.00 KiB/s, done.
  ```

- Add a DataBrew recipe to the local repository (directory) and commit the change, either directly on the main branch or by merging another branch into the main branch.

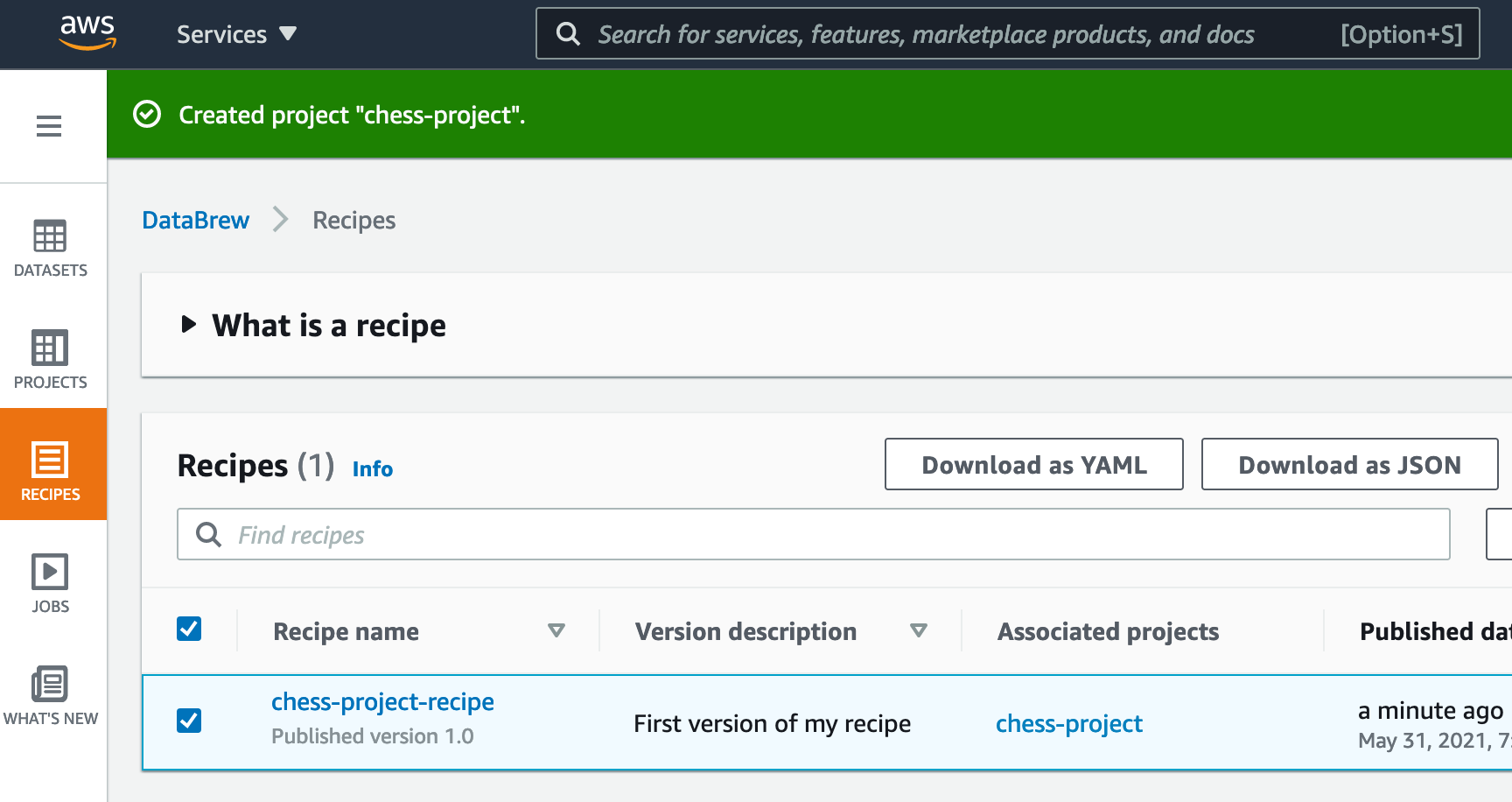
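The clone command in the second step uses the `codecommit://` URL scheme of git-remote-codecommit, which accepts an optional AWS CLI profile and an optional Region in the form `codecommit::REGION://PROFILE@REPO`. The hypothetical helper below assembles such URLs, which can be useful when scripting the clone for several profiles or Regions; the profile `scott.codecommit` is simply the one from the example output.

```python
from typing import Optional


def codecommit_clone_url(repo: str, profile: Optional[str] = None,
                         region: Optional[str] = None) -> str:
    """Build a git-remote-codecommit clone URL.

    Without a region, git-remote-codecommit uses the profile's default Region.
    """
    scheme = f"codecommit::{region}://" if region else "codecommit://"
    target = f"{profile}@{repo}" if profile else repo
    return scheme + target
```

For instance, `codecommit_clone_url("DataBrew-Recipes-Repo", "scott.codecommit")` returns `codecommit://scott.codecommit@DataBrew-Recipes-Repo`, matching the clone command above.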

Glue DataBrew

- Download a recipe as a JSON file, either one generated by following Getting started with AWS Glue DataBrew or one you made yourself.

- Move the recipe from the download directory to the local directory of the CodeCommit repository.

  ```bash
  $ mv ${DOWNLOAD_DIRECTORY}/chess-project-recipe.json ${CODECOMMIT_LOCAL_DIRECTORY}/
  ```

- Commit the change to a branch with a name of your choice.

  ```bash
  $ cd ${CODECOMMIT_LOCAL_DIRECTORY}
  $ git checkout -b add-recipe main
  $ git add .
  $ git commit -m "first recipe"
  $ git push --set-upstream origin add-recipe
  ```

- Merge the branch into the main branch. You can do this in the AWS CodeCommit web console; the process is the same as a merge on GitHub, only with a different UI.

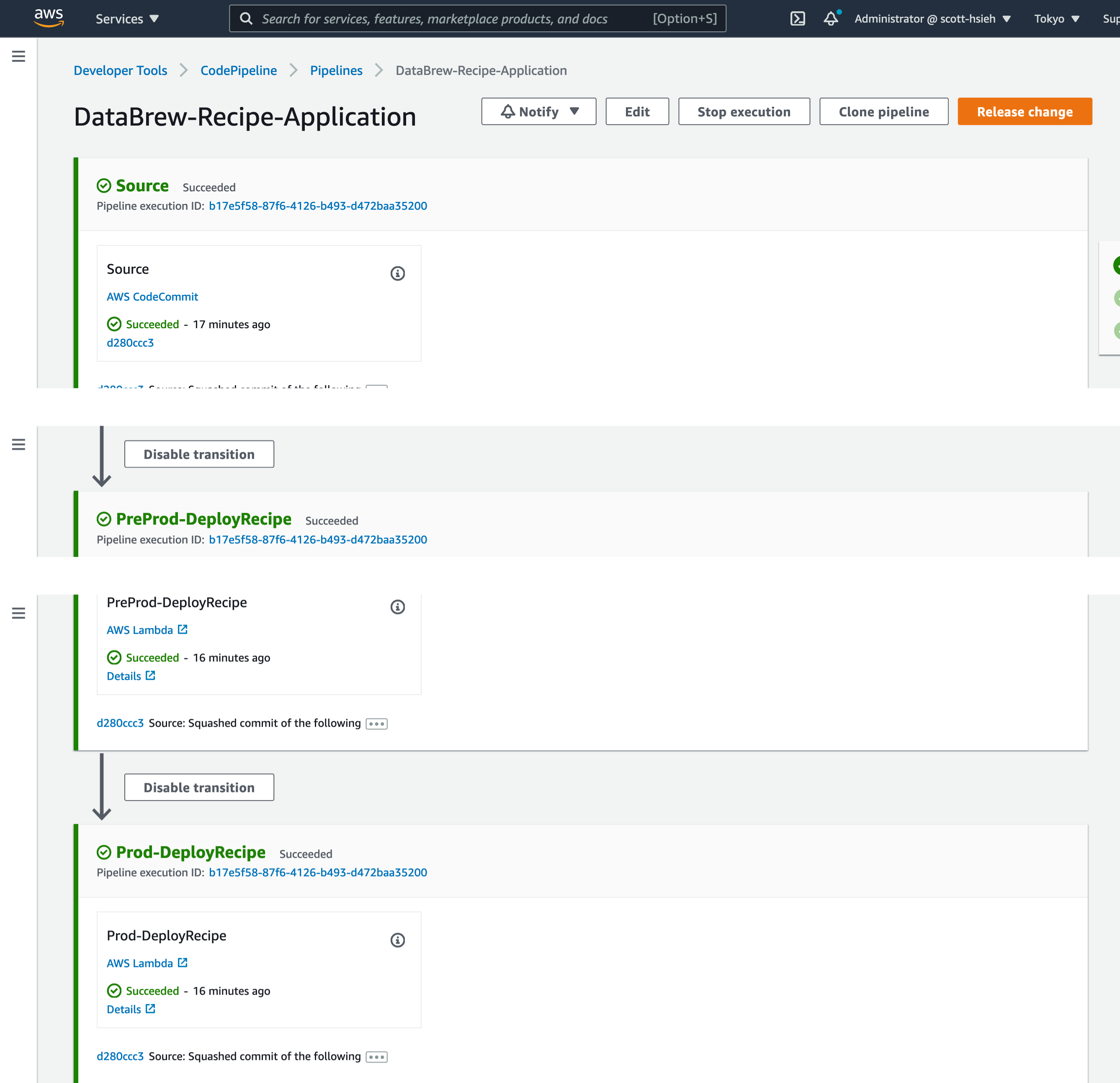
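Before committing, a quick local check can catch an accidentally copied wrong file. This sketch assumes the downloaded recipe is a JSON array of steps shaped like the `RecipeStep` type of the DataBrew `CreateRecipe` API, i.e. `{"Action": {"Operation": ..., "Parameters": {...}}}` with string-valued parameters; verify that against your own exported file. `recipe_operations` is a hypothetical helper, not part of this construct.

```python
import json
from typing import List


def recipe_operations(recipe_json: str) -> List[str]:
    """Return the operation names in a recipe file, raising ValueError if malformed.

    Invalid JSON raises json.JSONDecodeError, which is a subclass of ValueError.
    """
    steps = json.loads(recipe_json)
    if not isinstance(steps, list) or not steps:
        raise ValueError("recipe must be a non-empty JSON array of steps")
    operations = []
    for index, step in enumerate(steps):
        action = step.get("Action") if isinstance(step, dict) else None
        if not isinstance(action, dict) or not isinstance(action.get("Operation"), str):
            raise ValueError(f"step {index} has no Action.Operation")
        params = action.get("Parameters", {})
        # Assumption: Parameters is a string-to-string map, as in the API model.
        if not isinstance(params, dict) or not all(isinstance(v, str) for v in params.values()):
            raise ValueError(f"step {index} has non-string Parameters")
        operations.append(action["Operation"])
    return operations
```

A typical use is `recipe_operations(open("chess-project-recipe.json").read())` just before `git add`.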

How Successful Commits Look Like

- In the infrastructure account, the status of the CodePipeline DataBrew pipeline should be similar as the following:

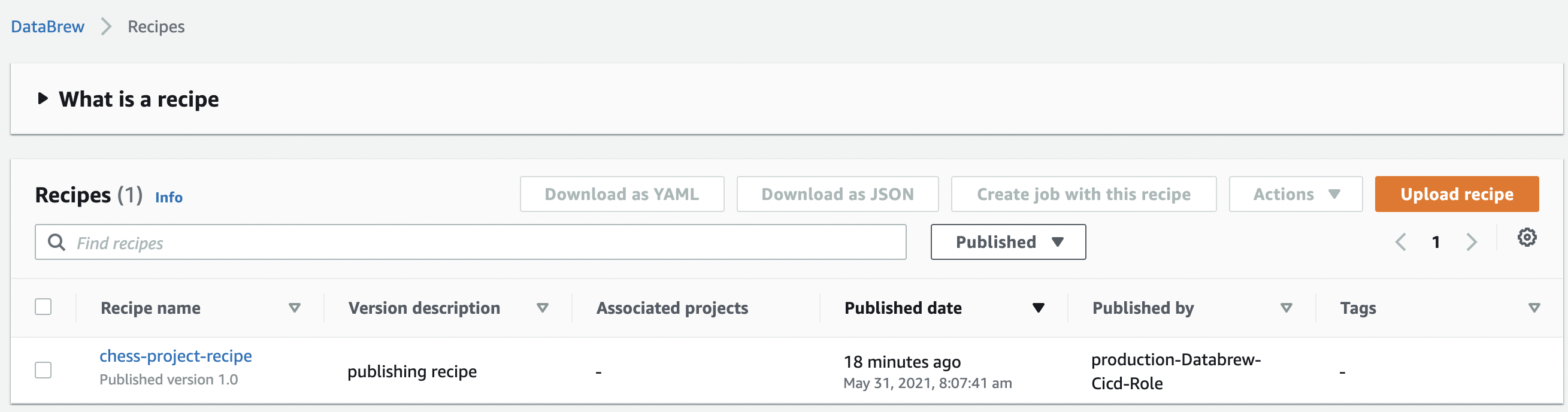

- In the pre-production account with the same region as where the CICD pipeline is deployed at the infrastructue account, you'll see this.

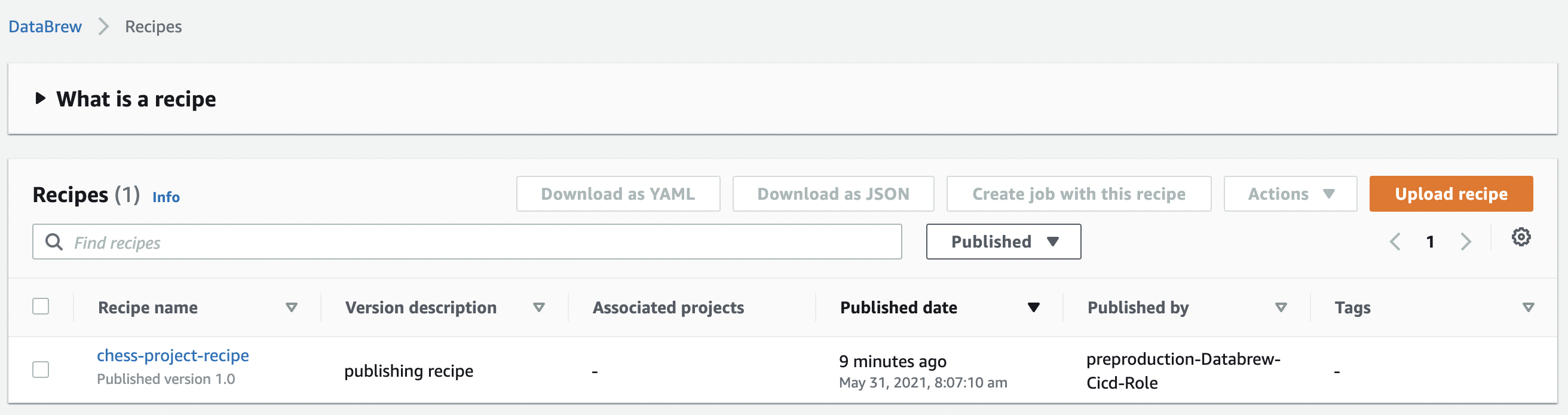

- In the production account with the same region as where the CICD pipeline is deployed at the infrastructue account, you'll see this.
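Besides the console, the pipeline status can be checked from a script. The sketch below assumes a dictionary shaped like the CodePipeline `GetPipelineState` response (for example, from boto3's `codepipeline.get_pipeline_state(name=...)`), where each entry in `stageStates` carries a `stageName` and a `latestExecution.status`. The stage names in the test data are hypothetical; the construct's actual stage names may differ.

```python
from typing import Any, Dict, List


def failed_stages(pipeline_state: Dict[str, Any]) -> List[str]:
    """Return names of stages whose latest execution did not succeed.

    Stages with no recorded execution are also reported, since they
    have not (yet) succeeded.
    """
    failed = []
    for stage in pipeline_state.get("stageStates", []):
        status = stage.get("latestExecution", {}).get("status")
        if status != "Succeeded":
            failed.append(stage["stageName"])
    return failed
```

An empty list means every stage, including both deployment stages, finished successfully on its latest run.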
