A tiny tool for generating synthetic data from the original one

[!TIP] This project complements the Differential Privacy with AI & ML project available in the repository. To fully grasp the concept, make sure to read both documentations.

About the Package

Author's Words

Welcome to the first edition of the Disguise Data Tool official documentation. I am Deniz Dahman, Ph.D., the creator of the BireyselValue algorithm and the author of this package. In the following section, you will find a brief introduction to the principal idea of the disguisedata tool, along with a reference to the academic publication on the method and its mathematical foundations. Before proceeding, I would like to inform you that I have conducted this work as an independent scientist without any funding or similar support. I am dedicated to continuing and seeking further improvements on the proposed method at all costs. If you wish to contribute in any way to this work, please find further details in the contributing section.

Contributing

If you wish to support the creator of this project, thank you for your willingness to contribute in any way possible. The options below give more information on how to get involved:

- view options to subscribe on Dahman's Phi Services Website

- subscribe to this channel Dahman's Phi Services

- you can support on patreon

If you prefer any other way of contribution, please feel free to contact me directly on contact.

Thank you

Introduction

History and Purpose of Synthetic Data

The concept of synthetic data has roots in scientific modeling and simulations, dating back to the early 20th century. For instance, audio and voice synthesis research began in the 1930s. The development of software synthesizers in the 1970s marked a significant advancement in creating synthetic data. In 1993, the idea of fully synthetic data for privacy-preserving statistical analysis was introduced to the research community. Today, synthetic data is extensively used in various fields, including healthcare, finance, and defense, to train AI models and conduct simulations. More importantly, synthetic data continues to evolve, offering innovative solutions to data scarcity, privacy, and bias challenges in the AI and machine learning landscape.

Synthetic data serves multiple purposes. It enhances AI models, safeguards sensitive information, reduces bias, and offers an alternative when real-world data is scarce or unavailable:

- Training AI Models: Synthetic data is widely used to train machine learning models, especially when real-world data is scarce or sensitive. It helps in creating diverse datasets that improve model accuracy and robustness.

- Privacy Protection: By using synthetic data, organizations can avoid privacy issues associated with real data, such as patient information in healthcare.

- Testing and Validation: Synthetic data allows for extensive testing and validation of systems without the need for real data, which might be difficult to obtain or use due to privacy concerns.

- Bias Reduction: It helps in reducing biases in datasets, ensuring that AI models are trained on balanced and representative data.

disguisedata __version__1.0

There are numerous tools available to generate synthetic data using various techniques. This is where I introduce the disguisedata tool, which disguises data on a mathematical foundation. In particular, it relies on two important indicators in the original dataset:

- The norm: Initially, it captures the general norm of the dataset, involving every entry in the set. This norm is then used to scale the dataset to a range of values. It is considered the secret key used later to convert the synthetic data into the same scale as the original.

- The Stat: The second important indicator for the disguisedata method is the statistical distribution of the original dataset, particularly the correlation and independence across all features. These values help to adjust the tweak and level of disguise of the data. Two crucial values are the mean and variance, which indicate the level of disguise.
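The role of the norm as a scaling key can be illustrated with a short NumPy sketch. This is not the package's implementation: it assumes the Frobenius norm as the "general norm", and the helper names `scale_by_norm` and `restore_scale` are hypothetical.

```python
import numpy as np

def scale_by_norm(ds):
    """Scale a dataset by its Frobenius norm (an assumed choice of norm)."""
    key = np.linalg.norm(ds)  # a single number computed from every entry
    return ds / key, key

def restore_scale(scaled, key):
    """Bring scaled (or disguised) values back to the original scale."""
    return scaled * key

rng = np.random.default_rng(0)
original = rng.uniform(1.0, 100.0, size=(5, 3))  # illustrative data only

scaled, key = scale_by_norm(original)
restored = restore_scale(scaled, key)
```

Without the key, the scaled values cannot be mapped back to the original range, which is why the text describes the norm as a secret key.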

[!IMPORTANT] This tool demonstrates the proposed method solely for educational purposes. The author provides no warranty of any kind, including the warranty of design, merchantability, and fitness for a particular purpose.

Installation

[!TIP] The simulation using disguisedata was conducted on three datasets, which are referenced in the section below.

Data Availability

- Breast Cancer Wisconsin (Diagnostic) Dataset available in [UCI Machine Learning Repository] at https://archive.ics.uci.edu/dataset/17/breast+cancer+wisconsin+diagnostic , reference (Street 1993)

- The Dry Bean Dataset available in [UCI Machine Learning Repository] at https://doi.org/10.24432/C50S4B , reference (Koklu 2020)

- TeleCommunications Dataset available in [Kaggle] at https://www.kaggle.com/datasets/navins7/telecommunications

Install disguisedata

To install the package, run:

pip install disguisedata==1.0.3

You should then be able to use the package. It might be a good idea to confirm the installation to ensure everything is set up correctly.

pip show disguisedata

The output should look like this:

Name: disguisedata

Version: 1.0.3

Summary: A tiny tool for generating synthetic data from the original one

Home-page: https://github.com/dahmansphi/disguisedata

Author: Deniz Dahman's, Ph.D.

Author-email: dahmansphi@gmail.com

[!IMPORTANT] Sometimes, the seaborn library isn't installed during setup. If that's the case, you'll need to install it manually.

Employ the disguisedata - Conditions

[!IMPORTANT] It's mandatory to provide the instance of the class with the NumPy array of the original dataset, which does not include the y feature or the target variable.
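A minimal sketch of preparing such an array, assuming a hypothetical table whose last column is the target. The values, column layout, and variable names are illustrative only.

```python
import numpy as np

# Hypothetical dataset: three feature columns followed by one target column.
table = np.array([
    [5.1, 3.5, 1.4, 0],
    [4.9, 3.0, 1.4, 0],
    [6.2, 2.9, 4.3, 1],
    [5.9, 3.0, 5.1, 1],
])

ds = table[:, :-1]  # features only: the array to hand to the tool
y = table[:, -1]    # target kept aside and never passed to the tool
```

Keeping `y` in a separate variable makes it easy to reattach the labels to the disguised features later.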

Detour in the disguisedata package - Built-in

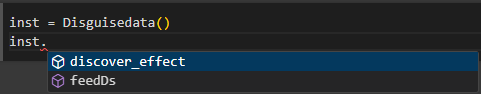

Once your installation is complete and all conditions are met, you may want to explore the built-in functions of the disguisedata package to understand each one. Essentially, you can create an instance of the Disguisedata class as follows:

from disguisedata.disguisedata import Disguisedata

inst = Disguisedata()

Now this instance offers you access to the built-in functions that you need. Here is a screenshot:

Once you have the disguisedata instance, follow these steps to obtain the new disguised data:

Control and Get the data format:

The first function to call is feedDs, using data = inst.feedDs(ds=ds). This function takes one argument, the NumPy dataset. It checks the conditions and returns a formatted, scaled dataset that is ready to be disguised.

Explore different types of Disguise:

The function explor_effect allows you to explore how the disguised data differs from the original data. It is called using inst.explor_effect(data=data, mu=0.5, div=0.9). This function takes three arguments: the first is the formatted dataset returned from the previous function, the second is the value representing the difference from the original mean, and the third is the amount of deviation. These parameters determine the result of the newly generated disguised data. For a detailed explanation of each parameter's effect and purpose, refer to the academic publication on the method. Here are some outputs from the function:

It's important to observe how the screenshot shows the location of the disguised data relative to the original dataset. The report then illustrates how the values are altered according to the parameter adjustments. Additionally, it presents the differences in the mean and standard deviation between the original and disguised data.
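A comparison of this kind can also be approximated by hand with NumPy. The sketch below is an assumption about what such a check might look like, not the output of explor_effect: `summarize_shift` is a hypothetical helper, and the "disguised" array is simulated with Gaussian noise purely for illustration.

```python
import numpy as np

def summarize_shift(original, disguised):
    """Return per-feature differences in mean and standard deviation."""
    mean_diff = disguised.mean(axis=0) - original.mean(axis=0)
    std_diff = disguised.std(axis=0) - original.std(axis=0)
    return mean_diff, std_diff

rng = np.random.default_rng(42)
original = rng.normal(loc=10.0, scale=2.0, size=(200, 4))
# Stand-in for a disguised dataset: the original plus small random noise.
disguised = original + rng.normal(loc=0.0, scale=0.1, size=original.shape)

mean_diff, std_diff = summarize_shift(original, disguised)
print("mean difference per feature:", np.round(mean_diff, 3))
print("std difference per feature: ", np.round(std_diff, 3))
```

Small differences in both rows indicate that the disguised data stays close to the original distribution; larger values indicate a stronger disguise.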

Once the tuning of the parameters and the disguised data are acceptable, it is time to execute the disguise_data function.

Generating the Disguised data

The disguise_data function generates the disguised data. It is called as Xnp = inst.disguise_data(data=data, mu=0.5, div=0.9) and takes three arguments: the first is the formatted dataset returned by feedDs, the second is the value representing the difference from the original mean, and the third is the amount of deviation. The result is shown here:

Conclusion on installation and employment of the method

It is possible to test the results returned by the proposed method. I used two predictive methods on the original and the disguised dataset to observe the effect on accuracy. The two sets of predictions gave almost identical results, which suggests that the proposed method is effective in generating realistic disguised data that maintains privacy.
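A comparison of this kind can be sketched without the package. The example below is an illustration under stated assumptions, not the experiment reported above: the data are synthetic two-class Gaussian blobs, the disguise step is simulated with small Gaussian noise, and the classifier is a simple nearest-centroid rule written in NumPy (`nearest_centroid_accuracy` is a hypothetical helper).

```python
import numpy as np

def nearest_centroid_accuracy(X_train, y_train, X_test, y_test):
    """Fit class centroids on the training split and score the test split."""
    classes = np.unique(y_train)
    centroids = np.stack([X_train[y_train == c].mean(axis=0) for c in classes])
    # Distance from every test row to every centroid: (n_test, n_classes).
    dists = np.linalg.norm(X_test[:, None, :] - centroids[None, :, :], axis=2)
    predictions = classes[dists.argmin(axis=1)]
    return float((predictions == y_test).mean())

rng = np.random.default_rng(7)
# Synthetic stand-in data: two well-separated classes with three features.
X = np.vstack([rng.normal(0.0, 1.0, (100, 3)), rng.normal(4.0, 1.0, (100, 3))])
y = np.repeat([0, 1], 100)
# Simulated disguise: the original features plus small random noise.
X_disguised = X + rng.normal(0.0, 0.2, X.shape)

# Shuffle once, then use the same split for both versions of the data.
order = rng.permutation(len(y))
train, test = order[:150], order[150:]

acc_original = nearest_centroid_accuracy(X[train], y[train], X[test], y[test])
acc_disguised = nearest_centroid_accuracy(
    X_disguised[train], y[train], X_disguised[test], y[test]
)
print(f"original: {acc_original:.3f}, disguised: {acc_disguised:.3f}")
```

With the real package, `X_disguised` would instead come from disguise_data, and the classifier would be whichever predictive method is under study.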

Reference

Please follow the publication link on the website to find the published academic paper.

Hashes for disguisedata-1.0.3-py3-none-any.whl

| Algorithm | Hash digest |
|---|---|
| SHA256 | d6cce5b9c2448c4d38ee8046559705c8086b9bac873d0768dcb45cf146249938 |
| MD5 | a796da1df1165749c6ac88aea6eb40b0 |
| BLAKE2b-256 | 11c6913435a1302284300818a7fb609e7d08a57fa86f646597a1b0a87e55a2d9 |