A new flavour of deep learning operations


https://user-images.githubusercontent.com/6318811/177030658-66f0eb5d-e136-44d8-99c9-86ae298ead5b.mp4

einops

Flexible and powerful tensor operations for readable and reliable code.

Supports numpy, pytorch, tensorflow, jax, and others.

Recent updates:

- einops 0.6 introduces packing and unpacking

- einops 0.5: einsum is now a part of einops

- Einops paper is accepted for oral presentation at ICLR 2022 (yes, it's worth reading)

- flax and oneflow backends added

- torch.jit.script is supported for pytorch layers

- powerful EinMix added to einops (see the EinMix tutorial notebook)

Tweets

In case you need convincing arguments for setting aside time to learn about einsum and einops... Tim Rocktäschel, FAIR

Writing better code with PyTorch and einops 👌 Andrej Karpathy, AI at Tesla

Slowly but surely, einops is seeping in to every nook and cranny of my code. If you find yourself shuffling around bazillion dimensional tensors, this might change your life Nasim Rahaman, MILA (Montreal)

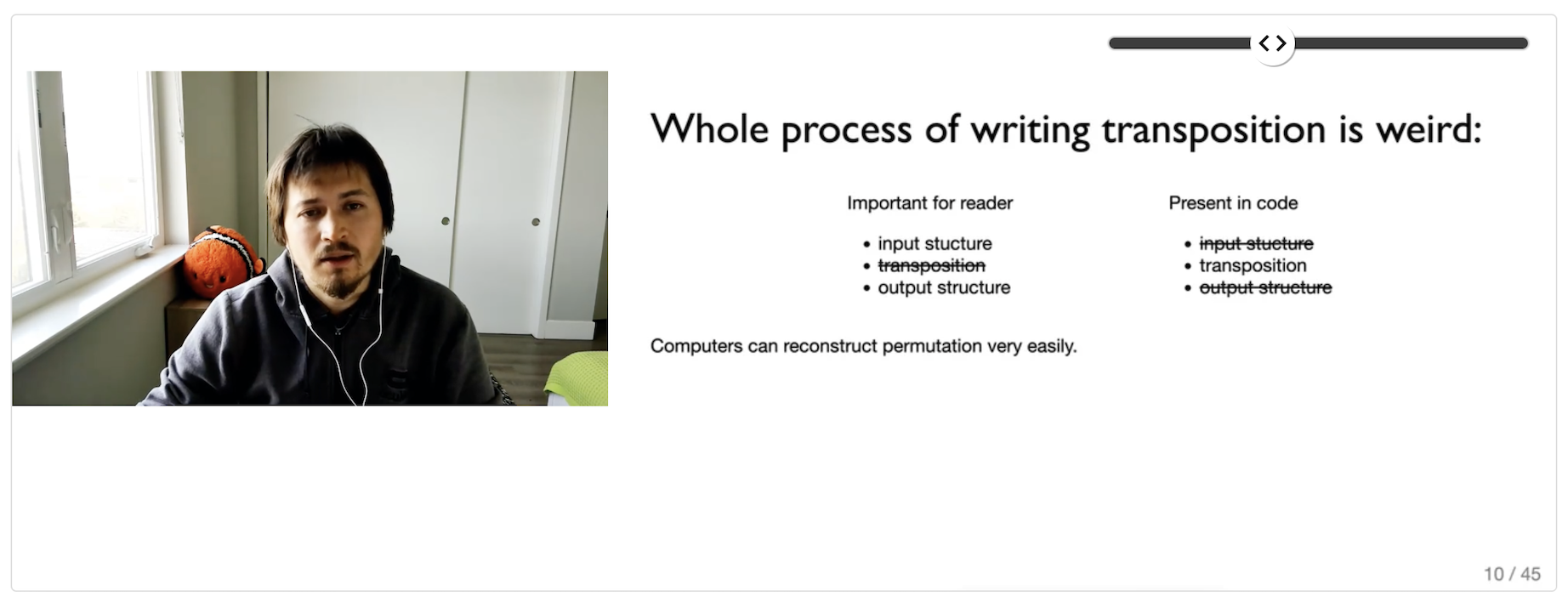

Recording of the talk at ICLR 2022

Watch a 15-minute talk focused on the main problems of standard tensor manipulation methods and how einops improves this process.

Contents

- Installation

- Documentation

- Tutorial

- API micro-reference

- Why use einops

- Supported frameworks

- Repository and discussions

Installation

Plain and simple:

pip install einops

Tutorials

Tutorials are the most convenient way to see einops in action:

- part 1: einops fundamentals

- part 2: einops for deep learning

- part 3: packing and unpacking

- part 4: improve pytorch code with einops

Kapil Sachdeva recorded a small intro to einops.

API

einops has a minimalistic yet powerful API.

Three core operations are provided (the einops tutorial shows that they cover stacking, reshape, transposition, squeeze/unsqueeze, repeat, tile, concatenate, view and numerous reductions):

from einops import rearrange, reduce, repeat

# rearrange elements according to the pattern

output_tensor = rearrange(input_tensor, 't b c -> b c t')

# combine rearrangement and reduction

output_tensor = reduce(input_tensor, 'b c (h h2) (w w2) -> b h w c', 'mean', h2=2, w2=2)

# copy along a new axis

output_tensor = repeat(input_tensor, 'h w -> h w c', c=3)

There are also two corresponding layers with the same API (einops keeps a separate version for each framework):

from einops.layers.torch import Rearrange, Reduce

from einops.layers.tensorflow import Rearrange, Reduce

from einops.layers.flax import Rearrange, Reduce

from einops.layers.gluon import Rearrange, Reduce

from einops.layers.keras import Rearrange, Reduce

from einops.layers.chainer import Rearrange, Reduce

Layers behave similarly to operations and have the same parameters (with the exception of the first argument, the tensor itself, which is passed during the call).

Example of using layers within a model:

# example given for pytorch, but code in other frameworks is almost identical

from torch.nn import Sequential, Conv2d, MaxPool2d, Linear, ReLU

from einops.layers.torch import Rearrange

model = Sequential(

...,

Conv2d(6, 16, kernel_size=5),

MaxPool2d(kernel_size=2),

# flattening without need to write forward

Rearrange('b c h w -> b (c h w)'),

Linear(16*5*5, 120),

ReLU(),

Linear(120, 10),

)

Later additions to the family are einsum, pack and unpack functions:

from einops import einsum, pack, unpack

# einsum is like ... einsum, generic and flexible dot-product

# but 1) axes can be multi-lettered 2) pattern goes last 3) works with multiple frameworks

C = einsum(A, B, 'b t1 head c, b t2 head c -> b head t1 t2')

# pack and unpack allow reversibly 'packing' multiple tensors into one.

# Packed tensors may be of different dimensionality:

packed, ps = pack([class_token_bc, image_tokens_bhwc, text_tokens_btc], 'b * c')

class_emb_bc, image_emb_bhwc, text_emb_btc = unpack(transformer(packed), ps, 'b * c')

# Pack/Unpack are more convenient than concat and split, see tutorial

Last but not least, the EinMix layer is available!

EinMix is a generic linear layer, perfect for MLP Mixers and similar architectures.

Naming

einops stands for Einstein-Inspired Notation for operations

(though "Einstein operations" is more attractive and easier to remember).

The notation was loosely inspired by Einstein summation (in particular by the numpy.einsum operation).

Why use einops notation?!

Semantic information (being verbose in expectations)

y = x.view(x.shape[0], -1)

y = rearrange(x, 'b c h w -> b (c h w)')

While these two lines do the same job in some contexts, the second one also tells the reader what the input and output look like.

In other words, einops focuses on interface: what is the input and output, not how the output is computed.

The next operation looks similar:

y = rearrange(x, 'time c h w -> time (c h w)')

but it gives the reader a hint: this is not an independent batch of images we are processing, but rather a sequence (video).

Semantic information makes the code easier to read and maintain.

Convenient checks

Reconsider the same example:

y = x.view(x.shape[0], -1) # x: (batch, 256, 19, 19)

y = rearrange(x, 'b c h w -> b (c h w)')

The second line checks that the input has four dimensions, and you can also specify particular dimension sizes. This is better than just writing comments about shapes: comments don't prevent mistakes, aren't tested, and without code review tend to become outdated.

y = x.view(x.shape[0], -1) # x: (batch, 256, 19, 19)

y = rearrange(x, 'b c h w -> b (c h w)', c=256, h=19, w=19)

Result is strictly determined

Below we have at least two ways to define the space-to-depth operation (each 2x2 spatial block is moved into the channel axis):

# space-to-depth

rearrange(x, 'b c (h h2) (w w2) -> b (c h2 w2) h w', h2=2, w2=2)

rearrange(x, 'b c (h h2) (w w2) -> b (h2 w2 c) h w', h2=2, w2=2)

There are at least four more ways to do it. Which one is used by the framework?

These details are usually ignored because in most cases the ordering makes no difference, but it can make a big difference (e.g. if the next stage uses grouped convolutions), and you would want to specify it explicitly in your code.

Uniformity

reduce(x, 'b c (x dx) -> b c x', 'max', dx=2)

reduce(x, 'b c (x dx) (y dy) -> b c x y', 'max', dx=2, dy=3)

reduce(x, 'b c (x dx) (y dy) (z dz) -> b c x y z', 'max', dx=2, dy=3, dz=4)

These examples demonstrate that there is no need for separate 1d/2d/3d pooling operations: all of them are defined in the same uniform way.

Space-to-depth and depth-to-space are defined in many frameworks, but how about width-to-height? Here you go:

rearrange(x, 'b c h (w w2) -> b c (h w2) w', w2=2)

Framework independent behavior

Even simple functions are defined differently by different frameworks

y = x.flatten() # or flatten(x)

Suppose x has shape (3, 4, 5); then y has shape ...

- numpy, cupy, chainer, pytorch: (60,)

- keras, tensorflow.layers, gluon: (3, 20)

einops works the same way in all frameworks.

Independence of framework terminology

Example: tile vs repeat causes a lot of confusion. To copy an image along its width:

np.tile(image, (1, 2)) # in numpy

image.repeat(1, 2) # pytorch's repeat ~ numpy's tile

With einops you don't need to decipher which axis was repeated:

repeat(image, 'h w -> h (tile w)', tile=2) # in numpy

repeat(image, 'h w -> h (tile w)', tile=2) # in pytorch

repeat(image, 'h w -> h (tile w)', tile=2) # in tf

repeat(image, 'h w -> h (tile w)', tile=2) # in jax

repeat(image, 'h w -> h (tile w)', tile=2) # in cupy

... (etc.)

Testimonials provide users' perspective on the same question.

Supported frameworks

Einops works with ...

- numpy

- pytorch

- tensorflow

- jax

- cupy

- chainer

- tf.keras

- oneflow (experimental)

- flax (experimental)

- paddle (experimental)

- gluon (deprecated)

Citing einops

Please use the following bibtex record

@inproceedings{rogozhnikov2022einops,
  title={Einops: Clear and Reliable Tensor Manipulations with Einstein-like Notation},
  author={Alex Rogozhnikov},
  booktitle={International Conference on Learning Representations},
  year={2022},
  url={https://openreview.net/forum?id=oapKSVM2bcj}
}

Supported python versions

einops works with python 3.7 or later.


Download files


Source Distribution

Built Distribution


File details

Details for the file einops-0.6.1.tar.gz.

File metadata

- Download URL: einops-0.6.1.tar.gz

- Upload date:

- Size: 55.8 kB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: python-httpx/0.24.0

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | f95f8d00f4ded90dbc4b19b6f98b177332614b0357dde66997f3ae5d474dc8c8 |
| MD5 | 286d29e542b1a5cdfbf5eafadcfd15fd |
| BLAKE2b-256 | ac099d5fb513bda4845044802b389e0e1ecddb47b01a7934d33d3b406adf5073 |

File details

Details for the file einops-0.6.1-py3-none-any.whl.

File metadata

- Download URL: einops-0.6.1-py3-none-any.whl

- Upload date:

- Size: 42.2 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: python-httpx/0.24.0

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 99149e46cc808956b174932fe563d920db4d6e5dadb8c6ecdaa7483b7ef7cfc3 |
| MD5 | 8b38d639deb7bec816312bd1e7db4d12 |
| BLAKE2b-256 | 6824b05452c986e8eff11f47e123a40798ae693f2fa1ed2f9546094997d2f6be |