PyTorch Extension Library of Optimized Scatter Operations


Flexi Hash Embeddings

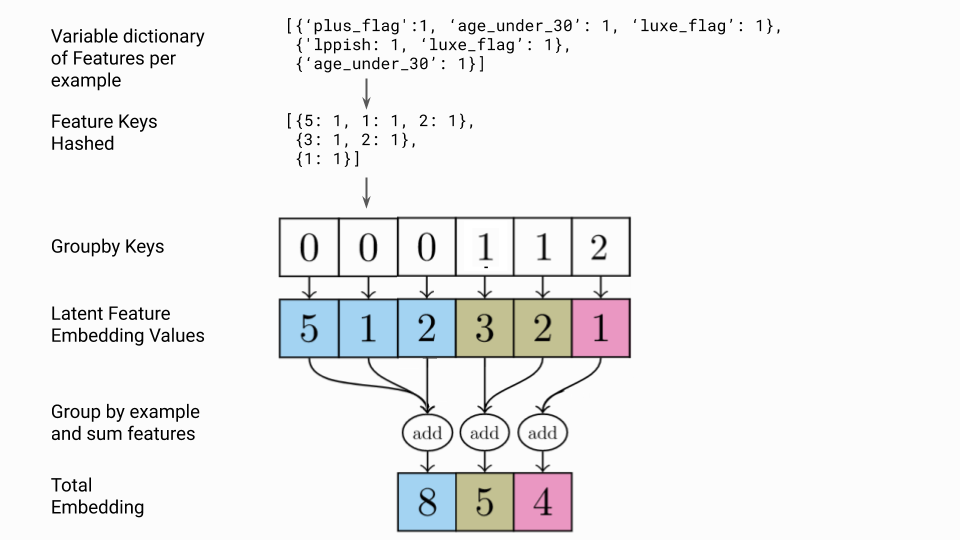

This PyTorch module hashes and sums variably-sized dictionaries of features into a single fixed-size embedding. Feature keys are hashed, which is ideal for streaming and online-learning contexts because we don't have to memorize a mapping between feature keys and indices. Multiple variable-length features are grouped by example and then summed. Feature embeddings are scaled by their values, enabling linear features rather than just one-hot features.

Uses the wonderful torch scatter library and sklearn's feature hashing under the hood.

So for example:

>>> from flexi_hash_embedding import FlexiHashEmbedding
>>> X = [{'dog': 1, 'cat': 2, 'elephant': 4},
...      {'dog': 2, 'run': 5}]
>>> embed = FlexiHashEmbedding(dim=5)
>>> embed(X)
tensor([[  1.0753,  -5.3999,   2.6890,   2.4224,  -2.8352],
        [  2.9265,   5.1440,  -4.1737, -12.3070,  -8.2725]],
       grad_fn=<ScatterAddBackward>)
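To make the hash, scale, and sum steps concrete, here is a minimal sketch in plain Python. It is not the module's implementation: the MD5-based `bucket` function, the bucket count of 1024, and the randomly initialised `table` are all assumptions made for illustration, whereas the module itself relies on sklearn's feature hashing and torch scatter.

```python
import hashlib
import random


def bucket(key, n_buckets):
    """Map a feature key to a stable bucket index (assumed hash: MD5)."""
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "little") % n_buckets


def embed_rows(rows, table):
    """Return one vector per row: the value-weighted sum of hashed feature embeddings."""
    n_buckets, dim = len(table), len(table[0])
    out = []
    for row in rows:
        vec = [0.0] * dim
        for key, value in row.items():
            emb = table[bucket(key, n_buckets)]  # no key -> index dictionary needed
            for j in range(dim):
                vec[j] += value * emb[j]  # scale the embedding by the feature value
        out.append(vec)
    return out


# Hypothetical embedding table: 1024 buckets, 5 dimensions each.
rng = random.Random(0)
table = [[rng.gauss(0.0, 1.0) for _ in range(5)] for _ in range(1024)]

X = [{'dog': 1, 'cat': 2, 'elephant': 4},
     {'dog': 2, 'run': 5}]
vectors = embed_rows(X, table)
print(len(vectors), len(vectors[0]))  # 2 5
```

Because each embedding is multiplied by its feature value, doubling a value doubles that feature's contribution to the row vector, which is what makes the features linear rather than one-hot.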

Example

Frequently, we'll have data for a single event or user in a format such as a JSON blob, where features may be missing, incomplete, or never seen before. Furthermore, the number of features may vary from one record to the next. This use case is ideal for feature hashing and for groupby summing of feature embeddings.

In this example we have a total of six features across our whole dataset, but we compute three vectors, one for every input row:

In the example above we have a total of six features, but they're spread out across three clients. The first client has three active features, the second client has two (only one of which overlaps with the first client), and the third client has a single active feature. Flexi Hash Embeddings returns three vectors, one for each client, and not six vectors, even though six features are present. The first client's vector is the sum of three feature vectors (plus_flag, age_under, luxe_flag), the second client's vector is the sum of just two feature vectors (lppish, luxe_flag), and the third client's vector is a single feature vector.
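The grouping described here can be sketched as a flatten-then-scatter-add over row indices. This is an illustration only: the feature values, the third client's feature name, the CRC32 hash, and the toy `feature_vector` helper are assumptions, not the module's internals.

```python
import random
import zlib

DIM = 4
N_BUCKETS = 64

# Hypothetical input: feature names follow the text, values are made up,
# and the third client's single feature is assumed to be plus_flag.
clients = [
    {"plus_flag": 1.0, "age_under": 1.0, "luxe_flag": 1.0},
    {"lppish": 1.0, "luxe_flag": 1.0},
    {"plus_flag": 1.0},
]


def feature_vector(key):
    """Toy stand-in for an embedding-table lookup: one fixed vector per hash bucket."""
    bucket = zlib.crc32(key.encode("utf-8")) % N_BUCKETS
    rng = random.Random(bucket)
    return [rng.gauss(0.0, 1.0) for _ in range(DIM)]


# Flatten every (client, feature) pair into one long list of six entries.
flat = [(i, key, value)
        for i, feats in enumerate(clients)
        for key, value in feats.items()]

# Scatter-add: each flat entry is added into the output row of its client.
out = [[0.0] * DIM for _ in clients]
for i, key, value in flat:
    vec = feature_vector(key)
    for j in range(DIM):
        out[i][j] += value * vec[j]

print(len(flat), len(out))  # 6 3
```

Six flat feature entries go in and three client vectors come out, because the row index decides which output vector each feature embedding is summed into.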

Speed

Online feature hashing and groupby are relatively fast. A large batch of 4096 rows with an average of 5 features per row comes to roughly 20,000 features in total, and this module will hash that many features in about 20 ms on a modern MacBook Pro.
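A small timing harness along these lines can be used to check the figure on your own machine. It is a sketch under stated assumptions: `make_batch`, `time_call`, and `hash_only` are hypothetical helpers, and `hash_only` is a stdlib stand-in that only hashes keys, so its timing says nothing about the module itself. To time the real thing, pass `FlexiHashEmbedding(dim=5)` as `fn` instead.

```python
import time
import zlib


def make_batch(n_rows=4096, feats_per_row=5):
    """Build a synthetic batch of feature dictionaries with unique keys."""
    return [{f"feature_{i}_{j}": 1.0 for j in range(feats_per_row)}
            for i in range(n_rows)]


def time_call(fn, batch, repeats=5):
    """Return the best wall-clock time in seconds over several calls of fn(batch)."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        fn(batch)
        best = min(best, time.perf_counter() - start)
    return best


def hash_only(batch, n_buckets=2 ** 20):
    """Stand-in workload (assumption): hash every key into a bucket index."""
    return [[zlib.crc32(key.encode("utf-8")) % n_buckets for key in row]
            for row in batch]


batch = make_batch()
n_features = sum(len(row) for row in batch)  # 4096 * 5 = 20480
seconds = time_call(hash_only, batch)
print(f"{n_features} features, best of 5: {seconds * 1e3:.2f} ms")
```

Taking the best of several runs reduces noise from other processes; the absolute number will vary with hardware and Python version.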

Installation

To install from PyPI, run pip install flexi-hash-embedding.

To install locally, clone the repository: git clone git@github.com:cemoody/flexi_hash_embedding.git

Testing

pip install -e .

py.test

To publish a new package version:

python3 setup.py sdist bdist_wheel

twine upload dist/*

pip install --index-url https://pypi.org/simple/ --no-deps flexi_hash_embedding


Download files


File details

Details for the file flexi_hash_embedding-0.0.3.tar.gz.

File metadata

- Download URL: flexi_hash_embedding-0.0.3.tar.gz

- Upload date:

- Size: 4.9 kB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/3.1.1 pkginfo/1.5.0.1 requests/2.22.0 setuptools/41.4.0 requests-toolbelt/0.9.1 tqdm/4.36.1 CPython/3.7.4

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | f20b2985c423c2166704443e59112fdc83c6cf2eaca3bc90ef04a0a28a0597c5 |
| MD5 | d524f43477c3ab564f61c8e2bbe39169 |
| BLAKE2b-256 | e183e792d18b9e9497764f1dea72cbdc40a95d3ed107160e449e808f936bff88 |

File details

Details for the file flexi_hash_embedding-0.0.3-py3-none-any.whl.

File metadata

- Download URL: flexi_hash_embedding-0.0.3-py3-none-any.whl

- Upload date:

- Size: 5.2 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/3.1.1 pkginfo/1.5.0.1 requests/2.22.0 setuptools/41.4.0 requests-toolbelt/0.9.1 tqdm/4.36.1 CPython/3.7.4

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | e302843475c1cb98e05f55a774d823f2abcd3a404ba88d85c8c84a3368a8f68d |
| MD5 | 3d94147358c57a1b3f66ea6290cb2c91 |
| BLAKE2b-256 | 99749b11678c188dc80ea3b94c7d692bda6e638e1ebec2c0d5365dbd0cfd8375 |