ImagenHub is a one-stop library to standardize the inference and evaluation of all conditional image generation models.


🖼️ ImagenHub

ImagenHub: Standardizing the evaluation of conditional image generation models

ICLR 2024


- We define 7 prominent tasks and curate a high-quality evaluation dataset for each task (7 datasets in total).

- We built a unified inference pipeline to ensure fair comparison. We currently support around 30 models.

- We designed two human evaluation scores, i.e. Semantic Consistency and Perceptual Quality, along with comprehensive guidelines to evaluate generated images.

- We provide code for visualization, automatic metrics, and Amazon Mechanical Turk templates.

📰 News

- 2024 Jun 10: The GenAI-Arena paper is out and featured on Hugging Face Daily Papers.

- 2024 Jun 07: ImagenHub is finally on PyPI! Check: https://pypi.org/project/imagen-hub/

- 2024 Apr 07: We released the human evaluation ratings from our latest arXiv paper.

- 2024 Feb 14: Check out ⚔️ GenAI-Arena ⚔️: Benchmarking Visual Generative Models in the Wild!

- 2024 Jan 15: Paper accepted to ICLR 2024! See you in Vienna!

- 2024 Jan 7: We updated the Human Evaluation Guideline and the ImagenMuseum Submission guideline! Researchers are now welcome to submit their methods to ImagenMuseum with minimal effort.

- 2023 Oct 23: Version 0.1.0 released! ImagenHub's documentation is now available!

- 2023 Oct 19: Code released. Docs under construction.

- 2023 Oct 13: We released Imagen Museum, a visualization page for all models in ImagenHub!

- 2023 Oct 4: Our paper is featured on Hugging Face Daily Papers!

- 2023 Oct 2: Paper available on arXiv. Code coming soon!

📄 Table of Contents

- 🛠️ Installation

- 👨🏫 Get Started

- 📘 Documentation

- 🧠 Philosophy

- 🙌 Contributing

- 🖊️ Citation

- 🤝 Acknowledgement

- 🎫 License

🛠️ Installation

Install from PyPI:

```bash
pip install imagen-hub
```

Or build from source:

```bash
git clone https://github.com/TIGER-AI-Lab/ImagenHub.git
cd ImagenHub
conda env create -f env_cfg/imagen_environment.yml
conda activate imagen
pip install -e .
```

For models such as Dall-E, DreamEdit, and BLIPDiffusion, please see Extra Setup.

Some models (Stable Diffusion, SDXL, CosXL, etc.) require you to log in through huggingface-cli:

```bash
huggingface-cli login
```

👨🏫 Get Started

Benchmarking

To reproduce the experiments reported in the paper, run the benchmarking script with the config for a task. For example, for text-guided image generation:

```bash
python3 benchmarking.py -cfg benchmark_cfg/ih_t2i.yml
```

The expected output structure is:

```
result_root_folder
└── experiment_basename_folder
    ├── input (if applicable)
    │   └── image_1.jpg ...
    ├── model1
    │   └── image_1.jpg ...
    ├── model2
    │   └── image_1.jpg ...
    ├── ...
```
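To make the layout above concrete, here is a minimal sketch that collects the generated images per model from one experiment folder. The helper name `collect_results` is hypothetical (it is not part of the `imagen_hub` API), and it assumes that the optional `input` folder holds source images rather than model outputs, so it skips that folder.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def collect_results(experiment_dir):
    """Map each model folder name to the sorted image file names it contains.

    Hypothetical helper, not part of imagen_hub. Assumes the layout
    experiment_basename_folder/<model>/<image files> and skips the optional
    `input` folder, which holds inputs rather than model outputs.
    """
    results = {}
    for model_dir in sorted(Path(experiment_dir).iterdir()):
        # Ignore stray files and the input folder; only model folders count.
        if not model_dir.is_dir() or model_dir.name == "input":
            continue
        results[model_dir.name] = sorted(
            p.name
            for p in model_dir.iterdir()
            if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
        )
    return results
```

For example, `collect_results("result_root_folder/experiment_basename_folder")` would return something like `{"model1": ["image_1.jpg", ...], "model2": [...]}`, which makes it easy to check that every model produced an output for every sample.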

Then after running the experiment, you can run

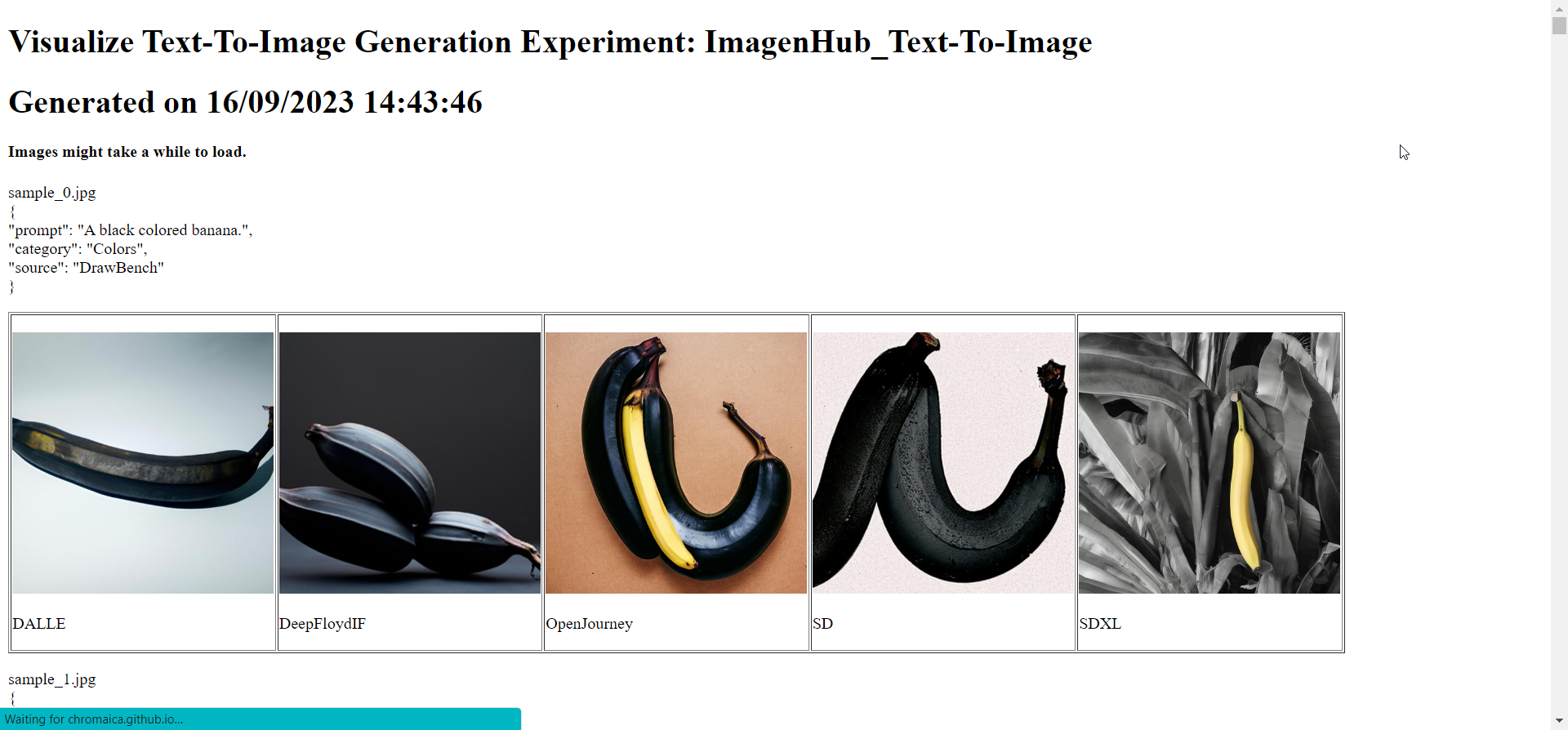

python3 visualize.py --cfg benchmark_cfg/ih_t2i.yml

to produce a index.html file for visualization.

The file would look like something like this. We hosted our experiment results on Imagen Museum.
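`visualize.py` already builds index.html for you. To illustrate what such a side-by-side comparison page boils down to, here is a minimal sketch over the folder layout of an experiment. It is not how `visualize.py` is implemented; the helper name `build_index_html`, the 256-pixel thumbnail width, and the relative image paths are all assumptions made for illustration.

```python
import html
from pathlib import Path


def build_index_html(experiment_dir, columns):
    """Return an HTML table with one row per image name and one column per folder.

    Hypothetical helper, not ImagenHub's visualize.py. `columns` lists the
    sub-folders to show side by side (e.g. ["input", "model1", "model2"]);
    image paths are written relative to `experiment_dir`.
    """
    root = Path(experiment_dir)
    # Row keys: every file name that appears in any of the requested folders.
    names = sorted({
        p.name
        for c in columns
        if (root / c).is_dir()
        for p in (root / c).iterdir()
        if p.is_file()
    })
    header = "".join(f"<th>{html.escape(c)}</th>" for c in ["image", *columns])
    rows = [f"<tr>{header}</tr>"]
    for name in names:
        cells = [f"<td>{html.escape(name)}</td>"]
        for c in columns:
            if (root / c / name).exists():
                src = html.escape(f"{c}/{name}", quote=True)
                cells.append(f'<td><img src="{src}" width="256"></td>')
            else:
                cells.append("<td>-</td>")  # this folder has no output for this image
        rows.append("<tr>" + "".join(cells) + "</tr>")
    return "<table>\n" + "\n".join(rows) + "\n</table>"
```

Writing the result to `<experiment folder>/index.html` keeps the relative `src` paths valid when the page is opened in a browser.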

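Inferring one model

```python
import imagen_hub

# Load a supported model by name (SDXL requires a Hugging Face login, see Installation)
model = imagen_hub.load("SDXL")

# Generate one image from a text prompt; a fixed seed makes the result reproducible
image = model.infer_one_image(prompt="people reading pictures in a museum, watercolor", seed=1)

image  # in a notebook, this displays the generated image
```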
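Building on the single-image call above, the sketch below runs one model over several prompts and saves the outputs with the `image_1.jpg`, `image_2.jpg`, ... naming used in the benchmark output layout. The helper `generate_to_folder` is hypothetical, and it assumes that `infer_one_image` returns a PIL image (any object with a `.save(path)` method works).

```python
from pathlib import Path


def generate_to_folder(model, prompts, out_dir, seed=1):
    """Run model.infer_one_image on each prompt and save results as image_<i>.jpg.

    Hypothetical helper: assumes infer_one_image(prompt=..., seed=...) returns
    an object with a .save(path) method, such as a PIL image.
    """
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    paths = []
    for i, prompt in enumerate(prompts, start=1):
        # Reuse the same seed for every prompt so runs are comparable.
        image = model.infer_one_image(prompt=prompt, seed=seed)
        path = out / f"image_{i}.jpg"
        image.save(path)
        paths.append(path)
    return paths
```

For example, `generate_to_folder(imagen_hub.load("SDXL"), prompts, "my_results/my_experiment/SDXL")` would fill one model folder; repeating it per model yields the same structure that the benchmarking script produces.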

Running Metrics

```python
from imagen_hub.metrics import MetricLPIPS
from imagen_hub.utils import load_image, get_concat_pil_images


def evaluate_one(model, real_image, generated_image):
    # Score the generated image against the reference image and print the result
    score = model.evaluate(real_image, generated_image)
    print("====> Score : ", score)


# An input image and the corresponding DiffEdit output hosted on Imagen Museum
image_I = load_image("https://chromaica.github.io/Museum/ImagenHub_Text-Guided_IE/input/sample_102724_1.jpg")
image_O = load_image("https://chromaica.github.io/Museum/ImagenHub_Text-Guided_IE/DiffEdit/sample_102724_1.jpg")

# Concatenate the two images into one for display
show_image = get_concat_pil_images([image_I, image_O], 'h')

model = MetricLPIPS()
evaluate_one(model, image_I, image_O)  # ====> Score : 0.11225218325853348

show_image  # in a notebook, this displays the concatenated images
```
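To score a whole set of outputs rather than a single pair, the per-pair scores can simply be averaged. The sketch below assumes only the `evaluate(real_image, generated_image)` method used above; the helper name `evaluate_pairs` is hypothetical. Keep in mind that LPIPS is a distance, so a lower score means the two images are perceptually closer.

```python
from statistics import mean


def evaluate_pairs(metric, pairs):
    """Average metric.evaluate(real, generated) over (real, generated) pairs.

    Hypothetical helper: `metric` is any object exposing the evaluate(real,
    generated) method shown above, e.g. MetricLPIPS(). Returns the mean score
    together with the individual scores.
    """
    scores = [float(metric.evaluate(real, generated)) for real, generated in pairs]
    if not scores:
        raise ValueError("need at least one image pair")
    return mean(scores), scores
```

With the example above, `evaluate_pairs(MetricLPIPS(), [(image_I, image_O)])` returns the same single score as `evaluate_one`, wrapped in a tuple.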

📘 Documentation

The tutorials and API documentation are hosted on imagenhub.readthedocs.io.

🧠 Philosophy

By streamlining research and collaboration, ImagenHub aims to help advance the field of image generation and editing.

- Purity of Evaluation: We evaluate all models with the same pipeline and datasets, so comparisons are fair and consistent and free of setup-specific bias.

- Research Roadmap: By defining tasks and curating datasets, we provide clear direction for researchers.

- Open Collaboration: Our platform encourages sharing and cooperation around related technologies, bringing together people and ideas.

Implemented Models

We include more than 30 image synthesis models. See the full lists here:

- Supported Models: https://github.com/TIGER-AI-Lab/ImagenHub/issues/1

- Supported Metrics: https://github.com/TIGER-AI-Lab/ImagenHub/issues/6

| Method | Venue | Type |
|---|---|---|
| Stable Diffusion | - | Text-to-Image Generation |
| Stable Diffusion XL | arXiv'23 | Text-to-Image Generation |
| DeepFloyd-IF | - | Text-to-Image Generation |
| OpenJourney | - | Text-to-Image Generation |
| Dall-E | - | Text-to-Image Generation |
| Kandinsky | - | Text-to-Image Generation |
| MagicBrush | arXiv'23 | Text-guided Image Editing |
| InstructPix2Pix | CVPR'23 | Text-guided Image Editing |
| DiffEdit | ICLR'23 | Text-guided Image Editing |
| Imagic | CVPR'23 | Text-guided Image Editing |
| CycleDiffusion | ICCV'23 | Text-guided Image Editing |
| SDEdit | ICLR'22 | Text-guided Image Editing |
| Prompt-to-Prompt | ICLR'23 | Text-guided Image Editing |
| Text2Live | ECCV'22 | Text-guided Image Editing |
| Pix2PixZero | SIGGRAPH'23 | Text-guided Image Editing |
| GLIDE | ICML'22 | Mask-guided Image Editing |
| Blended Diffusion | CVPR'22 | Mask-guided Image Editing |
| Stable Diffusion Inpainting | - | Mask-guided Image Editing |
| Stable Diffusion XL Inpainting | - | Mask-guided Image Editing |
| TextualInversion | ICLR'23 | Subject-driven Image Generation |
| BLIP-Diffusion | arXiv'23 | Subject-driven Image Generation |
| DreamBooth (+ LoRA) | CVPR'23 | Subject-driven Image Generation |
| Photoswap | arXiv'23 | Subject-driven Image Editing |
| DreamEdit | arXiv'23 | Subject-driven Image Editing |
| Custom Diffusion | CVPR'23 | Multi-subject-driven Generation |
| ControlNet | arXiv'23 | Control-guided Image Generation |
| UniControl | arXiv'23 | Control-guided Image Generation |

Comprehensive Functionality

- Common Metrics for GenAI

- Visualization tool

- Amazon Mechanical Turk Templates (Coming Soon)

High-quality software engineering standards:

- Documentation

- Type Hints

- Code Coverage (Coming Soon)

🙌 Contributing

For the Community

Community contributions are encouraged!

ImagenHub is still under development. More models and features are going to be added and we always welcome contributions to help make ImagenHub better. If you would like to contribute, please check out CONTRIBUTING.md.

We believe that everyone can contribute and make a difference. Whether it's writing code 💻, fixing bugs 🐛, or simply sharing feedback 💬, your contributions are definitely welcome and appreciated 🙌

And if you like the project, but just don't have time to contribute, that's fine. There are other easy ways to support the project and show your appreciation, which we would also be very happy about:

- Star the project

- Tweet about it

- Reference this project in your project's README

- Mention the project at local meetups and tell your friends/colleagues

For Researchers

- Q: How can I apply your evaluation method to my own method?

  A: Please refer to https://imagenhub.readthedocs.io/en/latest/Guidelines/humaneval.html

- Q: How can I add my method to the ImagenHub codebase?

  A: Please refer to https://imagenhub.readthedocs.io/en/latest/Guidelines/custommodel.html

- Q: I want to feature my method on ImagenMuseum!

  A: Please refer to https://imagenhub.readthedocs.io/en/latest/Guidelines/imagenmuseum.html

🖊️ Citation

Please kindly cite our paper if you use our code, data, models, or results:

```bibtex
@inproceedings{ku2024imagenhub,
  title={ImagenHub: Standardizing the evaluation of conditional image generation models},
  author={Max Ku and Tianle Li and Kai Zhang and Yujie Lu and Xingyu Fu and Wenwen Zhuang and Wenhu Chen},
  booktitle={The Twelfth International Conference on Learning Representations},
  year={2024},
  url={https://openreview.net/forum?id=OuV9ZrkQlc}
}

@article{ku2023imagenhub,
  title={ImagenHub: Standardizing the evaluation of conditional image generation models},
  author={Max Ku and Tianle Li and Kai Zhang and Yujie Lu and Xingyu Fu and Wenwen Zhuang and Wenhu Chen},
  journal={arXiv preprint arXiv:2310.01596},
  year={2023}
}
```

🤝 Acknowledgement

Please refer to ACKNOWLEDGEMENTS.md.

🎫 License

This project is released under the License.


Built Distribution

Hashes for imagen_hub-0.2.4-py3-none-any.whl

| Algorithm | Hash digest |
|---|---|
| SHA256 | 9523815ce947a1a0076cc461010db3b594b3862b0dcf21a429f5914eb7d9fa2a |
| MD5 | 3ce89eb40354f3112542ab5a48b03df6 |
| BLAKE2b-256 | 74bf548201d0ac000605944324778bda823bd774720d6e238d4585edb8040733 |
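To check a downloaded wheel against the published SHA256 digest, a minimal sketch with Python's standard `hashlib` module is shown below. The helper name `sha256_matches` is hypothetical; it reads the file in chunks so large files do not have to fit in memory. pip's hash-checking mode (`--require-hashes`, with `--hash=sha256:...` entries in a requirements file) is an alternative that performs the same check at install time.

```python
import hashlib


def sha256_matches(path, expected_hex, chunk_size=1 << 20):
    """Return True if the SHA256 digest of the file at `path` equals `expected_hex`.

    Hypothetical helper. The file is hashed in chunks of `chunk_size` bytes,
    and the comparison ignores surrounding whitespace and letter case.
    """
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # iter() with a sentinel keeps reading until f.read returns b"" (EOF).
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest() == expected_hex.strip().lower()
```

For example, `sha256_matches("imagen_hub-0.2.4-py3-none-any.whl", "9523815ce947a1a0076cc461010db3b594b3862b0dcf21a429f5914eb7d9fa2a")` should return `True` for an intact download.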