Legendre Memory Units

Legendre Memory Units: Continuous-Time Representation in Recurrent Neural Networks

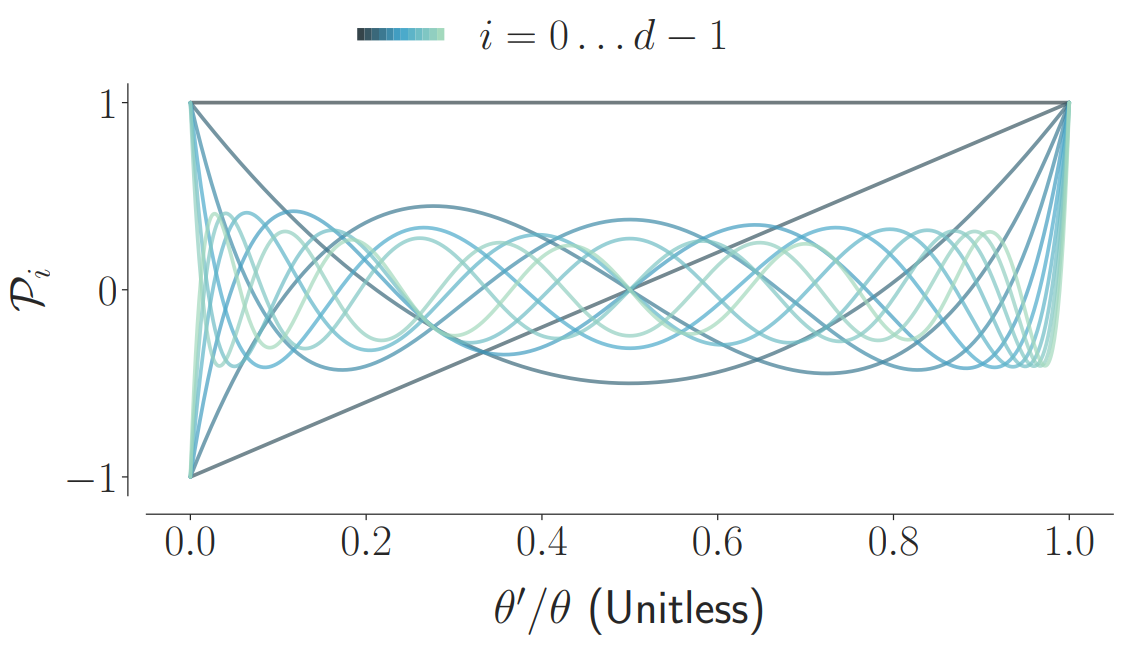

We propose a novel memory cell for recurrent neural networks that dynamically maintains information across long windows of time using relatively few resources. The Legendre Memory Unit (LMU) is mathematically derived to orthogonalize its continuous-time history – doing so by solving d coupled ordinary differential equations (ODEs), whose phase space linearly maps onto sliding windows of time via the Legendre polynomials up to degree d − 1 (example d=12, shown below).

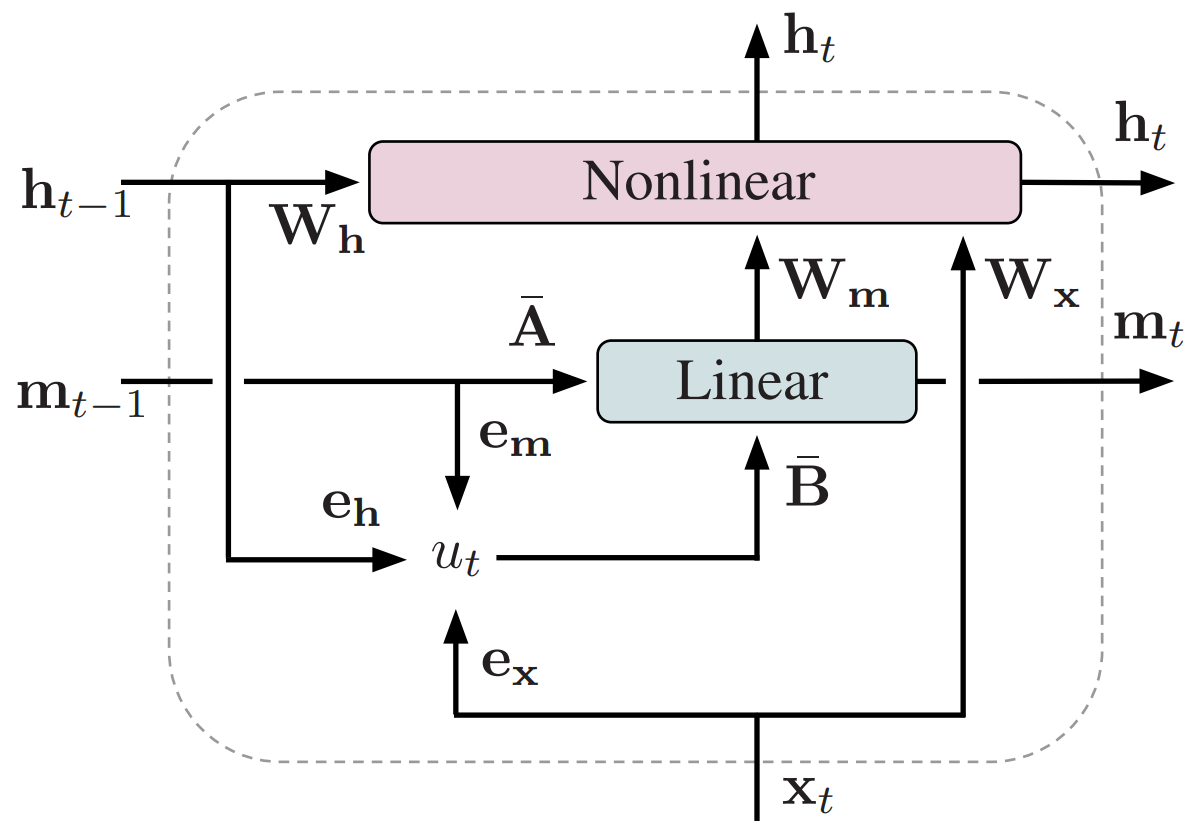
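The linear map from the memory state onto a sliding window can be sketched in a few lines of NumPy. This is a minimal illustration, assuming the decoding rule from the paper, u(t − θ′) ≈ Σ P_i(θ′/θ) m_i(t), where P_i is the i-th shifted Legendre polynomial. The helper name `window_decoder` is hypothetical and not part of the lmu package.

```python
import numpy as np
from numpy.polynomial import legendre


def window_decoder(order, r):
    """Return a matrix whose row k holds P_i(r[k]) for i = 0..order-1.

    r is the relative delay theta'/theta in [0, 1]; the shifted Legendre
    polynomial P_i(r) equals the standard Legendre polynomial at 2r - 1.
    """
    r = np.atleast_1d(np.asarray(r, dtype=float))
    return legendre.legvander(2.0 * r - 1.0, order - 1)


# Decode five points of the window from a d = 12 memory vector.
r = np.linspace(0.0, 1.0, 5)
D = window_decoder(12, r)          # shape (5, 12)

# A memory holding only the degree-0 coefficient represents a constant window.
m = np.zeros(12)
m[0] = 1.0
u_hat = D @ m                      # approximate u(t - r * theta)
```

Each row of `D` is a set of fixed weights, so reading out any point in the window is a single dot product with the memory vector.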

A single LMUCell expresses the following computational graph in Keras as an RNN layer, which couples the optimal linear memory (m) with a nonlinear hidden state (h):

The discretized (A, B) matrices are initialized according to the LMU’s mathematical derivation with respect to some chosen window length, θ. Backpropagation can be used to learn this time-scale, or to fine-tune (A, B), if necessary. By default, the coupling between the hidden state (h) and the memory vector (m) is trained via backpropagation, while the dynamics of the memory remain fixed (see paper for details).
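As a rough illustration of this initialization, the sketch below builds the continuous-time (A, B) matrices from the closed-form expressions in the paper and discretizes them with a forward-Euler step for a chosen θ. The helper names `lmu_matrices` and `discretize_euler` are hypothetical and not part of the lmu package, and the package itself may use a different discretization method (for example, zero-order hold).

```python
import numpy as np


def lmu_matrices(order):
    """Continuous-time (A, B) of the LMU, before scaling by 1/theta."""
    q = np.arange(order)
    scale = (2 * q + 1)[:, None]                    # row factor (2i + 1)
    i, j = np.meshgrid(q, q, indexing="ij")
    # a_ij = (2i + 1) * (-1 if i < j else (-1)^(i - j + 1))
    A = np.where(i < j, -1.0, (-1.0) ** (i - j + 1)) * scale
    # b_i = (2i + 1) * (-1)^i
    B = ((-1.0) ** q)[:, None] * scale
    return A, B


def discretize_euler(A, B, theta, dt=1.0):
    """Forward-Euler discretization of theta * dm/dt = A m + B u."""
    Abar = np.eye(A.shape[0]) + (dt / theta) * A
    Bbar = (dt / theta) * B
    return Abar, Bbar


# Memory of order 4 spanning a window of 1000 time-steps.
A, B = lmu_matrices(order=4)
Abar, Bbar = discretize_euler(A, B, theta=1000.0)

# Drive the memory with a constant input for five window lengths.
m = np.zeros((4, 1))
for u in np.ones(5000):
    m = Abar @ m + Bbar * u
```

For a constant input of 1, the fixed point of this update is m = (1, 0, 0, 0), because the first column of A equals −B. In other words, a constant window is represented by the degree-0 Legendre coefficient alone. Forward Euler is only stable when the step is small relative to θ, which is one reason to prefer a more accurate discretization in practice.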

The docs include an example of how to use the LMUCell.

The paper branch in the lmu GitHub repository includes a pre-trained Keras/TensorFlow model, located at models/psMNIST-standard.hdf5, which obtains the current best-known psMNIST result (using an RNN) of 97.15%. Note that the network uses fewer internal state variables and neurons than there are pixels in the input sequence. To reproduce the results from the paper, run the notebooks in the experiments directory within the paper branch.

Nengo Examples

Citation

@inproceedings{voelker2019lmu,

title={Legendre Memory Units: Continuous-Time Representation in Recurrent Neural Networks},

author={Aaron R. Voelker and Ivana Kaji\'c and Chris Eliasmith},

booktitle={Advances in Neural Information Processing Systems},

pages={15544--15553},

year={2019}

}

Release history

0.1.0 (June 22, 2020)

Initial release of LMU 0.1.0! Supports Python 3.5+.

The API is considered unstable; parts are likely to change in the future.

Thanks to all of the contributors for making this possible!
