A suite of AI libraries and tools that accelerates model serving and provides programmability all the way to the GPU kernels

Project description

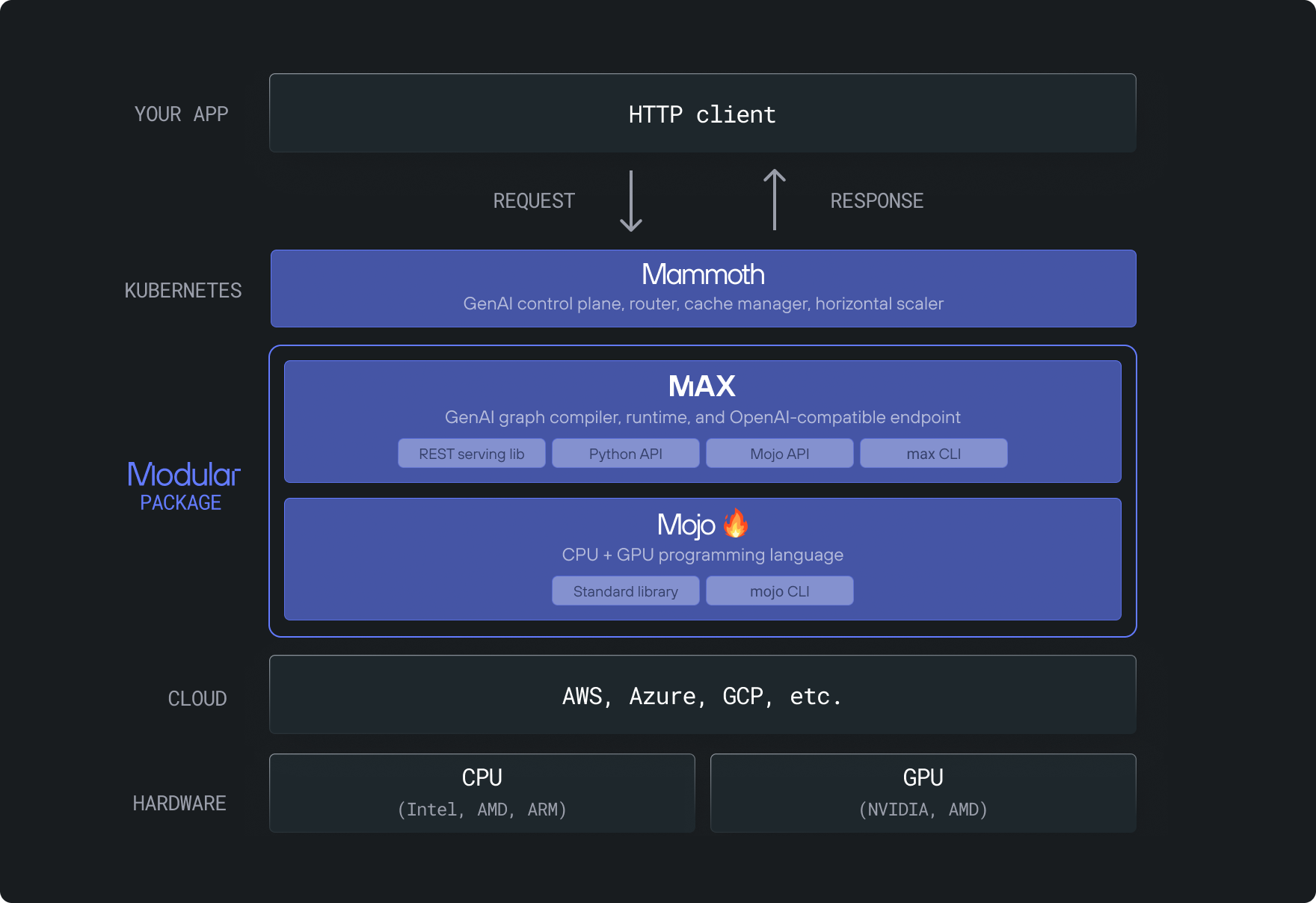

The Modular Platform is an open and fully integrated suite of AI libraries and tools that accelerates model serving and scales GenAI deployments. It abstracts away hardware complexity so you can run the most popular open AI models with industry-leading performance on GPUs and CPUs.

You can also customize everything from the serving pipeline and model architecture all the way down to the metal by writing custom ops and GPU kernels in Mojo.

NOTE: Not available for Windows.

Get started

It takes only a moment to start an OpenAI-compatible endpoint or use our Python API to run inference with a GenAI model from Hugging Face.
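The quickstart guide covers starting the server itself. For the client side, here is a minimal sketch that sends one prompt to an OpenAI-compatible endpoint using only the Python standard library. It assumes a server is already running locally on port 8000 and exposes the standard `/v1/chat/completions` route; the port, `BASE_URL`, the helper names, and the model ID are illustrative assumptions, not values taken from the Modular documentation.

```python
import json
import urllib.request

# Assumption: an OpenAI-compatible server is already listening locally on port 8000.
# Adjust BASE_URL to wherever your endpoint actually runs.
BASE_URL = "http://localhost:8000/v1"


def build_chat_request(model: str, prompt: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build a POST request in the OpenAI Chat Completions format."""
    body = {
        "model": model,  # placeholder: the Hugging Face model ID the server loaded
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(payload: dict) -> str:
    """Pull the assistant's text out of a Chat Completions response body."""
    return payload["choices"][0]["message"]["content"]


def chat(model: str, prompt: str, base_url: str = BASE_URL) -> str:
    """Send one prompt and return the reply text (requires a running server)."""
    request = build_chat_request(model, prompt, base_url)
    with urllib.request.urlopen(request) as response:
        payload = json.load(response)
    return extract_reply(payload)
```

Calling `chat("<model-id>", "Say hello in one sentence.")` with the ID of the model your server loaded should return the reply text. Because the endpoint is OpenAI-compatible, an existing OpenAI client pointed at the same base URL is an equally reasonable choice.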

Try it now with our quickstart guide.

Stay in touch

Check out our GitHub repo and join our community.

Download files

Built Distribution

File details

Details for the file modular-26.1.0-py3-none-any.whl.

File metadata

- Download URL: modular-26.1.0-py3-none-any.whl

- Upload date:

- Size: 1.7 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? Yes

- Uploaded via: twine/6.1.0 CPython/3.12.3
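The file name encodes the wheel's compatibility tags using the standard wheel naming convention, `{distribution}-{version}(-{build tag})?-{python tag}-{abi tag}-{platform tag}.whl`. Here `py3-none-any` means the Python 3 tag, no ABI requirement, and any platform, which is where the "Python 3" tag above comes from. A minimal, hypothetical parser that splits a name into those fields, assuming a well-formed name and without expanding compressed tag sets such as `py2.py3`:

```python
from typing import NamedTuple, Optional


class WheelName(NamedTuple):
    distribution: str
    version: str
    build: Optional[str]  # optional build tag; None when absent
    python_tag: str
    abi_tag: str
    platform_tag: str


def parse_wheel_filename(filename: str) -> WheelName:
    """Split a wheel file name into its naming-convention components."""
    if not filename.endswith(".whl"):
        raise ValueError(f"not a wheel file name: {filename}")
    parts = filename[: -len(".whl")].split("-")
    if len(parts) == 5:
        dist, version, py, abi, plat = parts
        build = None
    elif len(parts) == 6:
        dist, version, build, py, abi, plat = parts
    else:
        raise ValueError(f"unexpected number of components: {filename}")
    return WheelName(dist, version, build, py, abi, plat)
```

For real tooling, `packaging.utils.parse_wheel_filename` from the `packaging` library handles this more rigorously, including name normalization and tag-set expansion.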

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 05104c00803cbaaf4ebf70a3805e541d6731fa180bbda3ac3f38c1c9e3314751 |
| MD5 | a4d6025e7c8aee26d6948db53035bdb7 |
| BLAKE2b-256 | b9a7d8c067f280fe7ef341646e3c6ef7c7f6aeedcb73e228cba1acbfea2709dd |
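A downloaded wheel can be checked against these digests before installing it. Below is a minimal sketch using only the standard library's `hashlib`; the helper names are hypothetical, and BLAKE2b-256 is assumed to mean BLAKE2b with a 32-byte digest, which matches the 64-hex-character value listed.

```python
import hashlib

# SHA256 digest listed for modular-26.1.0-py3-none-any.whl.
EXPECTED_SHA256 = "05104c00803cbaaf4ebf70a3805e541d6731fa180bbda3ac3f38c1c9e3314751"


def _new_hasher(algorithm: str):
    """Map the algorithm names used in the table to hashlib objects."""
    name = algorithm.lower()
    if name == "sha256":
        return hashlib.sha256()
    if name == "md5":
        return hashlib.md5()
    if name == "blake2b-256":
        # BLAKE2b truncated to a 32-byte (256-bit) digest
        return hashlib.blake2b(digest_size=32)
    raise ValueError(f"unsupported algorithm: {algorithm}")


def file_digest(path, algorithm: str = "sha256", chunk_size: int = 1 << 16) -> str:
    """Hash a file in fixed-size chunks and return the hex digest."""
    hasher = _new_hasher(algorithm)
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            hasher.update(chunk)
    return hasher.hexdigest()


def verify(path, expected: str, algorithm: str = "sha256") -> bool:
    """Return True if the file's digest matches the expected hex string."""
    return file_digest(path, algorithm) == expected.strip().lower()
```

For an intact download, `verify("modular-26.1.0-py3-none-any.whl", EXPECTED_SHA256)` should return `True`. pip's hash-checking mode (a `--hash=sha256:<digest>` option on a requirements-file line) performs the same check at install time, though it then requires hashes for every dependency as well.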