py.test fixture for benchmarking code

A py.test fixture for benchmarking code.

Free software: BSD license

Installation

pip install pytest-benchmark

Usage

This plugin provides a benchmark fixture. This fixture is a callable object that will benchmark any function passed to it.

Example:

    import time

    def something(duration=0.000001):
        # Code to be measured
        return time.sleep(duration)

    def test_my_stuff(benchmark):
        # benchmark something
        result = benchmark(something)

        # Extra code, to verify that the run completed correctly.
        # Note: this code is not measured.
        assert result is None

You can also pass extra arguments:

    def test_my_stuff(benchmark):
        # benchmark something
        result = benchmark(something, 0.02)

If you need to do some wrapping (like special setup), you can use it as a decorator around a wrapper function:

    def test_my_stuff(benchmark):
        @benchmark
        def result():
            # Code to be measured
            return something(0.0002)

        # Extra code, to verify that the run completed correctly.
        # Note: this code is not measured.
        assert result is None

py.test command-line options:

- --benchmark-min-time=BENCHMARK_MIN_TIME

Minimum time per round. Default: 25.00us

- --benchmark-max-time=BENCHMARK_MAX_TIME

Maximum time to spend in a benchmark. Default: 1.00s

- --benchmark-min-rounds=BENCHMARK_MIN_ROUNDS

Minimum rounds, even if total time would exceed --max-time. Default: 5

- --benchmark-sort=BENCHMARK_SORT

Column to sort on. Can be one of: ‘min’, ‘max’, ‘mean’ or ‘stddev’. Default: min

- --benchmark-timer=BENCHMARK_TIMER

Timer to use when measuring time. Default: time.perf_counter

- --benchmark-warmup

Runs the benchmarks two times. Discards data from the first run.

- --benchmark-warmup-iterations=BENCHMARK_WARMUP_ITERATIONS

Max number of iterations to run in the warmup phase. Default: 100000

- --benchmark-verbose

Dump diagnostic and progress information.

- --benchmark-disable-gc

Disable GC during benchmarks.

- --benchmark-skip

Skip running any benchmarks.

- --benchmark-only

Only run benchmarks.
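To make two of these options more concrete, here is a minimal sketch of what "disable GC during benchmarks" and "timer to use" can mean in plain Python. It is not the plugin's implementation: `gc_disabled` and `timed_round` are hypothetical helper names introduced only for illustration, and the real plugin may structure its measurement loop differently.

```python
import gc
import time
from contextlib import contextmanager


@contextmanager
def gc_disabled():
    """Temporarily switch off the cyclic garbage collector (hypothetical helper)."""
    was_enabled = gc.isenabled()
    gc.disable()
    try:
        yield
    finally:
        # Restore the previous state instead of enabling unconditionally.
        if was_enabled:
            gc.enable()


def timed_round(func, iterations, timer=time.perf_counter):
    """Return the average duration of one call, measured over `iterations` calls.

    `timer` is any zero-argument callable returning seconds, which is
    the role the --benchmark-timer option describes.
    """
    with gc_disabled():
        start = timer()
        for _ in range(iterations):
            func()
        return (timer() - start) / iterations
```

Keeping the collector off during the timed loop avoids a collection pause landing inside one round and inflating its result, at the cost of measuring the code under conditions that differ slightly from normal execution.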

Setting per-test options:

    import time

    import pytest

    @pytest.mark.benchmark(
        group="group-name",
        min_time=0.1,
        max_time=0.5,
        min_rounds=5,
        timer=time.time,
        disable_gc=True,
        warmup=False
    )
    def test_my_stuff(benchmark):
        @benchmark
        def result():
            # Code to be measured
            return time.sleep(0.000001)

        # Extra code, to verify that the run
        # completed correctly.
        # Note: this code is not measured.
        assert result is None

Features

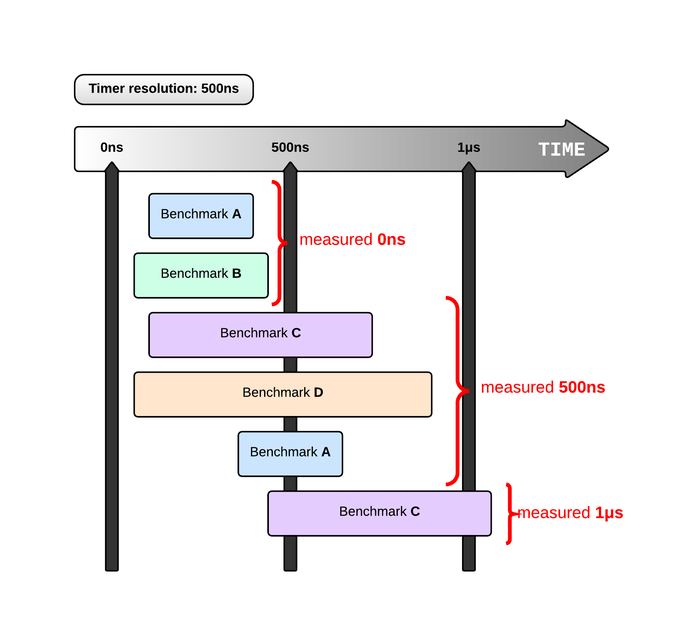

Calibration

pytest-benchmark will run your function multiple times between measurements. This is quite similar to the builtin timeit module but it’s more robust.

The problem with measuring single runs appears when you have very fast code: the timer's own overhead and resolution become comparable to the duration of the run being measured.
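The idea of running the function several times between measurements can be sketched as follows. This is an illustrative assumption about how such a calibration might work, not the plugin's actual algorithm: `measure_single` and `calibrate` are hypothetical names, and the `min_time` default simply mirrors the documented 25us default of `--benchmark-min-time`.

```python
import time


def measure_single(func, timer=time.perf_counter):
    """Time exactly one call; for very fast code this is mostly timer noise."""
    start = timer()
    func()
    return timer() - start


def calibrate(func, min_time=25e-6, timer=time.perf_counter,
              max_iterations=10 ** 7):
    """Double the calls per round until one round lasts at least `min_time`.

    Returns (iterations, duration_of_that_round). `max_iterations` is a
    safety cap so the loop always terminates.
    """
    iterations = 1
    while True:
        start = timer()
        for _ in range(iterations):
            func()
        duration = timer() - start
        if duration >= min_time or iterations >= max_iterations:
            return iterations, duration
        iterations *= 2
```

For a slow function a single call already exceeds `min_time`, so the sketch settles on one iteration per round; for a very fast function it keeps doubling until the round is long enough for the timer to measure reliably, and the per-call time is then `duration / iterations`.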

Patch utilities

Suppose you want to benchmark an internal function from a class:

    class Foo(object):
        def __init__(self, arg=0.01):
            self.arg = arg

        def run(self):
            self.internal(self.arg)

        def internal(self, duration):
            time.sleep(duration)

With the benchmark fixture this is quite hard to test if you don’t control the Foo code or it has very complicated construction.

For this there’s an experimental benchmark_weave fixture that can patch stuff using aspectlib (make sure you pip install aspectlib or pip install pytest-benchmark[aspect]):

    def test_foo(benchmark_weave):
        with benchmark_weave(Foo.internal, lazy=True):
            f = Foo()
            f.run()

Documentation

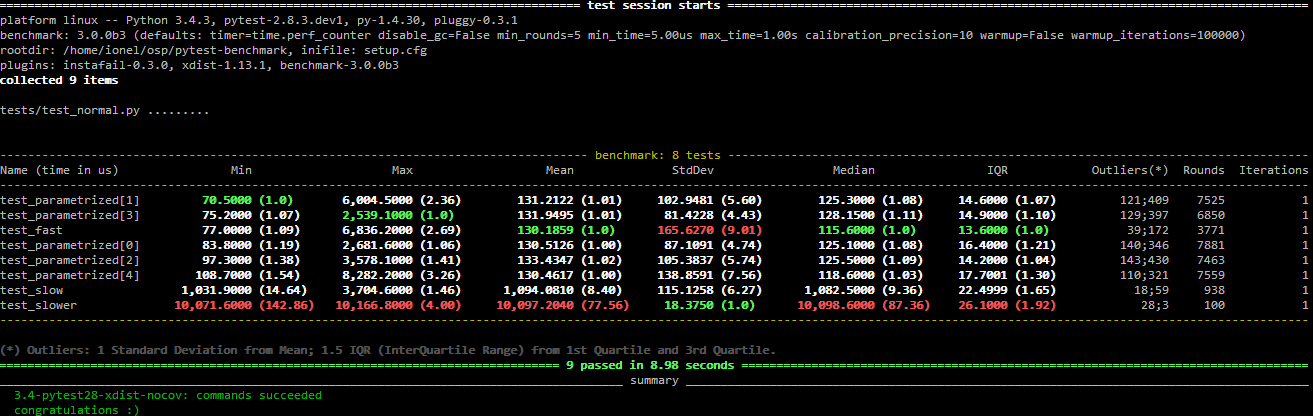

Obligatory screenshot

Development

To run all the tests, run:

tox

Credits

Timing code and ideas taken from: https://bitbucket.org/haypo/misc/src/tip/python/benchmark.py

Changelog

2.4.1 (2015-03-16)

Fix regression, plugin was raising ValueError: no option named 'dist' when xdist wasn’t installed.

2.4.0 (2015-03-12)

Add a benchmark_weave experimental fixture.

Fix internal failures when xdist plugin is active.

Automatically disable benchmarks if xdist is active.

2.3.0 (2014-12-27)

Moved the warmup in the calibration phase. Solves issues with benchmarking on PyPy.

Added a --benchmark-warmup-iterations option to fine-tune that.

2.2.0 (2014-12-26)

Make the default rounds smaller (so that variance is more accurate).

Show the defaults in the --help section.

2.1.0 (2014-12-20)

Simplify the calibration code so that the round is smaller.

Add diagnostic output for calibration code (--benchmark-verbose).

2.0.0 (2014-12-19)

Replace the context-manager based API with a simple callback interface.

Implement timer calibration for precise measurements.

1.0.0 (2014-12-15)

Use a precise default timer for PyPy.

? (?)

Readme and styling fixes (contributed by Marc Abramowitz)

Lots of wild changes.
