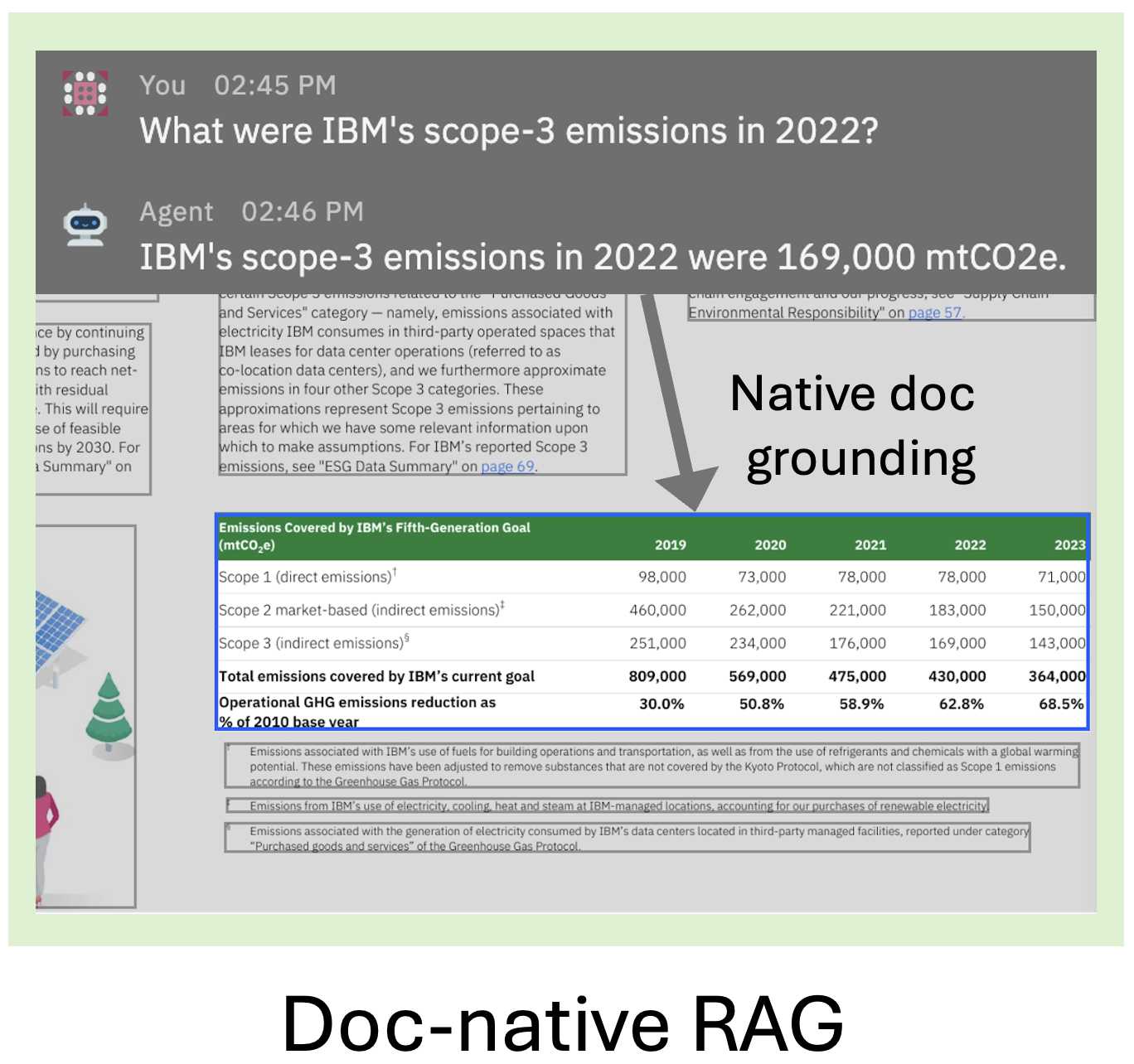

Quackling enables document-native generative AI applications

Project description

[!IMPORTANT]

👉 Now part of Docling!

Quackling

Easily build document-native generative AI applications, such as RAG, leveraging Docling's efficient PDF extraction and rich data model — while still using your favorite framework, 🦙 LlamaIndex or 🦜🔗 LangChain.

Features

- 🧠 Enables rich gen AI applications by providing capabilities at the native document level, not just plain text / Markdown!

- ⚡️ Leverages Docling's conversion quality and speed.

- ⚙️ Plug-and-play integration with LlamaIndex and LangChain for building powerful applications like RAG.

Installation

To use Quackling, simply install quackling from your package manager, e.g. pip:

pip install quackling

Usage

Quackling offers core capabilities (quackling.core), as well as framework integration components (quackling.llama_index and quackling.langchain). Examples of both follow.

Basic RAG

Here is a basic RAG pipeline using LlamaIndex:

[!NOTE] To use as is, first run `pip install llama-index-embeddings-huggingface llama-index-llms-huggingface-api` in addition to `quackling` to install the models. Otherwise, you can set `EMBED_MODEL` & `LLM` as desired, e.g. using local models.

    import os

    from llama_index.core import VectorStoreIndex
    from llama_index.embeddings.huggingface import HuggingFaceEmbedding
    from llama_index.llms.huggingface_api import HuggingFaceInferenceAPI
    from quackling.llama_index.node_parsers import HierarchicalJSONNodeParser
    from quackling.llama_index.readers import DoclingPDFReader

    DOCS = ["https://arxiv.org/pdf/2206.01062"]
    QUESTION = "How many pages were human annotated?"
    EMBED_MODEL = HuggingFaceEmbedding(model_name="BAAI/bge-small-en-v1.5")
    LLM = HuggingFaceInferenceAPI(
        token=os.getenv("HF_TOKEN"),
        model_name="mistralai/Mistral-7B-Instruct-v0.3",
    )

    index = VectorStoreIndex.from_documents(
        documents=DoclingPDFReader(parse_type=DoclingPDFReader.ParseType.JSON).load_data(DOCS),
        embed_model=EMBED_MODEL,
        transformations=[HierarchicalJSONNodeParser()],
    )
    query_engine = index.as_query_engine(llm=LLM)
    result = query_engine.query(QUESTION)
    print(result.response)
    # > 80K pages were human annotated

Chunking

You can also use Quackling standalone with any pipeline. For instance, to split a document into chunks based on its structure, with each chunk carrying a pointer to the corresponding node of the Docling document:

    from docling.document_converter import DocumentConverter
    from quackling.core.chunkers import HierarchicalChunker

    doc = DocumentConverter().convert_single("https://arxiv.org/pdf/2408.09869").output
    chunks = list(HierarchicalChunker().chunk(doc))
    # > [
    # >     ChunkWithMetadata(
    # >         path='$.main-text[4]',
    # >         text='Docling Technical Report\n[...]',
    # >         page=1,
    # >         bbox=[117.56, 439.85, 494.07, 482.42]
    # >     ),
    # >     [...]
    # > ]
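To illustrate what a pointer such as `'$.main-text[4]'` refers to, here is a minimal sketch of following that kind of path through nested dicts and lists. The `resolve_path` helper and the `toy_doc` structure are hypothetical and written for illustration only; they are not part of Quackling or Docling, and the real exported document layout may differ.

```python
import re
from typing import Any

# One path step: ".key" optionally followed by one or more "[index]" parts.
_STEP = re.compile(r"\.([^.\[\]]+)((?:\[\d+\])*)")


def resolve_path(doc: dict, path: str) -> Any:
    """Follow a simple '$.key[index]' pointer (hypothetical helper)."""
    if not path.startswith("$"):
        raise ValueError(f"unsupported path: {path!r}")
    rest = path[1:]
    node: Any = doc
    pos = 0
    for match in _STEP.finditer(rest):
        if match.start() != pos:
            raise ValueError(f"unsupported path: {path!r}")
        node = node[match.group(1)]  # descend into the named key
        for index in re.findall(r"\[(\d+)\]", match.group(2)):
            node = node[int(index)]  # descend into the list position
        pos = match.end()
    if pos != len(rest):
        raise ValueError(f"unsupported path: {path!r}")
    return node


# Toy stand-in for an exported document (assumed shape, not the real schema).
toy_doc = {"main-text": [{"text": f"item {i}"} for i in range(6)]}
resolved = resolve_path(toy_doc, "$.main-text[4]")
print(resolved["text"])
```

Keeping the path alongside the chunk text means a search hit can be traced back to the exact document node it came from, rather than only to a span of plain text.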

More examples

LlamaIndex

- Milvus basic RAG (dense embeddings)

- Milvus hybrid RAG (dense & sparse embeddings combined e.g. via RRF) & reranker model usage

- Milvus RAG also fetching native document metadata for search results

- Local node transformations (e.g. embeddings)

- ...
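For readers unfamiliar with RRF (reciprocal rank fusion), mentioned in the hybrid RAG example above, the following is a minimal, framework-independent sketch of the idea. It is not how Milvus or LlamaIndex implement it; the function name, the sample rankings, and the commonly used constant `k=60` are assumptions for illustration.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of IDs into one list, best first."""
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            # Each list contributes 1 / (k + rank) for every ID it contains.
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by descending score; break ties by ID for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))


# Hypothetical results from a dense and a sparse retriever.
dense = ["a", "b", "c"]
sparse = ["b", "d", "a"]
fused = reciprocal_rank_fusion([dense, sparse])
print(fused)
```

Because only ranks are used, the dense and sparse scores do not need to be on a comparable scale.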

LangChain

Contributing

Please read Contributing to Quackling for details.

References

If you use Quackling in your projects, please consider citing the following:

    @techreport{Docling,
      author = "Deep Search Team",
      month = 8,
      title = "Docling Technical Report",
      url = "https://arxiv.org/abs/2408.09869",
      eprint = "2408.09869",
      doi = "10.48550/arXiv.2408.09869",
      version = "1.0.0",
      year = 2024
    }

License

The Quackling codebase is under the MIT license. For individual component usage, please refer to the component licenses found in the original packages.

Project details


File details

Details for the file quackling-0.4.1.tar.gz.

File metadata

- Download URL: quackling-0.4.1.tar.gz

- Upload date:

- Size: 13.9 kB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: poetry/1.8.3 CPython/3.10.12 Linux/6.5.0-1025-azure

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 9f3be8538c89258c774b047f762d71f92f5a0a776bab7630e81a9b0eb041d4a2 |
| MD5 | 6c1c9da8e8ff9a0d08992570dea4a9d4 |
| BLAKE2b-256 | 315842f51fb771fe4d7602a31074736aa12aea560cfe548d30abd9497e2e9279 |

File details

Details for the file quackling-0.4.1-py3-none-any.whl.

File metadata

- Download URL: quackling-0.4.1-py3-none-any.whl

- Upload date:

- Size: 17.5 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: poetry/1.8.3 CPython/3.10.12 Linux/6.5.0-1025-azure

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | e1e07fcbb964e7e2a96f68bd4ce6b432a341b61f1c0ea1ca298e61e85b14e9a2 |
| MD5 | 8c2ceb51d9af0a27528d28a51078960a |
| BLAKE2b-256 | 80980f5892f4d9c9c7dc6956f4b3a5315f81ee5b1acf5b7d1fb0f99fd22b0d64 |
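The published digests above can be compared against a locally downloaded file. The following is a minimal sketch using only Python's standard `hashlib`; the helper names `file_digests` and `matches_published` are hypothetical, and the file path you pass is assumed to point at a file you have already downloaded.

```python
import hashlib


def file_digests(path, chunk_size=65536):
    """Compute SHA256, MD5 and BLAKE2b-256 hex digests of a file."""
    hashers = {
        "SHA256": hashlib.sha256(),
        "MD5": hashlib.md5(),
        # BLAKE2b-256 is BLAKE2b with a 32-byte digest.
        "BLAKE2b-256": hashlib.blake2b(digest_size=32),
    }
    with open(path, "rb") as f:
        # Read in chunks so large files are not loaded into memory at once.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            for hasher in hashers.values():
                hasher.update(chunk)
    return {name: hasher.hexdigest() for name, hasher in hashers.items()}


def matches_published(path, published):
    """Return True if every published digest matches the local file."""
    local = file_digests(path)
    return all(local[name] == digest.lower() for name, digest in published.items())
```

For example, `matches_published("quackling-0.4.1.tar.gz", {"SHA256": "9f3be853..."})` with the full digest from the table would return `True` only if the local file is byte-identical to the published one.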