
sit4onnx

Tools for simple inference testing of ONNX models using the TensorRT, CUDA, OpenVINO CPU/GPU, and CPU execution providers. Simple Inference Test for ONNX.

https://github.com/PINTO0309/simple-onnx-processing-tools

ToDo

- Add an interface to allow arbitrary test data to be specified as input parameters.
  - numpy.ndarray
  - numpy file
- Allow static fixed shapes to be specified when dimensions other than batch size are undefined.
- Return the last inference result as a list of numpy.ndarray when called from a Python script.
- Add an --output_numpy_file option to output the final inference results to a numpy file.
- Add a --non_verbose option.

1. Setup

1-1. HostPC

### option
$ echo 'export PATH="$HOME/.local/bin:$PATH"' >> ~/.bashrc \
  && source ~/.bashrc

### run
$ pip install -U onnx \
  && pip install -U sit4onnx

1-2. Docker

https://github.com/PINTO0309/simple-onnx-processing-tools#docker

2. CLI Usage

$ sit4onnx -h

usage:
  sit4onnx [-h]
  --input_onnx_file_path INPUT_ONNX_FILE_PATH
  [--batch_size BATCH_SIZE]
  [--test_loop_count TEST_LOOP_COUNT]
  [--onnx_execution_provider {tensorrt,cuda,openvino_cpu,openvino_gpu,cpu}]
  [--output_numpy_file]
  [--non_verbose]

optional arguments:
  -h, --help
    show this help message and exit.

  --input_onnx_file_path INPUT_ONNX_FILE_PATH
    Input onnx file path.

  --batch_size BATCH_SIZE
    Value to be substituted if input batch size is undefined.
    This is ignored if the input dimensions are all of static size.

  --test_loop_count TEST_LOOP_COUNT
    Number of times to run the test.
    The total execution time is divided by the number of times the test is executed,
    and the average inference time per inference is displayed.

  --onnx_execution_provider {tensorrt,cuda,openvino_cpu,openvino_gpu,cpu}
    ONNX Execution Provider.

  --output_numpy_file
    Outputs the last inference result to an .npy file.

  --non_verbose
    Do not show all information logs. Only error logs are displayed.
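The --batch_size rule can be illustrated with a minimal Python sketch. It assumes that an input shape is read as a list in which static dimensions are positive ints and undefined dimensions are symbolic names (such as "N") or None, and that the batch dimension is axis 0. resolve_input_shape is a hypothetical helper, not part of sit4onnx. Because fixing the shape of other undefined dimensions is still listed under ToDo, the sketch simply raises an error in that case.

```python
from typing import List, Sequence, Union

# A dimension is either a static size (int) or undefined (a symbolic name or None).
Dim = Union[int, str, None]


def resolve_input_shape(shape: Sequence[Dim], batch_size: int = 1) -> List[int]:
    """Hypothetical helper: substitute batch_size for an undefined batch axis.

    Fully static shapes are returned unchanged, so batch_size is ignored for them.
    """
    resolved: List[int] = []
    for axis, dim in enumerate(shape):
        if isinstance(dim, int) and dim > 0:
            resolved.append(dim)  # static dimension: keep as-is
        elif axis == 0:
            resolved.append(batch_size)  # undefined batch dimension: substitute
        else:
            # Assumption: undefined non-batch dimensions are not handled here.
            raise ValueError(f"axis {axis} is undefined and is not the batch axis")
    return resolved


if __name__ == "__main__":
    # An Nx3x256x128 input with --batch_size 10.
    print(resolve_input_shape(["N", 3, 256, 128], batch_size=10))
    # A fully static input: batch_size has no effect.
    print(resolve_input_shape([1, 3, 256, 128], batch_size=10))
```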

3. In-script Usage

>>> from sit4onnx import inference
>>> help(inference)
Help on function inference in module sit4onnx.onnx_inference_test:

inference(
    input_onnx_file_path: str,
    batch_size: Union[int, NoneType] = 1,
    test_loop_count: Union[int, NoneType] = 10,
    onnx_execution_provider: Union[str, NoneType] = 'tensorrt',
    output_numpy_file: Union[bool, NoneType] = False,
    non_verbose: Union[bool, NoneType] = False
) -> List[numpy.ndarray]

    Parameters
    ----------
    input_onnx_file_path: str
        Input onnx file path.

    batch_size: Optional[int]
        Value to be substituted if input batch size is undefined.
        This is ignored if the input dimensions are all of static size.
        Default: 1

    test_loop_count: Optional[int]
        Number of times to run the test.
        The total execution time is divided by the number of times the test is executed,
        and the average inference time per inference is displayed.
        Default: 10

    onnx_execution_provider: Optional[str]
        ONNX Execution Provider.
        "tensorrt" or "cuda" or "openvino_cpu" or "openvino_gpu" or "cpu"
        Default: "tensorrt"

    output_numpy_file: Optional[bool]
        Outputs the last inference result to an .npy file.
        Default: False

    non_verbose: Optional[bool]
        Do not show all information logs. Only error logs are displayed.
        Default: False

    Returns
    -------
    final_results: List[np.ndarray]
        Last inference results.

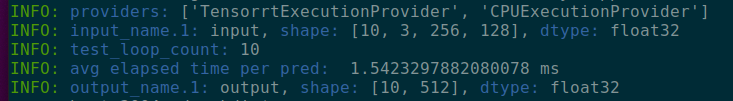

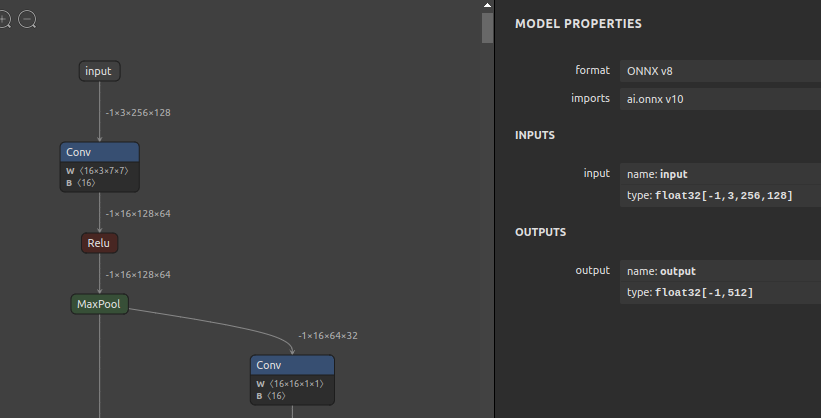
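The averaging described for test_loop_count amounts to dividing the total elapsed time by the number of runs. The sketch below assumes run_once stands in for a single inference call (for example an ONNX Runtime InferenceSession.run call). average_inference_time is a hypothetical helper, and whether sit4onnx adds warm-up runs or uses this particular clock is not stated here.

```python
import time
from typing import Callable


def average_inference_time(run_once: Callable[[], object], test_loop_count: int = 10) -> float:
    """Hypothetical helper: mean wall-clock seconds per call of run_once."""
    if test_loop_count < 1:
        raise ValueError("test_loop_count must be at least 1")
    start = time.perf_counter()
    for _ in range(test_loop_count):
        run_once()
    total = time.perf_counter() - start
    # Total execution time divided by the number of runs.
    return total / test_loop_count


if __name__ == "__main__":
    # A stand-in workload instead of a real model.
    avg = average_inference_time(lambda: sum(range(100_000)), test_loop_count=10)
    print(f"avg elapsed time per inference: {avg * 1000:.3f} ms")
```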

4. CLI Execution

$ sit4onnx \
  --input_onnx_file_path osnet_x0_25_msmt17_Nx3x256x128.onnx \
  --batch_size 10 \
  --test_loop_count 10 \
  --onnx_execution_provider tensorrt
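The CLI flags mirror the keyword arguments of inference(). A small hypothetical helper, build_sit4onnx_command, can assemble the same command as an argument list, for example to drive sit4onnx from a script. It uses only the flags listed in the help output; the default values are assumed to match the in-script defaults (batch_size=1, test_loop_count=10, onnx_execution_provider="tensorrt"), which the CLI help does not state.

```python
import shlex
from typing import List

# Provider names accepted by --onnx_execution_provider, per the help output.
PROVIDERS = ("tensorrt", "cuda", "openvino_cpu", "openvino_gpu", "cpu")


def build_sit4onnx_command(
    input_onnx_file_path: str,
    batch_size: int = 1,
    test_loop_count: int = 10,
    onnx_execution_provider: str = "tensorrt",
    output_numpy_file: bool = False,
    non_verbose: bool = False,
) -> List[str]:
    """Hypothetical helper: build an argv list for the sit4onnx CLI."""
    if onnx_execution_provider not in PROVIDERS:
        raise ValueError(f"unknown provider: {onnx_execution_provider!r}")
    cmd = [
        "sit4onnx",
        "--input_onnx_file_path", input_onnx_file_path,
        "--batch_size", str(batch_size),
        "--test_loop_count", str(test_loop_count),
        "--onnx_execution_provider", onnx_execution_provider,
    ]
    # Boolean options are plain flags: pass them only when enabled.
    if output_numpy_file:
        cmd.append("--output_numpy_file")
    if non_verbose:
        cmd.append("--non_verbose")
    return cmd


if __name__ == "__main__":
    cmd = build_sit4onnx_command(
        "osnet_x0_25_msmt17_Nx3x256x128.onnx", batch_size=10, test_loop_count=10
    )
    print(shlex.join(cmd))
```

Passing the list to subprocess.run(cmd, check=True) runs the same command as the shell example above without any shell quoting concerns.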

5. In-script Execution

from sit4onnx import inference

# Returns the outputs of the last inference run as a list of numpy.ndarray.
final_results = inference(
    input_onnx_file_path="osnet_x0_25_msmt17_Nx3x256x128.onnx",
    batch_size=10,
    test_loop_count=10,
    onnx_execution_provider="tensorrt",
)
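The onnx_execution_provider string selects an ONNX Runtime execution provider. How sit4onnx translates it internally is not documented here, so the sketch below is an assumption: it maps each accepted name to a standard ONNX Runtime provider name, passes a device_type option to the OpenVINO provider (valid values differ between ONNX Runtime versions; CPU_FP32 and GPU_FP32 are used only as examples), and appends CPUExecutionProvider as a fallback. to_ort_providers is a hypothetical helper.

```python
from typing import Any, Dict, List, Tuple, Union

# Each entry is a provider name or a (name, provider_options) tuple.
ProviderSpec = Union[str, Tuple[str, Dict[str, Any]]]

# Assumed mapping from sit4onnx provider names to ONNX Runtime execution providers.
_PROVIDER_MAP: Dict[str, ProviderSpec] = {
    "tensorrt": "TensorrtExecutionProvider",
    "cuda": "CUDAExecutionProvider",
    # The OpenVINO EP selects its target device via the device_type option;
    # accepted values vary across ONNX Runtime versions.
    "openvino_cpu": ("OpenVINOExecutionProvider", {"device_type": "CPU_FP32"}),
    "openvino_gpu": ("OpenVINOExecutionProvider", {"device_type": "GPU_FP32"}),
    "cpu": "CPUExecutionProvider",
}


def to_ort_providers(onnx_execution_provider: str) -> List[ProviderSpec]:
    """Hypothetical helper: build a providers list with a CPU fallback."""
    try:
        primary = _PROVIDER_MAP[onnx_execution_provider]
    except KeyError:
        raise ValueError(f"unknown provider: {onnx_execution_provider!r}") from None
    providers: List[ProviderSpec] = [primary]
    if primary != "CPUExecutionProvider":
        providers.append("CPUExecutionProvider")  # fallback for unsupported nodes
    return providers


if __name__ == "__main__":
    print(to_ort_providers("cuda"))
```

The returned list can be passed as the providers argument of onnxruntime.InferenceSession, which accepts both plain provider names and (name, options) tuples.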

6. Sample

$ docker run --gpus all -it --rm \
  -v `pwd`:/home/user/workdir \
  ghcr.io/pinto0309/openvino2tensorflow:latest

$ sit4onnx \
  --input_onnx_file_path osnet_x0_25_msmt17_Nx3x256x128.onnx \
  --batch_size 10 \
  --test_loop_count 10 \
  --onnx_execution_provider tensorrt

7. Reference

- https://github.com/onnx/onnx/blob/main/docs/Operators.md

- https://docs.nvidia.com/deeplearning/tensorrt/onnx-graphsurgeon/docs/index.html

- https://github.com/NVIDIA/TensorRT/tree/main/tools/onnx-graphsurgeon

- https://github.com/PINTO0309/simple-onnx-processing-tools

- https://github.com/PINTO0309/PINTO_model_zoo

8. Issues

https://github.com/PINTO0309/simple-onnx-processing-tools/issues


Source distribution: sit4onnx-1.0.1.tar.gz (7.8 kB)