Neural network visualization toolkit for tf.keras


tf-keras-vis

Web documents

https://keisen.github.io/tf-keras-vis-docs/

Overview

tf-keras-vis is a visualization toolkit for debugging tf.keras.Model in TensorFlow 2.0+.

Currently supported methods for visualization include:

- Feature Visualization

- Class Activation Maps

- Saliency Maps

tf-keras-vis is designed to be lightweight, flexible, and easy to use. All visualizations have the following features:

- Support N-dimensional image inputs, i.e., not only ordinary pictures but also, for example, 3D images.

- Support batch-wise processing, so multiple input images can be processed efficiently.

- Support models that have multiple inputs, multiple outputs, or both.

- Support mixed-precision models.

ActivationMaximization additionally has the following feature:

- Support the optimizers built into tf.keras.
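Batch-wise processing means that a score function turns a batch of model outputs into one scalar per sample. The NumPy sketch below is a rough, hypothetical illustration of what a categorical score computes (pick the target unit for each sample, then average over any spatial axes, as for a convolutional filter); it is not the tf-keras-vis implementation, and `categorical_score` is a name made up for this example.

```python
import numpy as np


def categorical_score(outputs, indices):
    """Hypothetical helper: one score per sample, outputs[i, ..., indices[i]].

    outputs: array of shape (batch, ..., num_units).
    indices: one target unit index per sample, or a single index that is
             applied to every sample in the batch.
    """
    outputs = np.asarray(outputs)
    indices = list(indices) if isinstance(indices, (list, tuple)) else [indices]
    if len(indices) == 1:
        indices = indices * outputs.shape[0]
    if len(indices) != outputs.shape[0]:
        raise ValueError("need one index per sample or a single index")
    # Select the target unit for each sample, then average the remaining
    # (e.g. spatial) axes so every sample yields a single scalar.
    return np.array([np.mean(outputs[i, ..., idx]) for i, idx in enumerate(indices)])


# Batch of 2 samples from a 3-unit dense layer, one target per sample.
dense_out = np.array([[0.1, 2.0, -1.0],
                      [3.0, 0.5, 0.2]])
print(categorical_score(dense_out, [1, 0]))  # → [2. 3.]

# Batch of 2 conv feature maps (2x2 spatial, 4 filters); score filter 3.
conv_out = np.arange(2 * 2 * 2 * 4, dtype=float).reshape(2, 2, 2, 4)
filter_scores = categorical_score(conv_out, 3)
```

Averaging over the spatial axes is one common choice for filter scores; it keeps the score scalar per sample so that gradients can be taken for the whole batch at once.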

Visualizations

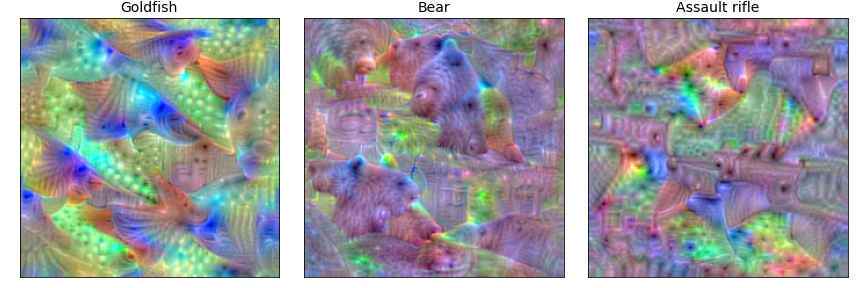

Dense Unit

Convolutional Filter

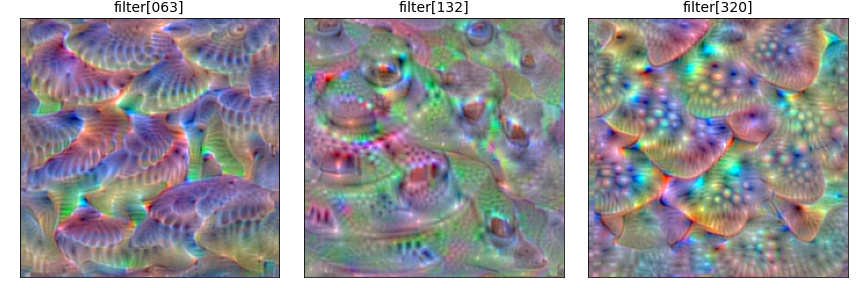

Class Activation Map

The images above are generated by GradCAM++.
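For readers curious about what GradCAM++ computes, here is a framework-free NumPy sketch of the commonly used closed-form Grad-CAM++ weighting. It assumes you already have one sample's feature maps from a convolutional layer and the gradients of the class score with respect to them (obtaining those from a real model, and upsampling the result to the input size, are outside this sketch). The function `gradcam_plus_plus` is hypothetical and is not the tf-keras-vis API.

```python
import numpy as np


def gradcam_plus_plus(activations, grads):
    """Grad-CAM++ heatmap for one sample (hypothetical helper).

    activations: (H, W, K) feature maps A^k of the chosen conv layer.
    grads:       (H, W, K) gradients of the class score w.r.t. those maps.
    Uses the common closed form that assumes an exponential of the class
    score, under which 2nd/3rd-order derivatives become powers of grads.
    """
    g2 = grads ** 2
    g3 = grads ** 3
    # Spatial sum of each feature map, shape (1, 1, K) for broadcasting.
    sum_a = activations.sum(axis=(0, 1), keepdims=True)
    denom = 2.0 * g2 + sum_a * g3
    # Guard against division by zero where the gradient vanishes.
    denom = np.where(denom != 0.0, denom, 1.0)
    alphas = g2 / denom
    # Channel weights: alpha-weighted sum of the positive gradients.
    weights = (alphas * np.maximum(grads, 0.0)).sum(axis=(0, 1))
    # Weighted sum of feature maps followed by ReLU gives the (H, W) map.
    return np.maximum((activations * weights).sum(axis=-1), 0.0)


# Two 1x1 feature maps: the first has a positive gradient, the second a
# negative one, so only the first contributes to the heatmap.
acts = np.array([[[1.0, 1.0]]])
grads = np.array([[[1.0, -1.0]]])
print(gradcam_plus_plus(acts, grads))  # → [[0.33333333]]
```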

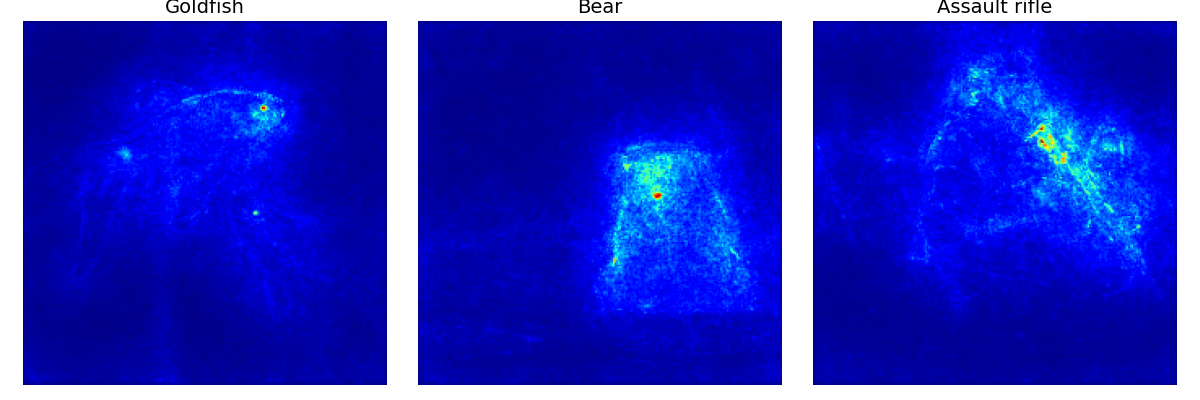

Saliency Map

The images above are generated by SmoothGrad.
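SmoothGrad reduces the speckle of a plain gradient saliency map by averaging gradients over several noisy copies of the input. The NumPy sketch below shows the idea without a framework: `grad_fn` stands in for the model gradient that tf-keras-vis obtains from TensorFlow, and the function names, the toy score, and the default values for `n_samples` and `noise_level` are assumptions chosen for illustration.

```python
import numpy as np


def smoothgrad(grad_fn, x, n_samples=50, noise_level=0.2, seed=0):
    """SmoothGrad: average grad_fn over noisy copies of the input x.

    grad_fn:     callable mapping an input array to d(score)/d(input).
    noise_level: noise standard deviation as a fraction of x's value range.
    Returns the magnitude of the averaged gradient as the saliency map.
    """
    rng = np.random.default_rng(seed)
    sigma = noise_level * (x.max() - x.min())
    total = np.zeros(x.shape, dtype=float)
    for _ in range(n_samples):
        noisy = x + rng.normal(0.0, sigma, size=x.shape)
        total += grad_fn(noisy)
    return np.abs(total / n_samples)


# Hypothetical toy score s(x) = sum(x + 0.1 * sin(50 * x)), whose gradient
# ds/dx = 1 + 5 * cos(50 * x) oscillates strongly from pixel to pixel.
def toy_grad(z):
    return 1.0 + 5.0 * np.cos(50.0 * z)


image = np.linspace(0.0, 1.0, 16).reshape(4, 4)
saliency = smoothgrad(toy_grad, image, n_samples=200)
```

Compared with `np.abs(toy_grad(image))`, the averaged map varies much less across pixels, which is the smoothing effect visible in the images above.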

Usage

ActivationMaximization (Visualizing a Convolutional Filter)

```python
import tensorflow as tf
from tensorflow.keras.applications import VGG16
from matplotlib import pyplot as plt
from tf_keras_vis.activation_maximization import ActivationMaximization
from tf_keras_vis.activation_maximization.callbacks import Progress
from tf_keras_vis.activation_maximization.input_modifiers import Jitter, Rotate2D
from tf_keras_vis.activation_maximization.regularizers import TotalVariation2D, Norm
from tf_keras_vis.utils.model_modifiers import ExtractIntermediateLayer, ReplaceToLinear
from tf_keras_vis.utils.scores import CategoricalScore

# Index of the 'block5_conv3' filter to visualize (0-511); choose your own.
FILTER_INDEX = 0

# Create the visualization instance.
# All visualization classes accept a model and model modifiers in their
# constructor. Here the model is cut at 'block5_conv3', and the activation
# of its last layer is replaced with a linear function.
activation_maximization = \
    ActivationMaximization(VGG16(),
                           model_modifier=[ExtractIntermediateLayer('block5_conv3'),
                                           ReplaceToLinear()],
                           clone=False)

# Use a Score class to specify the target you want to visualize,
# and add regularizers or input modifiers as needed.
activations = \
    activation_maximization(CategoricalScore(FILTER_INDEX),
                            steps=200,
                            input_modifiers=[Jitter(jitter=16), Rotate2D(degree=1)],
                            regularizers=[TotalVariation2D(weight=1.0),
                                          Norm(weight=0.3, p=1)],
                            optimizer=tf.keras.optimizers.RMSprop(1.0, 0.999),
                            callbacks=[Progress()])

## Since v0.6.0, calling `astype()` is NOT necessary.
# activations = activations[0].astype(np.uint8)

# Render
plt.imshow(activations[0])
plt.show()
```
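Conceptually, activation maximization is gradient ascent on the input: start from a seed input and repeatedly step in the direction that increases the target score minus the regularization penalties. The NumPy sketch below shows that loop on a hypothetical linear unit. An L2 penalty stands in for regularizers such as `Norm` and `TotalVariation2D` because its optimum has a closed form, and a plain update rule replaces the tf.keras optimizer (RMSprop above); tf-keras-vis itself differentiates the real model with TensorFlow.

```python
import numpy as np


def maximize_activation(w, l2_weight=0.5, lr=0.1, steps=200, seed=0):
    """Gradient ascent on the input to maximize a toy linear unit.

    Hypothetical unit: a(x) = sum(w * x). The objective adds an L2 penalty,
    a(x) - l2_weight * sum(x**2), so the input cannot grow without bound.
    """
    rng = np.random.default_rng(seed)
    x = rng.uniform(-0.1, 0.1, size=w.shape)  # small random seed input
    for _ in range(steps):
        # Gradient of the regularized objective with respect to x.
        grad = w - 2.0 * l2_weight * x
        x = x + lr * grad  # ascent: step along (not against) the gradient
    return x


w = np.array([[1.0, -2.0], [0.5, 3.0]])
x_best = maximize_activation(w)
# The objective is concave with its maximum at x = w / (2 * l2_weight),
# which equals w here, so x_best should be very close to w.
```

Without the penalty the unit's activation would keep increasing as the input grows; regularizers are what keep the generated input in a sensible range.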

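GradCAM++

```python
import numpy as np
from matplotlib import pyplot as plt
from matplotlib import cm
from tf_keras_vis.gradcam_plus_plus import GradcamPlusPlus
from tf_keras_vis.utils.model_modifiers import ReplaceToLinear
from tf_keras_vis.utils.scores import CategoricalScore

# Placeholders to supply yourself:
#   YOUR_MODEL_INSTANCE - the tf.keras.Model to explain
#   CATEGORICAL_INDEX   - the class index (or one index per sample) to explain
#   SEED_INPUT          - the preprocessed input batch fed to the model
#   SEED_INPUT_IMAGE    - the original image displayed under the heatmap

# Create GradCAM++ object
gradcam = GradcamPlusPlus(YOUR_MODEL_INSTANCE,
                          model_modifier=ReplaceToLinear(),
                          clone=True)

# Generate cam with GradCAM++
cam = gradcam(CategoricalScore(CATEGORICAL_INDEX),
              SEED_INPUT)

## Since v0.6.0, calling `normalize()` is NOT necessary.
# cam = normalize(cam)

plt.imshow(SEED_INPUT_IMAGE)
# cm.jet returns RGBA values in [0, 1]; keep RGB and scale to 0-255.
heatmap = np.uint8(cm.jet(cam[0])[..., :3] * 255)
plt.imshow(heatmap, cmap='jet', alpha=0.5)  # overlay
```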
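The comment in the example notes that calling `normalize()` has not been necessary since v0.6.0. To show what normalizing and overlaying a heatmap involve, here is a NumPy-only sketch of min-max normalization followed by alpha blending. The helper names are hypothetical, not tf-keras-vis functions, and the red-channel heatmap is a simple stand-in for the `cm.jet` colormap and matplotlib `alpha` overlay used above.

```python
import numpy as np


def normalize(cam):
    """Min-max scale a heatmap to [0, 1]; a constant map becomes all zeros."""
    cam = np.asarray(cam, dtype=float)
    span = cam.max() - cam.min()
    if span == 0:
        return np.zeros_like(cam)
    return (cam - cam.min()) / span


def overlay(image, cam, alpha=0.5):
    """Alpha-blend a heatmap onto an (H, W, 3) RGB image with values in 0-255.

    The heatmap is drawn in the red channel only, as a stand-in for a colormap.
    """
    heat = np.zeros(image.shape, dtype=float)
    heat[..., 0] = normalize(cam) * 255.0
    blended = (1.0 - alpha) * image.astype(float) + alpha * heat
    return np.clip(blended, 0, 255).astype(np.uint8)


cam = np.array([[0.0, 2.0], [4.0, 8.0]])
image = np.zeros((2, 2, 3), dtype=np.uint8)
result = overlay(image, cam)
```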

Please see the guides below for more details:

Getting Started Guides

[NOTES] If you have used keras-vis before, you may find tf-keras-vis similar. In fact, tf-keras-vis is derived from keras-vis, and the visualization methods the two provide are almost the same. Note, however, that the tf-keras-vis API is NOT compatible with keras-vis.

Requirements

- Python 3.7+

- TensorFlow 2.0+

Installation

- PyPI

```bash
$ pip install tf-keras-vis tensorflow
```

- Source (for development)

```bash
$ git clone https://github.com/keisen/tf-keras-vis.git
$ cd tf-keras-vis
$ pip install -e .[develop] tensorflow
```

Use Cases

- chitra: a deep learning computer vision library for easy data loading, model building, and model interpretation with GradCAM/GradCAM++.

Known Issues

- With InceptionV3, ActivationMaximization doesn't work well: it may generate a meaningless, blurry image.

- With cascading models, Gradcam and Gradcam++ don't work well: they may raise errors. We recommend using FasterScoreCAM in this case.

- channels-first models and data are unsupported.
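Because channels-first models and data are unsupported, inputs stored channels-first have to be converted to channels-last before use (and the model itself must expect channels-last input). A minimal NumPy sketch with a hypothetical helper name:

```python
import numpy as np


def to_channels_last(batch):
    """Move the channel axis of a channels-first batch to the end.

    (N, C, H, W) -> (N, H, W, C), and likewise (N, C, D, H, W) -> (N, D, H, W, C).
    """
    return np.moveaxis(batch, 1, -1)


x = np.arange(2 * 3 * 4 * 5).reshape(2, 3, 4, 5)  # N=2, C=3, H=4, W=5
y = to_channels_last(x)
```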

ToDo

- Guides
    - Visualizing multiple attention or activation images at once by utilizing the model's batch processing
    - Defining various score functions
    - Visualizing attentions with multiple-input models
    - Visualizing attentions with multiple-output models
    - Advanced score functions
    - Tuning Activation Maximization
    - Visualizing attentions for N-dim image inputs

- We're going to add methods such as the following:
    - Deep Dream
    - Style transfer


Download files


Source Distribution

Built Distribution

File details

Details for the file tf-keras-vis-0.8.6.tar.gz.

File metadata

- Download URL: tf-keras-vis-0.8.6.tar.gz

- Upload date:

- Size: 28.7 kB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.8.18

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 8f28ae869fc94bc306090b124239bbcffedeee8b3c704c04a1dd61ebc0b99bad |
| MD5 | caf90c67ec506bffdaaa6e9a64cef2ee |
| BLAKE2b-256 | 27825e02ff58a5a7dc14cf5671154572a1752208f10c0da37d149c13b671eb3a |

File details

Details for the file tf_keras_vis-0.8.6-py3-none-any.whl.

File metadata

- Download URL: tf_keras_vis-0.8.6-py3-none-any.whl

- Upload date:

- Size: 52.1 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.8.18

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 0f0c0bcfeecd201b75cca80acc33f71f15be2241f6a9f2776979a521b03c9b0b |
| MD5 | dd69d2c5eab8d42f484ed35a93672c7d |
| BLAKE2b-256 | d57f115b804ae352989a2cf12ce66fb890f72a391837fa9baa9b218248b088a7 |