Memory management library to enhance AI agents with smarter, personalized, context-aware responses

Tovana

Memory Driven Reasoning for Smarter AI Agents

Tovana is a library that introduces a new approach to improving LLM reasoning through actionable insights (beliefs) derived from continuous interactions and long-term memory. Supercharge your AI agents with personalized, context-aware responses.

Why Tovana?

Current AI memory systems have drawbacks that limit their ability to mimic human-like intelligence. They are largely static (vector databases and semantic search), lack contextual understanding, cannot learn from experience or form beliefs, handle contradictions poorly, offer limited associative capabilities, and have no emotional intelligence. AI agents also struggle with abstraction, lack meta-cognitive abilities, and have no mechanism for selectively retaining or forgetting information. Together, these shortcomings restrict agents' adaptability, decision-making, and ability to navigate complex, real-world scenarios.

The proposed AI agent memory system is designed to augment human memory and enhance AI agents' capabilities. Its purpose is to create more personalized, adaptive, and context-aware AI interactions by simulating human-like memory processes. This system aims to bridge the gap between static knowledge bases and dynamic, experience-based learning, allowing AI agents to evolve their understanding and behavior over time.

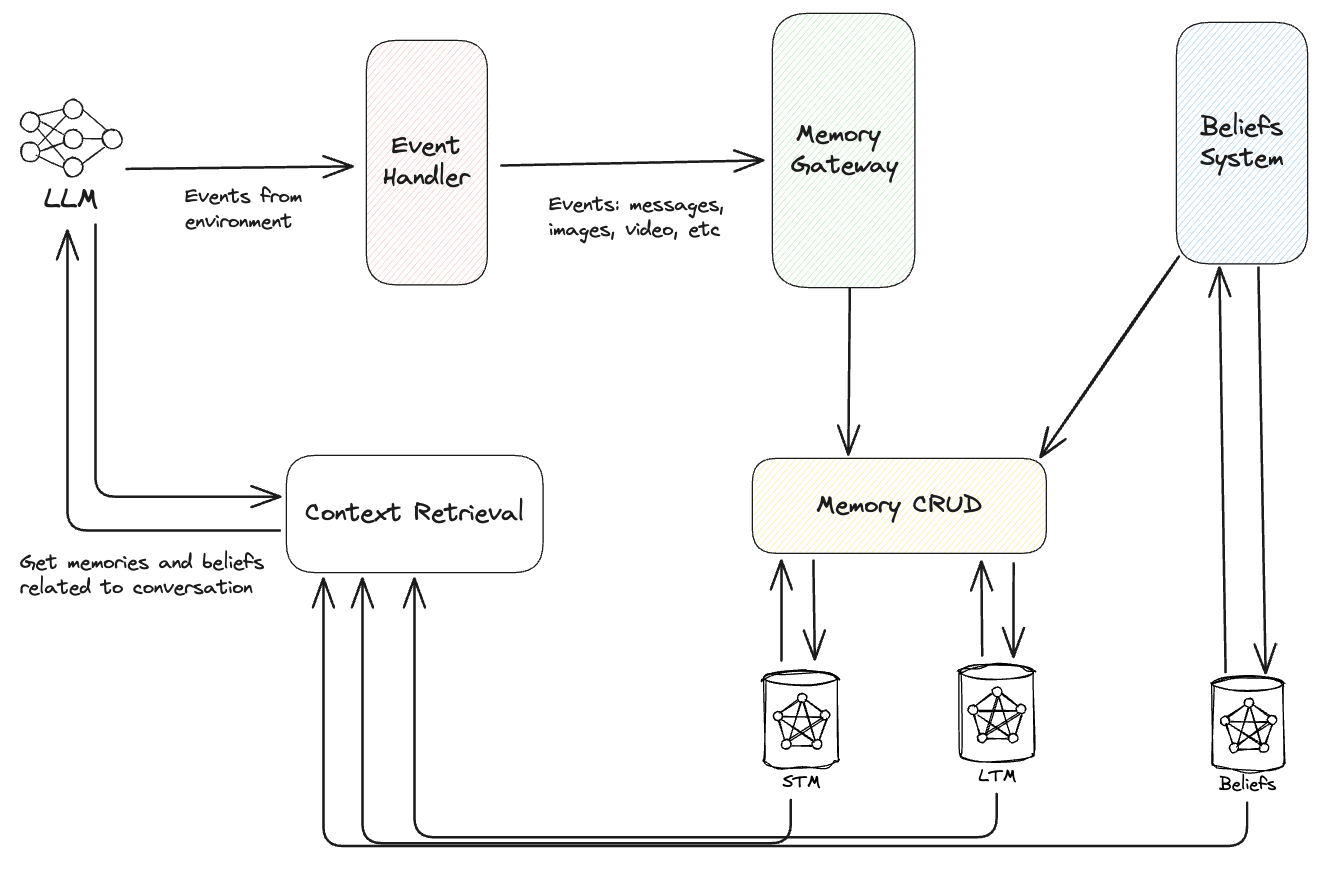

The system is a comprehensive memory and belief management framework for AI agents. It includes components for processing events, storing short-term and long-term memories, managing beliefs, creating associations, and informing decision-making processes. The core concept revolves around converting experiences (events) into memories, which in turn shape beliefs. These beliefs then influence the agent's reasoning, responses, and actions.
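The flow described above (events become memories, and memories shape beliefs) can be pictured with a toy sketch. Everything in it is an illustrative assumption: `Event`, `ToyAgentMemory`, and the single hard-coded rule in `derive_beliefs` are hypothetical names that stand in for the LLM-driven processing a real system would use, and none of it is Tovana's actual implementation.

```python
from dataclasses import dataclass, field


@dataclass
class Event:
    """A single interaction, e.g. one user message (hypothetical type)."""
    user_id: str
    facts: dict[str, str]  # facts assumed to be already extracted from the message


@dataclass
class ToyAgentMemory:
    """Toy stand-in for a memory store; not Tovana's implementation."""
    short_term: list[Event] = field(default_factory=list)
    long_term: dict[str, str] = field(default_factory=dict)

    def process(self, event: Event) -> None:
        # Step 1: the raw event lands in short-term memory.
        self.short_term.append(event)
        # Step 2: its facts are consolidated into long-term memory.
        self.long_term.update(event.facts)

    def derive_beliefs(self) -> list[str]:
        # Step 3: long-term memories are turned into actionable beliefs.
        # A real system would ask an LLM; this sketch uses one fixed rule.
        beliefs = []
        if "pets" in self.long_term:
            beliefs.append(f"Suggest products for: {self.long_term['pets']}")
        return beliefs


memory = ToyAgentMemory()
memory.process(Event(user_id="user123", facts={"pets": "dog named charlie"}))
print(memory.derive_beliefs())  # ['Suggest products for: dog named charlie']
```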

🌟 Features

| Feature | Status | Description |
|---|---|---|
| 🧠 Human-like Memory | ✅ Available | Transform interactions into lasting memories and actionable beliefs |
| 🔍 Smart Information Extraction | ✅ Available | Automatically capture and store relevant user details from conversations |
| 💡 Dynamic Belief Generation | ✅ Available | Create personalized, context-aware insights to guide AI responses |
| 🤖 LLM-Friendly Context | ✅ Available | Seamlessly integrate memory and beliefs into your AI's decision-making process |
| 🔌 Easy Integration | ✅ Available | Plug into your AI applications with a straightforward API |
| 🎭 Conflict Resolution | ✅ Available | Intelligently handle contradictions in user information |
| 🌐 Flexible Architecture | ✅ Available | Designed to work with various LLM providers and models |
| 📊 Memory Management | ✅ Available | Process events, store short-term and long-term memories, and manage beliefs |
| 🔗 Advanced Association Creation | ✅ Available | Form connections between memories and beliefs for more nuanced understanding |
| 🧵 Async Functionality | ✅ Available | Support for asynchronous operations to enhance performance in concurrent environments |
| ⛁ Persistent Database Support | 🔜 Coming Soon | Integration with persistent databases for long-term storage and retrieval of memory data |
| 🎛️ Custom Belief Generation | 🔜 Coming Soon | User-generated beliefs offering end-to-end flexibility in shaping the belief system reasoning |
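To make the Conflict Resolution row more concrete, here is one possible policy, sketched under assumptions. It mirrors the shape of the Quick Start output, where a move from New York to Paris yields both `location` and `previous_location`. The helper `merge_fact` is hypothetical; how Tovana actually resolves contradictions is not specified here and is likely LLM-driven.

```python
def merge_fact(memory: dict[str, str], key: str, new_value: str) -> dict[str, str]:
    """Return a copy of memory with key set to new_value.

    If the key already holds a different value, the old value is kept
    under previous_<key> instead of being silently overwritten.
    """
    merged = dict(memory)
    old_value = merged.get(key)
    if old_value is not None and old_value != new_value:
        merged[f"previous_{key}"] = old_value
    merged[key] = new_value
    return merged


memory = {"location": "New York"}
memory = merge_fact(memory, "location", "Paris")
print(memory)  # {'location': 'Paris', 'previous_location': 'New York'}
```

Keeping the superseded value around, rather than discarding it, lets later reasoning distinguish "lives in Paris" from "has always lived in Paris".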

🏗️ Architecture

🚀 Quick Start

- Install Tovana:

pip install tovana

- Use it in your project:

    from tovana import MemoryManager

    business_description = "an AI therapist"
    message = "I just moved from New York to Paris for work."
    user_id = "user123"

    # Initialize with your preferred LLM provider and API key (Refer to the documentation for specific models)
    memory_manager = MemoryManager(
        api_key="your-llm-provider-api-key-here",
        provider="anthropic",
        business_description=business_description,
        include_beliefs=True,
    )

    # Update user memory
    memory_manager.update_user_memory(user_id=user_id, message=message)

    # Get user memory
    user_memory = memory_manager.get_user_memory(user_id=user_id)
    print(user_memory)  # Output: {'location': 'Paris', 'previous_location': 'New York'}

    # Get memory context for LLM
    context = memory_manager.get_memory_context(user_id=user_id)
    print(context)  # Output: 'User Memory:\n location: Paris,\n previous_location: New York'

    # Get beliefs
    beliefs = memory_manager.get_beliefs(user_id=user_id)
    print(beliefs)  # Output: {"beliefs": "- Suggest spending time with Charlie and Luna when user is feeling down\n- Suggest family activities with Lisa and Mai for emotional well-being\n- Recommend playing basketball for physical exercise and stress relief"}
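Once the memory context and beliefs are retrieved, they still have to reach the model. A minimal sketch of one way to do that is to fold both into a system prompt. `build_system_prompt` is a hypothetical helper, not part of Tovana, and the prompt layout is an assumption; the sample strings are copied from the Quick Start outputs above.

```python
def build_system_prompt(business_description: str, memory_context: str, beliefs: str) -> str:
    """Combine memory context and beliefs into one system prompt string."""
    sections = [f"You are {business_description}."]
    if memory_context:
        sections.append(memory_context)
    if beliefs:
        sections.append("Beliefs to act on:\n" + beliefs)
    return "\n\n".join(sections)


# Values shaped like the Quick Start outputs.
context = "User Memory:\n location: Paris,\n previous_location: New York"
beliefs = "- Recommend playing basketball for physical exercise and stress relief"

prompt = build_system_prompt("an AI therapist", context, beliefs)
print(prompt)
```

Empty sections are skipped, so the same helper works for a new user with no stored memory yet.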

🧠 Belief Generation: The Secret Sauce

Tovana introduces a new approach to LLM reasoning: actionable beliefs generated from user memory. These beliefs provide personalized insights that can significantly enhance your agent's planning, reasoning and responses.

Examples

Input:

    business_description: "a commerce site"
    memory: {'pets': ['dog named charlie', 'horse named luna']}

Output:

{"beliefs": ",- suggest pet products for dogs and horses"}

Input:

    business_description: "an AI therapist"
    memory: {'pets': ['dog named charlie', 'horse named luna'], 'sleep_time': '10pm'}

Output:

{"beliefs": ",- Suggest meditation at 9:30pm\n- Suggest spending time with Charlie and Luna for emotional well-being"}
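The belief payloads shown above pack all beliefs into one string, one per line. A caller that wants to work with individual beliefs could split them as sketched below. `parse_beliefs` is a hypothetical helper, not part of Tovana, and it assumes the payload format stays as shown in these examples, including the occasional stray leading comma.

```python
def parse_beliefs(payload: dict[str, str]) -> list[str]:
    """Split a {"beliefs": "- a\\n- b"} payload into ['a', 'b']."""
    lines = payload.get("beliefs", "").splitlines()
    # Strip list markers, stray leading commas, and surrounding whitespace.
    cleaned = (line.strip().lstrip(",-").strip() for line in lines)
    return [belief for belief in cleaned if belief]


payload = {"beliefs": ",- suggest pet products for dogs and horses"}
print(parse_beliefs(payload))  # ['suggest pet products for dogs and horses']
```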

🛠️ API Reference

MemoryManager

- get_memory(user_id: str) -> JSON: Fetch user memory
- delete_memory(user_id: str) -> bool: Delete user memory
- update_memory(user_id: str, message: str) -> JSON: Update memory with relevant information if found in message
- batch_update_memory(user_id: str, messages: List[Dict[str, str]]) -> JSON: Update memory with relevant information if found in messages
- get_memory_context(user_id: str, message: Optional[str]) -> str: Get formatted memory context, general or message-specific
- get_beliefs(user_id: str) -> str: Get actionable beliefs context
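For unit tests that should not call an LLM provider, a stand-in exposing some of the method names listed above can be handy. The sketch below is an assumption-heavy test double: `FakeMemoryManager`, the injected `extract` callable, and `keyword_extract` are all hypothetical, the return types are plain dicts, and behaviors such as `delete_memory` returning `False` for an unknown user are guesses rather than documented Tovana behavior.

```python
from typing import Callable, Optional

Extractor = Callable[[str], dict[str, str]]


class FakeMemoryManager:
    """In-memory test double; does not talk to any LLM provider."""

    def __init__(self, extract: Extractor) -> None:
        self._extract = extract
        self._store: dict[str, dict[str, str]] = {}

    def get_memory(self, user_id: str) -> dict[str, str]:
        return dict(self._store.get(user_id, {}))

    def update_memory(self, user_id: str, message: str) -> dict[str, str]:
        self._store.setdefault(user_id, {}).update(self._extract(message))
        return self.get_memory(user_id)

    def delete_memory(self, user_id: str) -> bool:
        # Assumption: True only if something was actually deleted.
        return self._store.pop(user_id, None) is not None

    def get_memory_context(self, user_id: str, message: Optional[str] = None) -> str:
        # The fake ignores `message` and always returns the general context.
        lines = [f" {key}: {value}" for key, value in self.get_memory(user_id).items()]
        return "User Memory:\n" + ",\n".join(lines)


def keyword_extract(message: str) -> dict[str, str]:
    # Hypothetical rule: remember the text after "moved to" as the location.
    marker = "moved to "
    if marker in message:
        return {"location": message.split(marker, 1)[1].rstrip(".")}
    return {}


manager = FakeMemoryManager(extract=keyword_extract)
print(manager.update_memory("user123", "I moved to Paris."))  # {'location': 'Paris'}
print(manager.get_memory_context("user123"))
```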

Batch Update Memory

Traditional per-message memory updates can be costly and inefficient, especially in longer conversations. They often miss crucial context, leading to suboptimal information retrieval.

Our batch memory update method addresses these challenges by processing entire conversations at once. This approach not only improves performance and reduces costs but also enhances the quality of extracted information. This results in a more coherent and accurate user memory, ultimately leading to better AI reasoning.

Example

    user_id = "user123"
    messages = [
        {"role": "user", "content": "Hi, I'm planning a trip to Japan."},
        {"role": "assistant", "content": "That's exciting! When are you planning to go?"},
        {"role": "user", "content": "I'm thinking about next spring. I love sushi and technology."},
    ]

    # `await` must run inside an async function; memory_manager is assumed
    # to be an AsyncMemoryManager instance here.
    await memory_manager.batch_update_memory(user_id, messages)
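The cost argument for batching can be illustrated by counting extraction calls. This is a sketch under assumptions: `CountingExtractor` stands in for an LLM call and `to_transcript` is a hypothetical way to flatten a conversation into a single input. How Tovana batches internally is not described here.

```python
def to_transcript(messages: list[dict[str, str]]) -> str:
    """Flatten chat messages into one 'role: content' transcript."""
    return "\n".join(f"{m['role']}: {m['content']}" for m in messages)


class CountingExtractor:
    """Stand-in for an LLM extraction call that only counts invocations."""

    def __init__(self) -> None:
        self.calls = 0

    def __call__(self, text: str) -> None:
        self.calls += 1


messages = [
    {"role": "user", "content": "Hi, I'm planning a trip to Japan."},
    {"role": "assistant", "content": "That's exciting! When are you planning to go?"},
    {"role": "user", "content": "I'm thinking about next spring. I love sushi and technology."},
]

# Per-message updates: one extraction call for every user message.
per_message = CountingExtractor()
for m in messages:
    if m["role"] == "user":
        per_message(m["content"])

# Batch update: one call that sees the whole conversation at once.
batched = CountingExtractor()
batched(to_transcript(messages))

print(per_message.calls, batched.calls)  # 2 1
```

Besides the lower call count, the batched call also sees the assistant's question, which is the context that makes "next spring" interpretable as a travel date.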

Sync vs Async Updates

Tovana provides both synchronous and asynchronous update methods to cater to different use cases and application architectures:

- Asynchronous Updates (AsyncMemoryManager): Ideal for applications built on asynchronous frameworks like FastAPI or asynchronous Python scripts. This allows for non-blocking memory updates, improving overall application performance, especially when dealing with I/O-bound operations or high-concurrency scenarios.
- Synchronous Updates (MemoryManager): Suitable for traditional synchronous applications or when you need to ensure that memory updates are completed before proceeding with other operations. This can be useful in scripts or applications where the order of operations is critical.

By providing both options, the library lets you choose the most appropriate method for your application's requirements and architecture.
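The benefit of non-blocking updates shows up when several users are updated at once. The sketch below uses only `asyncio` from the standard library; `StubAsyncManager` and `update_all` are hypothetical stand-ins that simulate a slow, I/O-bound call with `asyncio.sleep`, so no claim is made about the real AsyncMemoryManager constructor or internals.

```python
import asyncio


class StubAsyncManager:
    """Hypothetical stand-in; simulates a slow, I/O-bound memory update."""

    def __init__(self) -> None:
        self.updated: list[str] = []

    async def update_memory(self, user_id: str, message: str) -> None:
        await asyncio.sleep(0.05)  # pretend to wait on an LLM provider
        self.updated.append(user_id)


async def update_all(manager: StubAsyncManager, messages: dict[str, str]) -> None:
    # gather() runs the updates concurrently instead of one after another.
    await asyncio.gather(
        *(manager.update_memory(uid, msg) for uid, msg in messages.items())
    )


manager = StubAsyncManager()
asyncio.run(update_all(manager, {"user1": "Hi", "user2": "Hello", "user3": "Hey"}))
print(sorted(manager.updated))  # ['user1', 'user2', 'user3']
```

With three simulated updates of 0.05 s each, the concurrent version finishes in roughly the time of one update, whereas a synchronous loop would take about three times as long.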

🤝 Contributing

We welcome contributions! Found a bug or have a feature idea? Open an issue or submit a pull request. Let's make Tovana even better together! 💪

📄 License

Tovana is Apache-2.0 licensed. See the LICENSE file for details.

Ready to empower your AI agents with memory-driven reasoning? Get started with Tovana! 🚀 If you find it useful, don't forget to star the repo! ⭐
