An ensemble method to recover corrupted FASTQ files, drop or fix pesky lines, remove unpaired reads, and fix read interleaving

Project description

FastqWiper

FastqWiper is a Snakemake-enabled application that wipes out bad reads from broken FASTQ files. Additionally, the available pre-designed Snakemake workflows allow recovering corrupted fastq.gz files, dropping or fixing pesky lines, removing unpaired reads, and fixing read interleaving.

- Compatibility: Python ≥3.7, <3.11

- OS: Windows, Linux, Mac OS (Snakemake workflows through Docker for Windows)

- Contributions: bioinformatics@css-mendel.it

- Docker: https://hub.docker.com/r/mazzalab/fastqwiper

- Bug report: https://github.com/mazzalab/fastqwiper/issues

USAGE

- Case 1. You have one or a pair (R1 & R2) of computer-readable FASTQ files that contain pesky, unformatted, non-compliant lines: use FastqWiper alone to clean them;

- Case 2. You have one or a pair (R1 & R2) of computer-readable FASTQ files from which you want to drop unpaired reads, or in which you want to fix read interleaving: use FastqWiper's Snakemake workflows;

- Case 3. You have one fastq.gz file or a pair (R1 & R2) of fastq.gz files that are corrupted, and you want to recover the healthy reads and reformat them: use FastqWiper's Snakemake workflows.
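One way to tell Cases 1 and 2 from Case 3 is to check whether a fastq.gz file decompresses cleanly from start to end. The following is a minimal sketch that uses only the Python standard library; the helper name gzip_is_readable is an assumption made for illustration and is not part of FastqWiper.

```python
import gzip
import zlib


def gzip_is_readable(path, chunk_size=1 << 20):
    """Return True if the whole gzip stream at `path` decompresses cleanly.

    Hypothetical helper, not part of FastqWiper.
    """
    try:
        with gzip.open(path, "rb") as handle:
            # Read to the end: truncation and checksum errors only surface
            # once the damaged part of the stream is reached.
            while handle.read(chunk_size):
                pass
    except (OSError, EOFError, zlib.error):
        # OSError covers gzip.BadGzipFile and a failed CRC check,
        # EOFError covers truncated streams.
        return False
    return True
```

If the function returns False for a file, that file is a candidate for Case 3; if it returns True, Case 1 or Case 2 is the more likely fit.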

Installation

Case 1

This case only requires installing FastqWiper; you do not need to configure any workflow. Installation works on all operating systems:

Use Conda

conda create -n fastqwiper python=3.10

conda activate fastqwiper

conda install -c bfxcss fastqwiper

fastqwiper --help

Hint: for a healthier experience, use mamba.

Use PyPI

pip install fastqwiper

fastqwiper --help

fastqwiper <options>

options:

--fastq_in TEXT The input FASTQ file to be cleaned [required]

--fastq_out TEXT The wiped FASTQ file [required]

--log_frequency INTEGER The number of reads after which a status message is printed

--log_out TEXT The file name of the final quality report summary

--help Show this message and exit.

It accepts as input, and produces as output, readable *.fastq or *.fastq.gz files.
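When many files need cleaning, the command line above can be assembled from a script. The sketch below only builds the argument list from the options documented here; the helper name build_fastqwiper_command, the file names, and the log frequency value are assumptions chosen for illustration.

```python
import shlex


def build_fastqwiper_command(fastq_in, fastq_out, log_frequency=None, log_out=None):
    """Build the argument list for one fastqwiper call (hypothetical helper)."""
    command = ["fastqwiper", "--fastq_in", fastq_in, "--fastq_out", fastq_out]
    # The two logging options are optional, so add them only when requested.
    if log_frequency is not None:
        command += ["--log_frequency", str(log_frequency)]
    if log_out is not None:
        command += ["--log_out", log_out]
    return command


# Hypothetical file names, used only to show the resulting command line.
command = build_fastqwiper_command(
    "data/sample_R1.fastq.gz",
    "data/sample_R1_wiped.fastq.gz",
    log_frequency=500000,
    log_out="data/sample_R1_report.txt",
)
print(" ".join(shlex.quote(part) for part in command))
```

The returned list can be passed to subprocess.run(command, check=True) once FastqWiper is installed in the active environment.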

Cases 2 & 3

There is a QUICK and a SLOW method to configure FastqWiper's workflows.

The quick way (Docker, all OS)

- Pull the Docker image from DockerHub:

docker pull mazzalab/fastqwiper

- Once the image has been downloaded, type:

docker run --rm -ti --name fastqwiper -v "YOUR_LOCAL_PATH_TO_DATA_FOLDER:/fastqwiper/data" mazzalab/fastqwiper paired 8 sample

where:

- YOUR_LOCAL_PATH_TO_DATA_FOLDER is the path of the folder where the fastq.gz files to be wiped are located;

- paired triggers the cleaning of R1 and R2. Alternatively, single will trigger the wipe of individual FASTQ files;

- 8 is the number of computing cores to be spawned, which you can choose freely;

- sample is part of the names of the FASTQ files to be wiped. Be aware that for paired-end files (e.g., "sample_R1.fastq.gz" and "sample_R2.fastq.gz"), your file names must end with _R1.fastq.gz and _R2.fastq.gz. Therefore, the argument to pass is everything before these suffixes: sample in this case. For single-end/individual files (e.g., "excerpt_R1_001.fastq.gz"), your file name must end with .fastq.gz; the preceding text, i.e., "excerpt_R1_001" in this case, is the argument to pass to the command. E.g.,

docker run --rm -ti --name fastqwiper -v "YOUR_LOCAL_PATH_TO_DATA_FOLDER:/fastqwiper/data" mazzalab/fastqwiper single 8 excerpt_R1_001
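The naming rule for the last two arguments can be applied mechanically to the contents of a data folder. The following sketch maps file names to the mode and sample arguments of the Docker command; the helper name docker_arguments is an assumption for illustration, and treating an _R1 file without its _R2 mate as a single-end file is a choice of this sketch, not documented FastqWiper behaviour.

```python
R1_SUFFIX = "_R1.fastq.gz"
R2_SUFFIX = "_R2.fastq.gz"
SINGLE_SUFFIX = ".fastq.gz"


def docker_arguments(file_names):
    """Map FASTQ file names to (mode, sample) pairs (hypothetical helper)."""
    names = set(file_names)
    arguments = []
    used = set()
    # First pass: an R1 file with a matching R2 mate gives one "paired" run.
    for name in sorted(names):
        if name.endswith(R1_SUFFIX):
            prefix = name[: -len(R1_SUFFIX)]
            mate = prefix + R2_SUFFIX
            if mate in names:
                arguments.append(("paired", prefix))
                used.update({name, mate})
    # Second pass: every remaining .fastq.gz file gives one "single" run.
    for name in sorted(names - used):
        if name.endswith(SINGLE_SUFFIX):
            arguments.append(("single", name[: -len(SINGLE_SUFFIX)]))
    return arguments


files = ["sample_R1.fastq.gz", "sample_R2.fastq.gz", "excerpt_R1_001.fastq.gz"]
for mode, sample in docker_arguments(files):
    print(
        'docker run --rm -ti --name fastqwiper '
        '-v "YOUR_LOCAL_PATH_TO_DATA_FOLDER:/fastqwiper/data" '
        f"mazzalab/fastqwiper {mode} 8 {sample}"
    )
```

For the three file names in the example, the sketch yields one paired run for sample and one single run for excerpt_R1_001, matching the two commands shown above.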

The slow way (Linux & Mac OS)

To enable the use of preconfigured pipelines, you need to install Snakemake. The recommended way to install Snakemake is via Conda, because it enables Snakemake to handle software dependencies of your workflow. However, the default conda solver is slow and often hangs. Therefore, we recommend installing Mamba as a drop-in replacement via

$ conda install -c conda-forge mamba

if you have anaconda/miniconda already installed, or directly installing Mambaforge as described here.

Then, create and activate a clean environment as above:

mamba create -n fastqwiper python=3.10

mamba activate fastqwiper

Finally, install a few dependencies:

$ mamba install -c bioconda snakemake

$ mamba install colorama click

Usage

Clone the FastqWiper repository in a folder of your choice and enter it:

git clone https://github.com/mazzalab/fastqwiper.git

cd fastqwiper

It contains, in particular, a folder data containing the fastq files to be processed, a folder pipeline containing the released pipelines and a folder fastq_wiper with the source files of FastqWiper.

Input files to be processed should be copied into the data folder. Software packages that are used by the pipelines but not fetched from Conda should be placed in the root directory of the cloned repository, although this is not strictly mandatory.

Currently, to run the FastqWiper pipelines, the following packages need to be installed manually:

required packages:

gzrt (Linux build from source instructions, Ubuntu install instructions, Mac OS install instructions)

BBTools (install instructions)

Both packages need to be downloaded and installed in the root folder of FastqWiper, as shown in the image below.

Commands:

Copy the fastq files you want to fix into the data folder.

N.b.: In all commands below, you pass the name of the sample to be analyzed to the workflow through the config argument sample_name. Remember that the names of your fastq files must end with _R1.fastq.gz and _R2.fastq.gz for paired fastq files, and with .fastq.gz for individual fastq files; the text assigned to sample_name must therefore be everything before these suffixes. E.g., if your files are my_sample_R1.fastq.gz and my_sample_R2.fastq.gz, then use --config sample_name=my_sample.
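Before launching a workflow, it can help to confirm that the files implied by a given sample_name are actually present in the data folder. The sketch below derives the expected file names from the naming rule stated above; the helper names expected_inputs and missing_inputs are assumptions for illustration, and the workflows themselves may resolve their inputs differently.

```python
from pathlib import Path


def expected_inputs(sample_name, paired, data_dir="data"):
    """List the input files implied by sample_name (hypothetical helper)."""
    suffixes = ("_R1.fastq.gz", "_R2.fastq.gz") if paired else (".fastq.gz",)
    return [Path(data_dir) / f"{sample_name}{suffix}" for suffix in suffixes]


def missing_inputs(sample_name, paired, data_dir="data"):
    """Return the expected input files that do not exist on disk."""
    return [
        path
        for path in expected_inputs(sample_name, paired, data_dir)
        if not path.is_file()
    ]


# With sample_name=my_sample, the paired workflows expect these two files.
for path in expected_inputs("my_sample", paired=True):
    print(path)
```

An empty list from missing_inputs means that every expected file is in place for the chosen sample_name.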

Paired-end files

-

Get a dry run of a pipeline (e.g.,

fix_wipe_single_reads_sequential.smk):

snakemake --config sample_name=my_sample -s pipeline/fix_wipe_pairs_reads_sequential.smk --use-conda --cores 4 -

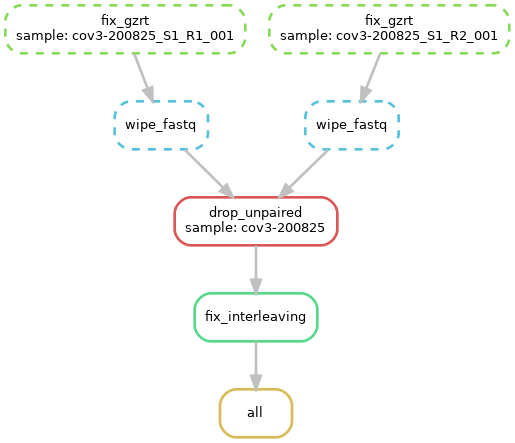

Generate the planned DAG:

snakemake --config sample_name=my_sample -s pipeline/fix_wipe_single_reads_sequential.smk --dag | dot -Tpdf > dag.pdf

- Run the pipeline (n.b., during the first execution, Snakemake will download and install some required remote packages and may take longer). The number of computing cores can be tuned accordingly:

snakemake --config sample_name=my_sample -s pipeline/fix_wipe_pairs_reads_sequential.smk --use-conda --cores 2

Fixed files are written to the data folder, with the suffix _fixed_wiped_paired_interleaving appended to their names.

Recall that the fix_wipe_pairs_reads.smk pipeline performs the following actions:

- execute gzrt on corrupted fastq.gz files (i.e., files that cannot be unzipped because of errors) and recover readable reads;

- execute fastqwiper on recovered reads to make them compliant with the FASTQ format (source: Wikipedia);

- execute Trimmomatic on wiped reads to remove residual unpaired reads;

- execute BBmap (repair.sh) on paired reads to fix the interleaving and sort the fastq files.
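To make the terms "compliant with the FASTQ format" and "unpaired reads" concrete, here is a small illustrative sketch. It is not the logic used by FastqWiper, Trimmomatic, or repair.sh; the function names are hypothetical, and the checks assume the common four-line record layout (a header starting with @, the sequence, a separator starting with +, and a quality string as long as the sequence) and read names that may end in /1 or /2.

```python
def is_wellformed_record(header, sequence, separator, quality):
    """Check one four-line FASTQ record (lines already stripped of newlines)."""
    return (
        header.startswith("@")
        and len(header) > 1
        and len(sequence) > 0
        and separator.startswith("+")
        and len(sequence) == len(quality)
    )


def read_id(header):
    """Extract the read name from a well-formed header line.

    Drops the leading '@', any description after whitespace,
    and a trailing /1 or /2 mate marker.
    """
    identifier = header[1:].split()[0]
    if identifier.endswith(("/1", "/2")):
        identifier = identifier[:-2]
    return identifier


def unpaired_ids(r1_headers, r2_headers):
    """Return the read names found only in R1 and only in R2."""
    ids_r1 = {read_id(header) for header in r1_headers}
    ids_r2 = {read_id(header) for header in r2_headers}
    return ids_r1 - ids_r2, ids_r2 - ids_r1


only_r1, only_r2 = unpaired_ids(["@a/1", "@b/1"], ["@b/2", "@c/2"])
print(sorted(only_r1), sorted(only_r2))
```

In this toy example, read a has no mate in R2 and read c has no mate in R1, so both would count as unpaired.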

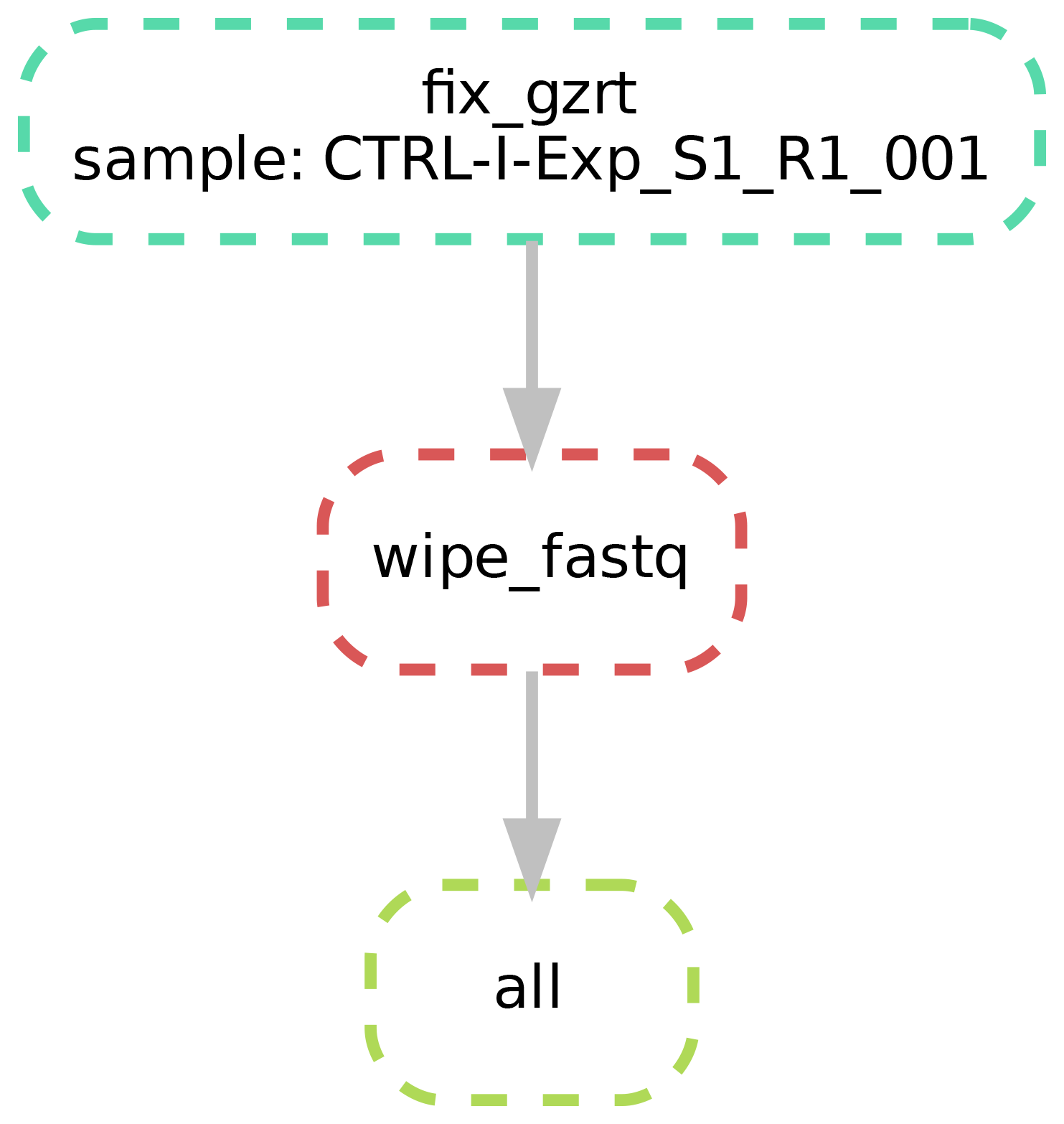

Single-end files

fix_wipe_single_reads_parallel.smk and fix_wipe_single_reads_sequential.smk do not execute Trimmomatic or BBmap's repair.sh.

- Get a dry run of a pipeline (e.g., fix_wipe_single_reads_sequential.smk):

snakemake --config sample_name=my_sample -s pipeline/fix_wipe_single_reads_sequential.smk --use-conda --cores 2 -np

- Generate the planned DAG:

snakemake --config sample_name=my_sample -s pipeline/fix_wipe_single_reads_sequential.smk --dag | dot -Tpdf > dag.pdf

- Run the pipeline (n.b., during the first execution, Snakemake will download and install some required remote packages and may take longer). The number of computing cores can be tuned accordingly:

snakemake --config sample_name=my_sample -s pipeline/fix_wipe_single_reads_sequential.smk --use-conda --cores 2

Author

Laboratory of Bioinformatics

Fondazione IRCCS Casa Sollievo della Sofferenza

Viale Regina Margherita 261 - 00198 Roma IT

Tel: +39 06 44160526 - Fax: +39 06 44160548

E-mail: t.mazza@css-mendel.it

Web page: http://www.css-mendel.it

Web page: http://bioinformatics.css-mendel.it

