A command-line tool for generating UMAP plots and KMeans clustering from JSONL data

Project description

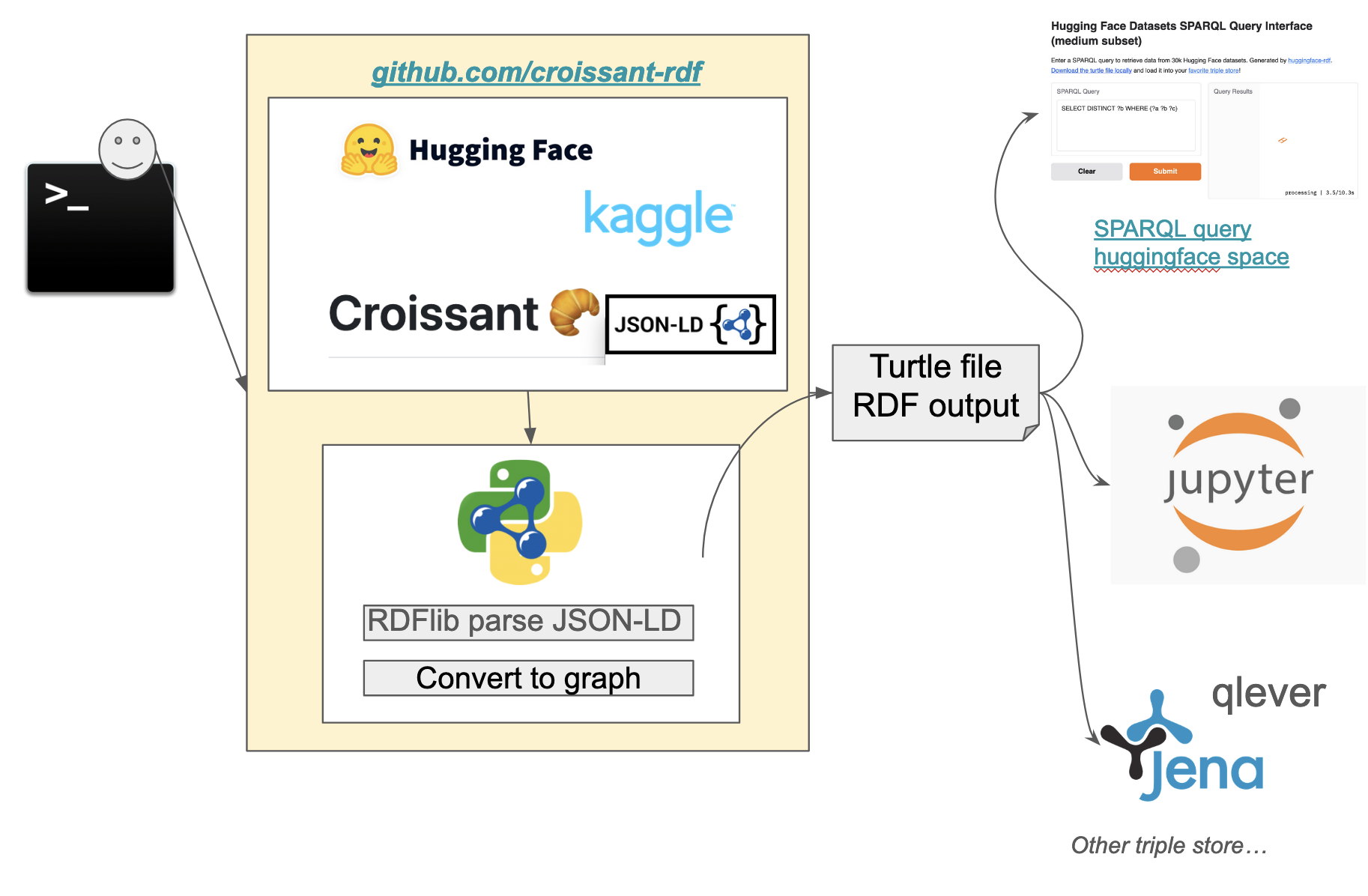

croissant-rdf

A proud Biohackathon project 🧑‍💻🧬👩‍💻

huggingface_rdf is a Python tool that generates RDF (Resource Description Framework) data from datasets available on Hugging Face. It lets researchers and developers convert that data into a machine-readable format that can be queried with SPARQL and analyzed alongside other RDF data.

This is made possible by aligning with the MLCommons Croissant schema, to which Hugging Face and others conform.

Features

- Fetch datasets from Hugging Face.

- Convert datasets to RDF format.

- Generate Turtle (.ttl) files for easy integration with SPARQL endpoints.
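To make the conversion step concrete, here is a minimal sketch of writing a flat, schema.org-style metadata record as Turtle. It is not the tool's actual code: it assumes a record whose keys are schema.org property names and whose values are strings or lists of strings, and the dataset IRI in the usage line is hypothetical. The real tool works from full Croissant JSON-LD and may serialize differently.

```python
# Minimal sketch: write a flat, schema.org-style metadata record as Turtle.
# Assumptions (not the tool's actual code): keys are schema.org property
# names, values are strings or lists of strings, and the IRI is hypothetical.

SCHEMA = "https://schema.org/"
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"


def turtle_literal(value):
    """Quote a string as a Turtle literal, escaping backslashes, quotes, CR and LF."""
    escaped = (
        value.replace("\\", "\\\\")
        .replace('"', '\\"')
        .replace("\n", "\\n")
        .replace("\r", "\\r")
    )
    return f'"{escaped}"'


def to_turtle(dataset_iri, metadata):
    """Return one triple per line with full IRIs (valid Turtle and N-Triples)."""
    lines = [f"<{dataset_iri}> <{RDF_TYPE}> <{SCHEMA}Dataset> ."]
    for prop, value in metadata.items():
        values = value if isinstance(value, list) else [value]
        for item in values:
            lines.append(f"<{dataset_iri}> <{SCHEMA}{prop}> {turtle_literal(item)} .")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    record = {"name": "example-dataset", "keywords": ["biology", "genomics"]}
    print(to_turtle("https://huggingface.co/datasets/example-org/example-dataset", record), end="")
```

Writing every triple in full, rather than using prefixes and `;` shorthand, keeps the sketch short and produces output any Turtle parser (including QLever and Fuseki) can load.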

Installation

To install huggingface_rdf, clone the repository and install the package using pip:

git clone https://github.com/david4096/croissant-rdf.git

cd croissant-rdf

pip install .

Usage

After installing the package, you can use the command-line interface (CLI) to generate RDF data:

export HF_API_KEY={YOUR_KEY}

huggingface-rdf --fname huggingface.ttl --limit 10
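As a hedged illustration of the kind of request involved, the sketch below builds an authenticated request for one dataset's Croissant metadata, reading the token from the same `HF_API_KEY` variable. It assumes the Hugging Face Hub endpoint `https://huggingface.co/api/datasets/{repo_id}/croissant`; whether the CLI uses that exact endpoint is an assumption, and the helper name `croissant_request` is hypothetical. The request is only built, not sent.

```python
import os
import urllib.parse
import urllib.request
from typing import Optional

# Assumption: the Hub serves Croissant JSON-LD at this path; the CLI may use
# a different endpoint or client library internally.
HUB_DATASETS_API = "https://huggingface.co/api/datasets"


def croissant_request(repo_id: str, token: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) a GET request for one dataset's Croissant metadata."""
    # Keep the "/" between owner and dataset name; percent-encode anything else.
    url = f"{HUB_DATASETS_API}/{urllib.parse.quote(repo_id, safe='/')}/croissant"
    headers = {}
    # Fall back to the same environment variable the CLI reads.
    token = token if token is not None else os.environ.get("HF_API_KEY")
    if token:
        # Hugging Face access tokens are sent as a Bearer token.
        headers["Authorization"] = f"Bearer {token}"
    return urllib.request.Request(url, headers=headers)


if __name__ == "__main__":
    # "example-org/example-dataset" is a hypothetical repository id.
    req = croissant_request("example-org/example-dataset")
    print(req.full_url)
    # Sending it requires network access, e.g.:
    #   import json
    #   with urllib.request.urlopen(req) as resp:
    #       croissant = json.load(resp)
```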

See the qlever_scripts directory for help loading the RDF into QLever for querying.

You can also run Apache Jena Fuseki and load the generated .ttl file through the Fuseki UI:

docker run -it -p 3030:3030 stain/jena-fuseki
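If you prefer scripting the load instead of using the UI, Fuseki also accepts data over HTTP via the SPARQL 1.1 Graph Store Protocol. The sketch below builds a POST that adds a Turtle file's triples to a dataset's default graph. It assumes a dataset named `ds` has already been created and exposes the `/data` service; both depend on how you set up the dataset, and if your server requires authentication you will also need to add credentials.

```python
import urllib.parse
import urllib.request

# Assumptions: a Fuseki dataset named "ds" already exists (create it in the UI
# first) and exposes the Graph Store Protocol service at /ds/data.
FUSEKI = "http://localhost:3030"


def fuseki_upload_request(turtle: bytes, dataset: str = "ds", base: str = FUSEKI) -> urllib.request.Request:
    """Build (but do not send) a POST that adds Turtle triples to the default graph."""
    # "?default" targets the default graph, as defined by the Graph Store Protocol.
    url = f"{base}/{urllib.parse.quote(dataset)}/data?default"
    return urllib.request.Request(
        url,
        data=turtle,
        headers={"Content-Type": "text/turtle; charset=utf-8"},
        method="POST",
    )


# Usage (requires the container above to be running):
#   from pathlib import Path
#   req = fuseki_upload_request(Path("huggingface.ttl").read_bytes())
#   with urllib.request.urlopen(req) as resp:
#       print(resp.status)
```

POST adds to the graph rather than replacing it, so loading several files (for example the Hugging Face and Kaggle outputs) into the same dataset accumulates their triples.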

Extracting data from Kaggle

You'll need a Kaggle API key, which is provided in a file called kaggle.json. Put the username and key from that file into environment variables:

export KAGGLE_USERNAME={YOUR_USERNAME}

export KAGGLE_KEY={YOUR_KEY}

kaggle-rdf --fname kaggle.ttl --limit 10
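Rather than copying the values by hand, a small helper can read them from kaggle.json and print the matching `export` lines. This is a sketch: the file holds a JSON object with `username` and `key` fields, and `~/.kaggle/kaggle.json` is where the Kaggle CLI conventionally looks for it, so adjust the path if yours is elsewhere.

```python
import json
import shlex
from pathlib import Path

# kaggle.json holds {"username": ..., "key": ...}; ~/.kaggle/ is where the
# Kaggle CLI conventionally looks for it (adjust if yours is elsewhere).
DEFAULT_PATH = Path.home() / ".kaggle" / "kaggle.json"


def kaggle_env(text: str) -> dict:
    """Map the contents of kaggle.json to the variable names used above."""
    creds = json.loads(text)
    return {"KAGGLE_USERNAME": creds["username"], "KAGGLE_KEY": creds["key"]}


def export_lines(env: dict) -> str:
    """Render shell export statements with safely quoted values."""
    return "\n".join(f"export {name}={shlex.quote(value)}" for name, value in env.items())


if __name__ == "__main__" and DEFAULT_PATH.exists():
    print(export_lines(kaggle_env(DEFAULT_PATH.read_text())))
```

Saved as a script (the name `kaggle_env.py` is hypothetical), it can be applied to the current shell with `eval "$(python kaggle_env.py)"` before running kaggle-rdf.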

Using Docker

To launch a Jupyter notebook server for running and developing the project locally, run the following:

docker build -t croissant-rdf .

docker run -p 8888:8888 -v $(pwd):/app croissant-rdf

The run command above works on macOS and Linux. On Windows, use the following in PowerShell:

docker run -p 8888:8888 -v ${PWD}:/app croissant-rdf

After that, you can access the Jupyter notebook server at http://localhost:8888.

Useful SPARQL Queries

SPARQL (SPARQL Protocol and RDF Query Language) is a query language used to retrieve and manipulate data stored in RDF format, typically within a triplestore. Here are a few useful SPARQL queries you can try on https://huggingface.co/spaces/david4096/huggingface-rdf

A basic SPARQL query has two main parts: SELECT lists the variables you want returned in the result, and WHERE defines the triple patterns to match in the RDF dataset.
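To run these queries from a script rather than a web UI, you can send them to a SPARQL endpoint over HTTP using the SPARQL 1.1 Protocol and read the standard SPARQL JSON results format. Whether the Hugging Face Space exposes a raw SPARQL endpoint is not stated here, so this sketch assumes a local Fuseki dataset named `ds` loaded with the generated .ttl file; the helper names are hypothetical and only the Python standard library is used.

```python
import json
import urllib.parse
import urllib.request

# Assumption: a local Fuseki dataset named "ds" loaded with the generated .ttl.
ENDPOINT = "http://localhost:3030/ds/sparql"


def query_request(query: str, endpoint: str = ENDPOINT) -> urllib.request.Request:
    """Build a SPARQL 1.1 Protocol GET request that asks for JSON results."""
    url = endpoint + "?" + urllib.parse.urlencode({"query": query})
    return urllib.request.Request(url, headers={"Accept": "application/sparql-results+json"})


def rows(results: dict) -> list:
    """Flatten SPARQL JSON results into {variable: value} dicts.

    Unbound variables (e.g. from OPTIONAL) are simply absent from a row.
    """
    variables = results["head"]["vars"]
    return [
        {var: binding[var]["value"] for var in variables if var in binding}
        for binding in results["results"]["bindings"]
    ]


def run(query: str, endpoint: str = ENDPOINT) -> list:
    """Send the query and return the flattened rows (needs a running endpoint)."""
    with urllib.request.urlopen(query_request(query, endpoint)) as resp:
        return rows(json.load(resp))


# Usage, with the first query below:
#   for row in run("SELECT DISTINCT ?b WHERE {?a ?b ?c}"):
#       print(row["b"])
```

Each SELECT variable becomes a key in the returned dicts, so the same helper works unchanged for every query in this section.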

- This query retrieves the distinct predicates used in the Hugging Face RDF dataset:

SELECT DISTINCT ?b WHERE {?a ?b ?c}

- This query retrieves each dataset's name, its predicates, and the number of objects associated with each predicate. The rdf:type triple pattern restricts the results to resources of type https://schema.org/Dataset.

SELECT ?name ?p (count(?o) as ?predicate_count)

WHERE {

?dataset <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <https://schema.org/Dataset> .

?dataset <https://schema.org/name> ?name .

?dataset ?p ?o .

}

GROUP BY ?dataset ?name ?p

- To retrieve the distinct values of the https://schema.org/keywords property that contain "bio", regardless of case:

SELECT DISTINCT ?c

WHERE {

?a <https://schema.org/keywords> ?c .

FILTER(CONTAINS(LCASE(?c), "bio"))

}

- To retrieve the distinct values of the Croissant column predicate (http://mlcommons.org/croissant/column):

SELECT DISTINCT ?c

WHERE {

?a <http://mlcommons.org/croissant/column> ?c

}

- To retrieve creator names and the number of items associated with each, sorted by count in descending order:

SELECT ?creatorName (COUNT(?a) AS ?count)

WHERE {

?a <https://schema.org/creator> ?c.

?c <https://schema.org/name> ?creatorName.

}

GROUP BY ?creatorName

ORDER BY DESC(?count)

Contributing

We welcome contributions! Please open an issue or submit a pull request!


Download files


Source Distribution

Built Distribution

File details

Details for the file huggingface_rdf-0.1.0.tar.gz.

File metadata

- Download URL: huggingface_rdf-0.1.0.tar.gz

- Upload date:

- Size: 9.5 kB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/5.1.1 CPython/3.9.20

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 3b57f0d2bbd4118d486f7b2bc24a5a2a1a5709a9fe8432443318f723eda1e057 |
| MD5 | ba1a86d85b5b1c66846bed13a4325668 |
| BLAKE2b-256 | dfe418f0510758fb2aff36d4f738bfefb004e3ea1c970a7aa1a18aff24181805 |

File details

Details for the file huggingface_rdf-0.1.0-py3-none-any.whl.

File metadata

- Download URL: huggingface_rdf-0.1.0-py3-none-any.whl

- Upload date:

- Size: 10.9 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/5.1.1 CPython/3.9.20

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 712707bd59e86b16a0c230ae0e6ae82db7332824e9c7dd9f8a791c822c5fd437 |
| MD5 | 6ffe2507d58d45fa3a774cb7558d2d22 |
| BLAKE2b-256 | 9b6a8325c21bb69e5dcc1609b6908c47e024990e4ab99441521dc46d08a59e81 |