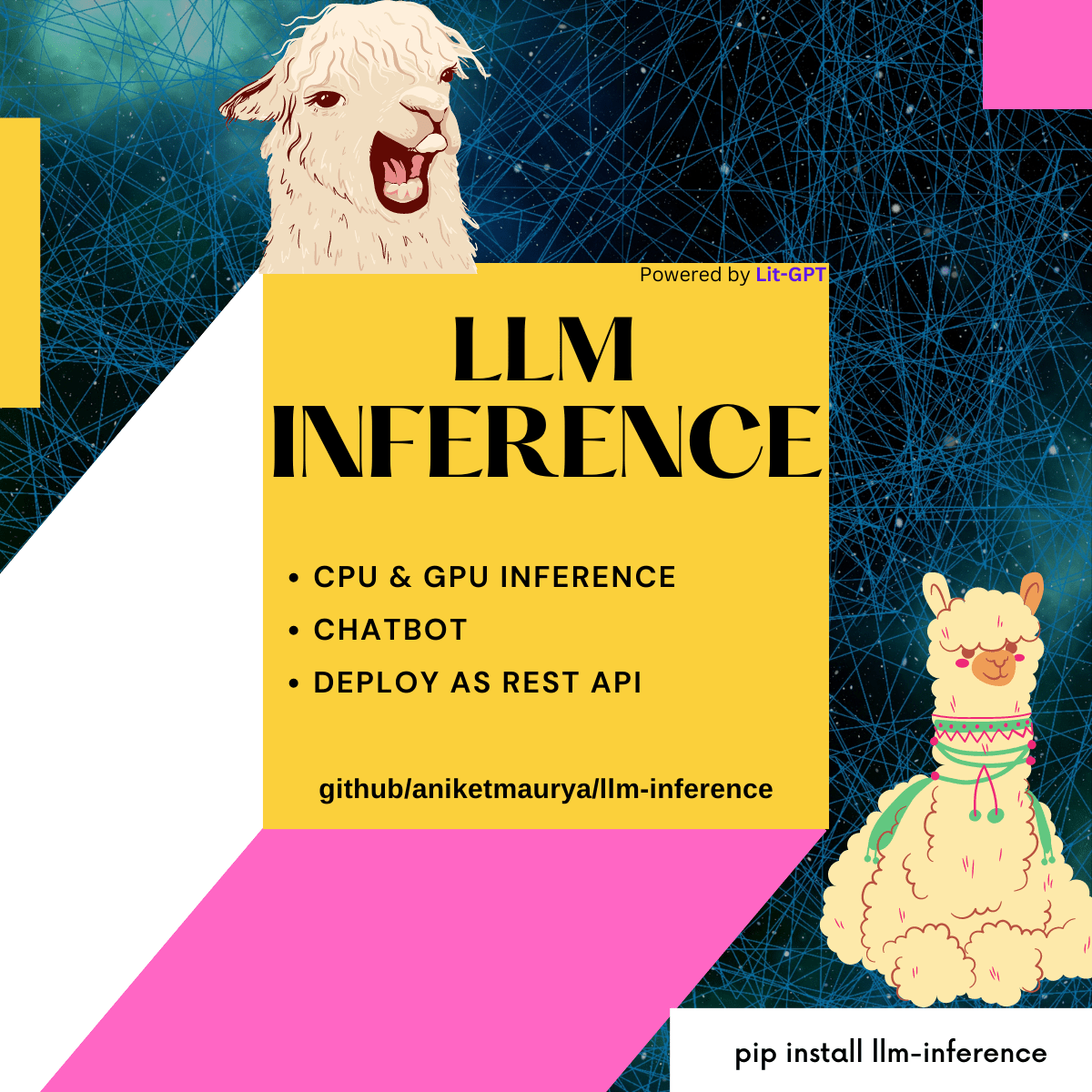

Large Language Models Inference API and Applications


Large Language Model (LLM) Inference API and Chatbot 🦙

Inference API for LLMs such as LLaMA and Falcon, powered by Lit-GPT from Lightning AI.

```bash
pip install llm-inference
```

Install from main branch

```bash
pip install git+https://github.com/aniketmaurya/llm-inference.git@main
```

Note: You need to manually install Lit-GPT and set up the model weights to use this project.

```bash
pip install lit_gpt@git+https://github.com/aniketmaurya/install-lit-gpt.git@install
```
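Before running the examples, you can check that Lit-GPT is importable. This is a minimal sketch, not part of `llm-inference`; it assumes the install above provides a top-level module named `lit_gpt`, matching the distribution name in the pip command. `is_installed` is a hypothetical helper name.

```python
import importlib.util


def is_installed(module_name: str) -> bool:
    """Return True if a top-level module can be located, without importing it."""
    # find_spec returns None when no finder on sys.path can locate the module
    return importlib.util.find_spec(module_name) is not None


if __name__ == "__main__":
    # Assumption: Lit-GPT is importable as the top-level module `lit_gpt`
    if is_installed("lit_gpt"):
        print("Lit-GPT is installed.")
    else:
        print("Lit-GPT not found; install it with the pip command above.")
```

Using `find_spec` instead of a bare `import` avoids executing the package (and loading its heavy dependencies) just to check for its presence.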

For Inference

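```python
from llm_inference import LLMInference, prepare_weights
from rich import print

# Fetch and prepare the model weights; returns the checkpoint directory
path = prepare_weights("EleutherAI/pythia-70m")
model = LLMInference(checkpoint_dir=path)

# Generate a continuation of the prompt
print(model("New York is located in"))
```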

How to use the Chatbot

```python
from llm_chain import LitGPTConversationChain, LitGPTLLM
from llm_inference import prepare_weights
from rich import print

path = str(prepare_weights("lmsys/longchat-13b-16k"))

# 4-bit NF4 quantization via bitsandbytes: about 8.4GB of GPU memory
llm = LitGPTLLM(checkpoint_dir=path, quantize="bnb.nf4")
bot = LitGPTConversationChain.from_llm(llm=llm, verbose=True)

print(bot.send("hi, what is the capital of France?"))
```
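The 8.4GB GPU memory figure in the chatbot example can be sanity-checked with back-of-the-envelope arithmetic: weight storage is roughly parameter count × bits per parameter ÷ 8 bytes. The sketch below is an illustration, not part of the library. It assumes LongChat-13B has about 13 billion parameters and counts weights only, so it ignores quantization constants, any layers kept in higher precision, the KV cache, and CUDA runtime overhead, which plausibly account for the gap up to the measured figure.

```python
def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes (1e9 bytes)."""
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 1e9


if __name__ == "__main__":
    params = 13e9  # assumption: ~13B parameters for longchat-13b-16k
    for label, bits in [("fp16", 16), ("int8", 8), ("nf4", 4)]:
        print(f"{label}: ~{weight_memory_gb(params, bits):.1f} GB of weights")
```

At 4 bits per weight this gives about 6.5 GB, versus about 26 GB at fp16, which is why quantization matters for fitting the 13B model into GPU memory.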

Launch Chatbot App

1. Download weights

```python
from llm_inference import prepare_weights

path = prepare_weights("lmsys/longchat-13b-16k")
```

2. Launch Gradio App

```bash
python examples/chatbot/gradio_demo.py
```

For deploying as a REST API

Create a Python file, `app.py`, and initialize the `ServeLLaMA` app:

```python
# app.py
from llm_inference.serve import ServeLLaMA, Response, PromptRequest

import lightning as L

component = ServeLLaMA(input_type=PromptRequest, output_type=Response)
app = L.LightningApp(component)
```

Then start it with the Lightning CLI:

```bash
lightning run app app.py
```
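Once the app is running, clients talk to it over HTTP. The sketch below builds a JSON POST request with the Python standard library. The endpoint URL (`SERVER_URL`, including the `/predict` path and port) and the single `prompt` field in the body are assumptions for illustration: replace the URL with the address where your server is reachable and match the body to the fields defined on `PromptRequest`. `build_prompt_request` and `send_prompt` are hypothetical helper names.

```python
import json
import urllib.request

# Hypothetical endpoint: replace with the address where the server is reachable
SERVER_URL = "http://127.0.0.1:8000/predict"


def build_prompt_request(prompt: str, url: str = SERVER_URL) -> urllib.request.Request:
    """Build a JSON POST request; assumes the server expects {"prompt": ...}."""
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_prompt(prompt: str, url: str = SERVER_URL, timeout: float = 60.0) -> dict:
    """Send the prompt and decode the JSON response body."""
    request = build_prompt_request(prompt, url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the app running, `print(send_prompt("New York is located in"))` would print the decoded response; its exact shape depends on the `Response` model.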


Download files


File details

Details for the file llm_inference-0.0.6.tar.gz.

File metadata

- Download URL: llm_inference-0.0.6.tar.gz

- Upload date:

- Size: 810.4 kB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.9.17

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 5bc77680d1df2f9af5b4cc9187fd47c473a2c98a32d0c59eb7427949ad19cbfe |
| MD5 | c813fdea738fb735019ce0ee3237a0ef |
| BLAKE2b-256 | 541756ce7b12de3af15ae7acf9358760ad9689e36392da2491888fa7299ad6b5 |

File details

Details for the file llm_inference-0.0.6-py3-none-any.whl.

File metadata

- Download URL: llm_inference-0.0.6-py3-none-any.whl

- Upload date:

- Size: 12.0 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.9.17

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 08fe641c4511b1ad465a503c02b58752cf785ee00f4516a3b63e99103d1ea6ac |
| MD5 | 5a50437efe21dab18314b9a3f2441bea |
| BLAKE2b-256 | c6cd4bfeab074dd703d4977641711c16e5f4641d550c55d2890b797c71f90af7 |