NanoPlaceR - An open-source framework for placement and routing of Field-coupled Nanotechnologies based on reinforcement learning.

NanoPlaceR: Placement and Routing for Field-coupled Nanocomputing (FCN) based on Reinforcement Learning

NanoPlaceR is a tool for the physical design of FCN circuitry based on Reinforcement Learning. It can generate layouts for logic networks with up to ~200 gates, while requiring ~50% less area than the state-of-the-art heuristic approach.

Related publication presented at DAC: paper

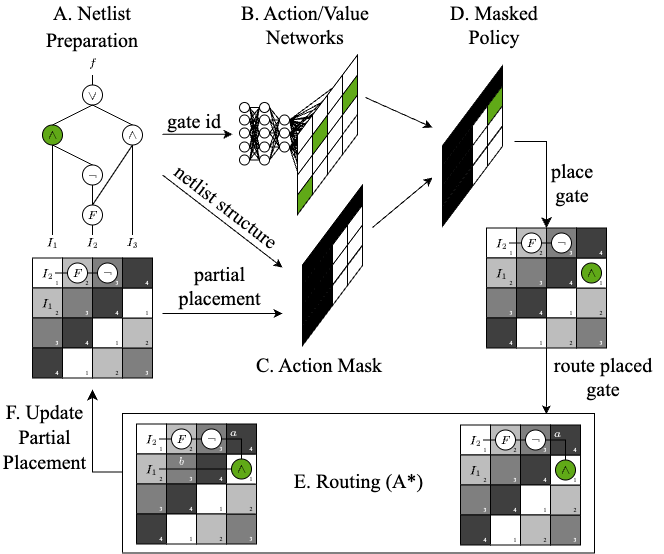

Inspired by recent developments in machine learning-aided design automation, this tool combines reinforcement learning with efficient path routing based on established algorithms such as A* search. Masked Proximal Policy Optimization (PPO) is used to learn the placement of logic elements. Learning is further accelerated by an action mask computed from the netlist structure and the last partial placement, which ensures valid and compact solutions. To limit the time spent on unpromising partial placements, several checks terminate sub-par solutions early. Furthermore, the routing of placed gates is incorporated directly into the placement step using established routing strategies. The accompanying figure outlines the methodology.
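The action-masking idea can be illustrated with a small, self-contained sketch. It is not NanoPlaceR's implementation: the function names `action_mask` and `masked_softmax` are hypothetical, and the rule that a gate may only be placed east or south of its already placed predecessors is a simplifying assumption modelled on the 2DDWave clocking scheme.

```python
import math


def action_mask(width, height, occupied, predecessors):
    """Return a flat 0/1 mask over all tiles (row-major); 1 means placement is allowed."""
    mask = []
    for y in range(height):
        for x in range(width):
            free = (x, y) not in occupied
            # Assumption: signals only travel east/south, so a gate must not
            # lie north or west of any predecessor that is already placed.
            downstream = all(x >= px and y >= py for px, py in predecessors)
            mask.append(1 if free and downstream else 0)
    return mask


def masked_softmax(logits, mask):
    """Turn policy logits into probabilities, giving masked actions probability 0."""
    valid = [l for l, m in zip(logits, mask) if m]
    if not valid:
        # No legal tile left: in an RL setting the episode would be terminated early.
        raise ValueError("no valid action left")
    top = max(valid)  # subtract the maximum for numerical stability
    exps = [math.exp(l - top) if m else 0.0 for l, m in zip(logits, mask)]
    total = sum(exps)
    return [e / total for e in exps]


# 3x2 grid with one gate already placed at (1, 0); the next gate depends on it.
mask = action_mask(3, 2, occupied={(1, 0)}, predecessors=[(1, 0)])
probs = masked_softmax([0.0] * 6, mask)
print(mask)
print(probs)
```

Masking the logits before sampling means the agent never spends training steps on placements that cannot lead to a valid layout, which is the acceleration effect the paragraph above describes.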

Usage of NanoPlaceR

Currently, due to the OpenAI Gym dependency, only Python versions up to 3.10 are supported.
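A script can check the interpreter version before importing the package. The following is a minimal sketch: the helper `is_supported_python` is hypothetical and not part of NanoPlaceR, and only the upper bound of 3.10 is taken from the note above.

```python
import sys

# Upper bound taken from the note above; no lower bound is stated, so none is checked.
MAX_SUPPORTED = (3, 10)


def is_supported_python(version=None):
    """Return True if the (major, minor) version does not exceed MAX_SUPPORTED."""
    major, minor = (version or sys.version_info)[:2]
    return (major, minor) <= MAX_SUPPORTED


if not is_supported_python():
    print(
        "Warning: NanoPlaceR currently supports Python versions up to 3.10.",
        file=sys.stderr,
    )
```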

If you do not have a virtual environment set up, the following steps outline one possible way to do so. First, install virtualenv:

$ pip install virtualenv

Then create a new virtual environment in your project folder and activate it:

$ mkdir nano_placement

$ cd nano_placement

$ python -m venv venv

$ source venv/bin/activate

NanoPlaceR can be installed via pip:

(venv) $ pip install mnt.nanoplacer

You can then create the desired layout based on specified parameters (e.g., logic function, clocking scheme, layout width) directly in your Python project:

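from mnt import nanoplacer

if __name__ == "__main__":
    nanoplacer.create_layout(
        benchmark="trindade16",          # benchmark set
        function="mux21",                # logic function to generate a layout for
        clocking_scheme="2DDWave",       # underlying clocking scheme
        technology="QCA",                # QCA, SiDB, or Gate-level
        minimal_layout_dimension=False,  # use the user-defined width and height below
        layout_width=3,
        layout_height=4,
        time_steps=10000,                # number of time steps to train the RL agent
        reset_model=True,                # reset the saved model and train from scratch
        verbose=1,                       # print layout after every new best placement
        optimize=True,
    )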

or via the command:

(venv) $ mnt.nanoplacer

usage: mnt.nanoplacer [-h] [-b {fontes18,trindade16,EPFL,TOY,ISCAS85}] [-f FUNCTION] [-c {2DDWave,USE,RES,ESR}] [-t {QCA,SiDB,Gate-level}] [-l] [-lw LAYOUT_WIDTH] [-lh LAYOUT_HEIGHT] [-ts TIME_STEPS] [-r] [-v {0,1,2,3}]

Optional arguments:

-h, --help Show this help message and exit.

-b, --benchmark Benchmark set.

-f, --function Logic function to generate layout for.

-c, --clocking_scheme Underlying clocking scheme.

-t, --technology Underlying technology (QCA, SiDB or technology-independent Gate-level layout).

-l, --minimal_layout_dimension If set, experimentally found minimal layout dimensions are used (defaults to False).

-lw, --layout_width User defined layout width.

-lh, --layout_height User defined layout height.

-ts, --time_steps Number of time steps to train the RL agent.

-r, --reset_model If set, reset the saved model and train from scratch (defaults to False).

-v, --verbosity 0: No information. 1: Print layout after every new best placement. 2: Print training metrics. 3: 1 and 2 combined.

For example, to create the gate-level layout for the mux21 function from trindade16 on the 2DDWave clocking scheme using the best found layout dimensions (training for a maximum of 10000 time steps), run:

mnt.nanoplacer -b "trindade16" -f "mux21" -c "2DDWave" -t "Gate-level" -l -ts 10000 -v 1
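To make the option semantics concrete, the following sketch rebuilds the documented interface with Python's `argparse` and parses the example command above. It is an illustration only, not NanoPlaceR's actual source: the function name `build_parser` is hypothetical, the integer types are assumptions, and defaults that the option list does not state are left unset.

```python
import argparse


def build_parser():
    """Rebuild the documented command-line options (illustrative, not the real source)."""
    p = argparse.ArgumentParser(prog="mnt.nanoplacer")
    p.add_argument("-b", "--benchmark",
                   choices=["fontes18", "trindade16", "EPFL", "TOY", "ISCAS85"])
    p.add_argument("-f", "--function")
    p.add_argument("-c", "--clocking_scheme", choices=["2DDWave", "USE", "RES", "ESR"])
    p.add_argument("-t", "--technology", choices=["QCA", "SiDB", "Gate-level"])
    # -l and -r take no value: they are switches that default to False.
    p.add_argument("-l", "--minimal_layout_dimension", action="store_true")
    p.add_argument("-lw", "--layout_width", type=int)
    p.add_argument("-lh", "--layout_height", type=int)
    p.add_argument("-ts", "--time_steps", type=int)
    p.add_argument("-r", "--reset_model", action="store_true")
    p.add_argument("-v", "--verbosity", type=int, choices=[0, 1, 2, 3])
    return p


# Parse the example invocation shown above.
args = build_parser().parse_args(
    ["-b", "trindade16", "-f", "mux21", "-c", "2DDWave",
     "-t", "Gate-level", "-l", "-ts", "10000", "-v", "1"]
)
print(args)
```

Because `-l` is a switch, passing it makes the tool use the predefined minimal dimensions, so `-lw` and `-lh` are omitted in the example.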

Repository Structure

.
├── docs/
└── src/
    └── mnt/
        └── nanoplacer/
            ├── main.py                     # entry point for mnt.nanoplacer script
            ├── benchmarks/                 # common benchmark sets
            └── placement_envs/
                ├── utils/
                │   ├── placement_utils/    # placement util functions
                │   └── layout_dimenions/   # predefined layout dimensions for certain functions
                └── nano_placement_env.py   # placement environment

Monitoring Training

Training can be monitored using TensorBoard.

Install it via

(venv) $ pip install tensorboard

and run the following command from within the NanoPlaceR directory:

(venv) $ tensorboard --logdir="tensorboard/{Insert function name here}"

References

In case you are using NanoPlaceR in your work, we would be thankful if you referred to it by citing the following publication:

@INPROCEEDINGS{hofmann2024nanoplacer,
  author    = {S. Hofmann and M. Walter and L. Servadei and R. Wille},
  title     = {{Thinking Outside the Clock: Physical Design for Field-coupled Nanocomputing with Deep Reinforcement Learning}},
  booktitle = {{2024 25th International Symposium on Quality Electronic Design (ISQED)}},
  year      = {2024},
}
