Onnx Text Recognition (OnnxTR): docTR Onnx-Wrapper for high-performance OCR on documents.


:warning: Please note that this is a wrapper around the docTR library that provides an Onnx pipeline for docTR. For feature requests that are not directly related to the Onnx pipeline, please refer to the base project.

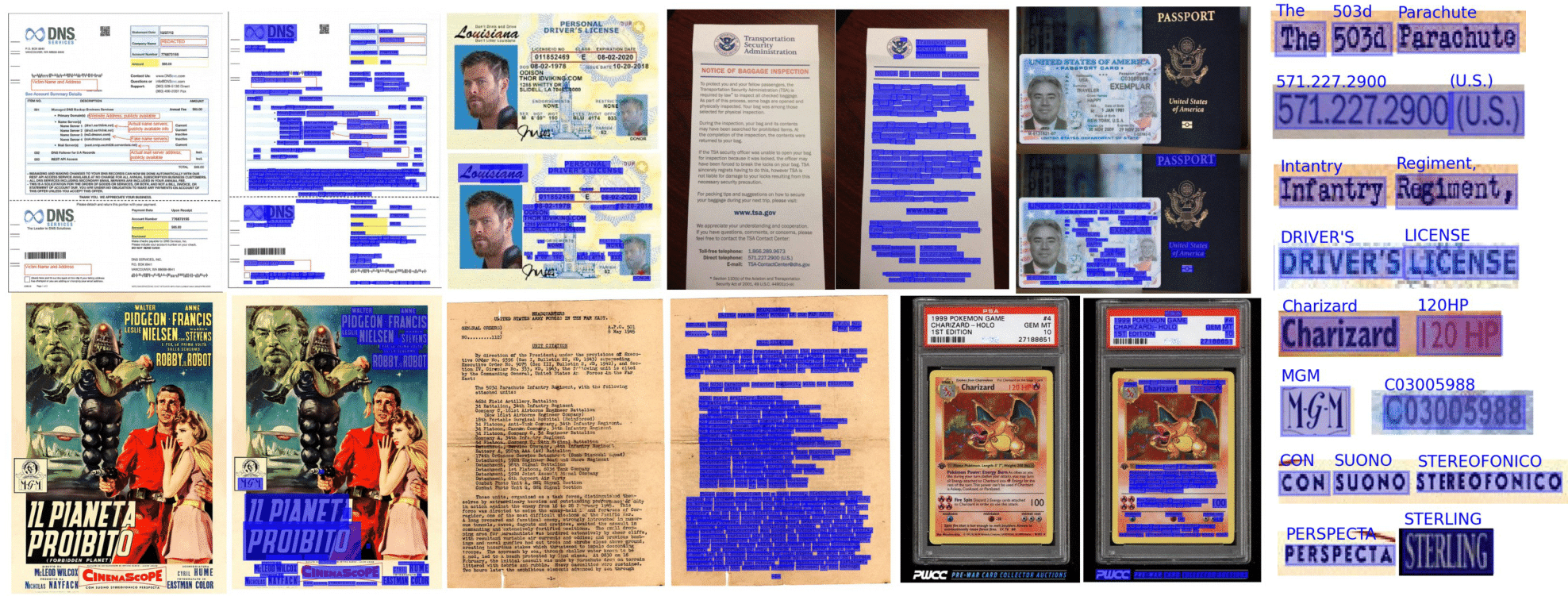

Optical Character Recognition made seamless & accessible to anyone, powered by Onnx

What you can expect from this repository:

- efficient ways to parse textual information (localize and identify each word) from your documents

- an Onnx pipeline for docTR, a wrapper around the docTR library

- a more lightweight package with lower inference latency and fewer required resources

Installation

Prerequisites

Python 3.9 (or higher) and pip are required to install OnnxTR.

Latest release

You can then install the latest release of the package using pypi as follows:

NOTE: For GPU support, please take a look at ONNX Runtime. The execution providers currently supported by default are CPU and CUDA.

pip install "onnxtr[cpu]"

# with gpu support
pip install "onnxtr[gpu]"

# with HTML support
pip install "onnxtr[html]"

# with support for visualization
pip install "onnxtr[viz]"

# with support for all dependencies
pip install "onnxtr[html,gpu,viz]"
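The note above mentions ONNX Runtime execution providers. As a minimal sketch (not part of OnnxTR's API), the helper below checks which of the preferred providers your ONNX Runtime install actually reports. `onnxruntime.get_available_providers()` is a real ONNX Runtime function; `pick_providers` and its preference order are hypothetical and only for illustration.

```python
def pick_providers(available, preferred=("CUDAExecutionProvider", "CPUExecutionProvider")):
    """Hypothetical helper: keep the preferred providers that are available, in preference order."""
    return [p for p in preferred if p in available]


if __name__ == "__main__":
    try:
        import onnxruntime as ort  # the ONNX Runtime package OnnxTR runs on
    except ImportError:
        print("onnxruntime is not installed")
    else:
        # Shows whether CUDA is usable or only the CPU provider is present
        print(pick_providers(ort.get_available_providers()))
```

This only inspects the runtime; how OnnxTR itself selects a provider is covered by the ONNX Runtime and OnnxTR documentation.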

Reading files

Documents can be interpreted from PDF / Images / Webpages / Multiple page images using the following code snippet:

from onnxtr.io import DocumentFile

# PDF
pdf_doc = DocumentFile.from_pdf("path/to/your/doc.pdf")
# Image
single_img_doc = DocumentFile.from_images("path/to/your/img.jpg")
# Webpage (requires `weasyprint` to be installed)
webpage_doc = DocumentFile.from_url("https://www.yoursite.com")
# Multiple page images
multi_img_doc = DocumentFile.from_images(["path/to/page1.jpg", "path/to/page2.jpg"])
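If inputs arrive as mixed paths and URLs, the choice between the `DocumentFile` constructors above can be made automatically. A minimal sketch, assuming routing by URL scheme and file extension is good enough; `choose_loader` and the list of image suffixes are hypothetical, and only `from_pdf`, `from_images` and `from_url` come from OnnxTR.

```python
from pathlib import Path

# Assumption: a set of common image formats, not a list taken from OnnxTR
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp", ".tif", ".tiff"}


def choose_loader(source):
    """Hypothetical helper: return the name of the DocumentFile constructor that fits `source`."""
    if isinstance(source, (list, tuple)):
        return "from_images"  # several page images
    if source.startswith(("http://", "https://")):
        return "from_url"  # webpage (requires weasyprint)
    suffix = Path(source).suffix.lower()
    if suffix == ".pdf":
        return "from_pdf"
    if suffix in IMAGE_SUFFIXES:
        return "from_images"
    raise ValueError(f"Unsupported source: {source!r}")


# Usage with OnnxTR installed:
# from onnxtr.io import DocumentFile
# src = "path/to/your/doc.pdf"
# doc = getattr(DocumentFile, choose_loader(src))(src)
```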

Putting it together

Let's use pretrained models for an example:

from onnxtr.io import DocumentFile
from onnxtr.models import ocr_predictor

model = ocr_predictor(
    det_arch='fast_base',  # detection architecture
    reco_arch='vitstr_base',  # recognition architecture
    det_bs=4,  # detection batch size
    reco_bs=1024,  # recognition batch size
    assume_straight_pages=True,  # set to `False` if the pages are not straight (rotation, perspective, etc.) (default: True)
    straighten_pages=False,  # set to `True` if the pages should be straightened before final processing (default: False)
    preserve_aspect_ratio=True,  # set to `False` if the aspect ratio should not be preserved (default: True)
    symmetric_pad=True,  # set to `False` to disable symmetric padding (default: True)
    # DocumentBuilder specific parameters
    resolve_lines=True,  # whether words should be automatically grouped into lines (default: True)
    resolve_blocks=True,  # whether lines should be automatically grouped into blocks (default: True)
    paragraph_break=0.035,  # relative length of the minimum space separating paragraphs (default: 0.035)
)

# PDF
doc = DocumentFile.from_pdf("path/to/your/doc.pdf")
# Analyze
result = model(doc)
# Display the result (requires matplotlib & mplcursors to be installed)
result.show()
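`det_bs` and `reco_bs` set how many inputs go through the detection and recognition models per call. As a rough sketch of the arithmetic, assuming (as in docTR) that detection batches pages and recognition batches word crops, the number of calls is the ceiling of inputs divided by batch size. `num_batches` and `split_batches` are hypothetical illustrations, not OnnxTR code, and the page and crop counts are made up.

```python
import math


def num_batches(n_items, batch_size):
    """Number of model calls needed for n_items at the given batch size."""
    return math.ceil(n_items / batch_size)


def split_batches(items, batch_size):
    """Hypothetical helper: chunk items into consecutive batches of at most batch_size."""
    return [items[i:i + batch_size] for i in range(0, len(items), batch_size)]


# A 10-page PDF with det_bs=4 needs 3 detection calls (4 + 4 + 2 pages)
pages = list(range(10))
print(num_batches(len(pages), 4), [len(b) for b in split_batches(pages, 4)])  # 3 [4, 4, 2]
# 2500 word crops with reco_bs=1024 need 3 recognition calls
print(num_batches(2500, 1024))  # 3
```

Larger batch sizes mean fewer calls but more memory per call, so the values above are a trade-off to tune for your hardware.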

Or even rebuild the original document from its predictions:

import matplotlib.pyplot as plt

synthetic_pages = result.synthesize()
plt.imshow(synthetic_pages[0])
plt.axis('off')
plt.show()

The ocr_predictor returns a Document object with a nested structure (with Page, Block, Line, Word, Artefact).

To get a better understanding of the document model, check out the documentation.

You can also export the result as a nested dict (suitable for JSON serialization), render it as human-readable text, or export it as XML (hOCR format):

json_output = result.export()  # nested dict
text_output = result.render()  # human-readable text
xml_output = result.export_as_xml()  # hocr format
for output in xml_output:
    xml_bytes_string = output[0]
    xml_element = output[1]
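The nested dict returned by `export()` can be walked with plain Python. The sketch below assumes the docTR export layout (pages, then blocks, then lines, then words, each word carrying `value` and `confidence`); `iter_words`, `low_confidence_words` and `sample` are hypothetical, and the sample is hand-made data with keys such as geometry left out for brevity.

```python
def iter_words(export_dict):
    """Yield (page_idx, value, confidence) for every word in an export()-style dict."""
    for page_idx, page in enumerate(export_dict["pages"]):
        for block in page["blocks"]:
            for line in block["lines"]:
                for word in line["words"]:
                    yield page_idx, word["value"], word["confidence"]


def low_confidence_words(export_dict, threshold=0.5):
    """Return the values of words whose confidence is below threshold."""
    return [value for _, value, conf in iter_words(export_dict) if conf < threshold]


# Hypothetical, trimmed-down sample shaped like result.export()
sample = {
    "pages": [
        {"blocks": [{"lines": [{"words": [
            {"value": "Hello", "confidence": 0.99},
            {"value": "w0rld", "confidence": 0.42},
        ]}]}]}
    ]
}

print(list(iter_words(sample)))  # [(0, 'Hello', 0.99), (0, 'w0rld', 0.42)]
print(low_confidence_words(sample))  # ['w0rld']
```

With a real prediction, pass `result.export()` instead of `sample`.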

Loading custom exported models

You can also load custom models exported from docTR. For details on exporting, please take a look at the docTR documentation.

from onnxtr.models import ocr_predictor, linknet_resnet18, parseq

reco_model = parseq("path_to_custom_reco_model.onnx", vocab="ABC")
det_model = linknet_resnet18("path_to_custom_det_model.onnx")
model = ocr_predictor(det_model=det_model, reco_model=reco_model)
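The `vocab` argument matters because a recognition model predicts indices into its character set, so it has to match the vocabulary the model was trained and exported with. The sketch below shows greedy index-to-text decoding under a stated assumption: special tokens are appended after the vocabulary with end-of-sequence at index `len(vocab)`, similar to how docTR handles PARSeq (check this against your own model). `decode_indices` is hypothetical and not part of OnnxTR.

```python
def decode_indices(indices, vocab):
    """Hypothetical greedy decoder: map predicted class indices to characters.

    Assumes the special tokens follow the vocabulary, with "<eos>" at index len(vocab).
    """
    embedding = list(vocab) + ["<eos>", "<sos>", "<pad>"]
    chars = []
    for idx in indices:
        token = embedding[idx]
        if token == "<eos>":
            break  # everything after end-of-sequence is ignored
        if token in ("<sos>", "<pad>"):
            continue  # skip the other special tokens
        chars.append(token)
    return "".join(chars)


print(decode_indices([2, 0, 1, 3, 0], vocab="ABC"))  # CAB
print(decode_indices([2, 0, 1, 3, 0], vocab="XYZ"))  # ZXY
```

The second call shows why a mismatched `vocab` is dangerous: the same predicted indices silently decode to different text.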

Model architectures

Credit where it's due: this repository implements, among others, architectures from published research papers.

Text Detection

- DBNet: Real-time Scene Text Detection with Differentiable Binarization.

- LinkNet: LinkNet: Exploiting Encoder Representations for Efficient Semantic Segmentation

- FAST: FAST: Faster Arbitrarily-Shaped Text Detector with Minimalist Kernel Representation

Text Recognition

- CRNN: An End-to-End Trainable Neural Network for Image-based Sequence Recognition and Its Application to Scene Text Recognition.

- SAR: Show, Attend and Read: A Simple and Strong Baseline for Irregular Text Recognition.

- MASTER: MASTER: Multi-Aspect Non-local Network for Scene Text Recognition.

- ViTSTR: Vision Transformer for Fast and Efficient Scene Text Recognition.

- PARSeq: Scene Text Recognition with Permuted Autoregressive Sequence Models.

To see which architectures are available:

from onnxtr.models import ocr_predictor

predictor = ocr_predictor()
predictor.list_archs()

{
    'detection archs':
        [
            'db_resnet34',
            'db_resnet50',
            'db_mobilenet_v3_large',
            'linknet_resnet18',
            'linknet_resnet34',
            'linknet_resnet50',
            'fast_tiny',
            'fast_small',
            'fast_base'
        ],
    'recognition archs':
        [
            'crnn_vgg16_bn',
            'crnn_mobilenet_v3_small',
            'crnn_mobilenet_v3_large',
            'sar_resnet31',
            'master',
            'vitstr_small',
            'vitstr_base',
            'parseq'
        ]
}
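Since `list_archs()` returns plain lists of names, a typo in `det_arch` or `reco_arch` can be caught before building a predictor. `check_archs` is a hypothetical helper; the `archs` dict below is copied from the output above, and with OnnxTR installed you could use `ocr_predictor().list_archs()` instead.

```python
def check_archs(det_arch, reco_arch, archs):
    """Hypothetical helper: raise ValueError if an architecture name is not listed."""
    if det_arch not in archs["detection archs"]:
        raise ValueError(f"Unknown detection arch: {det_arch!r}")
    if reco_arch not in archs["recognition archs"]:
        raise ValueError(f"Unknown recognition arch: {reco_arch!r}")


# Copied from the list_archs() output shown above
archs = {
    "detection archs": [
        "db_resnet34", "db_resnet50", "db_mobilenet_v3_large",
        "linknet_resnet18", "linknet_resnet34", "linknet_resnet50",
        "fast_tiny", "fast_small", "fast_base",
    ],
    "recognition archs": [
        "crnn_vgg16_bn", "crnn_mobilenet_v3_small", "crnn_mobilenet_v3_large",
        "sar_resnet31", "master", "vitstr_small", "vitstr_base", "parseq",
    ],
}

check_archs("fast_base", "vitstr_base", archs)  # passes silently
```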

Documentation

This repository is in sync with the docTR library, which provides a high-level API to perform OCR on documents, and stays up-to-date with the latest features and improvements from the base project. You can therefore refer to the docTR documentation for more detailed information.

NOTE:

- `pretrained` is the default in OnnxTR and is not available as a parameter.

- docTR-specific environment variables need their `DOCTR_` prefix replaced with `ONNXTR_` (e.g. `DOCTR_CACHE_DIR` -> `ONNXTR_CACHE_DIR`).
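The renaming rule in the note above is mechanical, so it can be scripted when migrating from docTR. A minimal sketch: the hypothetical `translate_doctr_env` copies every `DOCTR_`-prefixed variable to its `ONNXTR_` counterpart without overwriting values that are already set. Applying it before importing onnxtr is the cautious order, on the assumption that settings such as the cache directory may be read at import time.

```python
import os


def translate_doctr_env(env):
    """Hypothetical helper: map DOCTR_* variables to ONNXTR_* names, keeping existing ONNXTR_* values."""
    translated = {}
    for key, value in env.items():
        if key.startswith("DOCTR_"):
            new_key = "ONNXTR_" + key[len("DOCTR_"):]
            if new_key not in env:
                translated[new_key] = value
    return translated


# Apply to the current process before importing onnxtr
os.environ.update(translate_doctr_env(dict(os.environ)))
```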

Benchmarks

COMING SOON

Citation

If you wish to cite this work, please refer to the base project's citation; feel free to use this BibTeX reference:

@misc{doctr2021,
    title={docTR: Document Text Recognition},
    author={Mindee},
    year={2021},
    publisher = {GitHub},
    howpublished = {\url{https://github.com/mindee/doctr}}
}

License

Distributed under the Apache 2.0 License. See LICENSE for more information.


Download files


Source Distribution

Built Distribution

File details

Details for the file onnxtr-0.1.1.tar.gz.

File metadata

- Download URL: onnxtr-0.1.1.tar.gz

- Upload date:

- Size: 66.8 kB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/5.0.0 CPython/3.9.19

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 8fd83a89cbb2e89e30b53f4d4ed16cd47f53bf0cdef94c2a5c6fbd538f57a09c |
| MD5 | 574a6f0cb6d5e54e58584ff40b5708f1 |
| BLAKE2b-256 | e6b757f209964dd82044ee4f2304b6cb9361cf2c361f087d8a0b5d8e1fa514f6 |

File details

Details for the file onnxtr-0.1.1-py3-none-any.whl.

File metadata

- Download URL: onnxtr-0.1.1-py3-none-any.whl

- Upload date:

- Size: 90.5 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/5.0.0 CPython/3.9.19

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 24569a618303119ee4a343131578db6041132a89cf12cdaeda844a6c7599474a |
| MD5 | 6dc77d1ea2f30fb56b2ee95821275b20 |
| BLAKE2b-256 | 8ece46dc7693ed89502641c6693eaa0d1430a58cf72174e9d9dbbfcb8af78b96 |