SDK for Agenta, an open-source LLMOps platform.


The open-source LLMOps platform for prompt-engineering, evaluation, and deployment of complex LLM apps.


ℹ️ About

Building production-ready LLM-powered applications is currently very difficult. It involves countless iterations of prompt engineering, parameter tuning, and architecture changes.

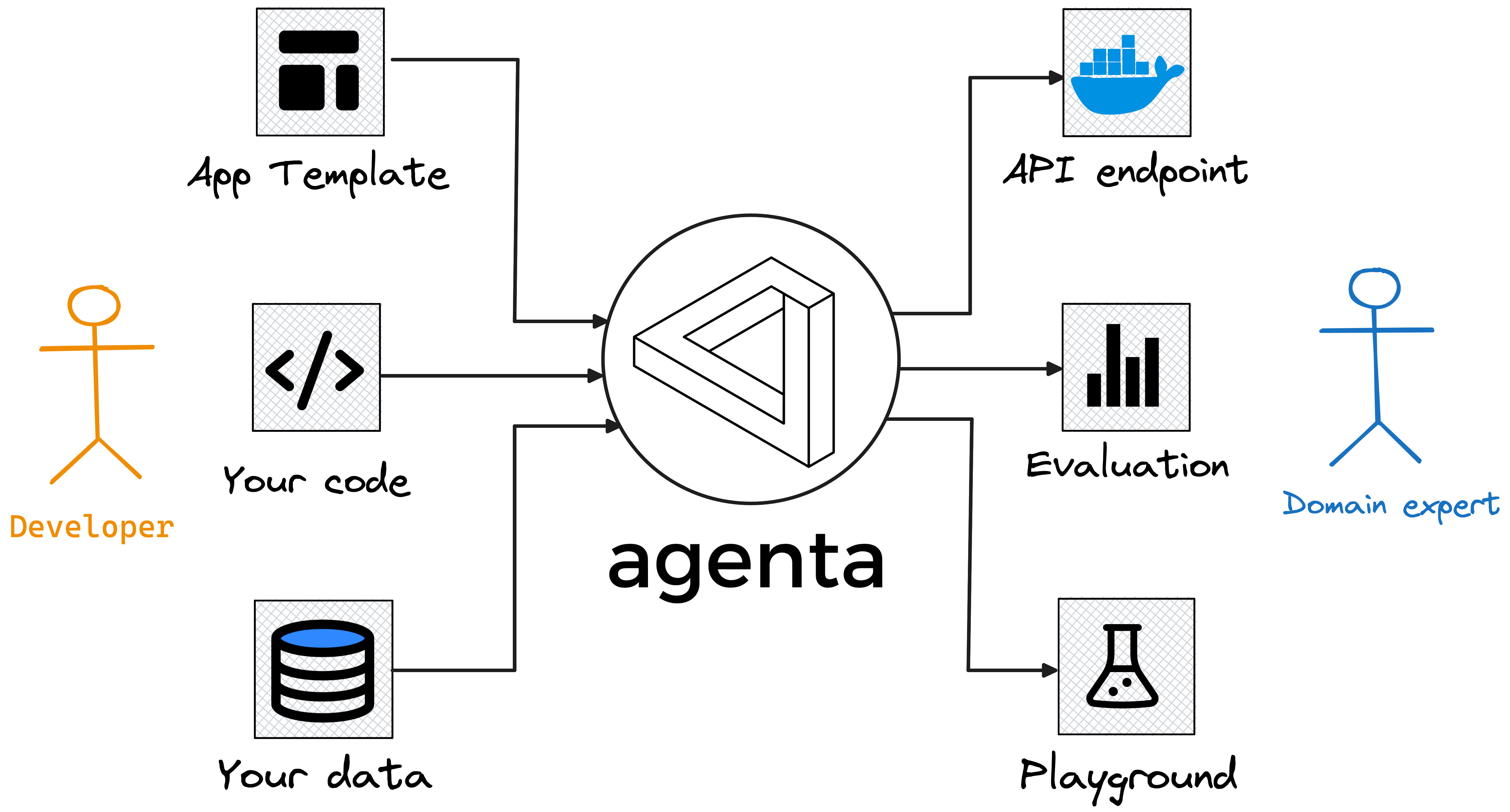

Agenta provides you with the tools to quickly do prompt engineering and 🧪 experiment, ⚖️ evaluate, and 🚀 deploy your LLM apps. All without imposing any restrictions on your choice of framework, library, or model.

Demo

https://github.com/Agenta-AI/agenta/assets/57623556/99733147-2b78-4b95-852f-67475e4ce9ed

Quick Start

Features

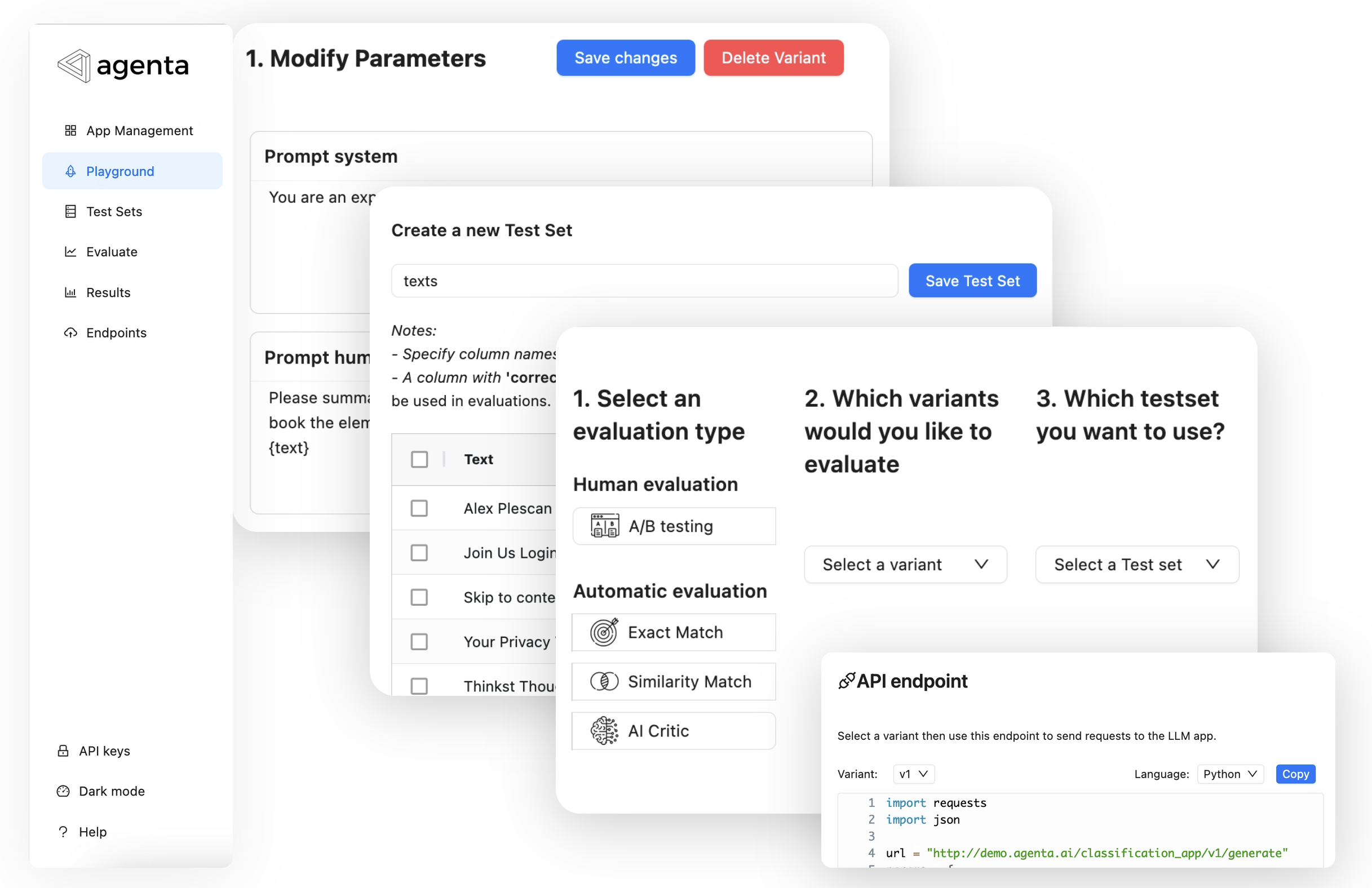

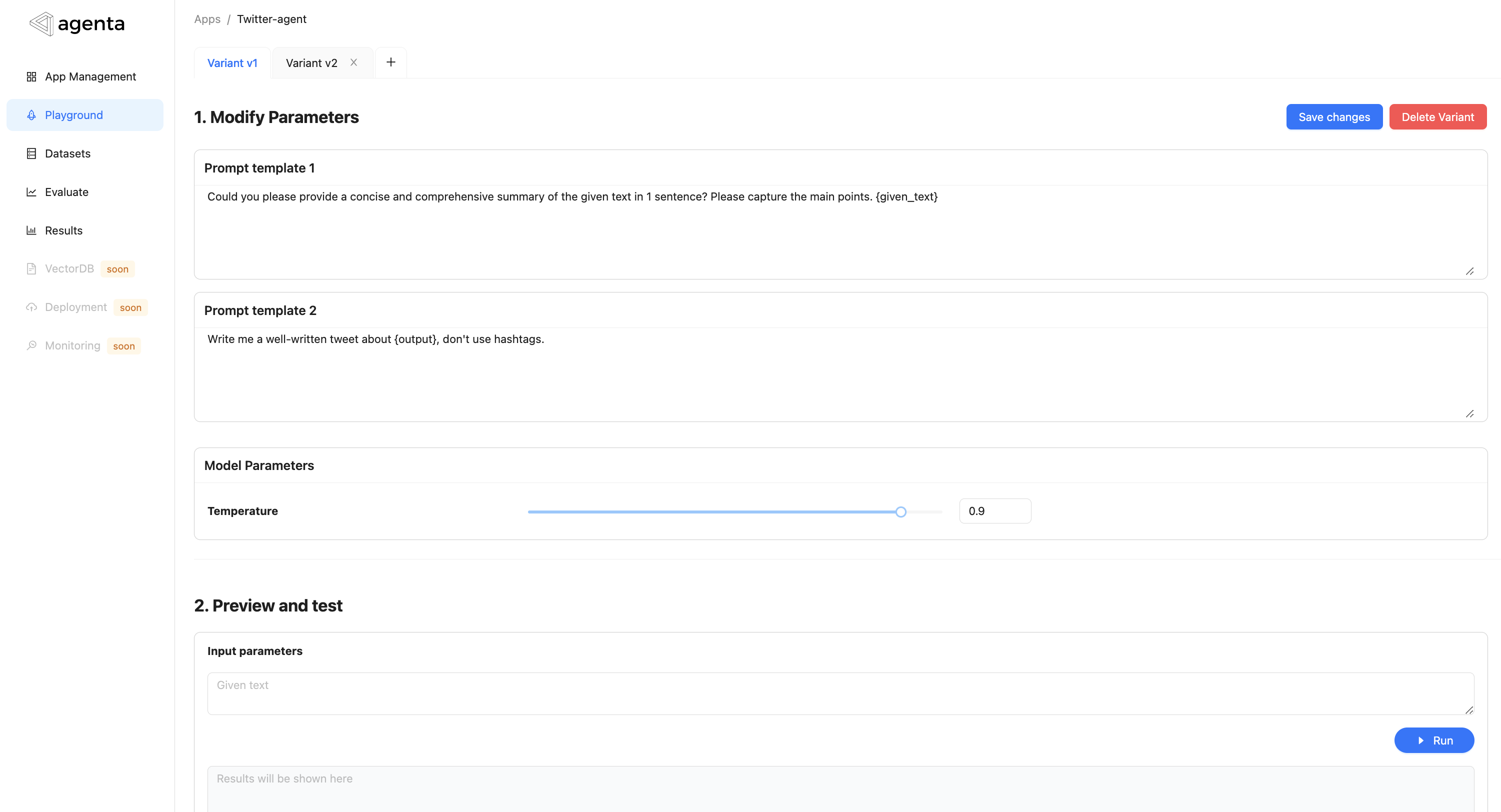

Playground 🪄

With just a few lines of code, define the parameters and prompts you wish to experiment with. You and your team can quickly experiment and test new variants on the web UI.

https://github.com/Agenta-AI/agenta/assets/4510758/8b736d2b-7c61-414c-b534-d95efc69134c

Version Evaluation 📊

Define test sets, then evaluate your different variants manually or programmatically.

API Deployment 🚀

When you are ready, deploy your LLM applications as APIs in one click.

Why choose Agenta for building LLM-apps?

- 🔨 Build quickly: You need to iterate many times on different architectures and prompts to bring apps to production. We streamline this process and allow you to do this in days instead of weeks.

- 🏗️ Build robust apps and reduce hallucination: We provide you with the tools to systematically and easily evaluate your application, so that you only serve robust apps to production.

- 👨‍💻 Developer-centric: We cater to complex LLM-apps and pipelines that require more than one simple prompt. We allow you to experiment and iterate on apps that have complex integration, business logic, and many prompts.

- 🌐 Solution-Agnostic: You have the freedom to use any library and models, be it LangChain, llama_index, or a custom-written alternative.

- 🔒 Privacy-First: We respect your privacy and do not proxy your data through third-party services. The platform and the data are hosted on your infrastructure.

How Agenta works:

1. Write your LLM-app code

Write the code using any framework, library, or model you want. Add the agenta.post decorator and put the inputs and parameters in the function call just like in this example:

Example simple application that generates baby names

    import agenta as ag
    from langchain.chains import LLMChain
    from langchain.llms import OpenAI
    from langchain.prompts import PromptTemplate

    default_prompt = "Give me five cool names for a baby from {country} with this gender {gender}!!!!"


    @ag.post
    def generate(
        country: str,
        gender: str,
        temperature: ag.FloatParam = 0.9,
        prompt_template: ag.TextParam = default_prompt,
    ) -> str:
        llm = OpenAI(temperature=temperature)
        prompt = PromptTemplate(
            input_variables=["country", "gender"],
            template=prompt_template,
        )
        chain = LLMChain(llm=llm, prompt=prompt)
        output = chain.run(country=country, gender=gender)
        return output

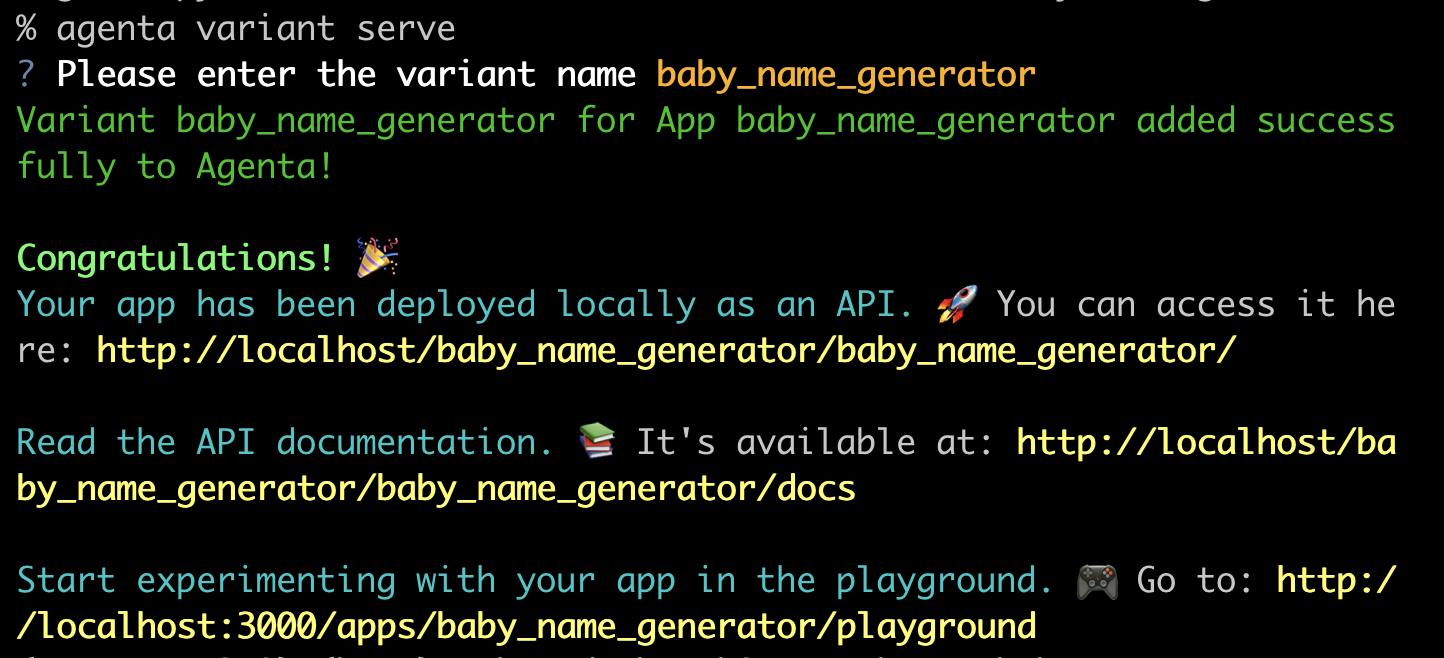
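To make the split between inputs and parameters in the example above more concrete, here is a minimal sketch of how a decorator could tell the two apart from type annotations. It is an illustration only, not Agenta's implementation: `FloatParam` and `TextParam` are hypothetical stand-ins for `ag.FloatParam` and `ag.TextParam`, `split_signature` is an invented helper, and the function body is a placeholder that does not call an LLM.

```python
import inspect


# Hypothetical stand-ins for ag.FloatParam and ag.TextParam (assumption:
# the real types in the agenta package may behave differently).
class FloatParam(float):
    pass


class TextParam(str):
    pass


def split_signature(func):
    """Separate a function's arguments into inputs and tunable parameters."""
    inputs, params = [], {}
    for name, arg in inspect.signature(func).parameters.items():
        if arg.annotation in (FloatParam, TextParam):
            # Annotated with a parameter type: tunable, with a default value.
            params[name] = arg.default
        else:
            # Anything else is treated as a runtime input.
            inputs.append(name)
    return inputs, params


def generate(
    country: str,
    gender: str,
    temperature: FloatParam = 0.9,
    prompt_template: TextParam = "Give me names from {country} for {gender}",
) -> str:
    # Placeholder body: formats the prompt instead of calling a model.
    return prompt_template.format(country=country, gender=gender)


inputs, params = split_signature(generate)
print(inputs)
print(params)
```

Under this sketch, `country` and `gender` end up as inputs, while `temperature` and `prompt_template` end up as parameters with their defaults, which mirrors how the example above separates what a user supplies per call from what the team tunes.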

2. Deploy your app using the Agenta CLI.

3. Go to Agenta at localhost:3000

Now your team can 🔄 iterate, 🧪 experiment, and ⚖️ evaluate different versions of your app (with your code!) in the web platform.
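As a rough idea of what evaluating different versions programmatically can look like, the sketch below scores two variants against a small test set using exact match. Everything in it is an assumption made for illustration: the test set, the two variant functions (which return canned answers instead of calling an LLM), and the `exact_match_score` helper are hypothetical and are not part of the Agenta SDK.

```python
# Hypothetical test set: each row holds one input and the expected answer.
test_set = [
    {"country": "France", "expected": "Paris"},
    {"country": "Japan", "expected": "Tokyo"},
]


# Hypothetical variants; in a real app each would call an LLM with a
# different prompt or parameter configuration.
def variant_a(country: str) -> str:
    return {"France": "Paris", "Japan": "Tokyo"}.get(country, "")


def variant_b(country: str) -> str:
    return {"France": "Paris", "Japan": "Kyoto"}.get(country, "")


def exact_match_score(variant, rows) -> float:
    """Return the fraction of rows where the output equals the expected answer."""
    hits = sum(variant(row["country"]).strip() == row["expected"] for row in rows)
    return hits / len(rows)


scores = {
    "variant_a": exact_match_score(variant_a, test_set),
    "variant_b": exact_match_score(variant_b, test_set),
}
print(scores)
```

Exact match is the simplest possible metric; open-ended outputs such as generated names usually need human review or a similarity-based score instead.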


File details

Details for the file agenta-0.2.9.tar.gz.

File metadata

- Download URL: agenta-0.2.9.tar.gz

- Upload date:

- Size: 21.9 kB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: poetry/1.6.1 CPython/3.9.18 Linux/6.2.0-1011-azure

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 7900122c74e8ad56c135071f36553981e5d660868f8e2be362b38e3fd5caa829 |
| MD5 | dd673fc00a31b0fb459902a7e70a6c5c |
| BLAKE2b-256 | e91740cb7ecb50896e081696c029eb4fa72177296393b1b688e80e9353d7e753 |

File details

Details for the file agenta-0.2.9-py3-none-any.whl.

File metadata

- Download URL: agenta-0.2.9-py3-none-any.whl

- Upload date:

- Size: 28.1 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: poetry/1.6.1 CPython/3.9.18 Linux/6.2.0-1011-azure

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 4805b7158e6271b74da8e8fbe472dfadd2997149a2157ab83480af4f62ace516 |
| MD5 | 392a6506b1852d6731f6b01c640bc8c8 |
| BLAKE2b-256 | e8d8018f5959c9687d67ff36f4abac129a529c51ab4b2e991582d0a46935a934 |
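
The published digests can be used to check a downloaded file before installing it. The following is a minimal sketch using Python's standard `hashlib`; the helper names `sha256_of_file` and `matches_published_hash` are made up for this example, and the usage section assumes the source distribution was saved in the current directory under its published file name.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path, chunk_size=65536) -> str:
    """Compute the SHA256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_hash(path, expected_hex: str) -> bool:
    """Compare a file's SHA256 digest with a published hex digest."""
    return sha256_of_file(path) == expected_hex.strip().lower()


if __name__ == "__main__":
    # Assumption: the sdist was downloaded to the current directory.
    sdist = Path("agenta-0.2.9.tar.gz")
    published = "7900122c74e8ad56c135071f36553981e5d660868f8e2be362b38e3fd5caa829"
    if sdist.exists():
        print("hash matches:", matches_published_hash(sdist, published))
    else:
        print("file not found:", sdist)
```

`pip` can also enforce hashes at install time through its hash-checking mode when a requirements file pins them.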