ao3scraper

A Python web scraper that scrapes AO3 for fanfiction data, stores it in a database, and highlights entries that have been updated.

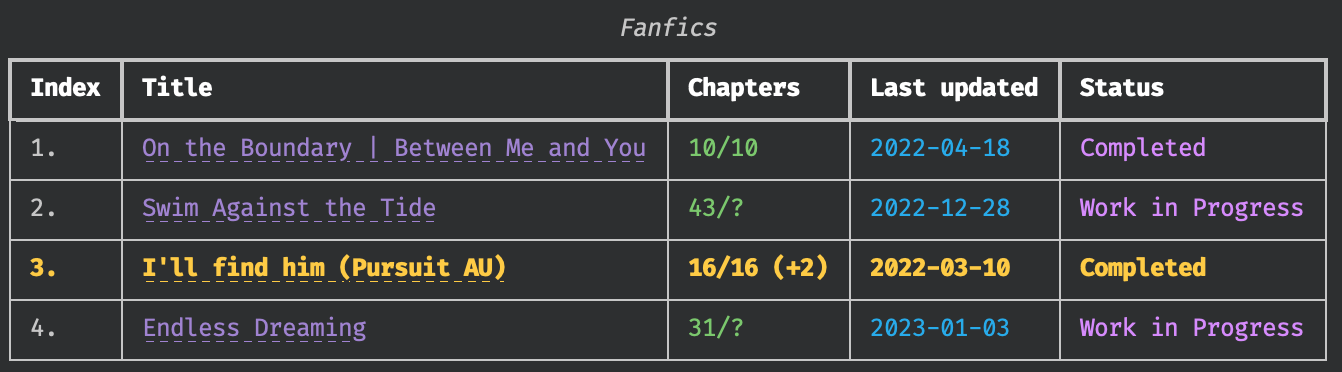

Table with an updated entry highlighted.

Installation

Install the latest version from PyPI with pip:

pip3 install ao3scraper

Development Installation

Create a Python virtual environment with `python3 -m venv dev_venv` and activate it.

Then, install required packages with:

poetry install

This will also install ao3scraper into the virtual environment.

Usage

    Usage: ao3scraper [OPTIONS]

    Options:
      -s, --scrape          Launches scraping mode.
      -c, --cache           Prints the last scraped table.
      -l, --list            Lists all entries in the database.
      -a, --add TEXT        Adds a single url to the database.
      --add-urls            Opens a text file to add multiple urls to the database.
      -d, --delete INTEGER  Deletes an entry from the database.
      -v, --version         Display version of ao3scraper and other info.
      --help                Show this message and exit.
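If you want to drive ao3scraper from another script (for example a scheduled job), the options above can be assembled into a command line. The following is a minimal sketch, not part of ao3scraper itself: `build_command` and `run` are hypothetical helper names, and the work URL is a placeholder.

```python
import shutil
import subprocess


def build_command(option, value=None):
    """Return the argv list for one ao3scraper invocation."""
    argv = ["ao3scraper", option]
    if value is not None:
        # --add takes TEXT and --delete takes INTEGER; both are passed as strings.
        argv.append(str(value))
    return argv


def run(option, value=None):
    """Run ao3scraper; return its exit code, or None if it is not on PATH."""
    if shutil.which("ao3scraper") is None:
        return None
    return subprocess.run(build_command(option, value), check=False).returncode


# Placeholder URL, used only to show the shape of the command.
print(build_command("--add", "https://archiveofourown.org/works/12345"))
print(build_command("--delete", 3))
print(build_command("--scrape"))
```

Calling `run("--scrape")` would then launch scraping mode exactly as `ao3scraper -s` does from a shell.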

Configuration

ao3scraper is highly customisable, and most aspects of the program can be changed in its configuration file.

To find the configuration file location, run `ao3scraper -v`.

ao3scraper uses Rich's styling. To disable a styling option, replace its value with 'none'.

Fics have many attributes that are not displayed by default. To add these columns, create a new option under table_template, like so:

    table_template:
      - column: characters  # The specified attribute
        name: Characters :) # This is what the column will be labelled as
        styles: none        # Rich styling
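To make the role of each key concrete, here is a rough sketch of how a `table_template` list could be turned into column headers and one table row. It is an illustration under assumptions, not ao3scraper's actual rendering code: the in-memory form of the template, the `title` and `words` attributes, and the `fic` record are all made up for the example, and styling is ignored.

```python
# Assumed shape of table_template once the configuration file has been loaded.
table_template = [
    {"column": "title", "name": "Title", "styles": "none"},
    {"column": "characters", "name": "Characters :)", "styles": "none"},
]

# Hypothetical fic record; the real attribute names are listed on the wiki.
fic = {"title": "Example Work", "characters": ["A", "B"], "words": 1200}


def headers(template):
    """Column labels come from each entry's 'name'."""
    return [entry["name"] for entry in template]


def row(template, record):
    """Cell values are looked up by each entry's 'column' attribute."""
    cells = []
    for entry in template:
        value = record.get(entry["column"], "")
        if isinstance(value, (list, tuple)):
            value = ", ".join(str(item) for item in value)
        cells.append(str(value))
    return cells


print(headers(table_template))
print(row(table_template, fic))
```

Attributes that have no entry in the template (here `words`) simply do not appear in the row, which matches the idea that extra columns only show up once they are added to `table_template`.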

A complete list of attributes can be found on the wiki.

Migrating the database

If you're updating from a legacy version of ao3scraper (before 1.0.0), move fics.db to the data location, which you can find by running `ao3scraper -v`.

The migration wizard will then prompt you to upgrade your database. If you accept, a backup of the current fics.db is created in /backups before the migration proceeds.
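If you would like an extra copy of the database before letting the wizard run, a manual backup takes only a few lines. This sketch assumes that `backups` is a folder inside the data location and uses a timestamped file name of its own; neither detail is confirmed by ao3scraper, and `backup_database` is a hypothetical helper.

```python
import shutil
import time
from pathlib import Path


def backup_database(data_dir):
    """Copy fics.db into a backups/ subfolder and return the copy's path."""
    data_dir = Path(data_dir)
    source = data_dir / "fics.db"
    if not source.is_file():
        raise FileNotFoundError(f"No fics.db found in {data_dir}")

    backup_dir = data_dir / "backups"  # assumed location, see the note above
    backup_dir.mkdir(exist_ok=True)

    # A timestamp in the name keeps earlier backups from being overwritten.
    stamp = time.strftime("%Y%m%d-%H%M%S")
    target = backup_dir / f"fics-{stamp}.db"
    shutil.copy2(source, target)
    return target
```

Pass the data location reported by the version command as `data_dir`.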

Contributing

Contributions are always appreciated. Submit a pull request with your suggested changes!

Acknowledgements

ao3scraper would not be possible without the existence of ao3_api and the work of its contributors.

Download files

File details

Details for the file ao3scraper-1.0.3.tar.gz.

File metadata

- Download URL: ao3scraper-1.0.3.tar.gz

- Upload date:

- Size: 22.7 kB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: poetry/1.8.4 CPython/3.12.4 Darwin/23.4.0

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | b3eb4d12a89c5a15f955190b6634e1a1fade6a7d769f5fc0e3ba0052d358ebfd |
| MD5 | 68f30931ac474be13108b0fc56552fb8 |
| BLAKE2b-256 | 9fde4ee6739bae1823eba55be40f12ecaeff329bb7a28d54acf99f12efb60ea7 |

File details

Details for the file ao3scraper-1.0.3-py3-none-any.whl.

File metadata

- Download URL: ao3scraper-1.0.3-py3-none-any.whl

- Upload date:

- Size: 24.2 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: poetry/1.8.4 CPython/3.12.4 Darwin/23.4.0

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 6e86bebdf51ee8f9c513c053a40d5f4aecd22e349640d302d2ad47ea9cf96187 |
| MD5 | 36eb179bf7384db83d6292f63fdb9f22 |
| BLAKE2b-256 | a56cb8710ab16d22e09346ffa29f3a77e2d0356420f85e3e53c2fef00ce2884b |
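A downloaded file can be checked against the SHA256 digests listed above with Python's standard `hashlib` module. This is a small sketch; `sha256_of` and `verify` are illustrative helper names, and the local path you pass in is up to you.

```python
import hashlib

# SHA256 digests copied from the file hash tables above.
EXPECTED_SHA256 = {
    "ao3scraper-1.0.3.tar.gz":
        "b3eb4d12a89c5a15f955190b6634e1a1fade6a7d769f5fc0e3ba0052d358ebfd",
    "ao3scraper-1.0.3-py3-none-any.whl":
        "6e86bebdf51ee8f9c513c053a40d5f4aecd22e349640d302d2ad47ea9cf96187",
}


def sha256_of(path, chunk_size=65536):
    """Hash a file in chunks so large downloads need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify(path, filename):
    """Return True if the file at path matches the published digest."""
    return sha256_of(path) == EXPECTED_SHA256[filename]
```

For example, `verify("ao3scraper-1.0.3.tar.gz", "ao3scraper-1.0.3.tar.gz")` should return True for an intact copy of the source distribution saved in the current directory.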