An open-source package for privacy-preserving federated learning

APPFL - Advanced Privacy-Preserving Federated Learning Framework.

APPFL (Advanced Privacy-Preserving Federated Learning) is an open-source, highly extensible software framework that lets research communities implement, test, and validate ideas related to privacy-preserving federated learning (FL), and deploy real FL experiments easily and safely among distributed clients to train more robust ML models. With this framework, developers and users can easily:

- Train any user-defined machine learning model on decentralized data with optional differential privacy and client authentication.

- Simulate various synchronous and asynchronous privacy-preserving FL (PPFL) algorithms on high-performance computing (HPC) architectures with MPI.

- Implement customizations in a plug-and-play manner for all aspects of FL, including aggregation algorithms, server scheduling strategies, and client local trainers.

Documentation: please check out our documentation for tutorials, the user guide, and the developer guide.

:hammer_and_wrench: Installation

We highly recommend creating a new Conda virtual environment and installing the required packages for APPFL in it.

conda create -n appfl python=3.8

conda activate appfl

User installation

For most users, such as data scientists, this simple installation should be sufficient for running the package.

pip install pip --upgrade

pip install "appfl[examples,mpi]"

💡 Note: If you do not need to use MPI for simulations, then you can install the package without the mpi option: pip install "appfl[examples]".

To keep the installed dependencies to a minimum, you can skip the optional packages (e.g., matplotlib and jupyter) and install only the core package:

pip install "appfl"
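Because the three install variants above pull in different sets of dependencies, it can help to check what actually ended up in the environment. The following is a minimal sketch using only the Python standard library; the helper name `installed_version` is hypothetical, and the assumption that the `mpi` extra provides `mpi4py` is ours, not something stated above.

```python
from importlib import metadata
from typing import Optional


def installed_version(dist_name: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    # "mpi4py" is an assumed dependency of the mpi extra; adjust the list as needed.
    for name in ("appfl", "mpi4py", "matplotlib", "jupyter"):
        print(f"{name}: {installed_version(name) or 'not installed'}")
```

A package reported as "not installed" here simply means the corresponding extra was skipped, which is expected for the minimal installation.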

Developer installation

Code developers and contributors may want to work on a local copy of the repository. To set up the development environment:

git clone --single-branch --branch main https://github.com/APPFL/APPFL.git

cd APPFL

pip install -e ".[mpi,dev,examples]"

💡 Note: If you do not need to use MPI for simulations, then you can install the package without the mpi option: pip install -e ".[dev,examples]".

On Ubuntu: if the installation fails, try installing the Open MPI system packages and then rerun the install command:

sudo apt install libopenmpi-dev openmpi-bin openmpi-doc

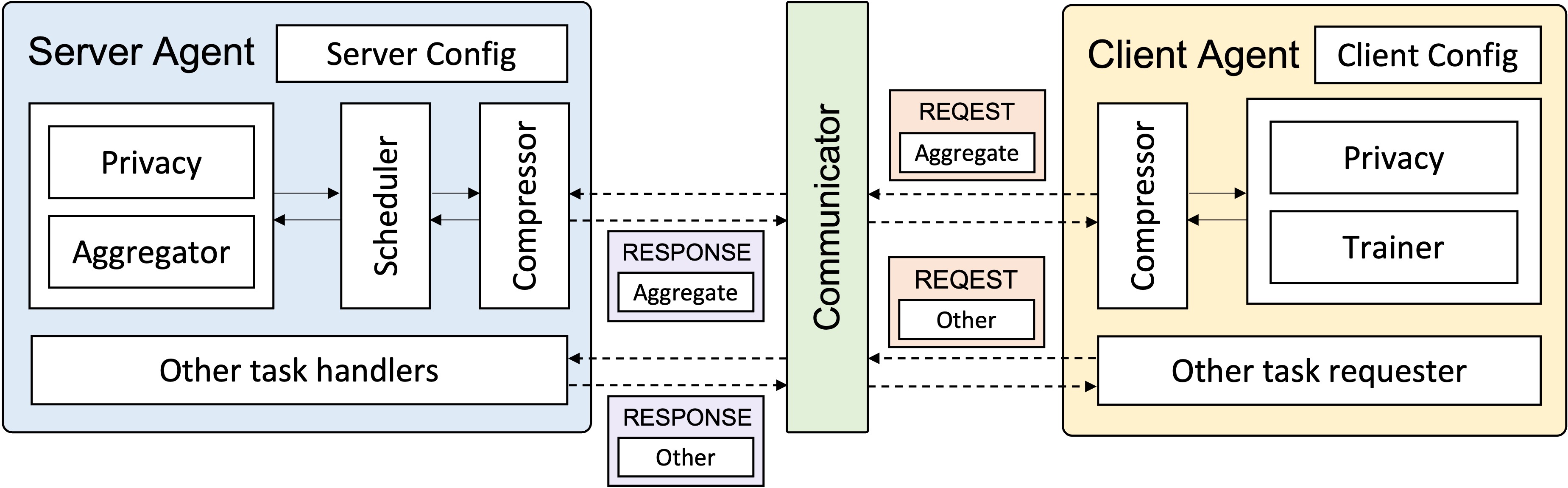

:bricks: Technical Components

APPFL is primarily composed of the following six technical components:

- Aggregator: APPFL supports several popular algorithms to aggregate one or several client local models.

- Scheduler: APPFL supports several synchronous and asynchronous scheduling algorithms at the server-side to deal with different arrival times of client local models.

- Trainer: APPFL supports several client local trainers for various training tasks.

- Privacy: APPFL supports several global/local differential privacy schemes.

- Communicator: APPFL supports MPI for single-machine/cluster simulation, and gRPC and Globus Compute with authenticator for secure distributed training.

- Compressor: APPFL supports several lossy compressors for model parameters, including SZ2, SZ3, ZFP, and SZx.
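To make the Aggregator and Privacy roles above more concrete, here is a small, self-contained sketch in plain Python. It is not APPFL's API: the names `laplace_perturb` and `weighted_average` are hypothetical, the model is a dictionary of float lists, and the noise scale is passed in directly rather than derived from a privacy budget. It only illustrates the general pattern of clients perturbing their parameters before the server computes a sample-weighted average.

```python
import random
from typing import Dict, List

Params = Dict[str, List[float]]  # hypothetical stand-in for model parameters


def laplace_perturb(values: List[float], scale: float, rng: random.Random) -> List[float]:
    """Add zero-mean Laplace noise with the given scale to each value.

    The difference of two i.i.d. exponential samples with rate 1/scale
    follows a Laplace(0, scale) distribution. A scale of 0 disables noise.
    """
    if scale <= 0:
        return list(values)
    rate = 1.0 / scale
    return [v + rng.expovariate(rate) - rng.expovariate(rate) for v in values]


def weighted_average(client_params: List[Params], num_samples: List[int]) -> Params:
    """Average client parameters, weighting each client by its sample count."""
    total = sum(num_samples)
    result: Params = {}
    for name, first in client_params[0].items():
        result[name] = [
            sum(p[name][i] * n for p, n in zip(client_params, num_samples)) / total
            for i in range(len(first))
        ]
    return result


if __name__ == "__main__":
    rng = random.Random(0)
    clients = [{"w": [1.0, 2.0]}, {"w": [3.0, 4.0]}]
    # Each client perturbs its parameters locally before sending them.
    noisy = [{k: laplace_perturb(v, 0.1, rng) for k, v in c.items()} for c in clients]
    print(weighted_average(noisy, num_samples=[1, 3]))
```

Choosing the noise scale to meet a formal differential privacy guarantee depends on the sensitivity of the update and the privacy budget, which this sketch deliberately leaves out.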

:bulb: Framework Overview

In the design of the APPFL framework, we essentially create the server agent and client agent, using the six technical components above as building blocks, to act on behalf of the FL server and clients to conduct FL experiments. For more details, please refer to our documentation.
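The server-agent and client-agent split described above can be illustrated with a toy example. The classes `ToyServerAgent` and `ToyClientAgent` below are hypothetical and do not mirror APPFL's actual classes or method names; the "model" is a single float, the local trainer runs a few gradient steps toward the client's data mean, and the server uses a simple synchronous rule that aggregates once every client has reported.

```python
from typing import Dict, List


class ToyClientAgent:
    """Hypothetical client agent holding private local data."""

    def __init__(self, client_id: str, data: List[float]) -> None:
        self.client_id = client_id
        self.data = data

    def train(self, global_w: float, lr: float = 0.5, steps: int = 10) -> float:
        """Local trainer: gradient descent on the mean of 0.5 * (w - x)^2."""
        w = global_w
        local_mean = sum(self.data) / len(self.data)
        for _ in range(steps):
            w -= lr * (w - local_mean)  # gradient of the local loss is (w - mean)
        return w


class ToyServerAgent:
    """Hypothetical server agent with a synchronous scheduling rule."""

    def __init__(self, num_clients: int, init_w: float = 0.0) -> None:
        self.num_clients = num_clients
        self.global_w = init_w
        self._pending: Dict[str, float] = {}

    def submit(self, client_id: str, local_w: float) -> bool:
        """Buffer a local model; aggregate once all clients have reported.

        Returns True when a new global model was produced.
        """
        self._pending[client_id] = local_w
        if len(self._pending) < self.num_clients:
            return False
        self.global_w = sum(self._pending.values()) / len(self._pending)
        self._pending.clear()
        return True


def run_rounds(server: ToyServerAgent, clients: List[ToyClientAgent], rounds: int) -> float:
    """Run a fixed number of synchronous FL rounds and return the global model."""
    for _ in range(rounds):
        w = server.global_w
        for client in clients:
            server.submit(client.client_id, client.train(w))
    return server.global_w


if __name__ == "__main__":
    clients = [ToyClientAgent("a", [1.0, 3.0]), ToyClientAgent("b", [6.0])]
    print(run_rounds(ToyServerAgent(num_clients=2), clients, rounds=5))
```

An asynchronous scheduler would differ mainly in `submit`: instead of buffering until all clients report, it would update the global model as each local model arrives.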

:page_facing_up: Citation

If you find APPFL useful for your research or development, please consider citing the following papers:

@article{li2024advances,

title={Advances in APPFL: A Comprehensive and Extensible Federated Learning Framework},

author={Li, Zilinghan and He, Shilan and Yang, Ze and Ryu, Minseok and Kim, Kibaek and Madduri, Ravi},

journal={arXiv preprint arXiv:2409.11585},

year={2024}

}

@inproceedings{ryu2022appfl,

title={APPFL: open-source software framework for privacy-preserving federated learning},

author={Ryu, Minseok and Kim, Youngdae and Kim, Kibaek and Madduri, Ravi K},

booktitle={2022 IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW)},

pages={1074--1083},

year={2022},

organization={IEEE}

}

:trophy: Acknowledgements

This material is based upon work supported by the U.S. Department of Energy, Office of Science, under contract number DE-AC02-06CH11357.

File details

Details for the file appfl-1.8.0.tar.gz.

File metadata

- Download URL: appfl-1.8.0.tar.gz

- Upload date:

- Size: 197.3 kB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/6.1.0 CPython/3.12.9

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | e28db32076594721cccef159f5262c2516747141cb80af1aa2c2f45ae47e7b4b |
| MD5 | 60e928dbe44edc46b72e5914d2dcc379 |
| BLAKE2b-256 | 09c79fb99ced871ccbc825c0d35a02a6ada1db81166d83d8402cf82b7ab10e37 |

File details

Details for the file appfl-1.8.0-py3-none-any.whl.

File metadata

- Download URL: appfl-1.8.0-py3-none-any.whl

- Upload date:

- Size: 289.6 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/6.1.0 CPython/3.12.9

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | da69d8551ca5d07924ce32ecf70323691f051030deb801b0d21d9b92ea9b4902 |
| MD5 | 308f97a711fb917b5b344a634f912513 |
| BLAKE2b-256 | 03a492a0837ddf19eda5c556ea242e1f3f0e702890606f9639a16fa79061ebbd |