Python SDK for the Aqueduct prediction infrastructure

Project description

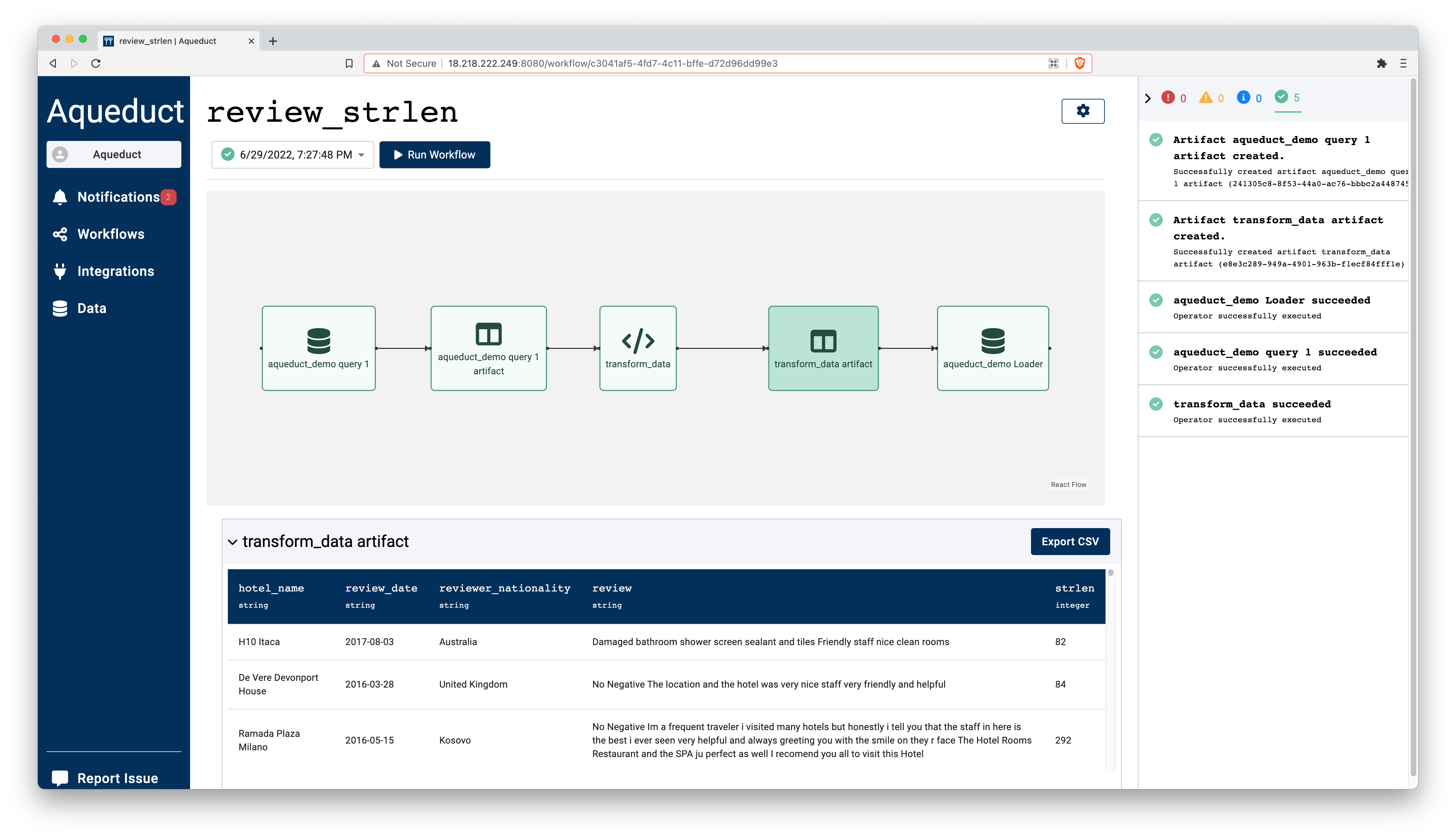

Aqueduct: Prediction Delivery for Data Teams

Aqueduct is prediction delivery made simple. With Aqueduct, data scientists can instantaneously deploy machine learning models to the cloud, connect those models to data and business systems, and gain visibility into everything from inference latency to model accuracy, all with Python.

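```python
from aqueduct import Client, op, get_apikey

# Connect to the local Aqueduct server with your API key.
client = Client(get_apikey(), "localhost:8080")

@op
def transform_data(reviews):
    # Add a column holding the character length of each review.
    reviews['strlen'] = reviews['review'].str.len()
    return reviews

# Load the hotel_reviews table from the demo database.
demo_db = client.integration("aqueduct_demo")
reviews_table = demo_db.sql("select * from hotel_reviews;")

# Apply the operator, then save the result back, replacing the table on each run.
strlen_table = transform_data(reviews_table)
strlen_table.save(demo_db.config(table="strlen_table", update_mode="replace"))

# Publish the pipeline as a workflow named "review_strlen".
client.publish_flow(name="review_strlen", artifacts=[strlen_table])
```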
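Because an `@op` function wraps ordinary Python, its transformation logic can be checked locally before the flow is published. The sketch below is a stdlib-only stand-in, not Aqueduct code: it assumes the reviews arrive as a list of dicts rather than the pandas DataFrame that the `.str.len()` call above implies, and the helper name `add_strlen` is hypothetical.

```python
from typing import Any


def add_strlen(reviews: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Hypothetical stdlib stand-in for transform_data: adds a 'strlen'
    field holding the length of each row's 'review' text."""
    # Build new rows instead of mutating the input; the pandas version
    # above modifies its argument in place.
    return [{**row, "strlen": len(row["review"])} for row in reviews]


rows = [{"review": "Great stay"}, {"review": "Noisy"}]
print(add_strlen(rows))
```

Returning new rows keeps the check side-effect free, so the same input can be reused across several local assertions.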

You can also run the full Aqueduct server in a Google Colab notebook. Our examples directory has a few more detailed prediction pipelines:

- Churn Ensemble

- Sentiment Analysis

- Impute Missing Wine Data

- more coming soon!

Getting Started

To get started with Aqueduct:

- Ensure that you meet the basic requirements.

- Install the Aqueduct server and UI by running:

  ```bash
  pip3 install aqueduct-ml
  ```

- Launch both the server and the UI by running:

  ```bash
  aqueduct start
  ```

- Get your API key by running:

  ```bash
  aqueduct apikey
  ```

The core abstraction in Aqueduct is a Workflow, which is a sequence of Artifacts (data) that are transformed by Operators (compute). The input Artifacts for a Workflow are typically loaded from a database, and the output Artifacts are typically persisted back to a database. Each Workflow can either run on a fixed schedule or be triggered on demand.
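To make the Artifact/Operator relationship concrete, here is a toy model in plain Python. It is a hypothetical sketch of the idea, not Aqueduct's implementation or API: the `Operator` and `Workflow` classes are invented for illustration, loading from and saving to a database is replaced by an in-memory dict, and scheduling is left out (`run` models a single on-demand trigger; a scheduler would simply call it periodically).

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Operator:
    """Hypothetical compute step: reads named artifacts, produces one artifact."""
    name: str
    fn: Callable[..., Any]
    inputs: list[str]  # names of the artifacts this operator consumes
    output: str        # name of the artifact this operator produces


@dataclass
class Workflow:
    """Hypothetical toy model: artifacts (data) transformed by operators (compute)."""
    name: str
    operators: list[Operator] = field(default_factory=list)

    def run(self, sources: dict[str, Any]) -> dict[str, Any]:
        """Run once on demand, starting from already-loaded input artifacts."""
        artifacts = dict(sources)
        pending = list(self.operators)
        # Repeatedly execute every operator whose inputs are all available,
        # so operators run in dependency order regardless of list order.
        while pending:
            ready = [o for o in pending if all(i in artifacts for i in o.inputs)]
            if not ready:
                raise ValueError("missing inputs or cyclic dependencies")
            for o in ready:
                artifacts[o.output] = o.fn(*(artifacts[i] for i in o.inputs))
                pending.remove(o)
        return artifacts


flow = Workflow("review_strlen", [
    Operator("transform_data", lambda rows: [len(r) for r in rows], ["reviews"], "strlen"),
])
result = flow.run({"reviews": ["Great stay", "Noisy"]})
print(result["strlen"])
```

Resolving operators by data availability rather than list position mirrors how a workflow is defined by its artifact dependencies, not by the order in which the code declares steps.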

Why Aqueduct?

The existing tools for deploying models are not designed with data scientists in mind: they assume the user will casually build Docker containers, deploy Kubernetes clusters, and write thousands of lines of YAML to deploy a single model. Data scientists are by and large not interested in doing that, and there are better uses for their skills.

Aqueduct is designed for data scientists, with three core design principles in mind:

- Simplicity: Data scientists should be able to deploy models with tools they're comfortable with and without having to learn how to use complex, low-level infrastructure systems.

- Connectedness: Data science and machine learning can have the greatest impact when everyone in the business has access, and data scientists shouldn't have to bend over backwards to make this happen.

- Confidence: Having the whole organization benefit from your work means that data scientists should be able to sleep peacefully, knowing that things are working as expected and that they'll be alerted as soon as that changes.

What's next?

Interested in learning more? Check out our documentation, where you'll find:

- a Quickstart Guide

- example workflows

- and more details on creating workflows

If you have questions or comments or would like to learn more about what we're building, please reach out, join our Slack channel, or start a conversation on GitHub. We'd love to hear from you!


Built Distribution

Hashes for aqueduct_sdk-0.0.5-py3-none-any.whl

| Algorithm | Hash digest |
|---|---|
| SHA256 | 7b4f08d05b4304282dd20d206be929931abca5706174e3310e9f757c6cf95cee |
| MD5 | 5b490a85020cca4573395c2e2f183d8f |
| BLAKE2b-256 | bd54cbfa96bd44f4a200337024512b734e3ec4a9e1a8ee083cde26c55bde033d |