A stripped and opinionated version of Scott Lundberg's SHAP (SHapley Additive exPlanations)


Baby Shap is a stripped and opinionated version of Scott Lundberg's SHAP (SHapley Additive exPlanations), a game-theoretic approach to explaining the output of any machine learning model. SHAP connects optimal credit allocation with local explanations using the classic Shapley values from game theory and their related extensions (see papers for details and citations).
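To make the idea of credit allocation concrete, here is a minimal sketch of an exact Shapley value computation for a toy cooperative game. It is not part of Baby Shap: `shapley_values` and `toy_value` are hypothetical helpers written for illustration, and the payoffs are made up. Each player's Shapley value is its marginal contribution averaged over every order in which the players could join the coalition.

```python
from itertools import permutations


def shapley_values(players, value):
    """Exact Shapley values: average marginal contribution over all orderings."""
    totals = {p: 0.0 for p in players}
    orderings = list(permutations(players))
    for order in orderings:
        coalition = frozenset()
        for p in order:
            with_p = coalition | {p}
            # Credit p with the change in payoff caused by joining the coalition.
            totals[p] += value(with_p) - value(coalition)
            coalition = with_p
    return {p: total / len(orderings) for p, total in totals.items()}


def toy_value(coalition):
    """Hypothetical payoff function for a three-player game (illustration only)."""
    payoff = 0.0
    if "a" in coalition:
        payoff += 10.0
    if "b" in coalition:
        payoff += 20.0
    if "a" in coalition and "c" in coalition:
        payoff += 6.0  # interaction term, earned only when a and c cooperate
    return payoff


phi = shapley_values(["a", "b", "c"], toy_value)
print(phi)
```

In this toy game the interaction payoff of 6 is split evenly between `a` and `c`, so `a` receives 13, `b` receives 20, and `c` receives 3. The values sum to the payoff of the full coalition (36), which is the same additivity property SHAP relies on when it attributes a model output to individual features.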

Baby Shap solely implements and maintains the Kernel Explainer and a limited range of plots, while limiting the number of raised errors, warnings, dependencies and conflicts.

Install

Baby Shap can be installed from PyPI:

pip install baby-shap

Model agnostic example with KernelExplainer (explains any function)

Kernel SHAP uses a specially-weighted local linear regression to estimate SHAP values for any model. Below is a simple example for explaining a multi-class SVM on the classic iris dataset.

import baby_shap

import pandas as pd

from sklearn import datasets, svm, model_selection

# print the JS visualization code to the notebook

baby_shap.initjs()

# train an SVM classifier

d = datasets.load_iris()

X = pd.DataFrame(data=d.data, columns=d.feature_names)

y = d.target

X_train, X_test, Y_train, Y_test = model_selection.train_test_split(X, y, test_size=0.2, random_state=0)

clf = svm.SVC(kernel='rbf', probability=True)

clf.fit(X_train.to_numpy(), Y_train)

# use Kernel SHAP to explain test set predictions

explainer = baby_shap.KernelExplainer(clf.predict_proba, X_train, link="logit")

shap_values = explainer.shap_values(X_test, nsamples=100)

# plot the SHAP values for the Setosa output of the first instance

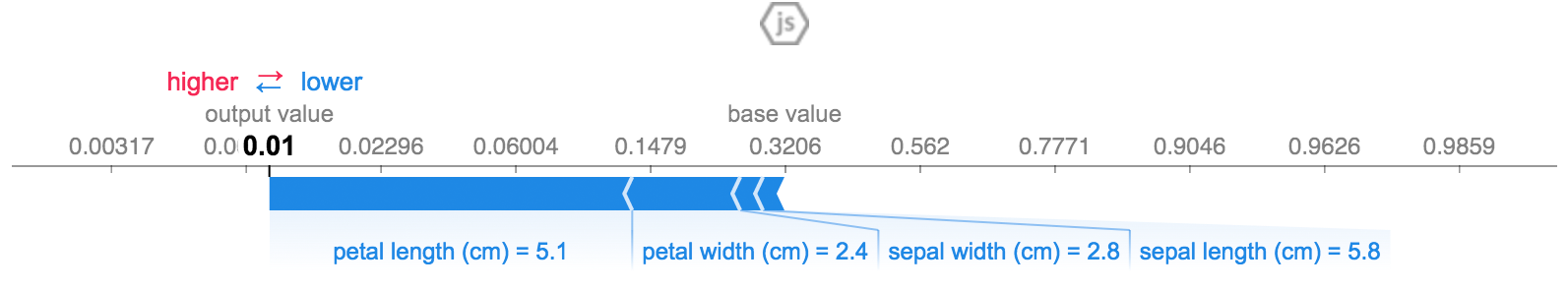

baby_shap.force_plot(explainer.expected_value[0], shap_values[0][0,:], X_test.iloc[0,:], link="logit")

The above explanation shows four features each contributing to push the model output from the base value (the average model output over the training dataset we passed) towards zero. If there were any features pushing the class label higher they would be shown in red.
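Because the explainer in this example is built with `link="logit"`, the base value and the SHAP values live in log-odds space, and they add up to the log-odds of the model output. The short sketch below walks through that arithmetic. The base probability and the four contributions are hypothetical numbers chosen for illustration, not values produced by the iris model, and `logit` and `expit` are small helpers defined here rather than Baby Shap functions.

```python
import numpy as np


def logit(p):
    """Map a probability to log-odds."""
    return np.log(p / (1.0 - p))


def expit(z):
    """Map log-odds back to a probability."""
    return 1.0 / (1.0 + np.exp(-z))


# Hypothetical base value: average predicted probability over the background data.
base_probability = 0.35

# Hypothetical SHAP values (in log-odds) for four features, all pushing the output lower.
shap_contributions = np.array([-0.4, -1.1, -2.0, -1.5])

# Additivity: base value plus the sum of contributions gives the output in log-odds.
output_log_odds = logit(base_probability) + shap_contributions.sum()
output_probability = expit(output_log_odds)

print(f"base log-odds:      {logit(base_probability):.3f}")
print(f"output log-odds:    {output_log_odds:.3f}")
print(f"output probability: {output_probability:.4f}")
```

With every contribution negative, the output probability ends up well below the base probability, which is the situation the force plot depicts.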

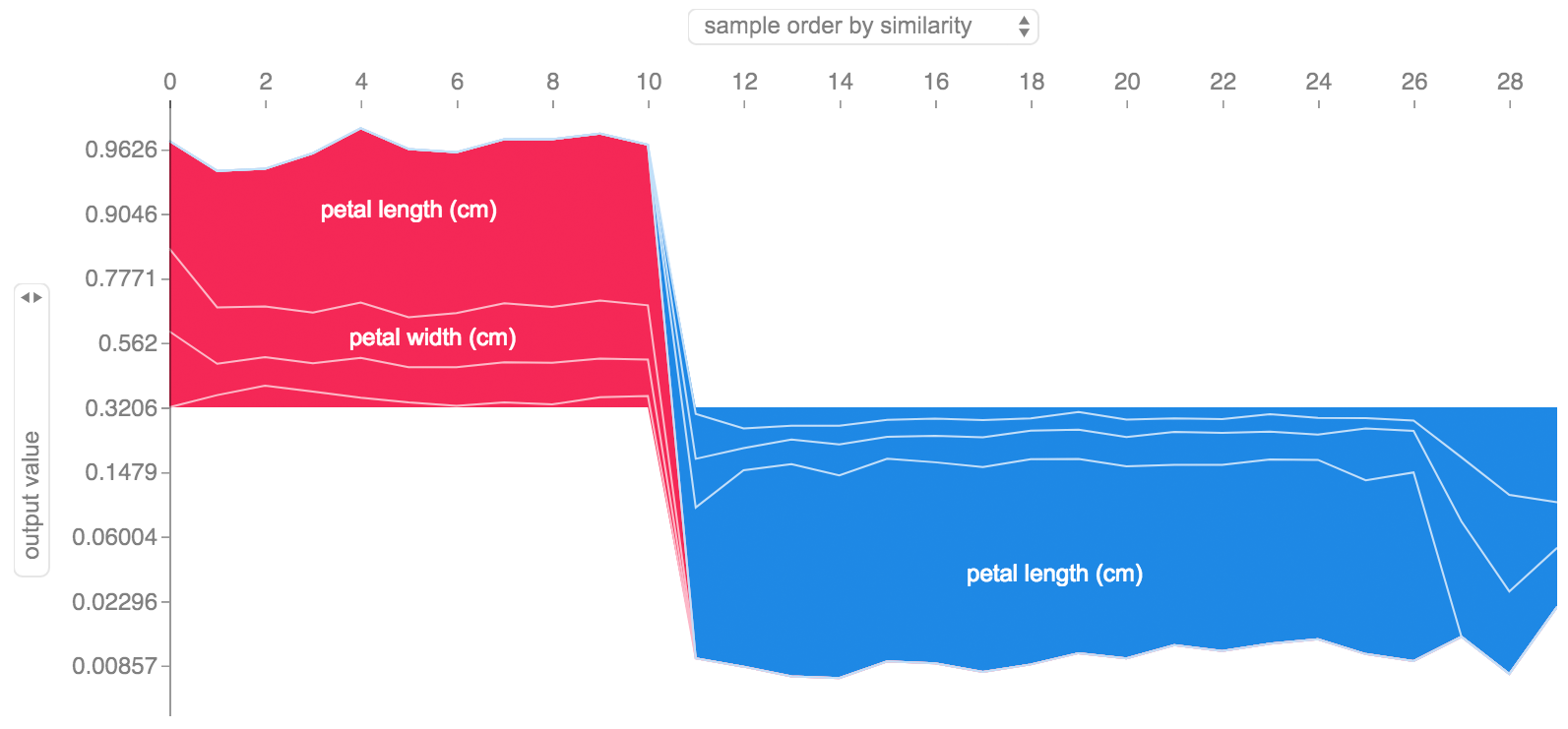

If we take many explanations such as the one shown above, rotate them 90 degrees, and then stack them horizontally, we can see explanations for an entire dataset. This is exactly what we do below for all the examples in the iris test set:

# plot the SHAP values for the Setosa output of all instances

baby_shap.force_plot(explainer.expected_value[0], shap_values[0], X_test, link="logit")

KernelExplainer

An implementation of Kernel SHAP, a model-agnostic method to estimate SHAP values for any model. Because it makes no assumptions about the model type, KernelExplainer is slower than the model-type-specific algorithms available in the full SHAP package.
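The weighted linear regression behind Kernel SHAP can be sketched in a few lines of NumPy. This is an illustrative toy under stated assumptions, not Baby Shap's implementation: `shapley_kernel_weight` and `kernel_shap_exact` are hypothetical names, every coalition is enumerated (feasible only for a handful of features, whereas the `nsamples` argument in the example above indicates that the real explainer samples coalitions), and the infinite weight on the empty and full coalitions is approximated by a large constant.

```python
from itertools import combinations
from math import comb

import numpy as np


def shapley_kernel_weight(M, s):
    """Shapley kernel weight for a coalition of size s out of M features."""
    if s == 0 or s == M:
        return 1e6  # stand-in for the infinite weight on the empty/full coalitions
    return (M - 1) / (comb(M, s) * s * (M - s))


def kernel_shap_exact(f, x, background):
    """Toy Kernel SHAP for one instance x, enumerating all 2**M coalitions."""
    M = len(x)
    masks, weights, outputs = [], [], []
    for s in range(M + 1):
        for subset in combinations(range(M), s):
            mask = np.array([1.0 if j in subset else 0.0 for j in range(M)])
            # Features in the coalition take x's values; the rest keep background values.
            data = np.where(mask == 1.0, x, background)
            masks.append(mask)
            weights.append(shapley_kernel_weight(M, s))
            outputs.append(f(data).mean())
    # Design matrix: intercept column plus one indicator column per feature.
    Z = np.column_stack([np.ones(len(masks)), np.array(masks)])
    sqrt_w = np.sqrt(np.array(weights))
    # Weighted least squares, solved by scaling rows with the square root of the weights.
    beta, *_ = np.linalg.lstsq(Z * sqrt_w[:, None], np.array(outputs) * sqrt_w, rcond=None)
    return beta[0], beta[1:]  # base value, per-feature SHAP values


# Hypothetical linear model and background data, used only to check the sketch.
w = np.array([2.0, -1.0, 0.5])


def linear_model(X):
    return X @ w + 3.0


background = np.array([[0.0, 0.0, 0.0], [2.0, 2.0, 2.0], [4.0, 0.0, 2.0]])
x = np.array([1.0, 2.0, 3.0])

base_value, phi = kernel_shap_exact(linear_model, x, background)
print(base_value, phi)
```

For a linear model the SHAP value of feature i reduces to `w[i] * (x[i] - background[:, i].mean())`, so the regression output can be checked against that closed form. The cost of the enumeration doubles with each added feature, which is one reason a model-agnostic explainer is slow compared with algorithms that exploit model structure.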

- Census income classification with scikit-learn - Using the standard adult census income dataset, this notebook trains a k-nearest neighbors classifier using scikit-learn and then explains predictions using baby_shap.

- ImageNet VGG16 Model with Keras - Explain the classic VGG16 convolutional neural network's predictions for an image. This works by applying the model-agnostic Kernel SHAP method to a super-pixel segmented image.

- Iris classification - A basic demonstration using the popular iris species dataset. It explains predictions from six different models in scikit-learn using baby_shap.

Project details


Hashes for baby_shap-0.0.3-py3-none-any.whl

| Algorithm | Hash digest |
|---|---|
| SHA256 | 07f87e33040a76ac587662daaec101a6ede11f5ff410a10b4b665939e3b7f412 |
| MD5 | bb3a4a90cf096a408ff3b1188d03ede4 |
| BLAKE2b-256 | 4a02db6e6c4013a3bcb5da7f1777502c58f928c64dcfca7ca47967360e8a2bc7 |