Microsoft Fabric ETL toolbox

bifabrik

The library aims to make BI development in Microsoft Fabric easier by providing a fluent API for common ETL tasks.

See the project page for info on all the features and check the changelog to see what's new.

If you find a problem or have a feature request, please submit it here: https://github.com/rjankovic/bifabrik/issues. Thanks!

Quickstart

First, let's install the library. Either add the bifabrik library to an environment in Fabric and attach that environment to your notebook, or add %pip install bifabrik at the beginning of the notebook.

Import the library

import bifabrik as bif

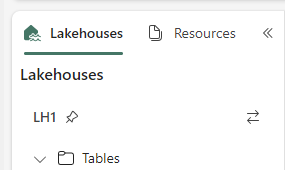

Also, make sure that your notebook is connected to a lakehouse. This is the lakehouse to which bifabrik will save data by default.

You can also configure it to target different lakehouses.

Load CSV files (JSON is similar)

Simple tasks should be easy.

import bifabrik as bif

bif.fromCsv('Files/CsvFiles/annual-enterprise-survey-2021.csv').toTable('Survey2021').run()

...and the table is in place

display(spark.sql('SELECT * FROM Survey2021'))

Or you can make use of pattern matching

# take all files matching the pattern and concat them

bif.fromCsv('Files/*/annual-enterprise-survey-*.csv').toTable('SurveyAll').run()

These are full loads, overwriting the target table if it exists.
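To make the pattern-matching idea concrete, here is a minimal standard-library sketch of what "take all files matching the pattern and concat them" means. It assumes conventional glob semantics, where * does not cross folder boundaries; how bifabrik resolves patterns internally may differ. The helper concat_matching_csvs, the temporary folder layout, and the sample rows are invented for illustration.

```python
import csv
import glob
import os
import tempfile


def concat_matching_csvs(pattern):
    """Read every CSV matching the glob pattern and return all rows as dicts."""
    rows = []
    # sorted() makes the order deterministic; glob gives no ordering guarantee
    for path in sorted(glob.glob(pattern)):
        with open(path, newline="") as f:
            rows.extend(csv.DictReader(f))
    return rows


# Build a throwaway folder layout that mirrors the pattern used above.
root = tempfile.mkdtemp()
for year in ("2020", "2021"):
    folder = os.path.join(root, "Files", year)
    os.makedirs(folder)
    name = f"annual-enterprise-survey-{year}.csv"
    with open(os.path.join(folder, name), "w", newline="") as f:
        f.write(f"Year,Value\n{year},1\n")

# A file whose name does not match the pattern; it should be skipped.
with open(os.path.join(root, "Files", "2021", "notes.csv"), "w") as f:
    f.write("Year,Value\n0,0\n")

pattern = os.path.join(root, "Files", "*", "annual-enterprise-survey-*.csv")
all_rows = concat_matching_csvs(pattern)
print([row["Year"] for row in all_rows])
```

Both survey files contribute rows, while notes.csv is ignored because its name does not match.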

Configure load preferences

Is your CSV a bit... special? No problem, we'll tend to it.

Let's say you have a European CSV with commas instead of decimal points and semicolons instead of commas as separators.

bif.fromCsv("Files/CsvFiles/dimBranch.csv").delimiter(';').decimal(',').toTable('DimBranch').run()

The backend uses pandas, so you can take advantage of many other options - see help(bif.fromCsv())
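For readers unfamiliar with this CSV layout, the sketch below shows what the two settings mean, using only the standard library. It is not bifabrik's implementation. The function parse_european_csv and the sample data are made up for illustration. In pandas, read_csv exposes the same ideas through its sep (or delimiter) and decimal parameters; that bifabrik forwards its options to them unchanged is an assumption.

```python
import csv
import io

# Made-up sample in the "European" layout: ';' separates fields, ',' marks decimals.
raw = "BranchId;Name;Budget\n1;Praha;1250,50\n2;Brno;980,00\n"


def parse_european_csv(text, delimiter=";", decimal=",", numeric_columns=()):
    """Parse CSV text, converting the listed columns to float."""
    rows = []
    for row in csv.DictReader(io.StringIO(text), delimiter=delimiter):
        for column in numeric_columns:
            # Swap the locale-specific decimal mark for '.' before converting.
            row[column] = float(row[column].replace(decimal, "."))
        rows.append(row)
    return rows


branches = parse_european_csv(raw, numeric_columns=("Budget",))
print(branches[0]["Name"], branches[0]["Budget"])
```

Without the decimal setting, a value such as 1250,50 would stay a string, or be split into two fields if the comma were treated as the separator.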

Keep the configuration

What, you have more files like that? Well then, you probably don't want to repeat the setup each time. Good news is, the bifabrik object can keep all your preferences:

import bifabrik as bif

# set the configuration

bif.config.csv.delimiter = ';'

bif.config.csv.decimal = ','

# the configuration will be applied to all these loads

bif.fromCsv("Files/CsvFiles/dimBranch.csv").toTable('DimBranch').run()

bif.fromCsv("Files/CsvFiles/dimDepartment.csv").toTable('DimDepartment').run()

bif.fromCsv("Files/CsvFiles/dimDivision.csv").toTable('DimDivision').run()

# (You can still apply configuration in the individual loads, as seen above, to override the general configuration.)

If you want to persist your configuration beyond the PySpark session, you can save it to a JSON file - see Configuration

Consistent configuration is one of the core values of the project.

We like our lakehouses to be uniform in terms of loading patterns, table structures, tracking, etc. At the same time, we want to keep it DRY.

bifabrik configuration aims to cover many aspects of the lakehouse so that you can define your conventions once, use them repeatedly, and override them when necessary.
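The "define once, reuse, override when needed" idea can be sketched in plain Python as follows. CsvSettings and effective_settings are hypothetical stand-ins, not bifabrik classes or functions, and the JSON round-trip only illustrates the general idea of persisting settings; bifabrik's own mechanism is described in its Configuration documentation.

```python
import json
from dataclasses import asdict, dataclass, replace


@dataclass(frozen=True)
class CsvSettings:
    """Hypothetical stand-in for shared CSV preferences (not bifabrik's class)."""
    delimiter: str = ","
    decimal: str = "."


def effective_settings(shared, **overrides):
    """Return the shared settings with per-load overrides applied on top."""
    return replace(shared, **overrides)


# Define the convention once...
shared = CsvSettings(delimiter=";", decimal=",")

# ...reuse it as-is for most loads, and override a single value where needed.
branch = effective_settings(shared)
legacy = effective_settings(shared, delimiter="|")

# Round-trip through JSON, e.g. to keep the settings beyond one session.
restored = CsvSettings(**json.loads(json.dumps(asdict(shared))))

print(branch, legacy, restored == shared)
```

The override changes only the value it names; everything else still comes from the shared settings, which is what keeps individual loads short.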

Spark SQL transformations

Enough with the files! Let's make a simple Spark SQL transformation, writing data to another SQL table - a straightforward full load:

bif.fromSql('''

SELECT Industry_name_NZSIOC AS Industry_Name

,AVG(`Value`) AS AvgValue

FROM LakeHouse1.Survey2021

WHERE Variable_Code = 'H35'

GROUP BY Industry_name_NZSIOC

''').toTable('SurveySummarized').run()

# The resulting table will be saved to the lakehouse attached to your notebook.

# You can refer to a different source warehouse in the query, though.
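To see what shape of result the query above produces without a Spark session, the sketch below runs the same aggregation against an in-memory SQLite table. This is only an analogy: SQLite is not Spark SQL, the rows are invented, and the ORDER BY is added solely to make the printed output deterministic.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE Survey2021 "
    "(Industry_name_NZSIOC TEXT, Variable_Code TEXT, Value REAL)"
)
# Invented rows, only to show the shape of the result.
conn.executemany(
    "INSERT INTO Survey2021 VALUES (?, ?, ?)",
    [
        ("Mining", "H35", 10.0),
        ("Mining", "H35", 30.0),
        ("Mining", "H01", 999.0),  # filtered out by the WHERE clause
        ("Retail", "H35", 5.0),
    ],
)

summary = conn.execute(
    """
    SELECT Industry_name_NZSIOC AS Industry_Name,
           AVG(Value) AS AvgValue
    FROM Survey2021
    WHERE Variable_Code = 'H35'
    GROUP BY Industry_name_NZSIOC
    ORDER BY Industry_Name
    """
).fetchall()
conn.close()
print(summary)
```

Each industry ends up as one row holding the average of its H35 values, which is the content of the SurveySummarized table in the bifabrik example.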

Data lineage

You can track column-level lineage of your data transformations - see the documentation

More options

bifabrik can also help with incremental loads, identity columns (auto-increment), dataframe transformations, and more.

For example:

import bifabrik as bif

from pyspark.sql.functions import col, upper

(

bif

.fromCsv('CsvFiles/fact_append_*.csv')

.transformSparkDf(lambda df: df.withColumn('CodeUppercase', upper(col('Code'))))

.toTable('SnapshotTable1')

.increment('snapshot')

.snapshotKeyColumns(['Date', 'Code'])

.identityColumnPattern('{tablename}ID')

.run()

)
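The pipeline above combines a transformation, a snapshot-style incremental load, and a naming pattern for the identity column. The plain-Python sketch below illustrates one plausible reading of those steps. It rests on two assumptions that this page does not state: that a snapshot increment replaces target rows whose key-column values appear again in the incoming data, and that {tablename} in the pattern is substituted with the target table name. The helpers apply_snapshot and add_uppercase_code and all sample rows are invented; consult the project documentation for the actual behavior.

```python
def add_uppercase_code(rows):
    """Mirror of the transformSparkDf step: add an upper-cased copy of Code."""
    return [{**row, "CodeUppercase": row["Code"].upper()} for row in rows]


def apply_snapshot(target, incoming, key_columns):
    """ASSUMED semantics: incoming rows replace target rows with the same keys."""
    incoming_keys = {tuple(row[c] for c in key_columns) for row in incoming}
    kept = [
        row for row in target
        if tuple(row[c] for c in key_columns) not in incoming_keys
    ]
    return kept + incoming


# ASSUMED: the placeholder is filled with the target table name.
identity_column = "{tablename}ID".format(tablename="SnapshotTable1")

# Invented rows: what is already in the table, and a new batch of files.
target = add_uppercase_code([
    {"Date": "2024-01-01", "Code": "a", "Amount": 1},
    {"Date": "2024-01-02", "Code": "a", "Amount": 2},
])
incoming = add_uppercase_code([
    {"Date": "2024-01-02", "Code": "a", "Amount": 20},  # re-delivered snapshot
    {"Date": "2024-01-03", "Code": "b", "Amount": 3},   # new snapshot
])

result = apply_snapshot(target, incoming, ["Date", "Code"])
print(identity_column, [row["Amount"] for row in result])
```

Under these assumptions the re-delivered (Date, Code) pair is overwritten rather than duplicated, while untouched snapshots stay in place.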

For more details, see the project page


File details

Details for the file bifabrik-0.15.2.tar.gz.

File metadata

- Download URL: bifabrik-0.15.2.tar.gz

- Upload date:

- Size: 66.2 kB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/6.2.0 CPython/3.9.23

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 0bec4ab6c4dabe0900e472462f2f7dd393d41ec4135f176d6de8b528f4d046fa |
| MD5 | 8ee6eada2c89eabf67e6c16700b20859 |
| BLAKE2b-256 | 2fd745d48aaf2bcf8cac0979b23e6b61a1fc36827d951ff09452286b6686f1ee |

File details

Details for the file bifabrik-0.15.2-py3-none-any.whl.

File metadata

- Download URL: bifabrik-0.15.2-py3-none-any.whl

- Upload date:

- Size: 94.2 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/6.2.0 CPython/3.9.23

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 7f4e04f547832eb76c6cb00159f26fdd7f0f82429b5445baf7b7fa4acc0124df |
| MD5 | 7f4d462f7ef475f77176317ae93e5830 |
| BLAKE2b-256 | 69081a7a337162493b0215f88fe5f74ec0180b7c9edc793d885185f4b1c05e87 |