Python API client for Bytez service


API Documentation

Basic Usage

from bytez import Bytez

client = Bytez("YOUR_BYTEZ_KEY_HERE")

model = client.model("Qwen/Qwen2-7B-Instruct")

input_text = "Once upon a time there was a beautiful home where"

model_params = {"max_new_tokens": 20, "min_new_tokens": 5, "temperature": 0.5}

result = model.run(input_text, model_params=model_params)

print(result.output)

# Access other properties of the result

error = result.error # Will be None if no error

provider = result.provider # For closed-source models, the provider's raw output

Streaming usage (only text-generation models support streaming currently)

from bytez import Bytez

client = Bytez("YOUR_BYTEZ_KEY_HERE")

model = client.model("Qwen/Qwen2-7B-Instruct")

input_text = "Once upon a time there was a beautiful home where"

model_params = {"max_new_tokens": 20, "min_new_tokens": 5, "temperature": 0.5}

stream = model.run(
    input_text,
    stream=True,
    model_params=model_params,
)

for chunk in stream:
    print(f"Output: {chunk}")

Installation

pip install bytez

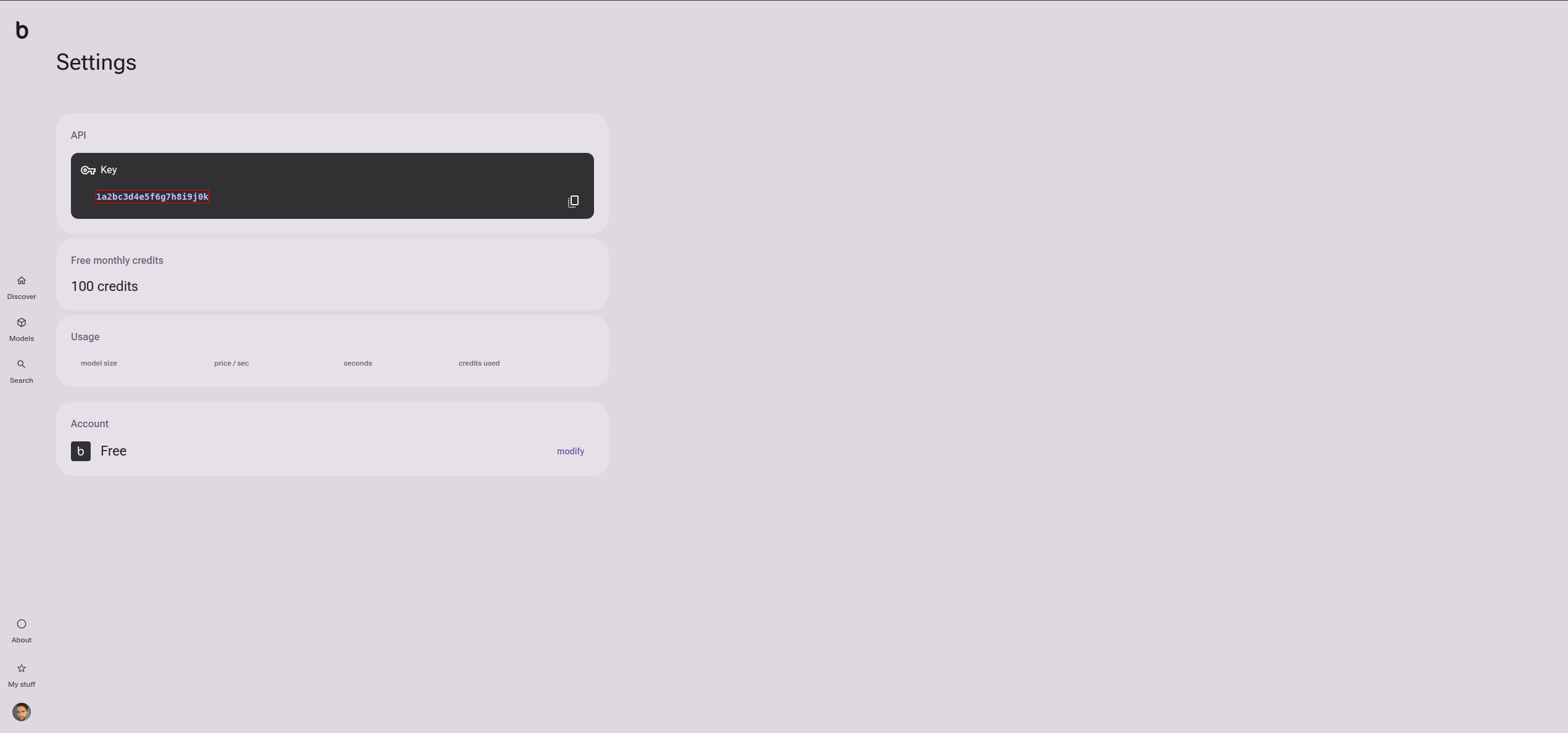

Authentication and Getting Your Key

To use this API, you need an API key. Obtain your key by visiting the Bytez Settings Page.

To then use it in code:

from bytez import Bytez

client = Bytez("YOUR_BYTEZ_KEY_HERE")
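Hardcoding the key works for a quick test, but it is easy to commit by accident. A minimal sketch of reading it from the environment instead follows. The helper `load_bytez_key` and the variable name `BYTEZ_KEY` are assumptions made for this example; they are not part of the bytez package.

```python
import os


def load_bytez_key(env=None, var_name="BYTEZ_KEY"):
    """Return the API key stored in an environment variable.

    `BYTEZ_KEY` is a hypothetical variable name chosen for this sketch;
    the bytez package does not define or read it.
    """
    env = os.environ if env is None else env
    key = env.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {var_name} environment variable to your Bytez API key"
        )
    return key


# Usage (requires the bytez package and a real key):
# from bytez import Bytez
# client = Bytez(load_bytez_key())
```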

List Available Models

Lists the currently available models and provides basic information about each one, such as the RAM required to run an instance.

from bytez import Bytez

client = Bytez("YOUR_BYTEZ_KEY_HERE")

# To list all models

result = client.list.models()

print(result.output)

# To list models by task

result = client.list.models({"task": "object-detection"})

print(result.output)
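To compare several tasks at once, the task filter can be applied in a loop. The sketch below uses only the `client.list.models({"task": ...})` call shown above. The helper name `list_models_for_tasks` is hypothetical, and it assumes that list results expose an `error` attribute in the same way run results do.

```python
def list_models_for_tasks(client, tasks):
    """Map each task name to the output of client.list.models({"task": task})."""
    by_task = {}
    for task in tasks:
        result = client.list.models({"task": task})
        # Assumption: list results carry .error like run results do.
        # getattr keeps the sketch working if that attribute is absent.
        error = getattr(result, "error", None)
        if error:
            print(f"Could not list models for {task}: {error}")
            continue
        by_task[task] = result.output
    return by_task


# Usage (requires a real client):
# models = list_models_for_tasks(client, ["object-detection", "text-generation"])
```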

Initialize the Model API

Initialize a model so you can check its status, load it, run it, or shut it down.

model = client.model("openai-community/gpt2")

Run a Model

Run inference.

result = model.run("Once upon a time there was a")

print(result.output)

# Access error if needed

if result.error:
    print(f"Error occurred: {result.error}")

# Access provider information

print(f"Provider: {result.provider}")
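Because errors are reported on the result rather than raised, callers that prefer exceptions can wrap the check once. This is a minimal sketch that relies only on the `output` and `error` attributes shown above. `InferenceError` and `output_or_raise` are hypothetical names, not part of the bytez package.

```python
class InferenceError(RuntimeError):
    """Raised by this sketch when a result carries an error."""


def output_or_raise(result):
    """Return result.output, or raise InferenceError if result.error is set."""
    if result.error:
        raise InferenceError(str(result.error))
    return result.output


# Usage (requires a real model):
# text = output_or_raise(model.run("Once upon a time there was a"))
```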

Run a Model with Hugging Face Params

Run inference with Hugging Face generation parameters.

input_text = "Once upon a time there was a small little man who"

model_params = {"max_new_tokens": 20, "temperature": 2}

result = model.run(input_text, model_params=model_params)

print(result.output)

# Access error if needed

if result.error:
    print(f"Error occurred: {result.error}")
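A Python dict literal silently keeps only the last value when a key is repeated, so a typo in `model_params` can go unnoticed. One way to guard against that is to build the dict through a small function. The helper `build_model_params` below is a hypothetical sketch; the parameter names are the Hugging Face generation parameters `max_new_tokens`, `min_new_tokens`, and `temperature`.

```python
def build_model_params(max_new_tokens, min_new_tokens=None, temperature=None):
    """Build a model_params dict, rejecting an inconsistent token range."""
    params = {"max_new_tokens": max_new_tokens}
    if min_new_tokens is not None:
        if min_new_tokens > max_new_tokens:
            raise ValueError("min_new_tokens must not exceed max_new_tokens")
        params["min_new_tokens"] = min_new_tokens
    if temperature is not None:
        params["temperature"] = temperature
    return params


# Usage (requires a real model):
# result = model.run(input_text, model_params=build_model_params(20, temperature=2))
```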

Stream the Response

Note: This is only supported for text-generation models.

input_text = "Once upon a time there was a beautiful home where"

model_params = {"max_new_tokens": 20, "min_new_tokens": 5, "temperature": 0.5}

stream = model.run(
    input_text,
    stream=True,
    model_params=model_params,
)

for chunk in stream:
    print(f"Output: {chunk}")
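When the full text is needed after streaming finishes, the chunks can be accumulated while they are displayed. The sketch below assumes each chunk is a piece of text (or converts cleanly with `str`). The helper `collect_stream` is hypothetical and works on any iterable, including the `stream` object above.

```python
def collect_stream(stream, on_chunk=None):
    """Consume an iterable of chunks and return them joined into one string."""
    parts = []
    for chunk in stream:
        text = str(chunk)  # assumption: chunks are text fragments
        if on_chunk is not None:
            on_chunk(text)  # e.g. print each piece as it arrives
        parts.append(text)
    return "".join(parts)


# Usage (requires a real model):
# stream = model.run(input_text, stream=True, model_params=model_params)
# full_text = collect_stream(stream, on_chunk=lambda t: print(t, end=""))
```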

Request a Hugging Face Model Not Yet on Bytez

To request a model that exists on Hugging Face but is not yet on Bytez, you can do the following:

model_id = "openai-community/gpt2"

job_status = client.process(model_id)

print(job_status)

This sends a job to an automated queue. When the job completes, you'll receive an email indicating the model is ready for use with the models API.

Request a Model Not on Hugging Face or Bytez

Please reach out to us and we'll do what's necessary to make other models available!

Please join our Discord or contact us via email at help@bytez.com.

Example snippets

Open our docs for Python snippets.

Feedback

We value your feedback to improve our documentation and services. If you have any suggestions, please join our Discord or contact us via email at help@bytez.com.


File details

Details for the file bytez-3.0.1.tar.gz.

File metadata

- Download URL: bytez-3.0.1.tar.gz

- Upload date:

- Size: 6.4 kB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/5.1.0 CPython/3.8.10

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 88e547eb074a051e8f6fbcb4d79d7af2d2c715245e872bbfa8dcdb205f406d7f |
| MD5 | 01dc80afd6b4d2a0fc03909304285b37 |
| BLAKE2b-256 | a472a15b05fa5656f5fd321bb66ecb80ba50300a1081f06c0bda09271deb9f7f |

File details

Details for the file bytez-3.0.1-py3-none-any.whl.

File metadata

- Download URL: bytez-3.0.1-py3-none-any.whl

- Upload date:

- Size: 9.7 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/5.1.0 CPython/3.8.10

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | aba0c0ded17719e9b285e1a2aad94e456aa5c0fa507046a52efa1305a6d8c196 |
| MD5 | e49c227961220ee1747aee040ea56605 |
| BLAKE2b-256 | 94caac09c265e2fbcbee3bdb287f8fc34d296a00e61b7828a8a7f42e22bed656 |