CatchemAlphaZero: AI techniques for solving games

Project description

Features

Installation

pip install catchem-alpha-zero

Project Overview

CatchemAlphaZero (CAZ) showcases techniques in artificial intelligence for solving two-player games without any human knowledge or input except for the rules of the game. Any two-player deterministic game can, in principle, leverage this framework. Little is needed beyond the ability to:

- get the games current legal moves

- play a move

- determine if the game has terminated.

The project includes:

- Alpha-Beta Minimax which is well suited to games with a small to medium sized decision tree (or games for which one can easily evaluate a position's likelihood of winning)

- Monte Carlo Tree Search which uses random simulation to estimate the value of a state, and biases the search towards paths that seem most promising

- AlphaZero: an attempt to replicate the brilliant work of DeepMind, who used deep convolutional neural networks in conjunction with Monte Carlo Tree SearchTo significantly improve upon the MCTS algorithm. The neural network learns the optimal policy entirely through self-play reinforcement learning.

Supported Games

- Chess

- ConnectX (Connect 4 for boards of arbitrary sizes)

- Tic Tac Toe

Play Chess Against CatchemAlphaZero

The crowning glory of this project is chess. CatchemAlphaZero bundles a GUI application with the wheel, alpha, installed into your virtual environments Scripts folder. To launch the GUI, either click the application within the scripts folder or type alpha into the command line (having activated your virtual environment). The application will launch with a splashscreen presenting the following options:

-

Play as White

-

Play as Black

-

Play as Both

-

Spectate Mode (watch CAZ play against itself)

Key features

- MCTS Num Sims: Increase the size of the Monte Carlo Tree Search to increase the difficulty (1,000 sims corresponds to a chess ELO of about 1,800).

- Show Eval Bar to see how CAZ evaluates the current position

- Asynchronous Ponder: CAZ is fully asynchronous and will ponder on your thinking time. CAZ efficiently recycles the tree search between moves and explores whilst its opponent is thinking. Take time to think but remember that CAZ is thinking too!

- Watch: Click here to watch a demo of the application.

How strong is CAZ?

The current Elo of CAZ is not precisely known (and obviously varies with increasing number of MCTS simulations). The ultimate plan is to integrate CAZ as a Lichess bot. For now, the strength of CAZ has been calculated by playing friends and colleagues who (are far better at chess than me and) have generously offered to play the bot. To date, CAZ has not lost on 1,000 simulations and Chess.com consistently estimates its Elo to be in the range 1,900 to 2,300. My suspicion is that it is at the lower end of this spectrum - like AlphaZero, CAZ is strong at strategic play and closed positions but struggles with tactical play and endgames (where technique matters more).

At the time of writing, the 'state of the art' is to use an Efficiently Updating Sparse Flat Neighborhood Network (SF NNUE). This is a smaller, faster CPU bound network that allows for deeper searches and therefore shines in tactical play as well as strategic play. This project uses a large convolutional ResNet architecture exactly as per DeepMind's specification. It works best on a GPU (but CPU only runs are supported as well).

A friend (white) vs CAZ (Black) 0-1 under classical conditions. CAZ wins via resignation. Chess.com estimated the Elo scores as 1,800 (white), 2,350 (black).

It's possible to train CAZ directly but if you'd like to use the weights that I have produced, you can download them from here. CAZ assumes that the weights are saved in a weights folder within the active current directory.

How it works

CatchemAlphaZero is a project that explores artificial intelligence techniques for two player games. The project started with minimax, which was then extended to alpha-beta minimax. MCTS was added to support games where leaf nodes could not be reached via brute force search. AlphaZero extends MCTS by using neural networks to both guide the search and provide and evaluation of each position rather than entering the rollout phase.

CAZ produces beautiful visualisations for each game. In particular, it is possible to render the full tree search as well as the policy & evaluation associated with each state. The image below shows the output of a tree search with 10 simulations (please note that CAZ assumes that you have the GraphViz application already installed.)

Rendered with ❤️ using CAZ. See the tutorial for details on how.

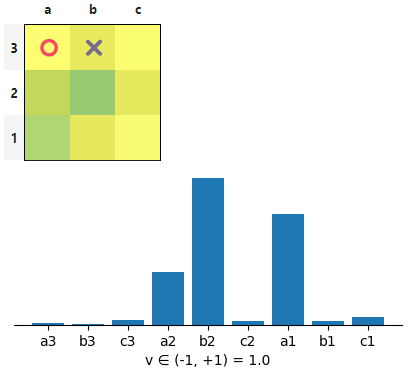

CAZ also renders each game state as HTML and will show the policy associated with each state as an HTML grid with a heatmap overlay. The darker squares correspond to squares favoured by the policy. The evaluation shows that CAZ believes it has a 100% chance of winning from here and correctly identifies the three moves that can lead to a win.

Heatmap policy for Tic-Tac-Toe. States and policied states are understood natively by IPython.

Learning through self-play reinforcement learning

CAZ is able to learn games entirely through self-play reinforcement learning. The idea is to use MCTS to explore a sample of the search space that seems most promising. At each step, the MCTS is guided by a policy retrieved from the neural network and evaluates a position using the neural network's evalution result. The neural network is a two-headed (policy head and value head) ResNet architecture that is described in detail in DeepMind's Nature paper. Below, is an illustration of the architecture used for TicTacToe. For chess, the architecture uses 19 residual network blocks:

Visualisation of the network architecture for TicTacToe using Tensorboard.

To improve the neural network, CAZ learns through self-play. The steps are:

- Play a game against itself relying on MCTS and the neural network

- At each step, improve the current policy via MCTS - MCTS is a policy improvement operator

- Record the outcome of the match after the game is played to completion

- Play 100s of matches and batch the results together

- Train the neural network against the match results. In particular, learn an improved policy based on the MCTS. Additionally, improve the network's evaluation function by assigning a score {-1, 0, +1} to each state depending upon the final outcome.

If you'd like to train the network yourself, check out the command line interface below.

Command line Interface Features

CAZ exposes five entry points via the command line: run, learn, kaggle, super, hyper

- Run CAZ from the terminal to see it play a game

- Learn through self-play if you wish to train a netowrk

- Kaggle enables you to run a Kaggle compliant agent that complies with the Kaggle Simulation Competitions API

- super trains a neural network in chess through supervised learning. Whilst it is entirely possible to learn solely through self-play reinforcement learning, it would take a very long time to do this exclusively for chess (Deepmind used 5,000 TPUs for training which is out of my budget!). Supervised learning was used to accelerate the learning process specifically for chess but not for any other game.

- Hyper performs a search over CAZ's various hyperparameters to ensure that it is learning optimally as training progresses

Examples of how to run the various entry points are shown below:

caz run --game=tic --player1=mcts --player2=az # MCTS vs AlphaZero algorithm.caz run --game=connectx --player1=mcts --player2=mctscaz run --game=chess --player1=az --player2=azcaz learn --game=connectxcaz kaggle --game=connectx --player1=mcts --player2=mctscaz supercaz hyper --game=connectx --gen=53

Chess Representation

CAZ follows Deepmind's proposal for representing input features and the action space per the follow up paper. In particular, a state is represented by an 8x8x20 input tensor where the 20 planes correspond to:

- Current player's pawn positions

- Current player's rook positions

- Current player's knight positions

- Current player's bishop positions

- Current player's queen positions

- Opposing player's king positions

- Opposing player's pawn positions

- Opposing player's rook positions

- Opposing player's knight positions

- Opposing player's bishop positions

- Opposing player's queen positions

- Opposing player's king positions

- 8x8 matrix of 0's or 1's denoting the player's turn (all zeroes or all ones)

- 8x8 matrix of 0's or 1's denoting current player's kingside castling rights

- 8x8 matrix of 0's or 1's denoting current player's queenside castling rights

- 8x8 matrix of 0's or 1's denoting opposing player's kingside castling rights

- 8x8 matrix of 0's or 1's denoting opposing player's queenside castling rights

- 8x8 matrix of showing the halfmove clock

- indicators for the position of any en passant squares

- 8x8 matrix denoting whether the state is a two-fold repetition

The action space is an 8x8x73 tensor where each 8x8 plane corresponds to:

- x7 N moves (the maximum number of squares you can move north is 7)

- x7 NE moves (again, moving 1-7 moves in a north-east direction)

- x7 E moves

- x7 SE moves

- x7 S moves

- x7 SW moves

- x7 W moves

- x7 NW moves

- Knight move NNE (i.e. one square east and two north)

- Knight move ENE (i.e. two square east and two north)

- Knight move ESE

- Knight move SSE

- Knight move SSW

- Knight move WSW

- Knight move WNW

- Knight move NNW

- Promote NW Knight (underpromote to a knight by capturing north-west)

- Promote N Knight (underpromote to a knight by advancing north)

- Promote NE Knight

- Promote NW Rook

- Promote N Rook

- Promote NE Rook

- Promote NW Bishop

- Promote N Bishop

- Promote NE Bishop

Tutorial

CAZ is not, nor ever intends to be a top chess engine*. Rather, it is a showcase in AI techniques and my attempt to reproduce Deepmind's work. If you'd like to know more about the inner working of self-play reinforcement learning, check out the tutorials. They contain beautifully rendered visualisations to help you understand the way the project works. The tutorials have been organised into four sections:

- MCTS Demo which showcases MCTS including the UCB formula. You can render search trees to see it in action

- Convolutional Layers which shows how convolutional layers work and can combine to transform the 'images' of the game

- Alpha Zero where you can see CatchemAlphaZero's policies and play against it within an IPython setting

- Chess - which illustrates the exact details of the chess setup. You can interrogate the network and play againt it here (although GUI app preferred)

* However, I am a glutton for punishment and will probably rewrite this project in C++ to make it stronger at some point...

Thanks for making it this far!

CatchemAL

Project details

Download files

Download the file for your platform. If you're not sure which to choose, learn more about installing packages.

Source Distribution

Built Distribution

Hashes for catchem_alpha_zero-0.0.4-py3-none-any.whl

| Algorithm | Hash digest | |

|---|---|---|

| SHA256 | 4dd881a3a560ad5721f8ee4a853c7fd7496a71a1b4108c98f7483c6038c360cc |

|

| MD5 | 7d40c7d07147092aa3b01102a5644465 |

|

| BLAKE2b-256 | 4d2a9830bbceeac994c2bd77518153ab8f12b0444f3bd778695d9cbc83463365 |