Cognee is a library for enriching LLM context with a semantic layer for better understanding and reasoning.


Cognee - Accurate and Persistent AI Memory

Demo · Docs · Learn More · Join Discord · Join r/AIMemory · Community Plugins & Add-ons

Use your data to build personalized and dynamic memory for AI Agents. Cognee lets you replace RAG with scalable and modular ECL (Extract, Cognify, Load) pipelines.

🌐 Available Languages : Deutsch | Español | Français | 日本語 | 한국어 | Português | Русский | 中文

About Cognee

Cognee is an open-source tool and platform that transforms your raw data into persistent and dynamic AI memory for Agents. It combines vector search with graph databases to make your documents both searchable by meaning and connected by relationships. Cognee offers default memory creation and search, which we describe below, but you can also build your own!

:star: Help us reach more developers and grow the cognee community. Star this repo!

Cognee Open Source:

- Interconnects any type of data — including past conversations, files, images, and audio transcriptions

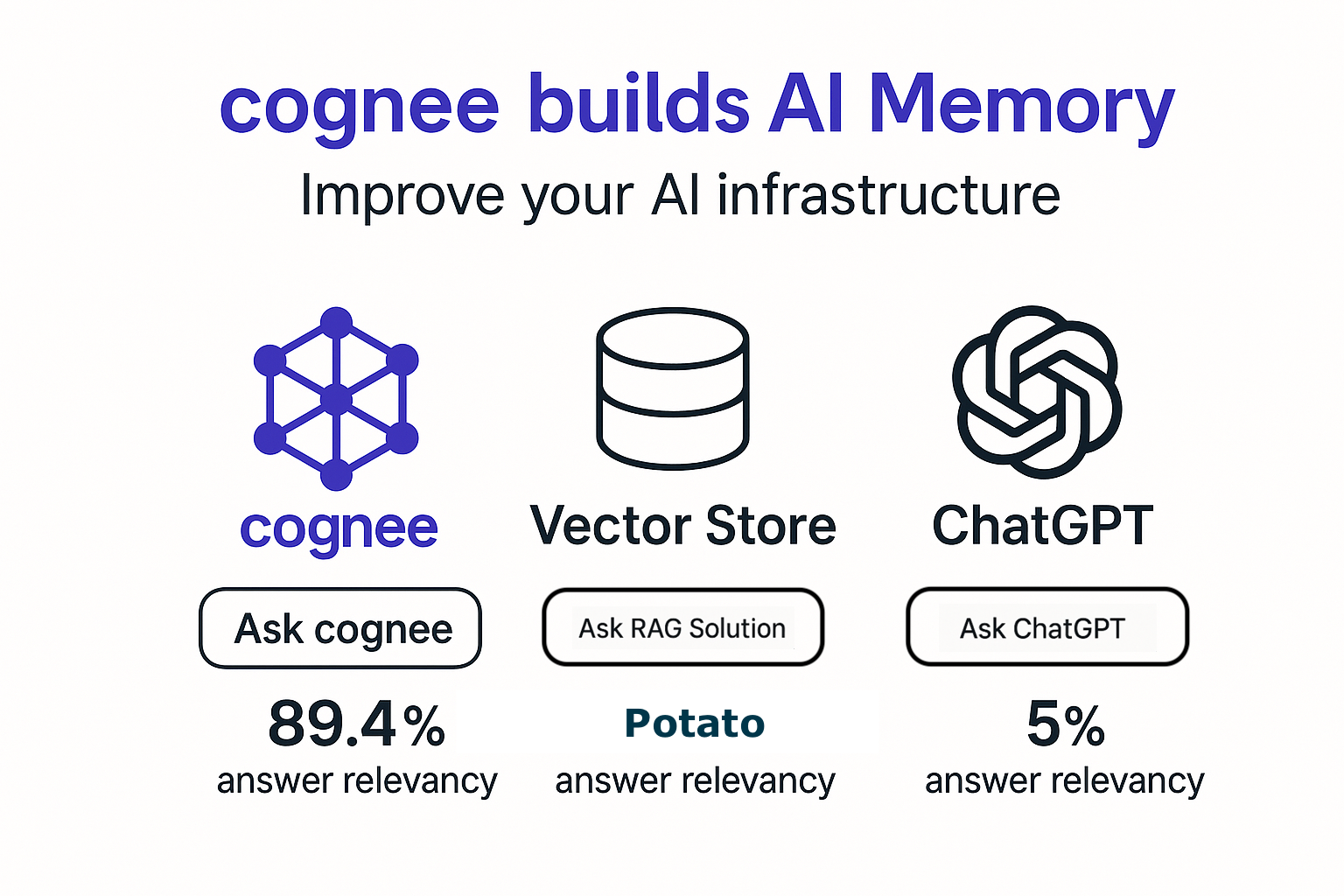

- Replaces traditional RAG systems with a unified memory layer built on graphs and vectors

- Reduces developer effort and infrastructure cost while improving quality and precision

- Provides Pythonic data pipelines for ingestion from 30+ data sources

- Offers high customizability through user-defined tasks, modular pipelines, and built-in search endpoints
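To make the "graphs and vectors" idea concrete, here is a toy sketch in plain Python. It is not Cognee's implementation: the three-dimensional embeddings, the `EDGES` map, and the `recall` helper are all invented for illustration. It picks the note closest in meaning to a query by cosine similarity, then follows graph edges from that note to pull in related ones.

```python
import math

# Hypothetical hand-made embeddings; a real system would get these from a model.
EMBEDDINGS = {
    "invoice-2024": [0.9, 0.1, 0.0],
    "contract-acme": [0.7, 0.3, 0.1],
    "holiday-photo": [0.0, 0.2, 0.9],
}

# Hypothetical relationships between notes (the "graph" part).
EDGES = {
    "invoice-2024": ["contract-acme"],
    "contract-acme": ["invoice-2024"],
    "holiday-photo": [],
}


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def recall(query_vector):
    """Return the closest note by meaning, followed by its graph neighbours."""
    best = max(EMBEDDINGS, key=lambda name: cosine(query_vector, EMBEDDINGS[name]))
    return [best] + EDGES[best]


print(recall([1.0, 0.0, 0.0]))
```

The vector step finds a starting point by similarity; the graph step adds notes that are linked to it even when their embeddings are less similar to the query.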

Basic Usage & Feature Guide

To learn more, check out this short, end-to-end Colab walkthrough of Cognee's core features.

Quickstart

Let’s try Cognee in just a few lines of code. For detailed setup and configuration, see the Cognee Docs.

Prerequisites

- Python 3.10 to 3.13
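A quick way to check the prerequisite from inside Python is sketched below. It assumes "3.10 to 3.13" means any 3.10.x through 3.13.x release; the `is_supported` helper is an illustrative name, not something Cognee provides.

```python
import sys

# Supported range as stated in the prerequisites: 3.10 through 3.13 (assumed inclusive).
MIN_VERSION = (3, 10)
MAX_VERSION = (3, 13)


def is_supported(version=None):
    """Return True when (major, minor) falls inside the supported range."""
    major, minor = (version or sys.version_info)[:2]
    return MIN_VERSION <= (major, minor) <= MAX_VERSION


if not is_supported():
    print(f"Python {sys.version_info.major}.{sys.version_info.minor} is outside 3.10-3.13")
```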

Step 1: Install Cognee

You can install Cognee with pip, poetry, uv, or your preferred Python package manager.

uv pip install cognee

Step 2: Configure the LLM

import os

os.environ["LLM_API_KEY"] = "YOUR OPENAI_API_KEY"

Alternatively, create a .env file using our template.

To integrate other LLM providers, see our LLM Provider Documentation.
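If you would rather not hardcode the key in source, one option is to copy it from a variable that is already exported in your shell. The sketch below assumes the key lives in `OPENAI_API_KEY`; that variable name and the `configure_llm_key` helper are illustrative choices, not part of Cognee.

```python
import os


def configure_llm_key(env=os.environ, source_var="OPENAI_API_KEY"):
    """Populate LLM_API_KEY from source_var unless it is already set."""
    if env.get("LLM_API_KEY"):
        return True  # Already configured; leave it untouched.
    key = env.get(source_var)
    if key:
        env["LLM_API_KEY"] = key
        return True
    return False


if not configure_llm_key():
    print("Set LLM_API_KEY (or OPENAI_API_KEY) before running Cognee.")
```

Passing the mapping as a parameter keeps the helper easy to test with a plain dictionary instead of the real process environment.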

Step 3: Run the Pipeline

Cognee takes your documents, generates a knowledge graph from them, and then answers queries against that graph using the relationships it has connected.

Now, run a minimal pipeline:

import cognee
import asyncio
from pprint import pprint


async def main():
    # Add text to cognee
    await cognee.add("Cognee turns documents into AI memory.")

    # Generate the knowledge graph
    await cognee.cognify()

    # Add memory algorithms to the graph
    await cognee.memify()

    # Query the knowledge graph
    results = await cognee.search("What does Cognee do?")

    # Display the results
    for result in results:
        pprint(result)


if __name__ == '__main__':
    asyncio.run(main())

As you can see, the output is generated from the document we previously stored in Cognee:

Cognee turns documents into AI memory.
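The add, cognify, and search steps can be wrapped in a small reusable coroutine. The sketch below is hedged: `remember_and_ask` and `FakeMemory` are hypothetical names, and the helper only relies on the three calls shown in the pipeline above, each used with the same single argument. `FakeMemory` is a stand-in so the example runs without an LLM key; in principle the imported `cognee` module could be passed as `memory` instead, since the pipeline shows it exposing the same three coroutines.

```python
import asyncio


async def remember_and_ask(memory, texts, question):
    """Add each text, build the graph once, then run a single search."""
    for text in texts:
        await memory.add(text)
    await memory.cognify()
    return await memory.search(question)


class FakeMemory:
    """Stand-in used only to exercise the helper without an LLM key."""

    def __init__(self):
        self.texts = []
        self.cognified = False

    async def add(self, text):
        self.texts.append(text)

    async def cognify(self):
        self.cognified = True

    async def search(self, question):
        # Echo the stored texts, but only after cognify() has run.
        return list(self.texts) if self.cognified else []


if __name__ == "__main__":
    answers = asyncio.run(
        remember_and_ask(
            FakeMemory(),
            ["Cognee turns documents into AI memory."],
            "What does Cognee do?",
        )
    )
    print(answers)
```

Calling `cognify()` once after all the adds, rather than after each one, keeps graph construction to a single pass over the new data in this sketch.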

Use the Cognee CLI

As an alternative, you can get started with these essential commands:

cognee-cli add "Cognee turns documents into AI memory."

cognee-cli cognify

cognee-cli search "What does Cognee do?"

cognee-cli delete --all

To open the local UI, run:

cognee-cli -ui
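The add, cognify, and search commands above can also be driven from a script. This is a minimal sketch using only the subcommands shown in this section; the `cli_steps` and `run_steps` helpers are hypothetical, and the script does nothing if `cognee-cli` is not on your PATH.

```python
import shutil
import subprocess


def cli_steps(text, question):
    """Build argv lists for the add, cognify, and search commands."""
    return [
        ["cognee-cli", "add", text],
        ["cognee-cli", "cognify"],
        ["cognee-cli", "search", question],
    ]


def run_steps(steps):
    """Run each command in order, stopping at the first failure."""
    for argv in steps:
        subprocess.run(argv, check=True)


if __name__ == "__main__":
    steps = cli_steps("Cognee turns documents into AI memory.", "What does Cognee do?")
    if shutil.which("cognee-cli"):
        run_steps(steps)
    else:
        print("cognee-cli not found on PATH; install Cognee first.")
```

Passing arguments as lists avoids shell quoting problems when the text or question contains spaces or quotes.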

Demos & Examples

See Cognee in action:

Persistent Agent Memory

Cognee Memory for LangGraph Agents

Simple GraphRAG

Cognee with Ollama

Community & Support

Contributing

We welcome contributions from the community! Your input helps make Cognee better for everyone. See CONTRIBUTING.md to get started.

Code of Conduct

We're committed to fostering an inclusive and respectful community. Read our Code of Conduct for guidelines.

Research & Citation

We recently published a research paper on optimizing knowledge graphs for LLM reasoning:

@misc{markovic2025optimizinginterfaceknowledgegraphs,
  title={Optimizing the Interface Between Knowledge Graphs and LLMs for Complex Reasoning},
  author={Vasilije Markovic and Lazar Obradovic and Laszlo Hajdu and Jovan Pavlovic},
  year={2025},
  eprint={2505.24478},
  archivePrefix={arXiv},
  primaryClass={cs.AI},
  url={https://arxiv.org/abs/2505.24478},
}


Download files


File details

Details for the file cognee-0.5.2.tar.gz.

File metadata

- Download URL: cognee-0.5.2.tar.gz

- Upload date:

- Size: 15.3 MB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: uv/0.9.29 {"installer":{"name":"uv","version":"0.9.29","subcommand":["publish"]},"python":null,"implementation":{"name":null,"version":null},"distro":{"name":"Ubuntu","version":"24.04","id":"noble","libc":null},"system":{"name":null,"release":null},"cpu":null,"openssl_version":null,"setuptools_version":null,"rustc_version":null,"ci":true}

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 9a3c08bf524d0e09c2c10a9ebaad49f259d8ccbf7071308d2ef66dc5143482c3 |
| MD5 | 9a0e5a515e76379e743becce86e848ce |
| BLAKE2b-256 | c1e7257c56ec2c87332dbabab33237a25d49c5b24db145f4997cdf74b39988ac |

File details

Details for the file cognee-0.5.2-py3-none-any.whl.

File metadata

- Download URL: cognee-0.5.2-py3-none-any.whl

- Upload date:

- Size: 1.5 MB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: uv/0.9.29 {"installer":{"name":"uv","version":"0.9.29","subcommand":["publish"]},"python":null,"implementation":{"name":null,"version":null},"distro":{"name":"Ubuntu","version":"24.04","id":"noble","libc":null},"system":{"name":null,"release":null},"cpu":null,"openssl_version":null,"setuptools_version":null,"rustc_version":null,"ci":true}

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 1c9bc84b07a72c275c963d1c3487631cf8991c8d4ba59dffcefa2bc946ef099c |
| MD5 | 75c87695e8d353de331fc9ab0ebbc306 |
| BLAKE2b-256 | 6aa31714c1e8d83f4bd42fd3293f8b770d6d3dddcee8e06496c2461eeadc7803 |
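To check a downloaded file against one of the SHA256 digests listed above, a short script with the standard `hashlib` module is enough. The `sha256_of` and `matches` helpers below are illustrative names, and the local file path is whatever you saved the download as.

```python
import hashlib


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so large archives are not loaded into memory at once."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches(path, expected_hex):
    """Compare the file's SHA256 with an expected digest, ignoring case and whitespace."""
    return sha256_of(path) == expected_hex.strip().lower()
```

For example, `matches("cognee-0.5.2.tar.gz", "9a3c08bf524d0e09c2c10a9ebaad49f259d8ccbf7071308d2ef66dc5143482c3")` should return True for an intact copy of the source distribution saved under that name in the current directory.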