Bulk downloader for multiple file hosts and forum sites

cyberdrop-dl

Bulk downloader for multiple file hosts

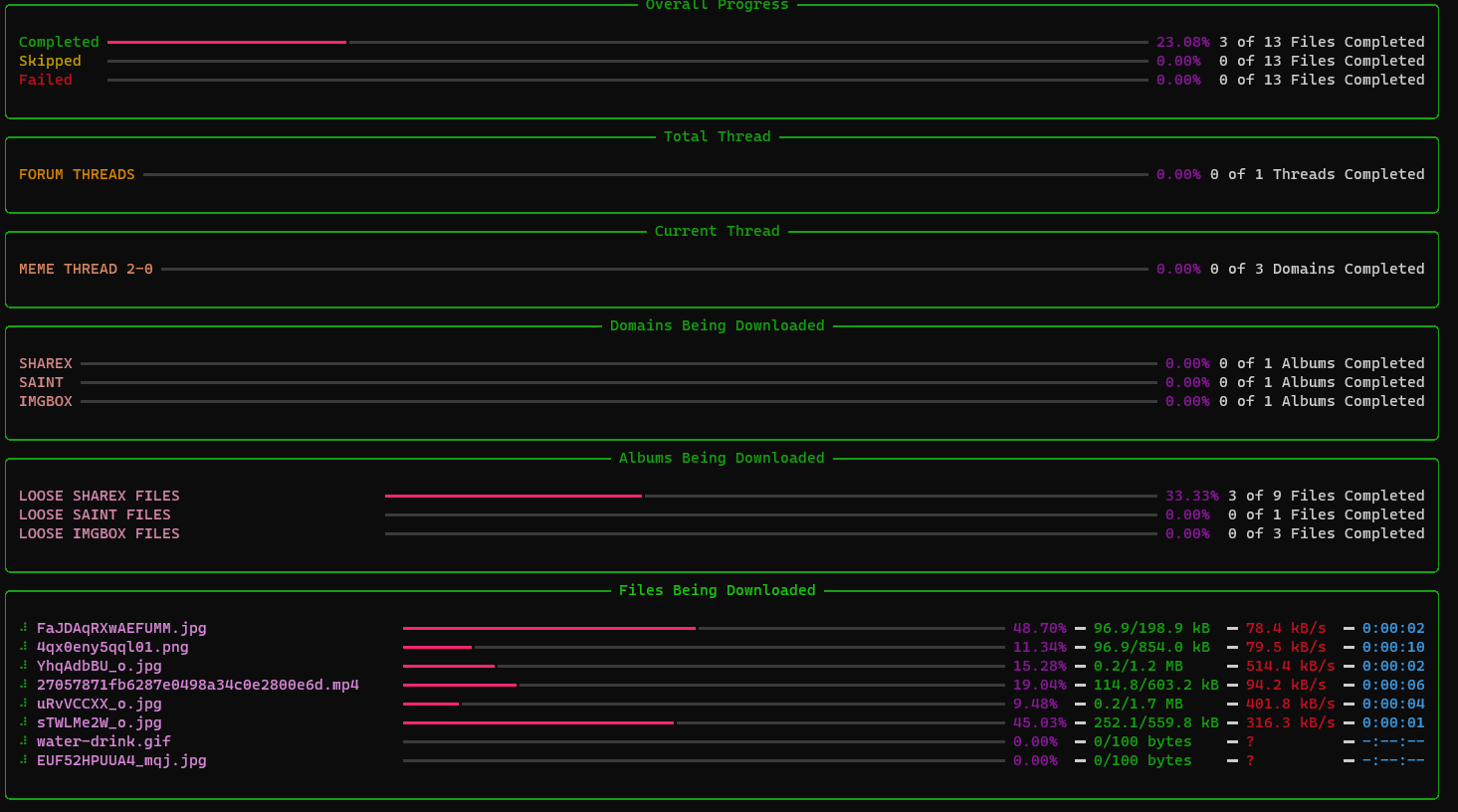

Brand new and improved! Cyberdrop-DL now has an updated look, and it also downloads from different domains simultaneously.

Support Cyberdrop-DL Development

If you want to support me and my effort you can buy me a coffee or send me some crypto:

BTC: bc1qzw7l9d8ju2qnag3skfarrd0t5mkn0zyapnrcsn

ETH: 0xf36ef155C43Ed220BfBb2CBe9c5Ae172A8640e9B

XMR: 46vMP5MXVZqQeGzkA1mbX9WQKU8fbWRBJGAktDcjYkCMRDY7HMdLzi1DFsHCPLgms968cyUz1gCWVhy9cZir9Ae7M6anQ8Q

More Information

Read the Wiki!

Supported Sites

| Website | Supported Link Types |

|---|---|

| Anonfiles | Download page: Anonfiles.com/... |

| Bayfiles | Download page: Bayfiles.com/... |

| Bunkr (ru/su/la) | Albums: bunkr.ru/a/... Direct Videos: stream.bunkr.ru/v/... Direct links: cdn.bunkr.ru/... Direct links: i.bunkr.ru/... Direct links: files.bunkr.ru/... Direct links: media-files.bunkr.ru/... |

| Coomer.party | Profiles: coomer.party/... Thumbnail Links: coomer.party/thumbnail/... Data Links: coomer.party/data/... coomer.party/.../post/... |

| Cyberdrop | Albums: cyberdrop.me/a/... Direct Videos: fs-0#.cyberdrop.me/... Direct Videos: f.cyberdrop.me/... Direct Images: img-0#.cyberdrop.me/... Direct Images: f.cyberdrop.me/... Also works with .cc, .to, and .nl |

| Cyberfile | folders: cyberfile.su/folder/... shared: cyberfile.su/shared/... Direct: cyberfile.su/... |

| E-Hentai | Albums: e-hentai.org/g/... Posts: e-hentai.org/s/... |

| Erome | Albums: erome.com/a/... |

| Fapello | Models: fapello.com/... |

| Gallery.DeltaPorno.com | Albums: Gallery.DeltaPorno.com/album/... Direct Images: Gallery.DeltaPorno.com/image/... User Profile: Gallery.DeltaPorno.com/#USER# All User Albums: Gallery.DeltaPorno.com/#USER#/albums |

| GoFile | Albums: gofile.io/d/... |

| Gfycat | Gif: gfycat.com/... |

| HGameCG | Albums: hgamecg.com/.../index.html |

| ImgBox | Albums: imgbox.com/g/... Direct Images: images#.imgbox.com/... Single Files: imgbox.com/... |

| IMG.Kiwi | Albums: img.kiwi/album/... Direct Images: img.kiwi/image/... User Profile: img.kiwi/#USER# All User Albums: img.kiwi/#USER#/albums |

| jpg.church, jpg.fish, jpg.fishing, jpg.pet | Albums: jpg.church/album/... Direct Images: jpg.church/image/... User Profile: jpg.church/#USER# All User Albums: jpg.church/#USER#/albums |

| LoveFap | Albums: lovefap.com/a/... Direct Images: s*.lovefap.com/content/photos/... Videos: lovefap.com/video/... |

| NSFW.XXX | Profile: nsfw.xxx/user/... Post: nsfw.xxx/post/... |

| PimpAndHost | Albums: pimpandhost.com/album/... Single Files: pimpandhost.com/image/... |

| PixelDrain | Albums: Pixeldrain.com/l/... Single Files: Pixeldrain.com/u/... |

| Pixl | Albums: pixl.li/album/... Direct Images: pixl.li/image/... User Profile: pixl.li/#USER# All User Albums: pixl.li/#USER#/albums |

| Postimg.cc | Albums: postimg.cc/gallery/... Direct Images: postimg.cc/... |

| NudoStar | Thread: nudostar.com/forum/threads/... Continue from (will download this post and after): nudostar.com/forum/threads/...post-NUMBER |

| SimpCity | Thread: simpcity.st/threads/... Continue from (will download this post and after): simpcity.st/threads/...post-NUMBER |

| SocialMediaGirls | Thread: forum.socialmediagirls.com/threads/... Continue from (will download this post and after): forum.socialmediagirls.com/threads/...post-NUMBER |

| XBunker | Thread: xbunker.nu/threads/... Continue from (will download this post and after): xbunker.nu/threads/...post-NUMBER |

| XBunkr | Album: xbunkr.com/a/... Direct Links: media.xbunkr.com/... |

Reminder: use the full link, including the https:// prefix.
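As a rough illustration of the reminder about full links, the sketch below separates links that carry an http(s) scheme from those that do not. The helper names `is_full_link` and `split_links` are hypothetical and not part of cyberdrop-dl, and the album ID is a placeholder.

```python
from urllib.parse import urlparse


def is_full_link(link: str) -> bool:
    """Return True if the link has an http(s) scheme and a host name."""
    parts = urlparse(link.strip())
    return parts.scheme in ("http", "https") and bool(parts.netloc)


def split_links(lines):
    """Split lines into (full, incomplete) lists, skipping blank lines."""
    full, incomplete = [], []
    for line in lines:
        link = line.strip()
        if not link:
            continue
        (full if is_full_link(link) else incomplete).append(link)
    return full, incomplete


full, incomplete = split_links([
    "https://cyberdrop.me/a/example",  # placeholder album ID, full link
    "cyberdrop.me/a/example",          # same link with the scheme missing
])
print("ok:", full)
print("missing https://:", incomplete)
```

Running a check like this over a link list before starting a download may catch links that were pasted without their prefix.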

Information

Requires Python 3.7 or higher (3.10 recommended)

You can get Python from here: https://www.python.org/downloads/

Make sure you tick the check box for "Add python to path"

Mac users will also likely need to open terminal and execute the following command: xcode-select --install
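If you are unsure which Python version is on your path, a small check along these lines can tell you how it compares with the requirement stated above. The function name `describe_python` and its labels are made up for this sketch.

```python
import sys

MINIMUM = (3, 7)       # minimum version stated in this README
RECOMMENDED = (3, 10)  # recommended version stated in this README


def describe_python(version=None):
    """Classify a (major, minor) tuple against the versions named above."""
    version = tuple(version or sys.version_info[:2])
    if version < MINIMUM:
        return "too old"
    if version < RECOMMENDED:
        return "supported"
    return "meets recommendation"


# Report on the interpreter that is running this script.
print(sys.version.split()[0], "->", describe_python())
```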

Script Method

Go to the releases page and download the Cyberdrop_DL.zip file. Extract it to wherever you want the program to be.

Put the links in the URLs.txt file then run Start Windows.bat (Windows) or Start Mac.command (OS X) or Start Linux.sh (Linux).

** Mac users will need to run the command chmod +x 'Start Mac.command' to make the file executable.

CLI Method

Run pip3 install cyberdrop-dl in command prompt/terminal

Advanced users may want to use virtual environments (via pipx), but it's NOT required.

- Run cyberdrop-dl once to generate an empty URLs.txt file.
- Copy and paste your links into URLs.txt. Each link you add has to go on its own line (paste link, press enter, repeat).
- Run cyberdrop-dl again. It will begin to download everything.
- Enjoy!
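If your links come from a script rather than from copy and paste, the "one link per line" rule above can be sketched as follows. The helper `write_links` is hypothetical, the IDs are placeholders, and the demo writes into a temporary folder so it does not touch a real URLs.txt.

```python
import tempfile
from pathlib import Path


def write_links(path, links):
    """Write links one per line, dropping blanks and duplicates, keeping order."""
    seen = set()
    unique = []
    for link in links:
        link = link.strip()
        if link and link not in seen:
            seen.add(link)
            unique.append(link)
    Path(path).write_text("".join(f"{link}\n" for link in unique), encoding="utf-8")
    return unique


# Demo in a temporary folder; point the path at your own URLs.txt instead.
with tempfile.TemporaryDirectory() as tmp:
    target = Path(tmp) / "URLs.txt"
    written = write_links(target, [
        "https://gofile.io/d/abc",      # placeholder ID
        "",                             # blank entries are skipped
        "https://gofile.io/d/abc",      # duplicates are dropped
        " https://erome.com/a/xyz ",    # surrounding spaces are trimmed
    ])
    content = target.read_text(encoding="utf-8")

print(content, end="")
```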

Arguments & Config

If you know what you're doing, you can use the available options to adjust how the program runs.

You can read more about all of these options here, as they correspond directly to the config options.
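For anyone driving the program from a script, here is a minimal sketch that assembles an argument list from a few of the flags shown in the help output in this section (`-i`, `-o`, `--attempts`, `--exclude-videos`). The function `build_command` is an illustration only, and the file and folder names are placeholders.

```python
import shlex


def build_command(input_file, output_folder, attempts=None, exclude_videos=False):
    """Assemble an argument list for cyberdrop-dl from a few documented flags."""
    command = ["cyberdrop-dl", "-i", str(input_file), "-o", str(output_folder)]
    if attempts is not None:
        command += ["--attempts", str(attempts)]
    if exclude_videos:
        command.append("--exclude-videos")
    return command


command = build_command("URLs.txt", "Downloads", attempts=5, exclude_videos=True)

# Print a shell-safe version of the command for inspection.
print(" ".join(shlex.quote(arg) for arg in command))

# To actually run it, pass the list to subprocess.run(command, check=True).
```

Keeping the arguments as a list, rather than one string, avoids quoting problems when a folder name contains spaces.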

$ cyberdrop-dl -h

usage: cyberdrop-dl [-h] [-V] [-i INPUT_FILE] [-o OUTPUT_FOLDER] [--log-file LOG_FILE] [--threads THREADS] [--attempts ATTEMPTS] [--include-id] [--exclude-videos] [--exclude-images] [--exclude-audio] [--exclude-other] [--ignore-history] [--simpcity-username "USERNAME"] [--simpcity-password "PASSWORD"] [--skip SITE] [link ...]

Bulk downloader for multiple file hosts

positional arguments:

link link to content to download (passing multiple links is supported)

optional arguments:

-h, --help show this help message and exit

-V, --version show program's version number and exit

-i INPUT_FILE, --input-file INPUT_FILE file containing links to download

-o OUTPUT_FOLDER, --output-folder OUTPUT_FOLDER folder to download files to

--config-file config file to read arguments from

--db-file history database file to write to

--errored-download-urls-file csv file to write failed download information to

--errored-scrape-urls-file csv file to write failed scrape information to

--log-file log file to write to

--output-last-forum-post-file text file to output last scraped post from a forum thread for re-feeding into CDL

--unsupported-urls-file csv file to output unsupported links into

--exclude-audio skip downloading of audio files

--exclude-images skip downloading of image files

--exclude-other skip downloading of other files

--exclude-videos skip downloading of video files

--ignore-cache ignores cached scrape history from previous runs

--ignore-history ignores previous download history

--only-hosts only allows downloads and scraping from these hosts

--skip-hosts doesn't allow downloads and scraping from these hosts

--allow-insecure-connections allows insecure connections from content hosts

--attempts number of attempts to download each file

--block-sub-folders block sub folders from being created

--disable-attempt-limit disables the attempt limitation

--include-id include the ID in the download folder name

--max-concurrent-threads number of threads to download simultaneously

--max-concurrent-domains number of domains to download simultaneously

--max-concurrent-albums number of albums to download simultaneously

--max-concurrent-downloads-per-domain number of simultaneous downloads per domain

--skip-download-mark-completed sets the scraped files as downloaded without downloading

--output-errored-urls sets the failed urls to be output to the errored urls file

--output-unsupported-urls sets the unsupported urls to be output to the unsupported urls file

--proxy HTTP/HTTPS proxy used for downloading, format [protocol]://[ip]:[port]

--remove-bunkr-identifier removes the bunkr added identifier from output filenames

--required-free-space required free space (in gigabytes) for the program to run

--sort-downloads sorts downloaded files after downloads have finished

--sort-directory folder to sort downloaded files into

--sorted-audio schema to sort audio

--sorted-images schema to sort images

--sorted-others schema to sort other

--sorted-videos schema to sort videos

--connection-timeout number of seconds to wait attempting to connect to a URL during the downloading phase

--ratelimit limit on requests made during scraping, in requests per second

--throttle delay between requests during the downloading phase, in seconds

--output-last-forum-post outputs the last post of a forum scrape to use as a starting point for future runs

--scrape-single-post scrapes a single post from a forum thread

--separate-posts separates forum scraping into folders by post number

--gofile-api-key api key for premium gofile

--gofile-website-token website token for gofile

--pixeldrain-api-key api key for premium pixeldrain

--nudostar-username username to login to nudostar

--nudostar-password password to login to nudostar

--simpcity-username username to login to simpcity

--simpcity-password password to login to simpcity

--socialmediagirls-username username to login to socialmediagirls

--socialmediagirls-password password to login to socialmediagirls

--xbunker-username username to login to xbunker

--xbunker-password password to login to xbunker

--apply-jdownloader enables sending unsupported URLs to a running jdownloader2 instance to download

--jdownloader-username username to login to jdownloader

--jdownloader-password password to login to jdownloader

--jdownloader-device device name to login to for jdownloader

--hide-new-progress disables the new rich progress entirely and uses older methods

--hide-overall-progress removes overall progress section while downloading

--hide-forum-progress removes forum progress section while downloading

--hide-thread-progress removes thread progress section while downloading

--hide-domain-progress removes domain progress section while downloading

--hide-album-progress removes album progress section while downloading

--hide-file-progress removes file progress section while downloading

--refresh-rate changes the refresh rate of the progress table

--visible-rows-threads number of visible rows to use for the threads table

--visible-rows-domains number of visible rows to use for the domains table

--visible-rows-albums number of visible rows to use for the albums table

--visible-rows-files number of visible rows to use for the files table

--only-hosts and --skip-hosts can use: "anonfiles", "bayfiles", "bunkr", "coomer.party", "cyberdrop", "cyberfile", "e-hentai", "erome", "fapello", "gfycat", "gallery.deltaporno.com", "gofile", "hgamecg", "img.kiwi", "imgbox", "jpg.church", "jpg.fish", "kemono.party", "lovefap", "nsfw.xxx", "nudostar", "pimpandhost", "pixeldrain", "pixl.li", "postimg", "saint", "simpcity", "socialmediagirls", "xbunker", "xbunkr"
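Since --only-hosts and --skip-hosts only accept the names listed above, a quick check for typos might look like the sketch below. The helper `unknown_hosts` is hypothetical. It only validates names and makes no assumption about how the flags expect multiple values to be passed.

```python
# Host names copied from the list above.
KNOWN_HOSTS = frozenset({
    "anonfiles", "bayfiles", "bunkr", "coomer.party", "cyberdrop",
    "cyberfile", "e-hentai", "erome", "fapello", "gfycat",
    "gallery.deltaporno.com", "gofile", "hgamecg", "img.kiwi", "imgbox",
    "jpg.church", "jpg.fish", "kemono.party", "lovefap", "nsfw.xxx",
    "nudostar", "pimpandhost", "pixeldrain", "pixl.li", "postimg",
    "saint", "simpcity", "socialmediagirls", "xbunker", "xbunkr",
})


def unknown_hosts(requested):
    """Return the requested names that are not in the documented list."""
    return [name for name in requested if name.strip().lower() not in KNOWN_HOSTS]


# "xbunkerr" is a deliberate typo to show what gets flagged.
print(unknown_hosts(["bunkr", "gofile", "xbunkerr"]))
```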

Hashes for cyberdrop_dl-4.2.59-py3-none-any.whl

| Algorithm | Hash digest |

|---|---|

| SHA256 | 0a506a71bb488b0754c924232a70905421a8bd3adda24c1d7394c09cbe6ca755 |

| MD5 | dda24998fee19143edc01de17b6ec788 |

| BLAKE2b-256 | 21470c9b7149e0b9fce720f1d401e5213da7aed19064e7ae1ab1dce6b38ed9f3 |
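To compare a downloaded wheel against the SHA256 digest listed above, something like the following sketch can be used. The function names are made up for this example, and the wheel is assumed to be in the current folder under the file name shown above.

```python
import hashlib

# SHA256 digest listed above for cyberdrop_dl-4.2.59-py3-none-any.whl.
EXPECTED_SHA256 = "0a506a71bb488b0754c924232a70905421a8bd3adda24c1d7394c09cbe6ca755"


def sha256_of(path, chunk_size=65536):
    """Compute the SHA256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_expected(path, expected=EXPECTED_SHA256):
    """Return True if the file's SHA256 digest equals the expected one."""
    return sha256_of(path) == expected.lower()


# Example call, assuming the wheel was downloaded to the current folder:
# print(matches_expected("cyberdrop_dl-4.2.59-py3-none-any.whl"))
```

Reading the file in chunks keeps memory use flat regardless of the file size.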