Estimate a .cube 3D lookup table from camera images for the Darktable lut 3D module.

This package estimates a .cube 3D lookup table (LUT) for use with the Darktable lut 3D module. It was designed to obtain 3D LUTs that replicate in-camera JPEG styles. This is especially useful when shooting large sets of RAW photos (e.g. on commission) where most images should simply resemble the standard out-of-camera (OOC) style when exported by Darktable, while quick corrections on selected images remain possible without losing the OOC style. With the default processing style, the resulting LUTs are intended for use without Filmic, Base curve, etc. (set auto-apply pixel workflow defaults to none).

Below is an example using a LUT estimated to match the Provia film simulation on a Fujifilm X-T3. First is the OOC JPEG, second is the RAW processed in Darktable with the LUT, and third is the RAW processed in Darktable without any corrections:

Installation

Python 3 must be installed.

Installation of Darktable LUT Generator via pip:

pip install darktable_lut_generator

Usage

Run:

darktable_lut_generator [path to directory with images] [output .cube file]

For help and further arguments, run

darktable_lut_generator --help
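For orientation, the output argument names a plain-text .cube file. The following is a minimal sketch of that format, writing an identity LUT with the red index varying fastest. The function name `write_identity_cube` and the `TITLE` value are hypothetical and chosen for illustration; this is not the package's own writer, and the files it produces may contain additional header lines.

```python
import io


def write_identity_cube(stream, size=3, title="identity"):
    """Write an identity 3D LUT in .cube text format to a text stream.

    Hypothetical helper for illustration; not part of darktable_lut_generator.
    """
    stream.write(f'TITLE "{title}"\n')
    stream.write(f"LUT_3D_SIZE {size}\n")
    # In .cube files the red index varies fastest, then green, then blue.
    for b in range(size):
        for g in range(size):
            for r in range(size):
                rgb = (r / (size - 1), g / (size - 1), b / (size - 1))
                stream.write("{:.6f} {:.6f} {:.6f}\n".format(*rgb))


# Write a 2x2x2 identity LUT into memory and inspect the lines.
buffer = io.StringIO()
write_identity_cube(buffer, size=2)
cube_lines = buffer.getvalue().splitlines()
```

An identity LUT maps every color to itself, so loading such a file in the lut 3D module should leave the image unchanged.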

A directory with image pairs of one RAW image and the corresponding OOC image (e.g. jpeg) is used as input.

The images should represent a wide variety of colors; ideally, the whole Adobe RGB color space is covered.

The resulting LUT is intended for application in Adobe RGB color space.

Hence, it is advisable to also shoot the in-camera jpegs in Adobe RGB in order to cover the whole available gamut.
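Before running the tool, it can help to check that the input directory really contains one RAW and one OOC file per shot. The sketch below pairs files by their name without extension. How the package itself matches files is not described here, so pairing by stem, the set of OOC suffixes, and the function name `find_pairs` are all assumptions made for illustration.

```python
from pathlib import Path
import tempfile

# Assumption: OOC images are JPEGs; every other file is treated as a RAW.
OOC_SUFFIXES = {".jpg", ".jpeg"}


def find_pairs(directory):
    """Return (pairs, unpaired): pairs are (raw_path, ooc_path) tuples."""
    by_stem = {}
    for path in sorted(Path(directory).iterdir()):
        if path.is_file():
            by_stem.setdefault(path.stem.lower(), []).append(path)

    pairs, unpaired = [], []
    for stem, paths in sorted(by_stem.items()):
        ooc = [p for p in paths if p.suffix.lower() in OOC_SUFFIXES]
        raw = [p for p in paths if p.suffix.lower() not in OOC_SUFFIXES]
        if len(ooc) == 1 and len(raw) == 1:
            pairs.append((raw[0], ooc[0]))
        else:
            unpaired.extend(paths)
    return pairs, unpaired


# Demonstration with empty placeholder files in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    for name in ("DSCF0001.RAF", "DSCF0001.JPG", "DSCF0002.RAF"):
        (Path(tmp) / name).touch()
    pairs, unpaired = find_pairs(tmp)
    pair_names = [(raw.name, ooc.name) for raw, ooc in pairs]
    unpaired_names = [p.name for p in unpaired]
```

Files listed in `unpaired` (here the RAW without a matching JPEG) are worth reviewing before estimation.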

In the default configuration, Darktable may automatically apply an exposure module with camera exposure bias correction to raw files. The LUTs produced by this package are constructed to resemble the OOC JPEG when applied to a raw image without the exposure bias correction. The filmic rgb module should also be turned off.

Another issue is in-camera lens correction. By default, this script does not use darktable's lens-correction module.

If possible, the images should be taken without any in-camera lens correction.

If this is not possible (e.g. because in-camera lens correction cannot be disabled on the used camera), see darktable_lut_generator --help for the appropriate option to enable darktable's lens correction.

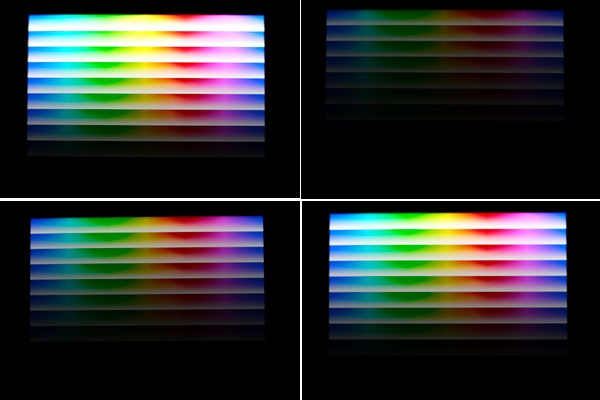

The command

darktable_lut_generate_pattern [path to output image]

may be used to generate a simple test pattern. If the pattern is displayed on a wide-gamut screen (an OLED smartphone with vivid color settings is fine), approx. 5 RAW+JPEG pairs can be photographed at different exposures. That may provide a good starting sample set and is often sufficient for good results, but additional real-world images are always helpful.

When the resulting LUT is applied to the RAWs of those test images, some artifacts remain near the gamut limits. I don't know (yet) whether this results from the estimation procedure or from issues with, or my limited understanding of, the exact color space transformations that Darktable uses when processing and saving the sample images or when applying the LUT. An example of the test-set JPEGs generated by photographing a smartphone displaying the test pattern is given below:

There are also some options that help with interpreting the results, tweaking the settings, and checking the sample images. In particular, --path_dir_out_info sets a custom directory for output charts and images, such as alignment results and visualizations of the generated LUT. TODO: documentation of outputs

Estimation

Estimation is performed by estimating the differences to an identity LUT using linear regression with an appropriately constrained parameter space, assuming trilinear interpolation when applying the LUT.
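The reason a linear regression is applicable is that, under trilinear interpolation, each output color is a weighted sum of the eight LUT nodes surrounding the input color, and the weights depend only on the input. The following sketch computes those weights and checks that an identity LUT reproduces its input. It is an illustration under stated assumptions, not the package's code: the function names, the dict-based LUT layout, and the omission of the parameter constraints are all simplifications.

```python
from itertools import product


def identity_lut(size):
    """LUT as a dict mapping node index (i, j, k) to an output RGB tuple."""
    step = size - 1
    return {(i, j, k): (i / step, j / step, k / step)
            for i, j, k in product(range(size), repeat=3)}


def trilinear_weights(rgb, size):
    """Return [(node_index, weight)] for the 8 nodes surrounding rgb in [0, 1]^3."""
    lower, frac = [], []
    for value in rgb:
        pos = min(max(value, 0.0), 1.0) * (size - 1)
        base = min(int(pos), size - 2)  # keep the upper neighbor inside the grid
        lower.append(base)
        frac.append(pos - base)
    weights = []
    for corner in product((0, 1), repeat=3):
        node = tuple(l + c for l, c in zip(lower, corner))
        weight = 1.0
        for f, c in zip(frac, corner):
            weight *= f if c else 1.0 - f
        weights.append((node, weight))
    return weights


def apply_lut(lut, rgb, size):
    """Apply the LUT: a weighted sum of node values, i.e. linear in the LUT entries."""
    out = [0.0, 0.0, 0.0]
    for node, weight in trilinear_weights(rgb, size):
        for channel in range(3):
            out[channel] += weight * lut[node][channel]
    return tuple(out)


size = 5
sample = (0.3, 0.55, 0.9)
weights = trilinear_weights(sample, size)
weight_sum = sum(w for _, w in weights)
mapped = apply_lut(identity_lut(size), sample, size)
```

In a regression setting, the eight weights of each sampled raw pixel would fill one sparse row of the design matrix, with the LUT node values (or their differences to the identity LUT) as the unknowns.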

Colors that are sampled very sparsely or not at all are interpolated from neighboring colors. However, no sophisticated hyperparameter tuning has been conducted to identify sparsely sampled patches, especially across different cube sizes.

n_samples pixels are sampled from the image, as using all pixels is computationally expensive. Sampling is weighted by the inverse of the estimated sample density, conditioned on the raw pixel colors, in order to obtain a sample that is approximately uniformly distributed over the represented colors. This reduces the sample count needed for good results by approx. an order of magnitude compared to drawing pixels uniformly.
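The idea of inverse-density weighting can be sketched as follows. The density estimator used by the package is not described here, so this example substitutes a simple histogram over coarse RGB bins and draws with replacement; the function names, the bin count, and the seed are assumptions for illustration only.

```python
import random
from collections import Counter


def color_bin(rgb, bins_per_channel=8):
    """Quantize an RGB color in [0, 1]^3 to a coarse histogram bin."""
    return tuple(min(int(c * bins_per_channel), bins_per_channel - 1) for c in rgb)


def sample_inverse_density(raw_pixels, n_samples, bins_per_channel=8, seed=0):
    """Draw pixel indices with probability proportional to 1 / (bin population).

    Assumption: a histogram stands in for the density estimate, and sampling
    is done with replacement.
    """
    bins = [color_bin(p, bins_per_channel) for p in raw_pixels]
    counts = Counter(bins)
    weights = [1.0 / counts[b] for b in bins]
    rng = random.Random(seed)
    return rng.choices(range(len(raw_pixels)), weights=weights, k=n_samples)


# 99% gray pixels and 1% saturated red pixels.
pixels = [(0.5, 0.5, 0.5)] * 9900 + [(0.9, 0.1, 0.1)] * 100
picked = sample_inverse_density(pixels, n_samples=1000)
red_share = sum(1 for i in picked if i >= 9900) / len(picked)
```

With uniform sampling the rare red pixels would make up about 1% of the sample; with inverse-density weights each occupied color bin receives roughly equal total weight, so the two colors end up about equally represented.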

Additional Resources

About LUTs and color management

https://docs.darktable.org/usermanual/3.8/en/module-reference/processing-modules/lut-3d/

https://eng.aurelienpierre.com/

https://library.imageworks.com/pdfs/imageworks-library-cinematic_color.pdf

Forums

https://discuss.pixls.us/t/how-to-create-haldcluts-from-in-camera-processing-styles/12690

https://discuss.pixls.us/t/help-me-build-a-lua-script-for-automatically-applying-fujifilm-film-simulations-and-more/30287

https://discuss.pixls.us/t/creating-3d-cube-luts-for-camera-ooc-styles/30968

Similar tools

https://github.com/bastibe/LUT-Maker

https://github.com/savuori/haldclut_dt
