Databank is an easy-to-use Python library for making raw SQL queries in a multi-threaded environment.

Project description

Databank

Databank is an easy-to-use Python library for making raw SQL queries in a multi-threaded environment.

No ORM, no frills. Only raw SQL queries and parameter binding. Thread-safe. Built on top of SQLAlchemy.

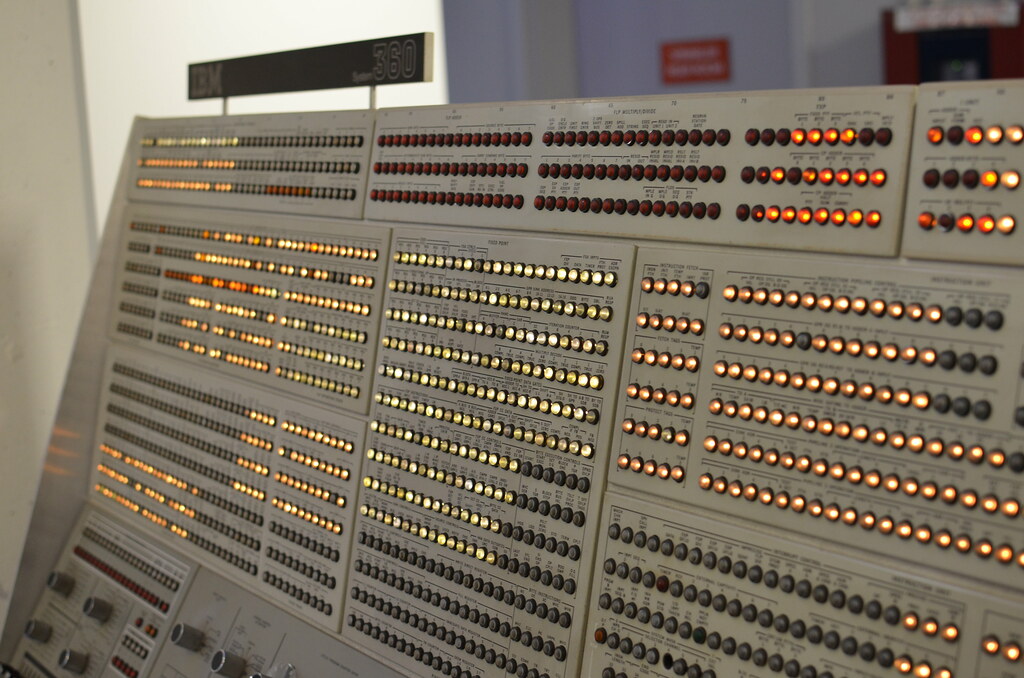

(The photo was taken by Matthew Ratzloff and is licensed under CC BY-NC-ND 2.0.)

Installation

You can install the latest stable version from PyPI:

$ pip install databank

Adapters not included. Install e.g. psycopg for PostgreSQL:

$ pip install psycopg

Usage

Connect to the database of your choice:

>>> from databank import Database

>>> db = Database("postgresql+psycopg://user:password@localhost/db", pool_size=2)

The keyword arguments are passed directly to SQLAlchemy's create_engine() function. Depending on the database you connect to, you have options like the size of connection pools.

If you are using

databankin a multi-threaded environment (e.g. in a web application), make sure the pool size is at least the number of worker threads.

Let's create a simple table:

>>> db.execute("CREATE TABLE beatles (id SERIAL PRIMARY KEY, member TEXT NOT NULL);")

You can insert multiple rows at once:

>>> params = [

... {"id": 0, "member": "John"},

... {"id": 1, "member": "Paul"},

... {"id": 2, "member": "George"},

... {"id": 3, "member": "Ringo"}

... ]

>>> db.execute_many("INSERT INTO beatles (id, member) VALUES (:id, :member);", params)

Fetch a single row:

>>> db.fetch_one("SELECT * FROM beatles;")

{'id': 0, 'member': 'John'}

But you can also fetch n rows:

>>> db.fetch_many("SELECT * FROM beatles;", n=2)

[{'id': 0, 'member': 'John'}, {'id': 1, 'member': 'Paul'}]

Or all rows:

>>> db.fetch_all("SELECT * FROM beatles;")

[{'id': 0, 'member': 'John'},

{'id': 1, 'member': 'Paul'},

{'id': 2, 'member': 'George'},

{'id': 3, 'member': 'Ringo'}]

If you are using PostgreSQL with jsonb columns, you can use a helper function to serialize the parameter values:

>>> from databank.utils import serialize_params

>>> serialize_params({"member": "Ringo", "song": ["Don't Pass Me By", "Octopus's Garden"]})

{'member': 'Ringo', 'song': '["Don\'t Pass Me By", "Octopus\'s Garden"]'}

Async

You can also use AsyncDatabase which implements basically the same methods as Database (but with an a prefix):

>>> from databank import AsyncDatabase

>>> db = AsyncDatabase("postgresql+psycopg://user:password@localhost/db", pool_size=2)

>>> await db.afetch_one("SELECT * FROM beatles;")

{'id': 0, 'member': 'John'}

Query Collection

You can also organize SQL queries in an SQL file and load them into a QueryCollection:

/* @name insert_data */

INSERT INTO beatles (id, member) VALUES (:id, :member);

/* @name select_all_data */

SELECT * FROM beatles;

This idea is borrowed from PgTyped

A query must have a header comment with the name of the query. If a query name is not unique, the last query with the same name will be used. You can parse that file and load the queries into a QueryCollection:

>>> from databank import QueryCollection

>>> queries = QueryCollection.from_file("queries.sql")

and access the queries like in a dictionary:

>>> queries["insert_data"]

'INSERT INTO beatles (id, member) VALUES (:id, :member);'

>>> queries["select_all_data"]

'SELECT * FROM beatles;'

Project details

Release history Release notifications | RSS feed

Download files

Download the file for your platform. If you're not sure which to choose, learn more about installing packages.

Source Distribution

Built Distribution

Filter files by name, interpreter, ABI, and platform.

If you're not sure about the file name format, learn more about wheel file names.

Copy a direct link to the current filters

File details

Details for the file databank-1.1.0.tar.gz.

File metadata

- Download URL: databank-1.1.0.tar.gz

- Upload date:

- Size: 33.0 kB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: uv/0.9.18 {"installer":{"name":"uv","version":"0.9.18","subcommand":["publish"]},"python":null,"implementation":{"name":null,"version":null},"distro":{"name":"Ubuntu","version":"24.04","id":"noble","libc":null},"system":{"name":null,"release":null},"cpu":null,"openssl_version":null,"setuptools_version":null,"rustc_version":null,"ci":true}

File hashes

| Algorithm | Hash digest | |

|---|---|---|

| SHA256 |

16da75a68399871164f1dc5c5d35e3e3e25675e063bb45737cc2970b6a57693e

|

|

| MD5 |

5070b6f9d0b99a24b4cce2bd8d4fcf7d

|

|

| BLAKE2b-256 |

9bb337aeaa47e7eda5de7a0c6e59a4abe4aa01d6ac96b11b25f751cc09090692

|

File details

Details for the file databank-1.1.0-py3-none-any.whl.

File metadata

- Download URL: databank-1.1.0-py3-none-any.whl

- Upload date:

- Size: 7.4 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: uv/0.9.18 {"installer":{"name":"uv","version":"0.9.18","subcommand":["publish"]},"python":null,"implementation":{"name":null,"version":null},"distro":{"name":"Ubuntu","version":"24.04","id":"noble","libc":null},"system":{"name":null,"release":null},"cpu":null,"openssl_version":null,"setuptools_version":null,"rustc_version":null,"ci":true}

File hashes

| Algorithm | Hash digest | |

|---|---|---|

| SHA256 |

c2a3068943f5c4938e46ef0eb9e90475994e691d77586f84654a01ead47e26e2

|

|

| MD5 |

bfa8223e2854c8de90c17f97e9f548ac

|

|

| BLAKE2b-256 |

1f9f3d6e2e1ca3e39145d5e7f7406d86c99906d345ef8c26eb5ac162f057fa9f

|