An eXplainable AI package for tabular data.


Effector

effector is an eXplainable AI package for tabular data. It:

- creates global and regional effect plots

- has a simple API with smart defaults, but can become flexible if needed

- is model agnostic; can explain any underlying ML model

- integrates easily with popular ML libraries, like Scikit-Learn, Tensorflow and Pytorch

- is fast, for both global and regional methods

- provides a large collection of global and regional effects methods

📖 Documentation | 🔍 Intro to global and regional effects | 🔧 API | 🏗 Examples

Installation

Effector requires Python 3.10+:

pip install effector

Dependencies: numpy, scipy, matplotlib, tqdm, shap.

Quickstart

Train an ML model

import effector

import keras

import numpy as np

import tensorflow as tf

np.random.seed(42)

tf.random.set_seed(42)

# Load dataset

bike_sharing = effector.datasets.BikeSharing(pcg_train=0.8)

X_train, Y_train = bike_sharing.x_train, bike_sharing.y_train

X_test, Y_test = bike_sharing.x_test, bike_sharing.y_test

# Define and train a neural network

model = keras.Sequential([
    keras.layers.Dense(1024, activation="relu"),
    keras.layers.Dense(512, activation="relu"),
    keras.layers.Dense(256, activation="relu"),
    keras.layers.Dense(1)
])

model.compile(optimizer="adam", loss="mse", metrics=["mae", keras.metrics.RootMeanSquaredError()])

model.fit(X_train, Y_train, batch_size=512, epochs=20, verbose=1)

model.evaluate(X_test, Y_test, verbose=1)

Wrap it in a callable

def predict(x):
    return model(x).numpy().squeeze()
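The wrapper above turns the model into a plain function from an `(N, D)` array to `N` predictions. Below is a minimal, framework-free sketch of the same idea; `make_predict` and `toy_model` are hypothetical names used only for illustration and are not part of effector. It reshapes explicitly instead of calling `squeeze()`, so a batch with a single row still returns an array of shape `(1,)` rather than a scalar.

```python
import numpy as np


def make_predict(model_fn):
    """Wrap any model into a callable mapping (N, D) inputs to (N,) outputs."""
    def predict(x):
        x = np.asarray(x, dtype=float)
        y = np.asarray(model_fn(x))
        # Flatten (N, 1) outputs to (N,) without collapsing a one-row batch to a scalar
        return y.reshape(x.shape[0])
    return predict


# Hypothetical stand-in for a trained model: returns predictions with shape (N, 1)
def toy_model(x):
    return x @ np.array([[1.0], [2.0], [-1.0]])


predict_toy = make_predict(toy_model)
batch = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]])
out = predict_toy(batch)          # two predictions, shape (2,)
single = predict_toy(batch[:1])   # one prediction, still shape (1,)
```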

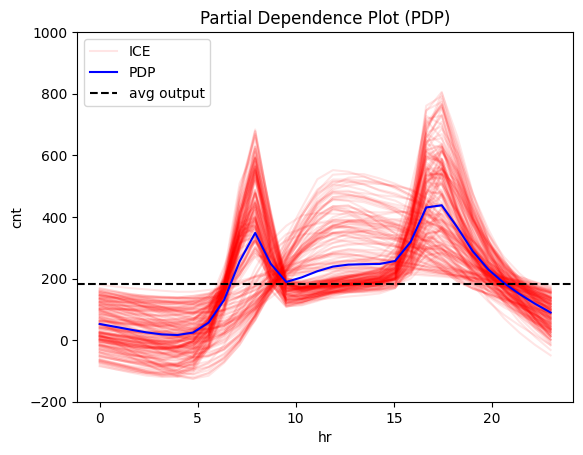

Explain it with global effect plots

# Initialize the Partial Dependence Plot (PDP) object

pdp = effector.PDP(
    X_test, # Use the test set as background data
    predict, # Prediction function
    feature_names=bike_sharing.feature_names, # (optional) Feature names
    target_name=bike_sharing.target_name # (optional) Target variable name
)

# Plot the effect of a feature

pdp.plot(
    feature=3, # Select the feature at index 3 (hr, the hour of the day)
    nof_ice=200, # (optional) Number of Individual Conditional Expectation (ICE) curves to plot
    scale_x={"mean": bike_sharing.x_test_mu[3], "std": bike_sharing.x_test_std[3]}, # (optional) Scale x-axis
    scale_y={"mean": bike_sharing.y_test_mu, "std": bike_sharing.y_test_std}, # (optional) Scale y-axis
    centering=True, # (optional) Center PDP and ICE curves
    show_avg_output=True, # (optional) Display the average prediction
    y_limits=[-200, 1000] # (optional) Set y-axis limits
)
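To make clear what the PDP and ICE curves in such a plot represent, here is a minimal NumPy sketch of the textbook definition. It is not effector's implementation; `pdp_and_ice` and `toy_predict` are hypothetical helpers. Each ICE curve forces one feature to every grid value for a single instance, and the PDP is the average of the ICE curves.

```python
import numpy as np


def pdp_and_ice(X, predict, feature, grid):
    """Return (pdp, ice): ice[i, k] is the prediction for instance i with
    column `feature` forced to grid[k]; pdp[k] is the mean over instances."""
    X = np.asarray(X, dtype=float)
    ice = np.empty((X.shape[0], len(grid)))
    for k, value in enumerate(grid):
        X_mod = X.copy()
        X_mod[:, feature] = value      # overwrite the feature for every instance
        ice[:, k] = predict(X_mod)
    return ice.mean(axis=0), ice


# Toy model with an interaction: the effect of x0 flips sign with x1
def toy_predict(x):
    return x[:, 0] * x[:, 1]


rng = np.random.default_rng(0)
X_toy = np.column_stack([rng.normal(size=200), rng.choice([-1.0, 1.0], size=200)])
grid = np.linspace(-1.0, 1.0, 5)
pdp, ice = pdp_and_ice(X_toy, toy_predict, feature=0, grid=grid)
```

In this toy case every ICE curve has slope +1 or -1, while the averaged PDP is much flatter. The global curve hides the interaction, which is the situation regional effects are meant to expose.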

Explain it with regional effect plots

# Initialize the Regional Partial Dependence Plot (RegionalPDP)

r_pdp = effector.RegionalPDP(
    X_test, # Test set data
    predict, # Prediction function
    feature_names=bike_sharing.feature_names, # Feature names
    target_name=bike_sharing.target_name # Target variable name
)

# Summarize the subregions of feature 3 (hr, the hour of the day)
r_pdp.summary(
    features=3, # Select feature 3 for the summary
    scale_x_list=[ # Scale each feature with its mean and std
        {"mean": bike_sharing.x_test_mu[i], "std": bike_sharing.x_test_std[i]}
        for i in range(X_test.shape[1])
    ]
)

Feature 3 - Full partition tree:
🌳 Full Tree Structure:
───────────────────────
hr 🔹 [id: 0 | heter: 0.43 | inst: 3476 | w: 1.00]
    workingday = 0.00 🔹 [id: 1 | heter: 0.36 | inst: 1129 | w: 0.32]
        temp ≤ 6.50 🔹 [id: 3 | heter: 0.17 | inst: 568 | w: 0.16]
        temp > 6.50 🔹 [id: 4 | heter: 0.21 | inst: 561 | w: 0.16]
    workingday ≠ 0.00 🔹 [id: 2 | heter: 0.28 | inst: 2347 | w: 0.68]
        temp ≤ 6.50 🔹 [id: 5 | heter: 0.19 | inst: 953 | w: 0.27]
        temp > 6.50 🔹 [id: 6 | heter: 0.20 | inst: 1394 | w: 0.40]
--------------------------------------------------
Feature 3 - Statistics per tree level:
🌳 Tree Summary:
─────────────────
Level 0🔹heter: 0.43
Level 1🔹heter: 0.31 | 🔻0.12 (28.15%)
Level 2🔹heter: 0.19 | 🔻0.11 (37.10%)
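The per-level heterogeneity in this summary appears to be the instance-weighted average of the node heterogeneities in the tree. This is a reading that matches the printed numbers, not a statement about effector's internals. The short check below recomputes it from the values copied out of the output; because those values are rounded, the result is only expected to agree to two decimals.

```python
# (node id, heterogeneity, instances) copied from the printed tree
level_1 = [(1, 0.36, 1129), (2, 0.28, 2347)]
level_2 = [(3, 0.17, 568), (4, 0.21, 561), (5, 0.19, 953), (6, 0.20, 1394)]
n_total = 3476  # instances at the root node (id 0)


def level_heterogeneity(nodes, n_total):
    """Weighted average of node heterogeneity, with weight = inst / n_total."""
    return sum(heter * inst / n_total for _, heter, inst in nodes)


h1 = level_heterogeneity(level_1, n_total)
h2 = level_heterogeneity(level_2, n_total)
print(f"Level 1: {h1:.2f}, Level 2: {h2:.2f}")
```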

The summary of feature hr (hour) says that its effect on the output is highly dependent on the value of two other features:

- workingday, whether it is a working day or not

- temp, the temperature at that specific hour

Let's see how the effect changes on these subregions!

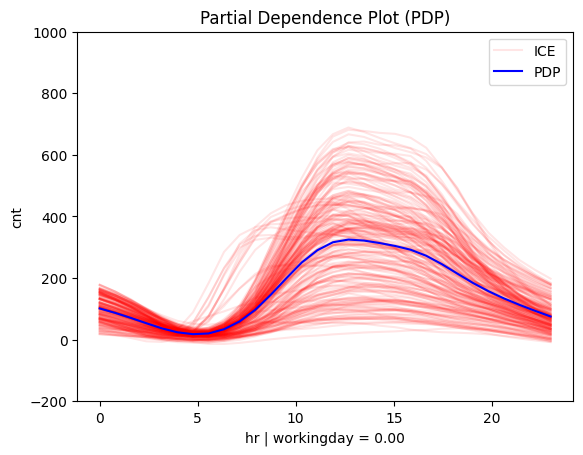

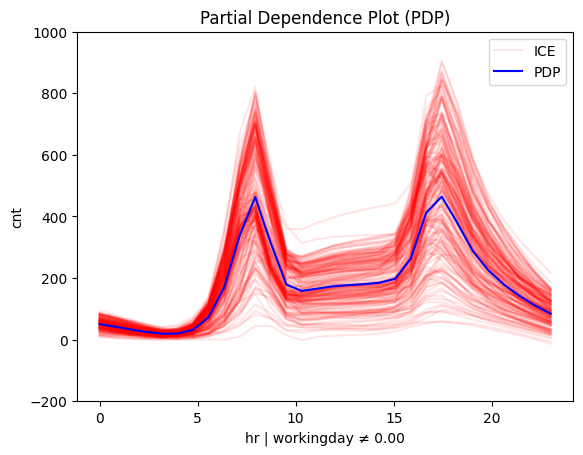

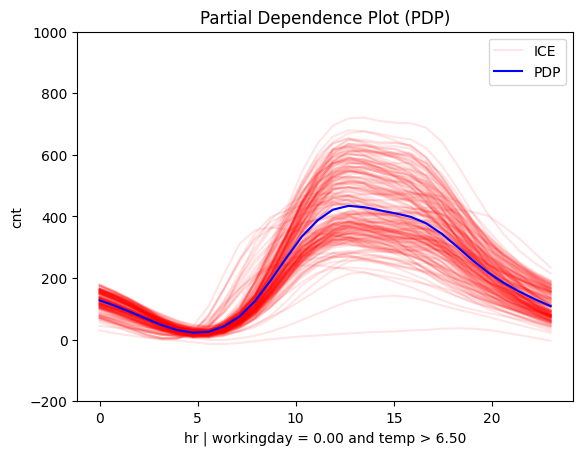

Is it workingday or not?

# Plot regional effects after the first-level split (workingday vs non-workingday)
for node_idx in [1, 2]: # Iterate over the nodes of the first-level split
    r_pdp.plot(
        feature=3, # Feature 3 (hr, the hour of the day)
        node_idx=node_idx, # Node index (1: non-working day, 2: working day)
        nof_ice=200, # Number of ICE curves
        scale_x_list=[ # Scale features by mean and std
            {"mean": bike_sharing.x_test_mu[i], "std": bike_sharing.x_test_std[i]}
            for i in range(X_test.shape[1])
        ],
        scale_y={"mean": bike_sharing.y_test_mu, "std": bike_sharing.y_test_std}, # Scale the target
        y_limits=[-200, 1000] # Set y-axis limits
    )
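Conceptually, a regional effect is a global effect computed on the subset of instances that fall inside one node of the partition tree. The sketch below illustrates that idea with plain NumPy on toy data. It is not how effector finds or evaluates its regions, and `pdp_curve` and `toy_predict` are hypothetical helpers.

```python
import numpy as np


def pdp_curve(X, predict, feature, grid):
    """Average prediction when column `feature` is forced to each grid value."""
    curve = np.empty(len(grid))
    for k, value in enumerate(grid):
        X_mod = X.copy()
        X_mod[:, feature] = value
        curve[k] = predict(X_mod).mean()
    return curve


def toy_predict(x):
    # x[:, 1] plays the role of a binary, workingday-like feature
    return np.where(x[:, 1] == 0, -x[:, 0], x[:, 0])


rng = np.random.default_rng(0)
X_toy = np.column_stack([rng.uniform(-1, 1, 400), rng.integers(0, 2, 400).astype(float)])
grid = np.linspace(-1.0, 1.0, 5)

# Split the data on the conditioning feature, then compute one curve per region
mask = X_toy[:, 1] == 0
pdp_region_a = pdp_curve(X_toy[mask], toy_predict, 0, grid)    # decreasing in x0
pdp_region_b = pdp_curve(X_toy[~mask], toy_predict, 0, grid)   # increasing in x0
```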


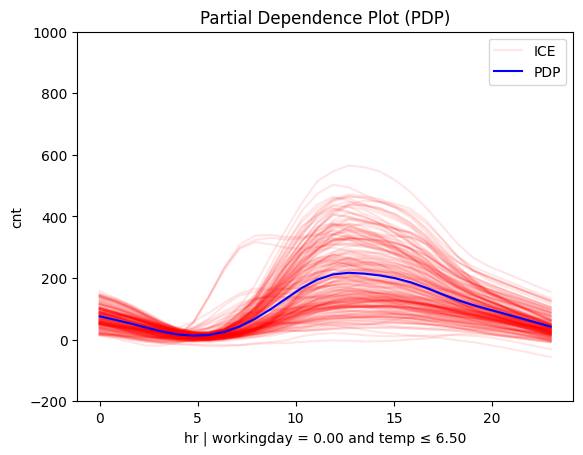

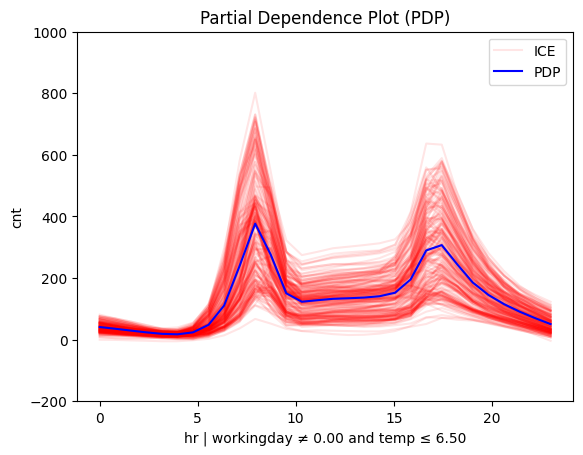

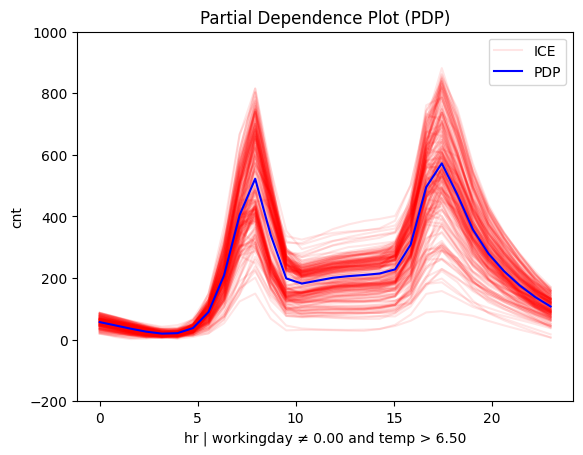

Is it hot or cold?

# Plot regional effects after the second-level splits (workingday vs non-workingday and hot vs cold temperature)
for node_idx in [3, 4, 5, 6]: # Iterate over the nodes of the second-level splits
    r_pdp.plot(
        feature=3, # Feature 3 (hr, the hour of the day)
        node_idx=node_idx, # Node index (hot/cold temperature and workingday/non-workingday)
        nof_ice=200, # Number of ICE curves
        scale_x_list=[ # Scale features by mean and std
            {"mean": bike_sharing.x_test_mu[i], "std": bike_sharing.x_test_std[i]}
            for i in range(X_test.shape[1])
        ],
        scale_y={"mean": bike_sharing.y_test_mu, "std": bike_sharing.y_test_std}, # Scale target
        y_limits=[-200, 1000] # Set y-axis limits
    )
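The `scale_x_list` and `scale_y` arguments pass the mean and standard deviation of each variable so that axes can be shown in original units. Assuming the data was standardized as `(value - mean) / std`, the inverse mapping would look like the sketch below. The helper `to_original_units` and the numbers in `scale_hr` are hypothetical; check the effector documentation for the exact behaviour.

```python
import numpy as np


def to_original_units(values, scale):
    """Undo standardization: z * std + mean, with scale = {"mean": ..., "std": ...}."""
    return np.asarray(values, dtype=float) * scale["std"] + scale["mean"]


# Hypothetical statistics for an hour-of-day feature
scale_hr = {"mean": 11.5, "std": 6.9}
z = np.array([-1.0, 0.0, 1.0])           # standardized axis values
hours = to_original_units(z, scale_hr)   # same points expressed in hours
```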


Supported Methods

effector implements global and regional effect methods:

| Method | Global Effect | Regional Effect | Reference | ML model | Speed |
|---|---|---|---|---|---|
| PDP | PDP | RegionalPDP | PDP | any | Fast for a small dataset |
| d-PDP | DerPDP | RegionalDerPDP | d-PDP | differentiable | Fast for a small dataset |
| ALE | ALE | RegionalALE | ALE | any | Fast |
| RHALE | RHALE | RegionalRHALE | RHALE | differentiable | Very fast |
| SHAP-DP | ShapDP | RegionalShapDP | SHAP | any | Fast for a small dataset and a light ML model |
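The "differentiable" entries in the ML model column presumably mean that those methods need the gradient of the prediction with respect to each input feature. The sketch below shows what such a gradient function could look like for a toy model, together with a finite-difference check. All names here (`toy_predict`, `toy_jacobian`, `finite_difference_jacobian`) are hypothetical; how a gradient function is passed to effector should be taken from its API documentation.

```python
import numpy as np


def toy_predict(x):
    return np.sin(x[:, 0]) + x[:, 0] * x[:, 1]


def toy_jacobian(x):
    """Analytic gradient of toy_predict w.r.t. each input feature, shape (N, D)."""
    d0 = np.cos(x[:, 0]) + x[:, 1]
    d1 = x[:, 0]
    return np.stack([d0, d1], axis=1)


def finite_difference_jacobian(predict, x, eps=1e-6):
    """Central-difference approximation of the per-instance gradient."""
    jac = np.empty_like(x, dtype=float)
    for j in range(x.shape[1]):
        step = np.zeros(x.shape[1])
        step[j] = eps
        jac[:, j] = (predict(x + step) - predict(x - step)) / (2 * eps)
    return jac


rng = np.random.default_rng(0)
X_toy = rng.normal(size=(50, 2))
max_abs_error = np.abs(
    toy_jacobian(X_toy) - finite_difference_jacobian(toy_predict, X_toy)
).max()
```

For a neural network, the same `(N, D)` array would normally come from the framework's automatic differentiation rather than from finite differences.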

Method Selection Guide

From a runtime perspective, there are three criteria:

- is the dataset small (N < 10K) or large (N > 10K instances)?

- is the ML model light (runtime < 0.1s) or heavy (runtime > 0.1s)?

- is the ML model differentiable or non-differentiable?

Trust us and follow this guide:

- light + small + differentiable = any([PDP, RHALE, ShapDP, ALE, DerPDP])

- light + small + non-differentiable = any([PDP, ALE, ShapDP])

- heavy + small + differentiable = any([PDP, RHALE, ALE, DerPDP])

- heavy + small + non-differentiable = any([PDP, ALE])

- large + non-differentiable = ALE

- large + differentiable = RHALE
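The guide above can be written as a small lookup function. This is only a restatement of the rules in code; `suggest_methods` is a hypothetical helper, not part of effector. The guide does not say how to treat the exact boundaries (N = 10K, runtime = 0.1s), so this sketch assigns them to the "large" and "heavy" cases.

```python
def suggest_methods(n_instances, model_runtime_s, differentiable):
    """Return the effector method names the guide recommends for a setting."""
    small = n_instances < 10_000       # boundary treated as large (assumption)
    light = model_runtime_s < 0.1      # boundary treated as heavy (assumption)

    if not small:
        # Large datasets: the guide gives a single recommendation
        return ["RHALE"] if differentiable else ["ALE"]
    if light:
        if differentiable:
            return ["PDP", "RHALE", "ShapDP", "ALE", "DerPDP"]
        return ["PDP", "ALE", "ShapDP"]
    # Heavy model on a small dataset
    if differentiable:
        return ["PDP", "RHALE", "ALE", "DerPDP"]
    return ["PDP", "ALE"]


print(suggest_methods(n_instances=3_476, model_runtime_s=0.05, differentiable=True))
```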

Citation

If you use effector, please cite it:

@misc{gkolemis2024effector,

title={effector: A Python package for regional explanations},

author={Vasilis Gkolemis et al.},

year={2024},

eprint={2404.02629},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

Spotlight on effector

📚 Featured Publications

- Gkolemis, Vasilis, et al. "Fast and Accurate Regional Effect Plots for Automated Tabular Data Analysis." Proceedings of the VLDB Endowment | ISSN 2150-8097

🎤 Talks & Presentations

- LMU-IML Group Talk: Slides & Materials | LMU-IML Research

- AIDAPT Plenary Meeting: Deep dive into effector

- XAI World Conference 2024: Poster | Paper

🌍 Adoption & Collaborations

- AIDAPT Project: Leveraging effector for explainable AI solutions.

🔍 Additional Resources

- Medium Post: Effector: An eXplainability Library for Global and Regional Effects

- Courses & Lists: IML Course @ LMU, Awesome ML Interpretability, Awesome XAI, Best of ML Python

📚 Related Publications

Papers that have inspired effector:

- REPID: Regional Effects in Predictive Models. Herbinger et al., 2022

- Decomposing Global Feature Effects Based on Feature Interactions. Herbinger et al., 2023

- RHALE: Robust Heterogeneity-Aware Effects. Gkolemis Vasilis et al., 2023

- DALE: Decomposing Global Feature Effects. Gkolemis Vasilis et al., 2023

- Greedy Function Approximation: A Gradient Boosting Machine. Friedman, 2001

- Visualizing Predictor Effects in Black-Box Models. Apley, 2016

- SHAP: A Unified Approach to Model Interpretation. Lundberg & Lee, 2017

- Regionally Additive Models: Explainable-by-design models minimizing feature interactions. Gkolemis Vasilis et al., 2023

License

effector is released under the MIT License.

Powered by:

- XMANAI
