GReedy Augmented Sequential Patterns: an algorithm for extracting patterns from text data

Project description

GrASP

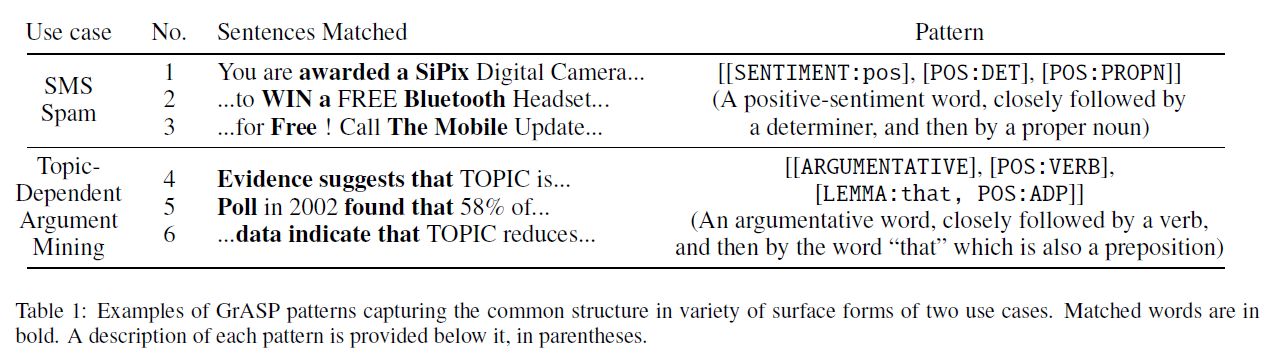

GrASP (GReedy Augmented Sequential Patterns) is an algorithm for extracting patterns from text data (Shnarch et. al., 2017). Basically, it takes as input a list of positive and negative examples of a target phenomenon and outputs a ranked list of patterns that distinguish between the positive and the negative examples. For instance, two GrASP patterns from two use cases are shown in the Table below along with the sentences they match.

This repository provides the implementation of GrASP, a web-based tool for exploring the results from GrASP, and two example notebooks for use cases of GrASP. This project is a joint collaboration between Imperial College London and IBM Research.

Paper: GrASP: A Library for Extracting and Exploring Human-Interpretable Textual Patterns

Authors: Piyawat Lertvittayakumjorn, Leshem Choshen, Eyal Shnarch, and Francesca Toni.

Contact: Piyawat Lertvittayakumjorn (plkumjorn [at] gmail [dot] com)

Installation

This library can be installed via pip under the name grasptext.

pip install grasptext

Otherwise, you may use the stand-alone version of our library (no longer maintained) by switching to the standalone branch of this repository and following the README instructions there.

Usage

import grasptext

# Step 1: Create the GrASP model

grasp_model = grasptext.GrASP(num_patterns = 50,

gaps_allowed = 2,

alphabet_size = 50,

include_standard = ['LEMMA', 'POS', 'NER', 'HYPERNYM'])

# Step 2: Fit it to the training data

from sklearn.datasets import fetch_20newsgroups

def get_20newsgroups_examples(class_name, num_examples = 50):

assert num_examples > 0

data = fetch_20newsgroups(categories=[class_name], shuffle=False, remove=('headers','footers'))['data']

return data[:min(num_examples, len(data))]

pos_exs = get_20newsgroups_examples('rec.autos', num_examples = 50)

neg_exs = get_20newsgroups_examples('rec.motorcycles', num_examples = 50)

the_patterns = grasp_model.fit_transform(pos_exs, neg_exs)

# Step 3: Export the results

grasp_model.to_csv('results.csv')

grasp_model.to_json('results.json')

As shown above, GrASP can be used in three steps:

- Creating a GrASP model (with hyperparameters specified)

- Fit the GrASP model to the lists of positive and negative examples

- Export the results to a csv or a json file

Hyperparameters for GrASP (Step 1)

min_freq_threshold(float, default = 0.005) -- Attributes which appear less often than this proportion of the number of training examples will be discarded as they are non-frequent.correlation_threshold(float, default = 0.5) -- Attributes/patterns whose correlation to some previously selected attribute/pattern is above this threshold, measured by the normalized mutual information, will be discarded.alphabet_size(int, default = 100) -- The alphabet size.num_patterns(int, default = 100) -- The number of output patterns.max_len(int, default = 5) -- The maximum number of attributes per pattern.window_size(Optional[int], default = 10) -- The window size for the output patterns.gaps_allowed(Optional[int], default = None) -- If gaps allowed is not None, it overrules the window size and specifies the number of gaps allowed in each output pattern.gain_criteria(str or Callable[[Pattern], float]], default = 'global') -- The criterion for selecting alphabet and patterns. 'global' refers to the information gain criterion. The current version also supports a criterion ofF_x(such asF_0.01).min_coverage_threshold(Optional[float], default = None) -- The minimum proportion of examples matched for output patterns (so GrASP does not generate too specific patterns).print_examples(Union[int, Sequence[int]], default = 2) -- The number of examples and counter-examples to print when printing a pattern. Ifprint_examplesequals(x, y), it printsxexamples andycounter-examples for each pattern. Ifprint_examplesequalsx, it is equivalent to(x, x).include_standard(List[str], default = ['TEXT', 'POS', 'NER', 'HYPERNYM', 'SENTIMENT']) -- The built-in attributes to use. Available options are ['TEXT', 'LEMMA', 'POS', 'DEP', 'NER', 'HYPERNYM', 'SENTIMENT'].include_custom(List[CustomAttribute], default = []) -- The list of custom attributes to use.

Built-in attributes

The current implementation of GrASP consists of seven standard attributes. The full lists of tags for POS, DEP, and NER can be found from SPACY.

- TEXT attribute of a token is the token in lower case.

- LEMMA attribute of a token is its lemma obtained from SPACY.

- POS attribute of a token is the part-of-speech tag of the token according to the universal POS tags

- DEP attribute of a token is the dependency parsing tag of the token (the type of syntactic relation that connects the child to the head)

- NER attribute is a token (if any) is the named entity type of the token.

- HYPERNYM attribute of a/an (noun, verb, adjective, adverb) token is the synsets of the hypernyms of the token (including the synset of the token itself). The hypernym hierarchy is based on WordNet (nltk). Note that we consider only three levels of synsets above the token of interest in order to exclude synsets that are too abstract to comprehend (e.g., psychological feature, group action, and entity).

- SENTIMENT attribute of a token (if any) indicates the sentiment (pos or neg) of the token based on the lexicon in Minqing Hu and Bing Liu. 2004. Mining and summarizing customer reviews. In International Conference on Knowledge Discovery and Data Mining, KDD’04, pages 168–177.

Examples of augmented texts

Input sentence: London is the capital and largest city of England and the United Kingdom.

London: {'SPACY:NER-GPE', 'SPACY:POS-PROPN', 'TEXT:london', 'HYPERNYM:london.n.01'}

is: {'SPACY:POS-VERB', 'TEXT:is', 'HYPERNYM:be.v.01'}

the: {'TEXT:the', 'SPACY:POS-DET'}

capital: {'SPACY:POS-NOUN', 'HYPERNYM:capital.n.06', 'TEXT:capital'}

and: {'TEXT:and', 'SPACY:POS-CCONJ'}

largest: {'HYPERNYM:large.a.01', 'TEXT:largest', 'SPACY:POS-ADJ'}

city: {'HYPERNYM:urban_area.n.01', 'HYPERNYM:municipality.n.01', 'TEXT:city', 'SPACY:POS-NOUN', 'HYPERNYM:geographical_area.n.01', 'HYPERNYM:administrative_district.n.01', 'HYPERNYM:district.n.01', 'HYPERNYM:city.n.01'}

of: {'SPACY:POS-ADP', 'TEXT:of'}

England: {'SPACY:NER-GPE', 'HYPERNYM:england.n.01', 'SPACY:POS-PROPN', 'TEXT:england'}

and: {'TEXT:and', 'SPACY:POS-CCONJ'}

the: {'TEXT:the', 'SPACY:POS-DET', 'SPACY:NER-GPE'}

United: {'TEXT:united', 'SPACY:NER-GPE', 'SPACY:POS-PROPN'}

Kingdom: {'HYPERNYM:kingdom.n.05', 'SPACY:POS-PROPN', 'HYPERNYM:taxonomic_group.n.01', 'TEXT:kingdom', 'SPACY:NER-GPE', 'HYPERNYM:biological_group.n.01', 'HYPERNYM:group.n.01'}

.: {'TEXT:.', 'SPACY:POS-PUNCT'}

Input sentence: This was the worst restaurant I have ever had the misfortune of eating at.

This: {'SPACY:POS-DET', 'TEXT:this'}

was: {'TEXT:was', 'SPACY:POS-VERB', 'HYPERNYM:be.v.01'}

the: {'TEXT:the', 'SPACY:POS-DET'}

worst: {'SENTIMENT:neg', 'HYPERNYM:worst.a.01', 'SPACY:POS-ADJ', 'TEXT:worst'}

restaurant: {'SPACY:POS-NOUN', 'HYPERNYM:artifact.n.01', 'HYPERNYM:restaurant.n.01', 'HYPERNYM:building.n.01', 'TEXT:restaurant', 'HYPERNYM:structure.n.01'}

I: {'TEXT:i', 'SPACY:POS-PRON'}

have: {'SPACY:POS-VERB', 'TEXT:have', 'HYPERNYM:own.v.01'}

ever: {'SPACY:POS-ADV', 'TEXT:ever', 'HYPERNYM:always.r.01'}

had: {'SPACY:POS-VERB', 'TEXT:had', 'HYPERNYM:own.v.01'}

the: {'TEXT:the', 'SPACY:POS-DET'}

misfortune: {'TEXT:misfortune', 'HYPERNYM:fortune.n.04', 'HYPERNYM:state.n.02', 'SPACY:POS-NOUN', 'SENTIMENT:neg', 'HYPERNYM:misfortune.n.02', 'HYPERNYM:condition.n.03'}

of: {'SPACY:POS-ADP', 'TEXT:of'}

eating: {'SPACY:POS-VERB', 'HYPERNYM:change.v.01', 'HYPERNYM:damage.v.01', 'TEXT:eating', 'HYPERNYM:corrode.v.01'}

at: {'SPACY:POS-ADP', 'TEXT:at'}

.: {'TEXT:.', 'SPACY:POS-PUNCT'}

Supporting features

- Translating from a pattern to its English explanation

# Continue from the code snippet above

print(grasptext.pattern2text(the_patterns[0]))

- Removing redundant patterns

- Mode = 1: Remove pattern p2 if there exists p1 in the patterns set such that p2 is a specialization of p1 and metric of p2 is lower than p1

- Mode = 2: Remove pattern p2 if there exists p1 in the patterns set such that p2 is a specialization of p1 regardless of the metric value of p1 and p2

selected_patterns = grasptext.remove_specialized_patterns(the_patterns, metric = lambda x: x.precision, mode = 1)

- Vectorizing texts using patterns

X_array = grasptext.extract_features(texts = pos_exs + neg_exs,

patterns = selected_patterns,

include_standard = ['TEXT', 'POS', 'NER', 'SENTIMENT'])

- Creating a custom attribute

In order to create a custom attribute, you are required to implement two functions.

- An extraction function extracts attributes for a given input text.

- Input: An input text (

text) and a list of tokens in this input text (tokens). (You may use either or both of them in your extraction function.) - Output: A list of sets where the set at index

icontains attributes extracted for the input tokeni.

- Input: An input text (

- A translation function tells our library how to read this custom attribute in patterns.

- Input: An attribute to read (

attr) in the form ATTRIBUTE_NAME:ATTRIBUTE (e.g., SENTIMENT:pos) and a booleanis_complementspecifying whether we want the returned attribute description as an adjective phrase or as a noun. - Output: The natural language read (i.e., description) of the attribute. For instance, given an attribute SENTIMENT:pos, the output could be 'bearing a positive sentiment' or 'a positive-sentiment word' depending on whether

is_complementequals True or False.

- Input: An attribute to read (

After you obtain both functions, put them as parameters of grasptext.CustomAttribute together with the attribute name to create the custom attribute and use it in the GrASP engine via the include_custom hyperparameter.

An example demonstrating how to create and use a custom attribute is shown below.

ARGUMENTATIVE_LEXICON = [line.strip().lower() for line in open('data/argumentative_unigrams_lexicon_shortlist.txt', 'r') if line.strip() != '']

def _argumentative_extraction(text: str, tokens: List[str]) -> List[Set[str]]:

tokens = map(str.lower, tokens)

ans = []

for t in tokens:

t_ans = []

if t.lower() in ARGUMENTATIVE_LEXICON:

t_ans.append('Yes')

ans.append(set(t_ans))

return ans

def _argumentative_translation(attr:str,

is_complement:bool = False) -> str:

word = attr.split(':')[1]

assert word == 'Yes'

return 'an argumentative word'

ArgumentativeAttribute = grasptext.CustomAttribute(name = 'ARGUMENTATIVE',

extraction_function = _argumentative_extraction,

translation_function = _argumentative_translation)

grasp_model = grasptext.GrASP(include_standard = ['TEXT', 'POS', 'NER', 'SENTIMENT'],

include_custom = [ArgumentativeAttribute]

)

Data structure of the JSON result file

If you want to use our web exploration tool to display results from other pattern extraction algorithms, you may do so by organizing the results into a JSON file with the structure required by our web exploration tool (i.e., the same structure as produced by grasptext). Note that you don't need to fill in the fields that are not applicable to your pattern extraction algorithm.

{

"configuration": { // A dictionary of hyperparameters of the pattern extraction algorithm

"min_freq_threshold": 0.005,

"correlation_threshold": 0.5,

"alphabet_size": 200,

"num_patterns": 200,

"max_len": 5,

"window_size": null,

"gaps_allowed": 0,

"gain_criteria": "global",

"min_coverage_threshold": null,

"include_standard": ["TEXT", "POS", "NER", "HYPERNYM"],

"include_custom": [], // A list of names of custom attributes

"comment": "" // An additional comment

},

"alphabet": [ // A list of alphabet (all the unique attributes)

"HYPERNYM:jesus.n.01",

"HYPERNYM:christian.n.01",

"TEXT:christ",

...

],

"rules": [ // A list of dictionaries each of which contains information of a specific pattern (i.e., rule)

{

"index": 0, // Pattern index

"pattern": "[['HYPERNYM:jesus.n.01']]", // The pattern

"meaning": "A type of jesus (n)", // The translation

"class": "pos", // The associated class

"#pos": 199, // The number of positive examples matched (in the training set)

"#neg": 34, // The number of negative examples matched (in the training set)

"score": 0.10736560419589636, // The metric score (on the training set)

"coverage": 0.26998841251448435, // Coverage (on the training set)

"precision": 0.8540772532188842, // Precision (on the training set)

"recall": 0.4171907756813417, // Recall (on the training set)

"F1": 0.5605633802816902, // F1 (on the training set)

"pos_example_labels": [false, false, false, ...], // A list showing whether each positive example is matched by this pattern

"neg_example_labels": [false, false, false, ...] // A list showing whether each negative example is matched by this pattern

},

{

"index": 1,

"pattern": "[['HYPERNYM:christian.n.01']]",

"meaning": "A type of christian (n)",

...

},

...

],

"dataset":{

"info": {"total": 863, "#pos": 477, "#neg": 386}, // Information about the training set (Number of all examples, positive examples, and negative examples, respectively

"pos_exs": [ // A list of positive examples in the training set

{

"idx": 0, // Positive example index

"text": "Hi,\n\tDoes anyone ...", // The full text

"tokens": ["Hi", ",", "\n\t", "Does"], // The tokenized text

"label": "pos", // The label in the training set

"rules": [[], [], [], [], [165, 171], [], ...], // A list where element i shows indices of rules matching token i of this text

"class": [[], [], [], [], [1, 1], [], ...] // A list where element i shows the associated classes (0 or 1) of rules matching token i of this text

},

{

"idx": 1,

"text": "jemurray@magnus.acs.ohio-state.edu ...",

"tokens": ["jemurray@magnus.acs.ohio-state.edu", "(", "John", ...],

...

},

...

],

"neg_exs": [ // A list of negative examples in the training set

{

"idx": 0,

"text": "Tony Lezard <tony@mantis.co.uk> writes: ...",

"tokens": ["Tony", "Lezard", "<", "tony@mantis.co.uk", ">", "writes", ...],

...

},

...

]

}

}

The Web Exploration Tool

Requirements: Python 3.6 and Flask

Steps

- To import json result files to the web system, please edit

web_demo/settings.py. For instance,

CASES = {

1: {'name': 'SMS Spam Classification', 'result_path': '../examples/results/case_study_1.json'},

2: {'name': 'Topic-dependent Argument Mining', 'result_path': '../examples/results/case_study_2.json'},

}

- To run the web system, go inside the web_demo folder and run

python -u app.py. You will see the following messages.

$ python -u app.py

* Restarting with stat

* Debugger is active!

* Debugger PIN: 553-838-653

* Running on http://127.0.0.1:5000/ (Press CTRL+C to quit)

So, using your web browser, you can access all the reports at http://127.0.0.1:5000/.

Note that we have the live demo of our two case studies (spam detection and argument mining) now running here.

Repository Structure

.

├── examples/

│ ├── data/ # For downloaded data

│ ├── results/ # For exported results (.json, .csv)

│ ├── CaseStudy1_SMSSpamCollection.ipynb

│ └── CaseStudy2_ArgumentMining.ipynb

├── figs/ # For figures used in this README file

├── grasptext/ # Main Python package directory

│ └── grasptext.py # The main grasptext code

├── web_demo/ # The web-based exploration tool

│ ├── static/ # For CSS and JS files

│ ├── templates/ # For Jinja2 templates for rendering the html output

│ ├── app.py # The main Flask application

│ └── settings.py # For specifying locations of JSON result files to explore

├── .gitignore

├── LICENSE

├── MANIFEST.in

├── README.md

├── index.html # For redirecting to our demo website

└── setup.py # For building Python package and pushing to PyPi

Citation

If you use or refer to the implementation in this repository, please cite the following paper.

@InProceedings{lertvittayakumjorn-EtAl:2022:LREC,

author = {Lertvittayakumjorn, Piyawat and Choshen, Leshem and Shnarch, Eyal and Toni, Francesca},

title = {GrASP: A Library for Extracting and Exploring Human-Interpretable Textual Patterns},

booktitle = {Proceedings of the Language Resources and Evaluation Conference},

month = {June},

year = {2022},

address = {Marseille, France},

publisher = {European Language Resources Association},

pages = {6093--6103},

url = {https://aclanthology.org/2022.lrec-1.655}

}

If you refer to the original GrASP algorithm, please cite the following paper.

@inproceedings{shnarch-etal-2017-grasp,

title = "{GRASP}: Rich Patterns for Argumentation Mining",

author = "Shnarch, Eyal and

Levy, Ran and

Raykar, Vikas and

Slonim, Noam",

booktitle = "Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing",

month = sep,

year = "2017",

address = "Copenhagen, Denmark",

publisher = "Association for Computational Linguistics",

url = "https://www.aclweb.org/anthology/D17-1140",

doi = "10.18653/v1/D17-1140",

pages = "1345--1350",

}

Contact

Piyawat Lertvittayakumjorn (plkumjorn [at] gmail [dot] com)

Project details

Release history Release notifications | RSS feed

Download files

Download the file for your platform. If you're not sure which to choose, learn more about installing packages.

Source Distribution

Built Distribution

Filter files by name, interpreter, ABI, and platform.

If you're not sure about the file name format, learn more about wheel file names.

Copy a direct link to the current filters

File details

Details for the file grasptext-0.0.2.tar.gz.

File metadata

- Download URL: grasptext-0.0.2.tar.gz

- Upload date:

- Size: 1.6 MB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/3.8.0 pkginfo/1.8.3 readme-renderer/34.0 requests/2.18.4 requests-toolbelt/0.8.0 urllib3/1.26.12 tqdm/4.46.0 importlib-metadata/4.8.3 keyring/23.4.1 rfc3986/1.5.0 colorama/0.4.5 CPython/3.6.3

File hashes

| Algorithm | Hash digest | |

|---|---|---|

| SHA256 |

ad948e1ef0eaeae0a6298f7d958712e995b7e5e3158c2d440a870c426a318fdc

|

|

| MD5 |

fc0568dafa5ed47ee14a7eb11ec43416

|

|

| BLAKE2b-256 |

285bfad26d8ad51d475b31333cf86644824ffa31653f3a9b3ad31e542ae47658

|

File details

Details for the file grasptext-0.0.2-py3-none-any.whl.

File metadata

- Download URL: grasptext-0.0.2-py3-none-any.whl

- Upload date:

- Size: 21.1 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/3.8.0 pkginfo/1.8.3 readme-renderer/34.0 requests/2.18.4 requests-toolbelt/0.8.0 urllib3/1.26.12 tqdm/4.46.0 importlib-metadata/4.8.3 keyring/23.4.1 rfc3986/1.5.0 colorama/0.4.5 CPython/3.6.3

File hashes

| Algorithm | Hash digest | |

|---|---|---|

| SHA256 |

0d2623dc710a94aed5ec5e4851630726c69c39950cbb21aad6a7bedaddc598ac

|

|

| MD5 |

b0624885f3b1a0b3e97dceef09a02de8

|

|

| BLAKE2b-256 |

feca6eb01ff470a6bf6158bd5dd13560e9b1347682aaf52c8af056f6e01da33f

|