An OpenAI Gym environment for ad serving algorithms.


gym-adserver

gym-adserver is an OpenAI Gym environment for reinforcement learning-based online advertising algorithms. gym-adserver is now one of the official OpenAI environments.

The AdServer environment implements a typical multi-armed bandit scenario where an ad server agent must select the best advertisement (ad) to be displayed in a web page.

Each time an ad is selected, it counts as one impression. A displayed ad can be clicked (reward = 1) or not (reward = 0), depending on the user's interest. The agent must maximize the overall click-through rate (CTR).
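Under the hood this is a Bernoulli bandit: each ad has a fixed, hidden click probability, and every impression is an independent draw. The minimal sketch below illustrates that mechanic. The `SimpleAdServer` class, its `step` method and the impressions/clicks row order are hypothetical and are not gym-adserver's actual code.

```python
import random


class SimpleAdServer:
    """Hypothetical minimal ad server: each ad has a hidden, fixed click probability."""

    def __init__(self, click_probabilities, seed=None):
        self.click_probabilities = list(click_probabilities)
        self.rng = random.Random(seed)
        n = len(self.click_probabilities)
        # Assumed layout: row 0 = impressions per ad, row 1 = clicks per ad.
        self.state = [[0] * n, [0] * n]

    def step(self, action):
        """Display ad `action` once and return the reward (1 = click, 0 = no click)."""
        self.state[0][action] += 1  # every selection counts as one impression
        clicked = self.rng.random() < self.click_probabilities[action]
        reward = 1 if clicked else 0
        self.state[1][action] += reward
        return reward


env = SimpleAdServer([0.02, 0.05, 0.10], seed=0)
rewards = [env.step(2) for _ in range(1000)]  # always show ad 2
print(sum(rewards), env.state)
```

Only the selected ad's counters change, which is why an agent has to spend impressions on an ad before it can estimate that ad's CTR.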

OpenAI Environment Attributes

| Attribute | Value | Notes |
|---|---|---|
| Action Space | Discrete(n) | n is the number of ads to choose from |
| Observation Space | Box(0, +inf, (2, n)) | Number of impressions and clicks for each ad |
| Actions | [0...n-1] | Index of the selected ad |
| Rewards | 0, 1 | 1 = clicked, 0 = not clicked |
| Render Modes | 'human' | Displays the agent's performance graphically |
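Given that observation layout, the per-ad and overall CTRs are ratios of clicks to impressions. A small sketch, assuming row 0 of the observation holds impressions and row 1 holds clicks (the table does not state the row order); `ctr_per_ad` and `overall_ctr` are hypothetical helpers, not part of gym-adserver:

```python
def ctr_per_ad(observation):
    """Return each ad's click-through rate from a (2, n) observation.

    Assumes row 0 holds impressions and row 1 holds clicks (hypothetical layout).
    Ads with no impressions yet get a CTR of 0.0.
    """
    impressions, clicks = observation
    return [c / i if i > 0 else 0.0 for i, c in zip(impressions, clicks)]


def overall_ctr(observation):
    """Total clicks divided by total impressions across all ads."""
    impressions, clicks = observation
    total = sum(impressions)
    return sum(clicks) / total if total > 0 else 0.0


observation = [[100, 50, 0],  # impressions for ads 0, 1, 2
               [5, 4, 0]]     # clicks for ads 0, 1, 2
print(ctr_per_ad(observation))   # → [0.05, 0.08, 0.0]
print(overall_ctr(observation))  # → 0.06
```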

Installation

You can download the source code and install the dependencies with:

git clone https://github.com/falox/gym-adserver

cd gym-adserver

pip install -e .

Alternatively, you can install gym-adserver as a pip package:

pip install gym-adserver

Basic Usage

You can test the environment by running one of the built-in agents:

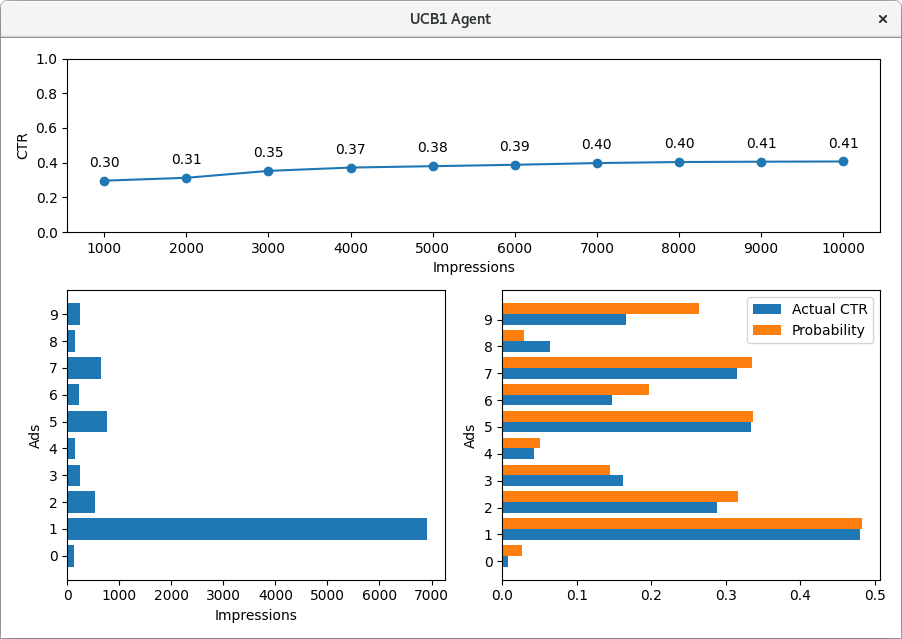

python gym_adserver/agents/ucb1_agent.py --num_ads 10 --impressions 10000

Or comparing multiple agents (defined in compare_agents.py):

python gym_adserver/wrappers/compare_agents.py --num_ads 10 --impressions 10000

The environent will generate 10 (num_ads) ads with different performance rates and the agent, without prior knowledge, will learn to select the most performant ones. The simulation will last 10000 iterations (impressions).

A window will open and show the agent's performance and the environment's state:

The overall CTR increases over time as the agent learns what the best actions are.

During initialization, the environment assigns each ad a "Probability" of being clicked. This probability is known only to the environment and is used to draw the rewards during the simulation. The "Actual CTR" is the CTR actually observed during the simulation (clicks divided by impressions); over time, it approaches the probability.
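The convergence of the Actual CTR toward the Probability is the law of large numbers at work. A sketch with a single ad and an assumed probability of 0.05; `simulate_actual_ctr` is a hypothetical helper, not part of gym-adserver:

```python
import random


def simulate_actual_ctr(probability, impressions, seed=0):
    """Draw `impressions` independent Bernoulli clicks and return the observed CTR."""
    rng = random.Random(seed)
    clicks = sum(1 for _ in range(impressions) if rng.random() < probability)
    return clicks / impressions


probability = 0.05  # assumed hidden "Probability", known only to the environment
for n in (100, 1_000, 10_000, 100_000):
    actual = simulate_actual_ctr(probability, n)
    print(f"{n:>7} impressions: actual CTR = {actual:.4f} (probability = {probability})")
```

The gap between the two values typically shrinks in proportion to 1 / sqrt(impressions), so ads that receive few impressions keep noisy Actual CTRs.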

An effective agent gives most impressions to the best-performing ads.

Built-in Agents

The gym_adserver/agents directory contains a collection of agents implementing the following strategies:

- Random

- epsilon-Greedy

- Softmax

- UCB1
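UCB1 shows every ad once, then picks the ad with the highest upper confidence bound: its observed CTR plus an exploration bonus sqrt(2 ln t / n_i), where t is the total number of impressions and n_i the impressions of ad i. A sketch of this textbook rule; `ucb1_select` is a hypothetical function, and the built-in `ucb1_agent.py` may differ in its details:

```python
import math


def ucb1_select(impressions, clicks):
    """Pick an ad index with the textbook UCB1 rule.

    impressions[i] and clicks[i] are the counts observed so far for ad i.
    Any ad that has never been shown is selected first.
    """
    for i, shown in enumerate(impressions):
        if shown == 0:
            return i
    total = sum(impressions)
    scores = [
        c / n + math.sqrt(2 * math.log(total) / n)  # observed CTR + exploration bonus
        for n, c in zip(impressions, clicks)
    ]
    return max(range(len(scores)), key=scores.__getitem__)


print(ucb1_select([10, 0, 5], [1, 0, 2]))  # → 1 (ad 1 has never been shown)
print(ucb1_select([100, 100], [2, 10]))    # → 1 (same bonus, higher CTR)
```

The bonus shrinks as an ad accumulates impressions, so rarely shown ads still get occasional exploration even when their observed CTR is low.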

Each agent has different parameters to adjust and optimize its performance.
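As an example of such a parameter, softmax (Boltzmann) selection uses a temperature τ and picks ad i with probability p_i = exp(q_i / τ) / Σ_j exp(q_j / τ), where q_i is the ad's estimated CTR. A sketch of that standard formula; the function names and the `temperature` parameter name are assumptions, not necessarily what the built-in Softmax agent exposes:

```python
import math
import random


def softmax_probabilities(estimated_ctrs, temperature):
    """Boltzmann (softmax) selection probabilities for each ad.

    Lower temperatures concentrate probability on the best ad (exploitation);
    higher temperatures spread it more evenly (exploration).
    """
    best = max(estimated_ctrs)
    # Subtract the max before exponentiating for numerical stability.
    weights = [math.exp((q - best) / temperature) for q in estimated_ctrs]
    total = sum(weights)
    return [w / total for w in weights]


def softmax_select(estimated_ctrs, temperature, rng=random):
    """Sample an ad index according to the softmax probabilities."""
    probs = softmax_probabilities(estimated_ctrs, temperature)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


ctrs = [0.02, 0.05, 0.10]
for tau in (1.0, 0.05, 0.01):
    print(tau, [round(p, 3) for p in softmax_probabilities(ctrs, tau)])
```

Because CTRs are small numbers, a temperature around 1.0 makes the choice almost uniform; useful values are on the order of the CTR differences themselves.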

You can use the built-in agents as a starting point to implement your own algorithm.
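A custom agent only needs to map the current observation to an ad index. The sketch below implements epsilon-greedy together with a simple serving loop. The `EpsilonGreedyAgent` class, its `act` method, the `run` helper and the impressions/clicks row order are all hypothetical, so check the built-in agents for the interface gym-adserver actually uses:

```python
import random


class EpsilonGreedyAgent:
    """Hypothetical custom agent: explore with probability epsilon, else exploit.

    It reads a (2, n) observation assumed to hold impressions (row 0) and
    clicks (row 1).
    """

    def __init__(self, epsilon=0.1, seed=None):
        self.epsilon = epsilon
        self.rng = random.Random(seed)

    def act(self, observation):
        impressions, clicks = observation
        n = len(impressions)
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(n)  # explore: uniformly random ad
        ctrs = [c / i if i > 0 else 0.0 for i, c in zip(impressions, clicks)]
        # Exploit: best observed CTR so far (ties go to the lowest index).
        return max(range(n), key=ctrs.__getitem__)


def run(probabilities, impressions_budget, agent, seed=0):
    """Simulate the serving loop and return the final (2, n) observation."""
    rng = random.Random(seed)
    n = len(probabilities)
    observation = [[0] * n, [0] * n]
    for _ in range(impressions_budget):
        ad = agent.act(observation)
        observation[0][ad] += 1  # one impression for the selected ad
        if rng.random() < probabilities[ad]:
            observation[1][ad] += 1  # the user clicked
    return observation


probs = [0.01, 0.02, 0.03, 0.04, 0.05, 0.06, 0.07, 0.08, 0.09, 0.10]
obs = run(probs, 10_000, EpsilonGreedyAgent(epsilon=0.1, seed=1))
print("impressions per ad:", obs[0])
print("overall CTR:", sum(obs[1]) / sum(obs[0]))
```

Raising `epsilon` spends more impressions on exploration; lowering it risks locking onto an ad that looked good early by chance.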

Unit Tests

You can run the unit tests for the environment with:

pytest -v

Next Steps

- Extend AdServer with the concepts of budget and bid

- Extend AdServer to change the ad performance over time (currently the CTR is constant)

- Implement Q-learning agents

- Implement a meta-agent that exploits multiple sub-agents with different algorithms

- Implement epsilon-Greedy variants


Download files


File details

Details for the file gym_adserver-1.0.2.tar.gz.

File metadata

- Download URL: gym_adserver-1.0.2.tar.gz

- Upload date:

- Size: 5.9 kB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/3.3.0 pkginfo/1.7.0 requests/2.25.1 setuptools/52.0.0 requests-toolbelt/0.9.1 tqdm/4.56.0 CPython/3.6.7

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | ef174548a7cc30e4f49c513c7db63e2f865294aa0e43fa92cb81b587804a06e7 |
| MD5 | 759d3b2556ef2bb9446c494267c3fbdb |
| BLAKE2b-256 | 2cf987441d9ac3dc24933c6f4145a789e41dad4de46c6c19f649d3894c998bc6 |

File details

Details for the file gym_adserver-1.0.2-py3-none-any.whl.

File metadata

- Download URL: gym_adserver-1.0.2-py3-none-any.whl

- Upload date:

- Size: 8.0 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/3.3.0 pkginfo/1.7.0 requests/2.25.1 setuptools/52.0.0 requests-toolbelt/0.9.1 tqdm/4.56.0 CPython/3.6.7

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | c16ffc6240fb86b663a4ea436ebf95910cdc8710ab05868864e9445a01a4f8f2 |
| MD5 | bdf9630f921e63de743f6f94d50e9aab |
| BLAKE2b-256 | 64446fcf2382f5bb1f6e10b9cfbd0ab431a0460ec63f42646ad318487cecf422 |