Jacobian-Enhanced Neural Nets (JENN)

Project description

Jacobian-Enhanced Neural Network (JENN)

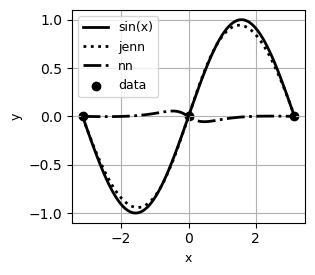

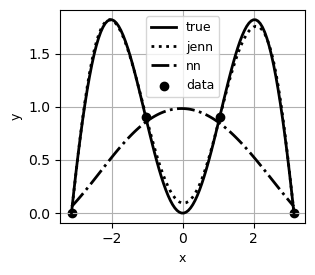

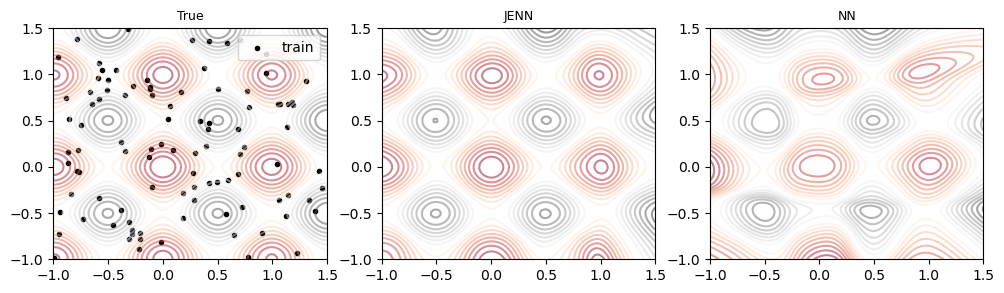

Jacobian-Enhanced Neural Networks (JENN) are fully connected multi-layer perceptrons whose training process is modified to predict partial derivatives more accurately. This is accomplished by minimizing a modified version of the Least Squares Estimator (LSE) that accounts for Jacobian prediction error (see the paper cited below). The main benefit of Jacobian enhancement is better accuracy with fewer training points compared to standard fully connected neural nets, as illustrated below.

[Figure panels: Example #1, Example #2, Example #3]
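To make the idea of a Jacobian-aware least-squares cost concrete, here is a minimal sketch for one input and one output. It assumes a plain sum of squared value errors plus a weighted sum of squared partial-derivative errors and an L2 weight penalty; the weight name `gamma` is hypothetical, and the exact formulation JENN minimizes is given in the paper.

```python
def gradient_enhanced_cost(y_pred, y_true, dydx_pred, dydx_true, weights,
                           gamma=1.0, lambd=0.0):
    """Least-squares cost augmented with a partials (Jacobian) error term.

    Sketch only: one input, one output, all arguments are lists of floats.
    gamma (hypothetical name) weights the partials error; lambd is an L2
    penalty on the network weights.
    """
    m = len(y_true)  # number of training points
    value_error = sum((yp - yt) ** 2 for yp, yt in zip(y_pred, y_true))
    partials_error = sum((gp - gt) ** 2 for gp, gt in zip(dydx_pred, dydx_true))
    penalty = sum(w ** 2 for w in weights)
    return (value_error + gamma * partials_error + lambd * penalty) / (2 * m)


cost = gradient_enhanced_cost(
    y_pred=[1.0, 2.0], y_true=[1.0, 1.0],
    dydx_pred=[0.0, 1.0], dydx_true=[1.0, 1.0],
    weights=[1.0, 1.0], gamma=1.0, lambd=0.1,
)
```

Setting `gamma=0` recovers the ordinary regularized least-squares cost that a standard, non-enhanced network minimizes.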

Citation

If you use JENN in a scientific publication, please consider citing it:

```bibtex
@misc{berguin2024jacobianenhanced,
  title={Jacobian-Enhanced Neural Networks},
  author={Steven H. Berguin},
  year={2024},
  eprint={2406.09132},
  archivePrefix={arXiv},
  primaryClass={cs.LG}
}
```

Main Features

- Multi-Task Learning: predict more than one output with the same model, Y = f(X) where Y = [y1, y2, ...]
- Jacobian prediction: analytically compute the Jacobian (i.e. forward propagation of dY/dX)
- Gradient-Enhancement: minimize prediction error of partials (i.e. back-prop accounts for dY/dX)
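The Jacobian prediction feature can be illustrated with a tiny network. The sketch below is not JENN's implementation; it assumes one input, a single tanh hidden layer, and a linear output layer with several outputs (multi-task), and it propagates dy/dx forward alongside y using the chain rule.

```python
import math


def forward_with_jacobian(x, W1, b1, W2, b2):
    """Return outputs y and partials dy/dx for a 1-input MLP with one tanh layer.

    W1, b1: hidden weights and biases (one entry per hidden unit, since n_x = 1).
    W2: output weights, one row per output (Y = [y1, y2, ...]).
    b2: output biases, one per output.
    """
    # Hidden layer: a = tanh(z) with z = W1 * x + b1
    a = [math.tanh(w * x + b) for w, b in zip(W1, b1)]
    # Chain rule: da/dx = (1 - tanh(z)^2) * W1
    da_dx = [(1.0 - ai ** 2) * w for ai, w in zip(a, W1)]
    # Linear output layer: y = W2 a + b2, hence dy/dx = W2 da/dx
    y = [sum(wk * ak for wk, ak in zip(row, a)) + bo for row, bo in zip(W2, b2)]
    dydx = [sum(wk * dk for wk, dk in zip(row, da_dx)) for row in W2]
    return y, dydx


# Example: 3 hidden units, 2 outputs
W1, b1 = [0.5, -1.0, 2.0], [0.1, 0.0, -0.3]
W2, b2 = [[1.0, 0.5, -0.2], [0.3, -0.7, 1.1]], [0.0, 0.2]
y, dydx = forward_with_jacobian(0.4, W1, b1, W2, b2)
```

Because the partials are computed analytically in the same pass as the outputs, no finite differencing is needed at prediction time.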

Installation

```shell
pip install jenn
```

Example Usage

See the demo notebooks for more details.

Import the library:

```python
import jenn
```

Generate example training and test data:

```python
x_train, y_train, dydx_train = jenn.utilities.sample(
    f=jenn.synthetic_data.sinusoid.compute,
    f_prime=jenn.synthetic_data.sinusoid.compute_partials,
    m_random=0,
    m_levels=4,
    lb=-3.14,
    ub=3.14,
)

x_test, y_test, dydx_test = jenn.utilities.sample(
    f=jenn.synthetic_data.sinusoid.compute,
    f_prime=jenn.synthetic_data.sinusoid.compute_partials,
    m_random=30,
    m_levels=0,
    lb=-3.14,
    ub=3.14,
)
```
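As a rough mental model of the sampling call, the hypothetical 1D stand-in below assumes that `m_levels` means evenly spaced points on `[lb, ub]` (endpoints included) and `m_random` means uniformly distributed random points. It is not `jenn.utilities.sample`, it uses plain lists instead of arrays, and `f(x) = x sin(x)` is only an illustrative test function, not necessarily what `jenn.synthetic_data.sinusoid` computes.

```python
import math
import random


def sample_1d(f, f_prime, m_random, m_levels, lb, ub, seed=None):
    """Hypothetical 1D sampler: grid levels plus random points (not jenn's code)."""
    rng = random.Random(seed)
    # Evenly spaced levels, endpoints included (assumes m_levels == 0 or >= 2)
    if m_levels >= 2:
        step = (ub - lb) / (m_levels - 1)
        grid = [lb + i * step for i in range(m_levels)]
    else:
        grid = []
    x = grid + [rng.uniform(lb, ub) for _ in range(m_random)]
    y = [f(xi) for xi in x]
    dydx = [f_prime(xi) for xi in x]
    return x, y, dydx


def f(x):
    return x * math.sin(x)  # illustrative test function


def f_prime(x):
    return math.sin(x) + x * math.cos(x)  # its exact derivative


x_train, y_train, dydx_train = sample_1d(f, f_prime, m_random=0, m_levels=4, lb=-3.14, ub=3.14)
x_test, y_test, dydx_test = sample_1d(f, f_prime, m_random=30, m_levels=0, lb=-3.14, ub=3.14, seed=1)
```

Under this reading, the training set is a small structured grid (4 points) while the test set is 30 random points, which is what makes the comparison a fair check of generalization.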

Train a model:

```python
nn = jenn.NeuralNet(
    layer_sizes=[1, 12, 1],
).fit(
    x=x_train,
    y=y_train,
    dydx=dydx_train,
    lambd=0.1,  # regularization parameter
    is_normalize=True,  # normalize data before fitting it
)
```
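When partials are part of the training data, normalization has to treat them consistently: if x and y are standardized, the chain rule says the partials must be rescaled by sigma_x / sigma_y. The sketch below shows that rule for 1D lists; it is an assumption about what a step like `is_normalize=True` needs to do, not a description of JENN's actual code.

```python
import statistics


def normalize(x, y, dydx):
    """Standardize 1D data and rescale partials consistently (sketch).

    If x_n = (x - mu_x) / sigma_x and y_n = (y - mu_y) / sigma_y, then by the
    chain rule dy_n/dx_n = (sigma_x / sigma_y) * dy/dx.
    """
    mu_x, sigma_x = statistics.mean(x), statistics.pstdev(x)
    mu_y, sigma_y = statistics.mean(y), statistics.pstdev(y)
    x_n = [(xi - mu_x) / sigma_x for xi in x]
    y_n = [(yi - mu_y) / sigma_y for yi in y]
    dydx_n = [g * sigma_x / sigma_y for g in dydx]
    return x_n, y_n, dydx_n, (mu_x, sigma_x, mu_y, sigma_y)


# y = 3x + 1: after normalization both y and its slope become "unit-free"
x = [0.0, 1.0, 2.0, 3.0]
y = [3.0 * v + 1.0 for v in x]
x_n, y_n, dydx_n, stats = normalize(x, y, [3.0] * len(x))
```

Forgetting the sigma_x / sigma_y factor would make the partials term in the cost inconsistent with the normalized values.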

Make predictions:

```python
y, dydx = nn(x_test)

# OR

y = nn.predict(x_test)
dydx = nn.predict_partials(x_test)
```
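A quick way to sanity-check predicted partials is to compare them with central finite differences. The helper below is a hypothetical, library-agnostic sketch for scalar functions; using it with `nn.predict` and `nn.predict_partials` would require wrapping them to handle JENN's array shapes.

```python
import math


def max_partials_error(f, f_prime, points, h=1e-5):
    """Largest gap between f_prime and a central-difference estimate of f'."""
    worst = 0.0
    for x in points:
        fd = (f(x + h) - f(x - h)) / (2.0 * h)  # central difference, O(h^2) error
        worst = max(worst, abs(fd - f_prime(x)))
    return worst


err_ok = max_partials_error(math.sin, math.cos, [0.0, 0.5, 1.0])
err_bad = max_partials_error(math.sin, math.sin, [1.0])
```

A small result means the analytic partials agree with the model's own outputs; it does not measure agreement with the true function, which the goodness-of-fit checks address.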

Save model (parameters) for later use:

```python
nn.save('parameters.json')
```

Reload saved parameters into a new model:

```python
reloaded = jenn.NeuralNet.load('parameters.json')
```

Check goodness of fit:

```python
jenn.plot_goodness_of_fit(
    y_true=y_test,
    y_pred=nn.predict(x_test),
    title="y (JENN)",
)
```

Check goodness of fit of partials:

```python
jenn.plot_goodness_of_fit(
    y_true=dydx_test,
    y_pred=nn.predict_partials(x_test),
    title="dy/dx (JENN)",
)
```
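A common scalar summary to go with goodness-of-fit plots is the coefficient of determination, R². The sketch below computes it for plain lists; whether `jenn.plot_goodness_of_fit` reports R² or another metric is not stated here, so treat this as an independent check that applies equally to values and to partials.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot.

    Undefined (division by zero) if all y_true values are identical.
    """
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


perfect = r_squared([1.0, 2.0, 3.0], [1.0, 2.0, 3.0])
mean_only = r_squared([1.0, 2.0, 3.0], [2.0, 2.0, 2.0])
```

A perfect fit gives 1.0, while predicting the mean everywhere gives 0.0.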

Show sensitivity profiles:

```python
jenn.plot_sensitivity_profiles(
    func=[jenn.synthetic_data.sinusoid.compute, nn.predict],
    x_min=x_train.min(),
    x_max=x_train.max(),
    x_true=x_train,
    y_true=y_train,
    resolution=100,
    legend_label=['true', 'pred'],
    xlabels=['x'],
    ylabels=['y'],
)
```
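A sensitivity profile is commonly computed as a one-at-a-time sweep: one input varies over `[x_min, x_max]` at `resolution` points while the others stay at a reference value. The sketch below assumes that interpretation; it is not the code behind `jenn.plot_sensitivity_profiles`, and `x_ref` and `index` are hypothetical parameters.

```python
def sensitivity_profile(func, x_ref, index, x_min, x_max, resolution=100):
    """Sweep input `index` over [x_min, x_max], holding other inputs at x_ref.

    func takes a list of inputs and returns a float; resolution must be >= 2.
    Returns (sweep, values).
    """
    sweep = [x_min + i * (x_max - x_min) / (resolution - 1) for i in range(resolution)]
    values = []
    for s in sweep:
        x = list(x_ref)  # copy so the reference point is not modified
        x[index] = s
        values.append(func(x))
    return sweep, values


def g(x):
    return x[0] + 10.0 * x[1]  # illustrative two-input function


sweep, values = sensitivity_profile(g, [0.0, 1.0], index=0, x_min=-1.0, x_max=1.0, resolution=5)
```

Plotting `values` for both the true function and the model on the same axes shows where their trends diverge.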

Use Case

JENN is intended for the field of computer-aided design, where there is often a need to replace computationally expensive, physics-based models with so-called surrogate models in order to save time down the line. Because the surrogate emulates the original model accurately in real time, it makes orders of magnitude more function calls affordable, opening the door to, for example, Monte Carlo simulation of expensive functions.
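Monte Carlo simulation shows why evaluation speed matters: estimating output statistics takes thousands of calls. In the sketch below, a hypothetical scalar `surrogate` callable stands in for a trained model (for example a wrapper around `nn.predict`, which works on arrays), and the input is assumed to be normally distributed.

```python
import random
import statistics


def monte_carlo(surrogate, mean, std, n_samples=10_000, seed=0):
    """Propagate a normally distributed input through a cheap surrogate.

    Returns the sample mean and sample standard deviation of the output.
    """
    rng = random.Random(seed)
    outputs = [surrogate(rng.gauss(mean, std)) for _ in range(n_samples)]
    return statistics.mean(outputs), statistics.stdev(outputs)


def surrogate(x):
    return 2.0 * x + 1.0  # illustrative stand-in for a trained model


out_mean, out_std = monte_carlo(surrogate, mean=0.0, std=1.0, n_samples=20_000)
```

With an expensive physics-based model, the same 20,000 calls would usually be out of reach; with a surrogate they take a fraction of a second.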

In general, the value proposition of a surrogate is that the computational expense of generating training data to fit the model is much less than the computational expense of performing the analysis with the original physics-based model itself. Gradient-enhanced methods add a further value proposition: the predicted partials are accurate, which is a critical property for one important use case, surrogate-based optimization. The field of aerospace engineering is rich in applications of this use case.
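In surrogate-based optimization, a gradient-based optimizer uses the surrogate's dy/dx directly to choose its steps, so errors in the partials become wrong search directions. The sketch below uses plain gradient descent with bound clipping, a deliberately simple stand-in for the optimizers used in practice; `f_prime` is a hypothetical scalar callable, for example a wrapper around `nn.predict_partials`.

```python
import math


def gradient_descent(f_prime, x0, lb, ub, step=0.1, n_iter=200):
    """Minimize a 1D function using only its partials, clipped to [lb, ub]."""
    x = x0
    for _ in range(n_iter):
        x = min(max(x - step * f_prime(x), lb), ub)  # gradient step, then clip to bounds
    return x


def partials(x):
    return math.sin(x) + x * math.cos(x)  # derivative of x * sin(x)


x_opt = gradient_descent(partials, x0=1.0, lb=-3.14, ub=3.14)
```

Here x * sin(x) has its minimum at x = 0 on this interval, which the iteration approaches; with inaccurate partials the same loop would settle somewhere else.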

Limitations

Gradient-enhanced methods require responses to be continuous and smooth, and they are only beneficial if the cost of obtaining partials is not excessive in the first place (e.g. when partials come from adjoint methods), or if the need for accuracy outweighs the cost of computing the partials. Users should therefore carefully weigh the benefit of gradient-enhanced methods against the needs of their application.
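A rough cost model helps with that trade-off. Assuming a single output and that one model evaluation costs one unit, finite-difference partials scale with the number of inputs, while an adjoint solve costs roughly one extra solve regardless of the number of inputs. The `adjoint_overhead` factor below is a hypothetical placeholder for that extra cost, which varies by solver.

```python
def cost_per_training_point(n_inputs, method, adjoint_overhead=1.0):
    """Rough cost of one value plus its partials, in units of one model run.

    Assumes a single output; adjoint_overhead is a hypothetical factor for
    the cost of the adjoint solve relative to one model run.
    """
    if method == "forward":  # baseline run + one perturbed run per input
        return 1 + n_inputs
    if method == "central":  # baseline run + two perturbed runs per input
        return 1 + 2 * n_inputs
    if method == "adjoint":  # baseline run + one adjoint solve, independent of n
        return 1 + adjoint_overhead
    raise ValueError(f"unknown method: {method}")


forward_cost = cost_per_training_point(10, "forward")
adjoint_cost = cost_per_training_point(10, "adjoint")
```

With 10 inputs, forward differences make each training point about 11 times as expensive as a value-only sample, versus about 2 times with an adjoint under these assumptions; in the first case the same budget could instead buy more value-only samples.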

License

Distributed under the terms of the MIT License.

Acknowledgement

This code started from the code by Prof. Andrew Ng in the Coursera Deep Learning Specialization and built on it, adding features such as line search and plotting. Most importantly, it fundamentally changed the formulation to include gradient enhancement and ensured that all arrays are updated in place (data is never copied). The author would like to thank Andrew Ng for offering the fundamentals of deep learning on Coursera, which took a complicated subject and explained it in simple terms that even an aerospace engineer could understand.


Download files


File details

Details for the file jenn-2.0.0.tar.gz.

File metadata

- Download URL: jenn-2.0.0.tar.gz
- Size: 50.0 kB
- Tags: Source
- Uploaded using Trusted Publishing? No
- Uploaded via: twine/6.1.0 CPython/3.13.7

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | d4e6f8299e49018e5c1b5c4a2ef2c0db5fc42bf73c71f31ef182b3def0b51fe4 |
| MD5 | 3390fb6cb6b2c991c668161545987f51 |
| BLAKE2b-256 | 3ba4f10e512678ac8a44e9c3a2134a925d04a41b2c1984764906127ae2155068 |

File details

Details for the file jenn-2.0.0-py3-none-any.whl.

File metadata

- Download URL: jenn-2.0.0-py3-none-any.whl
- Size: 47.7 kB
- Tags: Python 3
- Uploaded using Trusted Publishing? No
- Uploaded via: twine/6.1.0 CPython/3.13.7

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 11e414605d8e3953149869d4c7f4241ae64b32899e12a9ece1e985d219534716 |
| MD5 | 1b3856ffb991e8113540603b07ee4ffe |
| BLAKE2b-256 | c8fd97629a5f1dc5dfec1a36915db651b1e889c989a9484b2d92fbe4af203c48 |