Keras Utility & Layer Collection [WIP]

A collection of custom Keras layers that are missing from the main framework. These layers may be useful for reproducing current state-of-the-art deep learning papers in Keras.

Applications

Using this library, the following research papers have been reimplemented in Keras:

Overview of implemented Layers

At the moment the Keras Layer Collection offers the following layers/features:

- Scaled Dot-Product Attention

- Multi-Head Attention

- Layer Normalization

- Sequencewise Attention

- Attention Wrapper

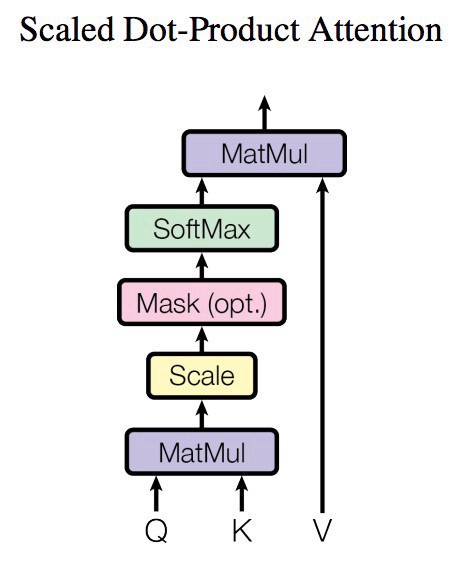

Scaled Dot-Product Attention

Implementation as described in Attention Is All You Need. Performs a non-linear transformation on the values V by comparing the queries Q with the keys K. The illustration below is taken from the paper cited above.

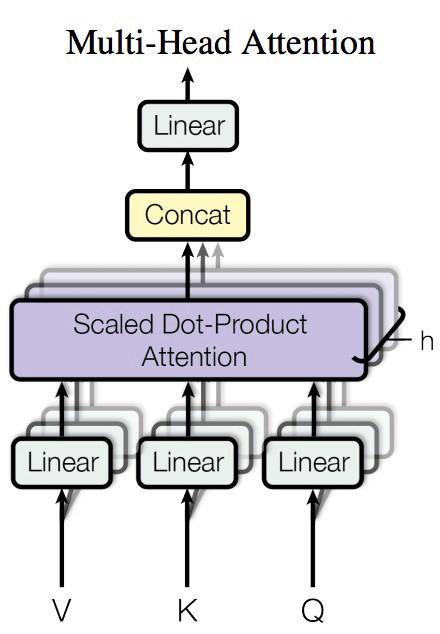
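
The computation described above can be sketched in plain NumPy. This is a minimal illustration of the formula from the paper, softmax(QK^T / sqrt(d_k)) V, and not the layer's actual Keras implementation; the function names `softmax` and `scaled_dot_product_attention` and the example shapes are assumptions made for this sketch.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the maximum for numerical stability before exponentiating.
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)


def scaled_dot_product_attention(Q, K, V):
    # Q: (n_queries, d_k), K: (n_keys, d_k), V: (n_keys, d_v)
    d_k = K.shape[-1]
    # Compare every query with every key, scaled by sqrt(d_k).
    scores = Q @ np.swapaxes(K, -1, -2) / np.sqrt(d_k)
    # Each row of weights sums to 1 over the keys.
    weights = softmax(scores, axis=-1)
    # Weighted sum of the values for each query.
    return weights @ V, weights


# Hypothetical example shapes: 3 queries, 5 key/value pairs.
rng = np.random.default_rng(0)
Q = rng.normal(size=(3, 4))
K = rng.normal(size=(5, 4))
V = rng.normal(size=(5, 2))
output, weights = scaled_dot_product_attention(Q, K, V)
```

Here `output` has one row per query and one column per value dimension, and each row of `weights` is a probability distribution over the keys.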

Multi-Head Attention

Implementation as described in Attention Is All You Need. This is essentially a set of parallel Scaled Dot-Product Attention blocks whose outputs are concatenated and combined with a linear transformation. The illustration below is taken from the paper cited above.
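
As a rough sketch of that idea in NumPy: each head projects the queries, keys, and values with its own weight matrices, runs scaled dot-product attention, and the concatenated head outputs pass through one final linear map. The names `multi_head_attention`, `W_q`, `W_k`, `W_v`, `W_o` and all shapes are assumptions for illustration, not the library's API.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)


def attention(Q, K, V):
    # Scaled dot-product attention for a single head.
    scores = Q @ K.T / np.sqrt(K.shape[-1])
    return softmax(scores, axis=-1) @ V


def multi_head_attention(Q, K, V, W_q, W_k, W_v, W_o):
    # W_q, W_k, W_v: (n_heads, d_model, d_head); W_o: (n_heads * d_head, d_model)
    heads = [
        attention(Q @ wq, K @ wk, V @ wv)
        for wq, wk, wv in zip(W_q, W_k, W_v)
    ]
    # Concatenate the heads along the feature axis, then mix them linearly.
    return np.concatenate(heads, axis=-1) @ W_o


# Hypothetical sizes: model width 8, two heads of width 4.
rng = np.random.default_rng(0)
d_model, n_heads, d_head = 8, 2, 4
Q = rng.normal(size=(3, d_model))
K = rng.normal(size=(5, d_model))
V = rng.normal(size=(5, d_model))
W_q = rng.normal(size=(n_heads, d_model, d_head))
W_k = rng.normal(size=(n_heads, d_model, d_head))
W_v = rng.normal(size=(n_heads, d_model, d_head))
W_o = rng.normal(size=(n_heads * d_head, d_model))
mha_output = multi_head_attention(Q, K, V, W_q, W_k, W_v, W_o)
```

The output keeps the number of queries and returns to the model width, so the block can be stacked.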

Layer Normalization

Sequencewise Attention

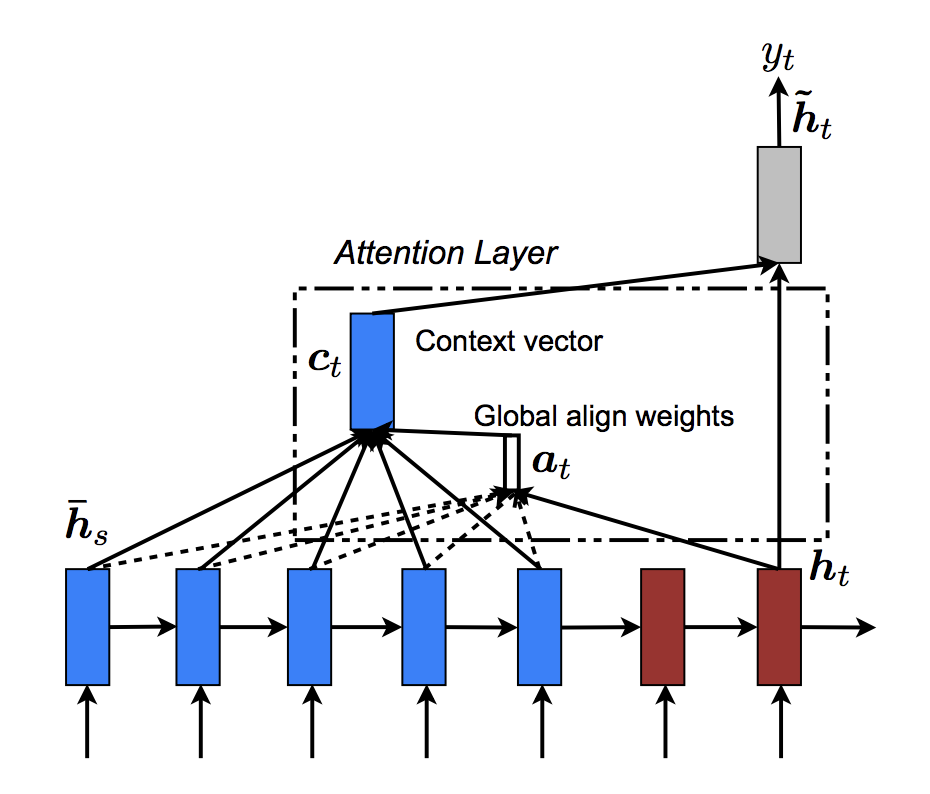

This layer applies various attention transformations to sequence data. It takes a time series of queries and a time series of values, calculates the attention between them, and applies a final linear transformation to obtain the output. This is a faster version of the general attention technique. It is similar to the global attention method described in Effective Approaches to Attention-based Neural Machine Translation.
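
One way to picture this is the sketch below, which scores every query step against every value step at once with matrix products instead of looping over time. It assumes the "general" bilinear score from the cited paper and a plain linear output projection; the names `global_attention`, `W_a`, `W_c` and the shapes are hypothetical and may differ from what the layer actually does.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)


def global_attention(queries, values, W_a, W_c):
    # queries: (T_q, d), values: (T_v, d), W_a: (d, d), W_c: (2 * d, d_out)
    # Assumed "general" score: query^T W_a value, for all step pairs at once.
    scores = queries @ W_a @ values.T            # (T_q, T_v)
    weights = softmax(scores, axis=-1)           # attention over value steps
    context = weights @ values                   # (T_q, d)
    # Final linear transformation of [context; query] (assumed form).
    return np.concatenate([context, queries], axis=-1) @ W_c


# Hypothetical sizes: 4 query steps, 6 value steps, feature width 3.
rng = np.random.default_rng(0)
queries = rng.normal(size=(4, 3))
values = rng.normal(size=(6, 3))
W_a = rng.normal(size=(3, 3))
W_c = rng.normal(size=(6, 5))
seq_output = global_attention(queries, values, W_a, W_c)
```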

Attention Wrapper

The idea of the implementation is based on the paper Effective Approaches to Attention-based Neural Machine Translation. This layer can be wrapped around any RNN in Keras. For each time step of the RNN, it calculates the attention vector between the previous output and all input steps. From this, a new attention-based input is constructed and fed into the RNN. This technique is similar to the input-feeding method described in the paper cited. The illustration below is taken from the paper cited above.
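
The loop described above can be sketched in NumPy as follows. A plain tanh RNN cell stands in for the wrapped Keras RNN, and the bilinear score and the concatenation of the current input with the context vector are assumptions; `attention_wrapped_rnn`, `W_a`, `W_x`, and `W_h` are hypothetical names, not part of the library.

```python
import numpy as np


def softmax(x):
    e = np.exp(x - np.max(x))
    return e / np.sum(e)


def attention_wrapped_rnn(inputs, W_a, W_x, W_h):
    # inputs: (T, d_in); W_a: (d_in, d_h); W_x: (2 * d_in, d_h); W_h: (d_h, d_h)
    d_h = W_h.shape[0]
    h = np.zeros(d_h)                      # previous output, zero at the start
    outputs = []
    for t in range(inputs.shape[0]):
        # Attention between the previous output and all input steps.
        scores = inputs @ W_a @ h          # (T,)
        weights = softmax(scores)
        context = weights @ inputs         # (d_in,)
        # New attention-based input: current step joined with the context.
        rnn_input = np.concatenate([inputs[t], context])
        # Stand-in RNN cell (simple tanh recurrence).
        h = np.tanh(rnn_input @ W_x + h @ W_h)
        outputs.append(h)
    return np.stack(outputs)


# Hypothetical sizes: 5 time steps, input width 3, hidden width 4.
rng = np.random.default_rng(0)
inputs = rng.normal(size=(5, 3))
W_a = rng.normal(size=(3, 4))
W_x = rng.normal(size=(6, 4))
W_h = rng.normal(size=(4, 4))
rnn_outputs = attention_wrapped_rnn(inputs, W_a, W_x, W_h)
```

At the first step the previous output is zero, so the attention weights are uniform and the context is simply the mean of all input steps.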

Download files

File details

Details for the file kulc-0.0.9.tar.gz.

File metadata

- Download URL: kulc-0.0.9.tar.gz

- Upload date:

- Size: 12.5 kB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/1.11.0 pkginfo/1.4.2 requests/2.18.4 setuptools/39.1.0 requests-toolbelt/0.8.0 tqdm/4.25.0 CPython/3.6.5

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 7286bbac48ed16d124621f4924d62d21eb34b763da91bb6ca3432db5730dd0f0 |
| MD5 | 3d9b93311964e6c5761375e7781a0c4c |
| BLAKE2b-256 | 328e0d900e8b0ddec62c92e7023808f1f7943fff9c1f374edeb3190dbc5ab683 |

File details

Details for the file kulc-0.0.9-py2.py3-none-any.whl.

File metadata

- Download URL: kulc-0.0.9-py2.py3-none-any.whl

- Upload date:

- Size: 13.5 kB

- Tags: Python 2, Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/1.11.0 pkginfo/1.4.2 requests/2.18.4 setuptools/39.1.0 requests-toolbelt/0.8.0 tqdm/4.25.0 CPython/3.6.5

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | bdcc5b01a1b53f05fb8928f83fdb8ed1c69c42aad8a7737cec1abc22bcd1064f |
| MD5 | c05f18a1bec89308ee4ae7e43dc01e4e |
| BLAKE2b-256 | 2c09dd1fadedf4d563de31530cdd7d8bbfc6b717d9ba0ccb653a285070e577bc |