An AI assistant powered by Llama models

🌟 Llama Assistant 🌟

Local AI Assistant That Respects Your Privacy! 🔒

Website: llama-assistant.nrl.ai

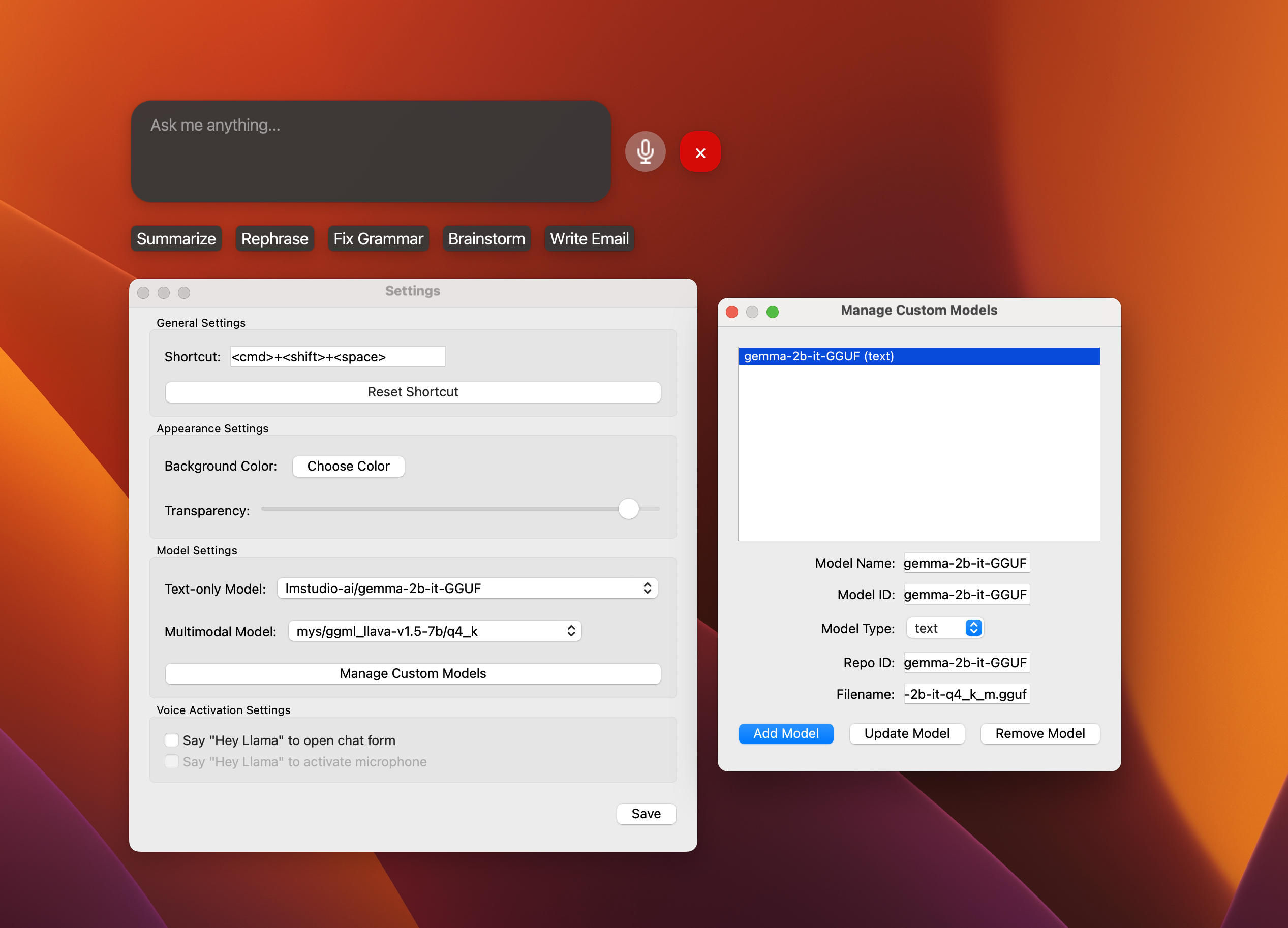

Llama Assistant is an AI-powered assistant for your daily tasks, built on Llama 3.2. It can recognize your voice, process natural language, and act on your commands: summarizing text, rephrasing sentences, answering questions, writing emails, and more.

This assistant can run offline on your local machine, and it respects your privacy by not sending any data to external servers.

https://github.com/user-attachments/assets/af2c544b-6d46-4c44-87d8-9a051ba213db

Supported Models

- 📝 Text-only models:
  - Llama 3.2 - 1B, 3B (4/8-bit quantized).
  - Qwen2.5-0.5B-Instruct (4-bit quantized).
  - And other models that LlamaCPP supports via custom models. See the list.

- 🖼️ Multimodal models:
  - Moondream2.
  - MiniCPM-v2.6.
  - LLaVA 1.5/1.6.
  - Besides supported models, you can try other variants via custom models.
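As a rough guide to what the 4-bit and 8-bit quantization levels mean for memory, model weights take about (parameter count × bits per weight ÷ 8) bytes. The sketch below applies that arithmetic to the nominal 1B and 3B sizes. It ignores the KV cache, runtime overhead, and the mixed precisions used in real quantized files, so treat the results as approximate lower bounds, not measurements. The function name is illustrative and is not part of Llama Assistant.

```python
def approx_weight_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate size of the model weights in gigabytes (10**9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Nominal parameter counts; the real checkpoints differ slightly from these round numbers.
for label, params in [("1B", 1e9), ("3B", 3e9)]:
    for bits in (4, 8):
        size = approx_weight_gb(params, bits)
        print(f"Llama 3.2 {label} at {bits}-bit: ~{size:.1f} GB of weights")
```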

TODO

- 🖼️ Support multimodal model: moondream2.

- 🗣️ Add wake word detection: "Hey Llama!".

- 🛠️ Custom models: Add support for custom models.

- 📚 Support 5 other text models.

- 🖼️ Support 5 other multimodal models.

- ⚡ Streaming support for response.

- 🎙️ Add offline STT support: WhisperCPP.

- 🧠 Knowledge database: LangChain or LlamaIndex?

- 🔌 Plugin system for extensibility.

- 📰 News and weather updates.

- 📧 Email integration with Gmail and Outlook.

- 📝 Note-taking and task management.

- 🎵 Music player and podcast integration.

- 🤖 Workflow with multiple agents.

- 🌐 Multi-language support: English, Spanish, French, German, etc.

- 📦 Package for Windows, Linux, and macOS.

- 🔄 Automated tests and CI/CD pipeline.

Features

- 🎙️ Voice recognition for hands-free interaction.

- 💬 Natural language processing with Llama 3.2.

- 🖼️ Image analysis capabilities (TODO).

- ⚡ Global hotkey for quick access (Cmd+Shift+Space on macOS).

- 🎨 Customizable UI with adjustable transparency.

Note: This project is a work in progress, and new features are being added regularly.

Installation

Install PortAudio (http://www.portaudio.com/):

- For macOS, you can use Homebrew (http://brew.sh):

brew install portaudio

Note: if you encounter an error when running pip install that indicates it can't find portaudio.h, try running pip install with the following flags:

pip install --global-option='build_ext' --global-option='-I/usr/local/include' --global-option='-L/usr/local/lib' pyaudio

- For Debian / Ubuntu Linux:

apt-get install portaudio19-dev python3-all-dev

- Windows may work without having to install PortAudio explicitly (it will get installed with PyAudio, https://people.csail.mit.edu/hubert/pyaudio/).

For more details, see the PyAudio installation page: https://people.csail.mit.edu/hubert/pyaudio/#downloads
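Once PortAudio and PyAudio are installed, a quick import test can confirm that Python is able to load the audio bindings. This is a minimal sketch, not part of Llama Assistant: the helper name `can_import` is made up for illustration, and it only checks that the `pyaudio` module imports cleanly, not that a microphone is available.

```python
import importlib


def can_import(module_name: str) -> bool:
    """Return True if the module imports without raising ImportError."""
    try:
        importlib.import_module(module_name)
    except ImportError:
        return False
    return True


# PyAudio's import name is lowercase "pyaudio".
if can_import("pyaudio"):
    print("PyAudio imported successfully.")
else:
    print("PyAudio is not importable; check the PortAudio and PyAudio install steps.")
```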

Install from PyPI:

pip install llama-assistant

pip install pyaudio

Or install from source:

- Clone the repository:

git clone https://github.com/vietanhdev/llama-assistant.git

cd llama-assistant

- Install the required dependencies:

pip install -r requirements.txt

pip install pyaudio

Speed Hack for Apple Silicon (M1, M2, M3) users: 🔥🔥🔥

- Install Xcode:

# check the path of your xcode install

xcode-select -p

# xcode installed returns

# /Applications/Xcode-beta.app/Contents/Developer

# if xcode is missing then install it... it takes ages;

xcode-select --install

- Build llama-cpp-python with Metal support:

pip uninstall llama-cpp-python -y

CMAKE_ARGS="-DGGML_METAL=on" pip install -U llama-cpp-python --no-cache-dir

# You should now have llama-cpp-python v0.1.62 or higher installed

# llama-cpp-python 0.1.68
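To check whether the Metal build applies to your machine and which llama-cpp-python version ended up installed, something like the following sketch may help. It uses only the Python standard library; the helper names are illustrative. It does not verify that Metal support was actually compiled in, and a Python interpreter running under Rosetta reports `x86_64` even on Apple Silicon hardware.

```python
import platform
import sys
from importlib import metadata
from typing import Optional


def is_apple_silicon() -> bool:
    """True when this interpreter runs natively on an ARM-based Mac."""
    return sys.platform == "darwin" and platform.machine() == "arm64"


def installed_version(dist_name: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


print("Apple Silicon:", is_apple_silicon())
print("llama-cpp-python version:", installed_version("llama-cpp-python"))
```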

Usage

Run the assistant using the following command:

llama-assistant

# Or run it as a Python module:

python -m llama_assistant.main

Use the global hotkey (default: Cmd+Shift+Space) to quickly access the assistant from anywhere on your system.

Configuration

You can customize the assistant by editing the settings.json file in your home directory: ~/llama_assistant/settings.json.
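Before editing the file by hand, it can be useful to back it up and look at what it currently contains. The sketch below assumes only the path given above and that the file holds a JSON object. It makes no assumptions about which keys exist, and the helper names are hypothetical rather than part of Llama Assistant.

```python
import json
import shutil
from pathlib import Path
from typing import Optional

SETTINGS_PATH = Path.home() / "llama_assistant" / "settings.json"


def load_settings(path: Path = SETTINGS_PATH) -> dict:
    """Return the parsed settings, or an empty dict if the file does not exist."""
    if not path.exists():
        return {}
    with path.open(encoding="utf-8") as f:
        return json.load(f)


def backup_settings(path: Path = SETTINGS_PATH) -> Optional[Path]:
    """Copy the settings file to '<name>.bak' next to it; return the backup path or None."""
    if not path.exists():
        return None
    backup = path.with_name(path.name + ".bak")
    shutil.copy2(path, backup)
    return backup


settings = load_settings()
if isinstance(settings, dict):
    print("Top-level settings keys:", sorted(settings))
```

If the file is missing, `load_settings` returns an empty dict. Running the assistant once may create the file, though that behavior is an assumption here.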

Contributing

Contributions are welcome! Please feel free to submit a Pull Request.

License

This project is licensed under the GPLv3 License - see the LICENSE file for details.

Acknowledgements

- This project uses llama.cpp and llama-cpp-python to run large language models. The default model is Llama 3.2 by Meta AI Research.

- Speech recognition is powered by whisper.cpp and whisper-cpp-python.

Contact

- Viet-Anh Nguyen - vietanhdev, contact form.

- Project Link: https://github.com/vietanhdev/llama-assistant, https://llama-assistant.nrl.ai/.

