Learning rate schedulers, for libraries like PyTorch

lr_schedules

This project currently just contains LinearScheduler, for custom linear learning rate schedules.

from lr_schedules import LinearScheduler

import matplotlib.pyplot as plt

import torch

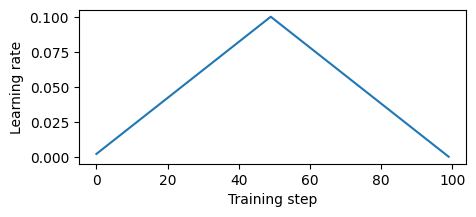

PyTorch example, triangle

times = [0, 0.5, 1]

values = [0, 1, 0]

W = torch.tensor([1.0], requires_grad=True)

optimizer = torch.optim.SGD([W], lr=0.1)

linear_scheduler = LinearScheduler(times, values, total_training_steps=100)

scheduler = torch.optim.lr_scheduler.LambdaLR(optimizer, linear_scheduler)

lr_vals = []

for step in range(100):

    optimizer.zero_grad()

    loss = torch.sum(W**2)

    loss.backward()

    optimizer.step()

    scheduler.step()

    lr_vals.append(optimizer.param_groups[0]["lr"])

plt.figure(figsize=(5, 2))

plt.plot(lr_vals)

plt.xlabel("Training step")

plt.ylabel("Learning rate")

plt.show()

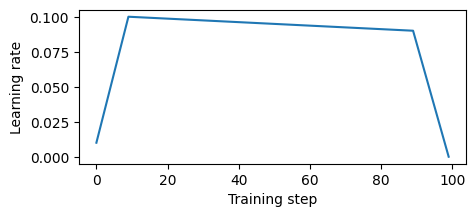
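To make the triangle shape concrete, here is a minimal sketch of piecewise-linear interpolation in plain Python. It is not the library's implementation: `piecewise_linear` is a hypothetical helper, the breakpoints are written as absolute steps, and clamping outside the breakpoint range is an assumption. Note that `LambdaLR` multiplies the optimizer's base learning rate by the returned value, so with `lr=0.1` a schedule value of 1 corresponds to a learning rate of 0.1.

```python
from bisect import bisect_right
from typing import Sequence


def piecewise_linear(step: float, times: Sequence[float], values: Sequence[float]) -> float:
    """Hypothetical helper: interpolate `values` linearly between the breakpoints in `times`.

    Assumes `times` is strictly increasing; steps outside the range are clamped.
    """
    if len(times) != len(values) or len(times) < 2:
        raise ValueError("times and values need the same length (at least 2)")
    if step <= times[0]:
        return float(values[0])
    if step >= times[-1]:
        return float(values[-1])
    i = bisect_right(times, step)  # times[i - 1] <= step < times[i]
    t0, t1 = times[i - 1], times[i]
    v0, v1 = values[i - 1], values[i]
    return v0 + (v1 - v0) * (step - t0) / (t1 - t0)


# Triangle: rise from 0 to 1 over the first 50 steps, then fall back to 0.
triangle = [piecewise_linear(s, [0, 50, 100], [0, 1, 0]) for s in (0, 25, 50, 75, 100)]
print(triangle)  # [0.0, 0.5, 1.0, 0.5, 0.0]
```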

PyTorch example, ramp up and down

times = [0, 0.1, 0.9, 1]

values = [0, 1, 0.9, 0]

W = torch.tensor([1.0], requires_grad=True)

optimizer = torch.optim.SGD([W], lr=0.1)

linear_scheduler = LinearScheduler(times, values, total_training_steps=100)

scheduler = torch.optim.lr_scheduler.LambdaLR(optimizer, linear_scheduler)

lr_vals = []

for step in range(100):

    optimizer.zero_grad()

    loss = torch.sum(W**2)

    loss.backward()

    optimizer.step()

    scheduler.step()

    lr_vals.append(optimizer.param_groups[0]["lr"])

plt.figure(figsize=(5, 2))

plt.plot(lr_vals)

plt.xlabel("Training step")

plt.ylabel("Learning rate")

plt.show()

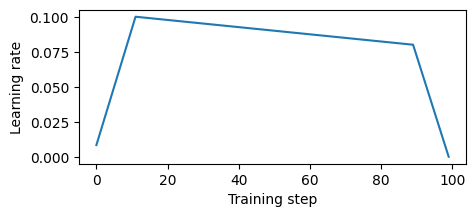
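The examples so far give `times` as fractions of training together with `total_training_steps`. One plausible reading, stated here as an assumption rather than a description of the library's internals, is that each fraction is scaled by the total number of steps. The helper `to_absolute_steps` below is hypothetical and only illustrates that arithmetic.

```python
from typing import List, Sequence


def to_absolute_steps(times: Sequence[float], total_training_steps: int) -> List[float]:
    """Hypothetical helper: scale fractional times in [0, 1] to step counts."""
    for t in times:
        if not 0.0 <= t <= 1.0:
            raise ValueError(f"fractional time {t} is outside [0, 1]")
    return [t * total_training_steps for t in times]


fractional_times = [0, 0.1, 0.9, 1]
absolute_times = to_absolute_steps(fractional_times, total_training_steps=100)
print(absolute_times)
```

Under this assumption, the ramp up ends around step 10 and the final ramp down starts around step 90.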

PyTorch example, specifying absolute number of steps

times = [0, 12, 90, 100]

values = [0, 1, 0.8, 0]

W = torch.tensor([1.0], requires_grad=True)

optimizer = torch.optim.SGD([W], lr=0.1)

linear_scheduler = LinearScheduler(times, values)

scheduler = torch.optim.lr_scheduler.LambdaLR(optimizer, linear_scheduler)

lr_vals = []

for step in range(100):

    optimizer.zero_grad()

    loss = torch.sum(W**2)

    loss.backward()

    optimizer.step()

    scheduler.step()

    lr_vals.append(optimizer.param_groups[0]["lr"])

plt.figure(figsize=(5, 2))

plt.plot(lr_vals)

plt.xlabel("Training step")

plt.ylabel("Learning rate")

plt.show()
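One detail of the loops above is worth spelling out: the learning rate is read after `scheduler.step()`, so the first recorded value belongs to schedule index 1, not 0. `LambdaLR` applies the multiplier for index 0 when it is constructed and advances the index by one on each `scheduler.step()`. The sketch below reproduces the expected values without PyTorch; `multiplier` is a hypothetical stand-in that assumes plain linear interpolation between breakpoints, which may differ from what `LinearScheduler` does internally.

```python
from bisect import bisect_right


def multiplier(step, times, values):
    """Hypothetical stand-in for the schedule: piecewise-linear, clamped at the ends."""
    if step <= times[0]:
        return float(values[0])
    if step >= times[-1]:
        return float(values[-1])
    i = bisect_right(times, step)  # times[i - 1] <= step < times[i]
    t0, t1 = times[i - 1], times[i]
    v0, v1 = values[i - 1], values[i]
    return v0 + (v1 - v0) * (step - t0) / (t1 - t0)


times = [0, 12, 90, 100]
values = [0, 1, 0.8, 0]
base_lr = 0.1  # matches lr=0.1 passed to the optimizer in the examples

# Loop iteration k reads the lr after scheduler.step(), i.e. at schedule index k + 1.
lr_vals = [base_lr * multiplier(k + 1, times, values) for k in range(100)]

print(lr_vals[0])   # first recorded value, one step into the warm-up
print(lr_vals[11])  # index 12, the peak of the warm-up
print(lr_vals[-1])  # index 100, the end of the schedule
```

Under these assumptions the recorded curve peaks at the base learning rate on the 12th iteration and ends at 0.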

Dev setup of repo

- Clone the repo

- Install `poetry` (repo was run with python3.9)

- Run `poetry install --with docs`

- Run `poetry run pre-commit install`
