Fine-tuning LLMs using Forward Passes

Project description

MeZO: Fine-Tuning Language Models with Just Forward Passes

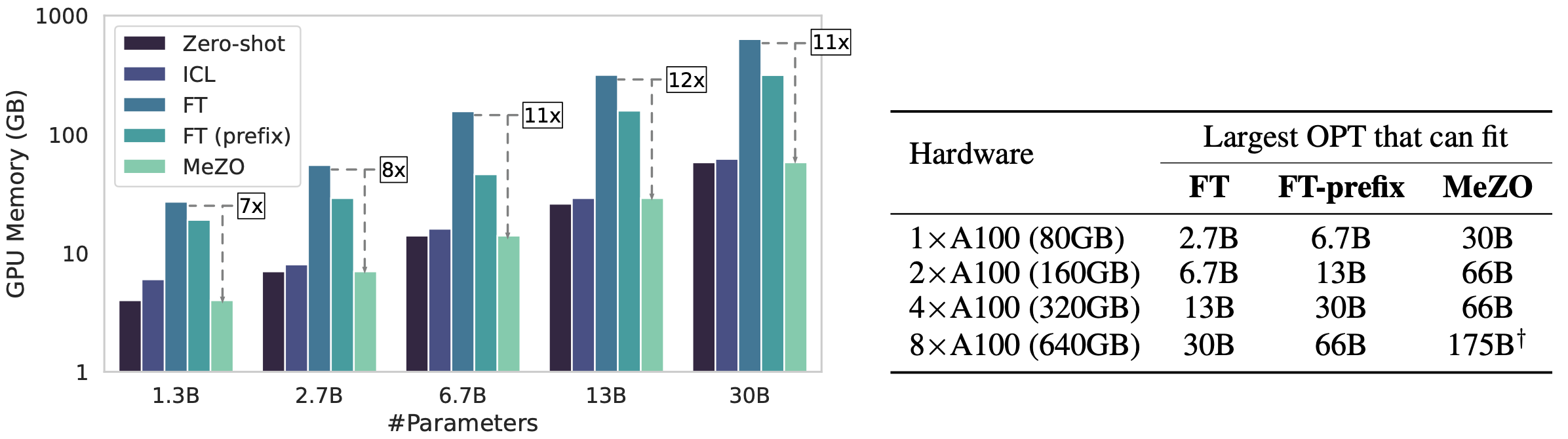

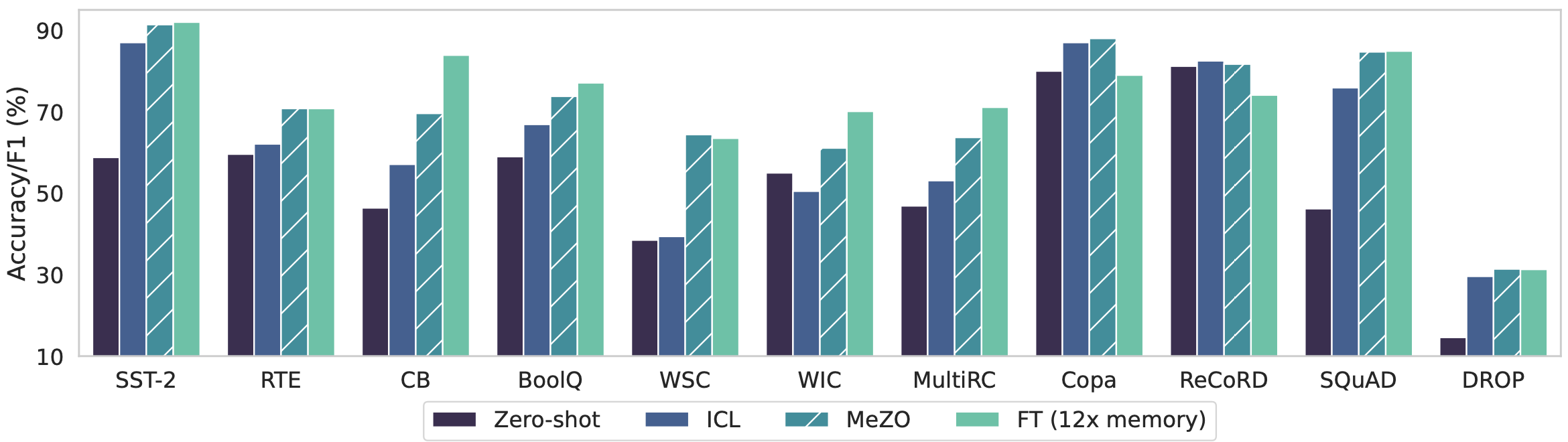

This is the implementation of the paper Fine-Tuning Language Models with Just Forward Passes. In this paper, we propose a memory-efficient zeroth-order optimizer (MeZO), which adapts the classical zeroth-order SGD method to operate in place, thereby fine-tuning language models (LMs) with the same memory footprint as inference.
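The in-place zeroth-order SGD step can be sketched roughly as follows. This is a minimal NumPy illustration, assuming parameters are a list of NumPy arrays and the loss is any Python function returning a float. `perturb_`, `mezo_step`, and the toy quadratic loss are hypothetical names chosen for this example, not this package's API. The memory-saving idea it shows is that the random direction z is never stored: it is regenerated from a seed each time it is needed, so the parameters are perturbed, restored, and updated in place.

```python
import numpy as np


def perturb_(params, seed, scale):
    """Add scale * z to every array in `params`, in place.

    z ~ N(0, I) is regenerated from `seed` on every call instead of being
    stored, so the same seed always reproduces the same direction z.
    """
    rng = np.random.default_rng(seed)
    for p in params:
        p += scale * rng.standard_normal(p.shape)


def mezo_step(params, loss_fn, lr, eps, seed):
    """One zeroth-order SGD step: two forward passes, no gradients stored."""
    perturb_(params, seed, eps)          # theta + eps * z
    loss_plus = loss_fn(params)
    perturb_(params, seed, -2.0 * eps)   # theta - eps * z
    loss_minus = loss_fn(params)
    perturb_(params, seed, eps)          # back to theta (up to rounding)
    # Central finite-difference estimate of the directional derivative along z.
    projected_grad = (loss_plus - loss_minus) / (2.0 * eps)
    # theta <- theta - lr * projected_grad * z, reusing the same z via the seed.
    perturb_(params, seed, -lr * projected_grad)
    return loss_plus


# Hypothetical usage: minimize a toy quadratic with forward passes only.
target = np.array([1.0, -2.0, 3.0])
params = [np.zeros(3)]


def loss_fn(ps):
    return float(np.sum((ps[0] - target) ** 2))


initial = loss_fn(params)
for step in range(2000):
    mezo_step(params, loss_fn, lr=1e-2, eps=1e-3, seed=step)
final = loss_fn(params)
```

Besides the parameters themselves, the sketch only allocates one z-sized temporary per array at a time, which mirrors why the approach can run with roughly an inference-sized memory footprint.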

With a single A100 80GB GPU, MeZO can train a 30-billion-parameter OPT model, whereas fine-tuning with Adam can train only a 2.7B LM. MeZO achieves performance comparable to fine-tuning with backpropagation across multiple tasks, with up to 12× memory reduction. MeZO is also compatible with both full-parameter and parameter-efficient tuning techniques such as LoRA and prefix tuning. We also show that MeZO can effectively optimize non-differentiable objectives (e.g., maximizing accuracy or F1).
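Because a zeroth-order step only compares two loss values, the objective does not need a gradient. The toy below is a hedged illustration of that point, not the paper's accuracy/F1 setup: it assumes a hypothetical 1-D threshold classifier whose 0-1 error rate is piecewise constant (so backpropagation would give zero gradient almost everywhere), and applies the same two-point estimate to it. `error_rate`, `zo_step`, and the data are made up for this example.

```python
import numpy as np

# Hypothetical toy data: the label is True exactly when x > 0.3.
xs = np.linspace(-1.0, 1.0, 201)
ys = xs > 0.3


def error_rate(theta):
    """0-1 loss of the classifier `x > theta`; piecewise constant in theta."""
    return float(np.mean((xs > theta) != ys))


def zo_step(theta, loss_fn, lr, eps, rng):
    """Two-point zeroth-order step on a scalar parameter (loss values only)."""
    z = rng.standard_normal()
    projected_grad = (loss_fn(theta + eps * z) - loss_fn(theta - eps * z)) / (2 * eps)
    return theta - lr * projected_grad * z


rng = np.random.default_rng(0)
theta = -1.0
initial = error_rate(theta)
for _ in range(1000):
    theta = zo_step(theta, error_rate, lr=0.01, eps=0.05, rng=rng)
final = error_rate(theta)
```

The perturbation size `eps` matters here: it has to be large enough for theta ± eps·z to change some predictions, otherwise the two loss values are identical and the step does nothing.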

Installation

pip install git+https://www.github.com/lebrice/MeZO

Reproduce our paper results

To reproduce the RoBERTa-large experiments, please refer to the medium_models folder. For the autoregressive LM (OPT) experiments, please refer to the large_models folder. If you want to learn more about how MeZO works and how we implement it, we recommend reading the code in the large_models folder, as that implementation is clearer and more extensible. If you want to explore more variants of MeZO, we recommend trying medium_models, as it is faster and has more variants implemented.

How to add MeZO to my own code?

Our implementation of MeZO is based on HuggingFace's Trainer. We add MeZO to the official Trainer implementation with minimal edits. Please refer to the "How to add MeZO to my own code?" section of the large_models README for more details.

Bugs or questions?

If you have any questions related to the code or the paper, feel free to email Sadhika (smalladi@princeton.edu) or Tianyu (tianyug@princeton.edu). If you encounter any problems when using the code or want to report a bug, you can open an issue. Please describe the problem in as much detail as possible so we can help you better and more quickly!

Citation

@article{malladi2023mezo,
  title={Fine-Tuning Large Language Models with Just Forward Passes},
  author={Malladi, Sadhika and Gao, Tianyu and Nichani, Eshaan and Damian, Alex and Lee, Jason D and Chen, Danqi and Arora, Sanjeev},
  year={2023}
}


Download files


File details

Details for the file mezo-0.0.1.tar.gz.

File metadata

- Download URL: mezo-0.0.1.tar.gz

- Upload date:

- Size: 5.3 kB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: poetry/1.7.1 CPython/3.11.7 Linux/5.15.0-83-generic

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 180d168c635c3df0adab28d88760622ec4bfb343c204d39318129e21841eff6e |
| MD5 | 6e36dddb267c561cb68e617b1a739c11 |
| BLAKE2b-256 | 4a42066a6d31ea5251f676c9c31737f8a764e5d762f06a9dd1bcf28bb87a4d35 |

File details

Details for the file mezo-0.0.1-py3-none-any.whl.

File metadata

- Download URL: mezo-0.0.1-py3-none-any.whl

- Upload date:

- Size: 6.2 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: poetry/1.7.1 CPython/3.11.7 Linux/5.15.0-83-generic

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 72032843ded1cd6fba0a4e15f0b8a54b24f038462f2bf5bba932c29ec98e4b10 |
| MD5 | c15b151d07ffc0cfc6722bc7f89141cf |
| BLAKE2b-256 | 7b3a7cf86bd226259bdeb1dff4130cc5e4813ebed8ba89a7290dacc981897af4 |