MLflow is an open source platform for the complete machine learning lifecycle


Open-Source Platform for Productionizing AI

MLflow is an open-source developer platform to build AI/LLM applications and models with confidence. Enhance your AI applications with end-to-end experiment tracking, observability, and evaluations, all in one integrated platform.

🚀 Installation

To install the MLflow Python package, run the following command:

pip install mlflow
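To confirm the install worked, one option is to ask Python's packaging metadata which version is present. This is a minimal sketch using only the standard library; `installed_version` is a hypothetical helper name, not part of MLflow.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package: str):
    """Return the installed version string of `package`, or None if it is absent."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


# Prints the version string if MLflow is installed in this environment.
print(installed_version("mlflow") or "mlflow is not installed")
```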

📦 Core Components

MLflow is the only platform that provides a unified solution for all your AI/ML needs, including LLMs, Agents, Deep Learning, and traditional machine learning.

💡 For LLM / GenAI Developers

🔍 Tracing / Observability: Trace the internal states of your LLM/agentic applications to debug quality issues and monitor performance with ease.

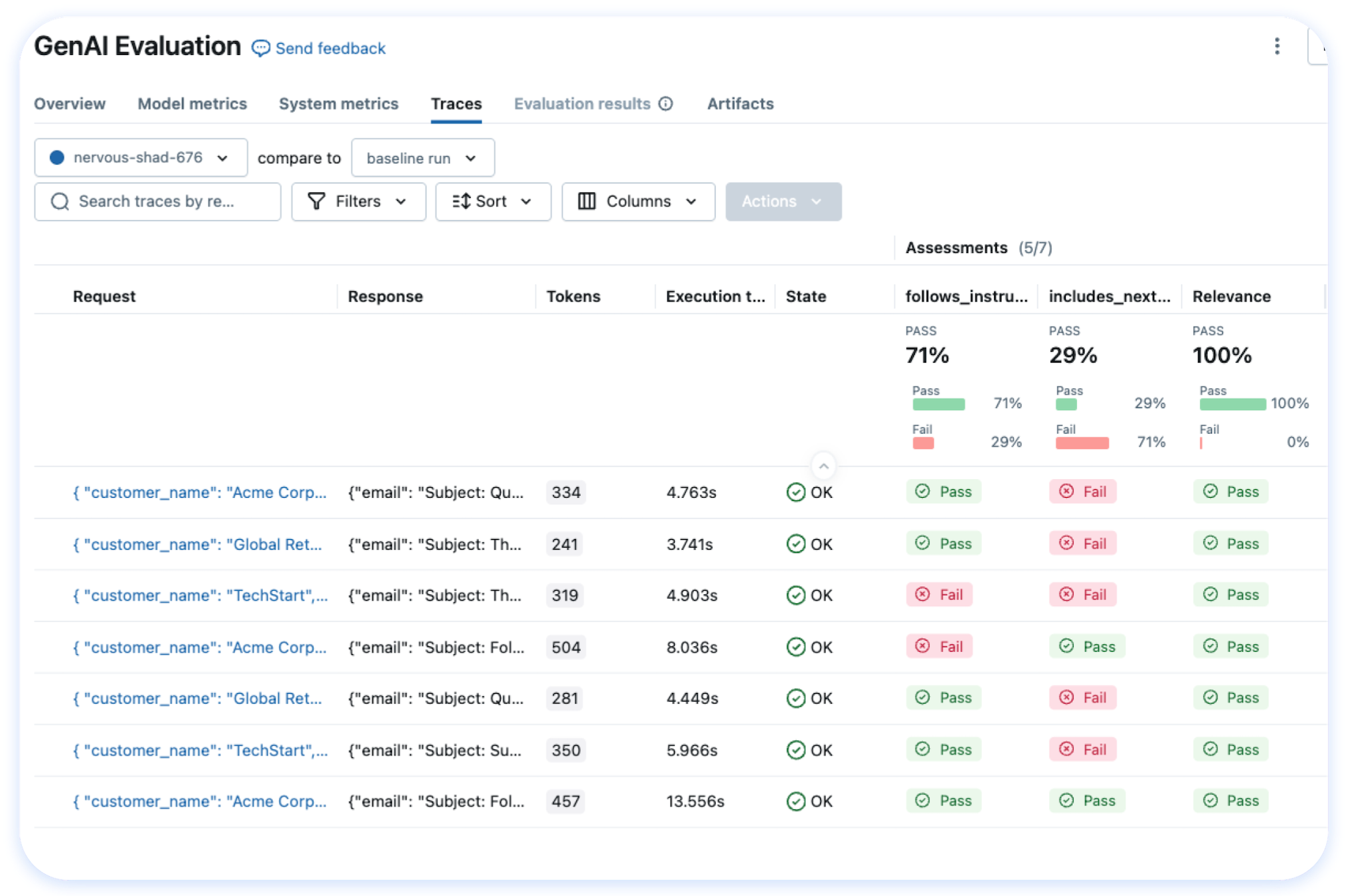

📊 LLM Evaluation: A suite of automated model evaluation tools, seamlessly integrated with experiment tracking to compare across multiple versions.

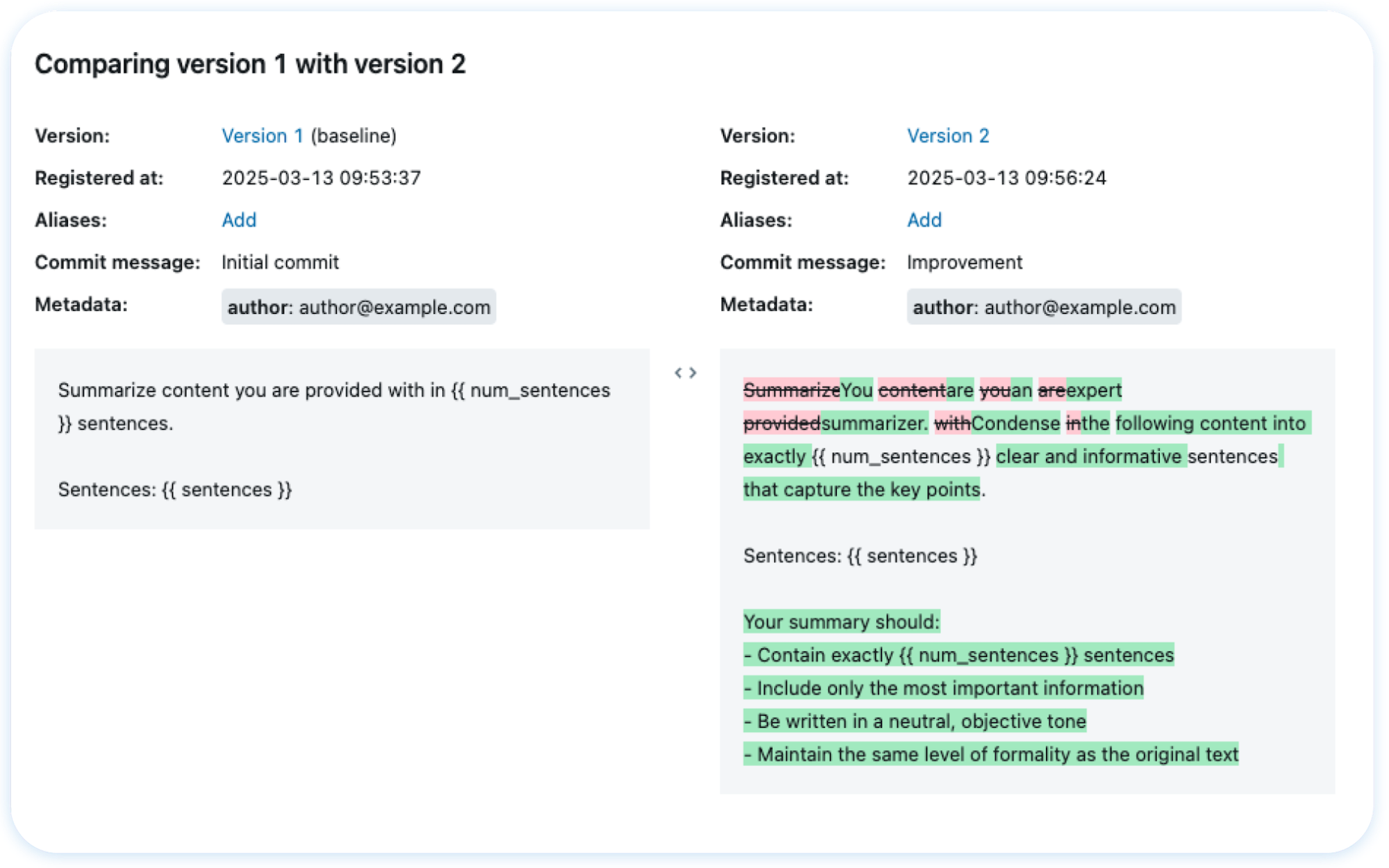

🤖 Prompt Management: Version, track, and reuse prompts across your organization, helping maintain consistency and improve collaboration in prompt development.

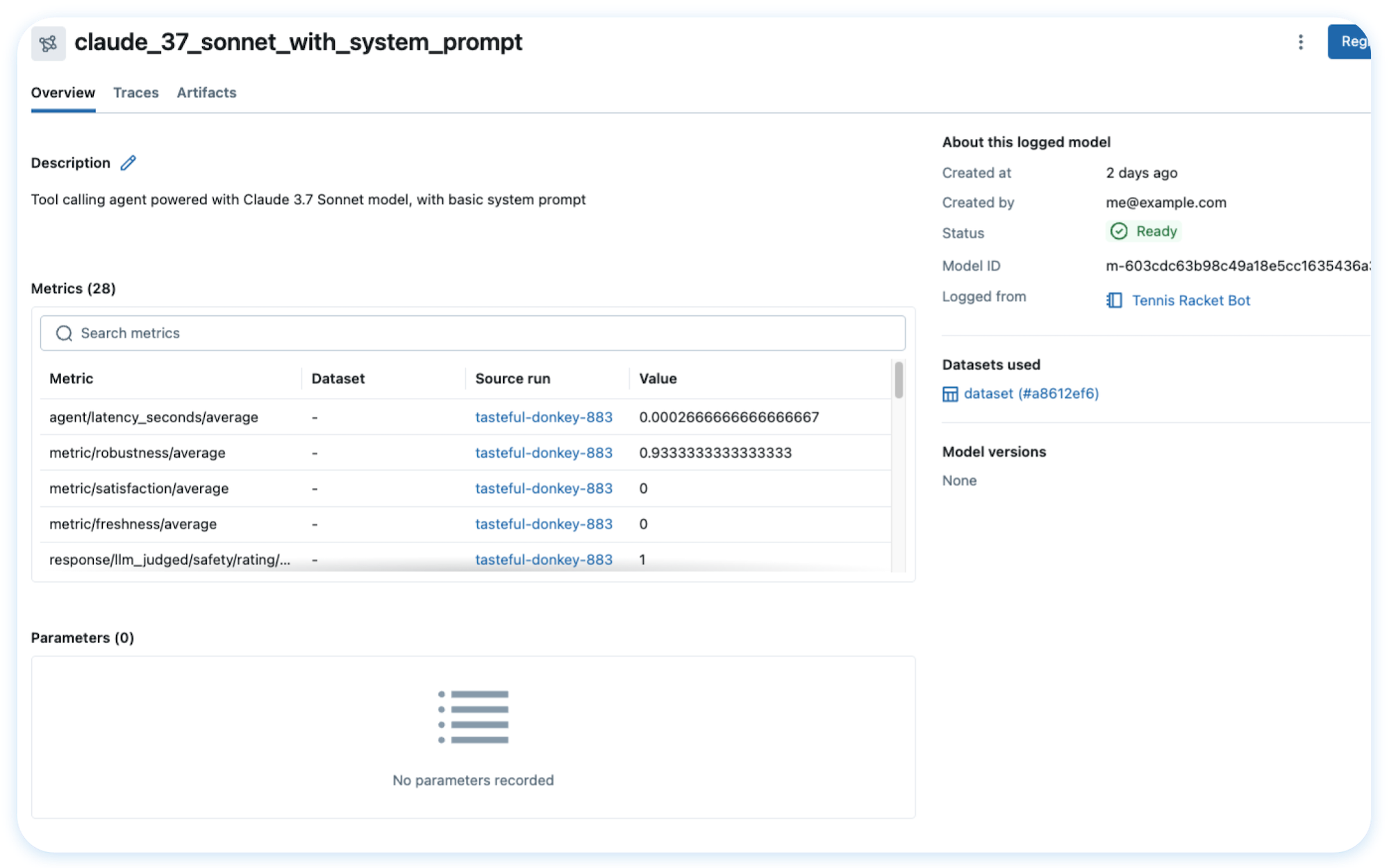

📦 App Version Tracking: MLflow keeps track of many moving parts in your AI applications, such as models, prompts, tools, and code, with end-to-end lineage.

🎓 For Data Scientists

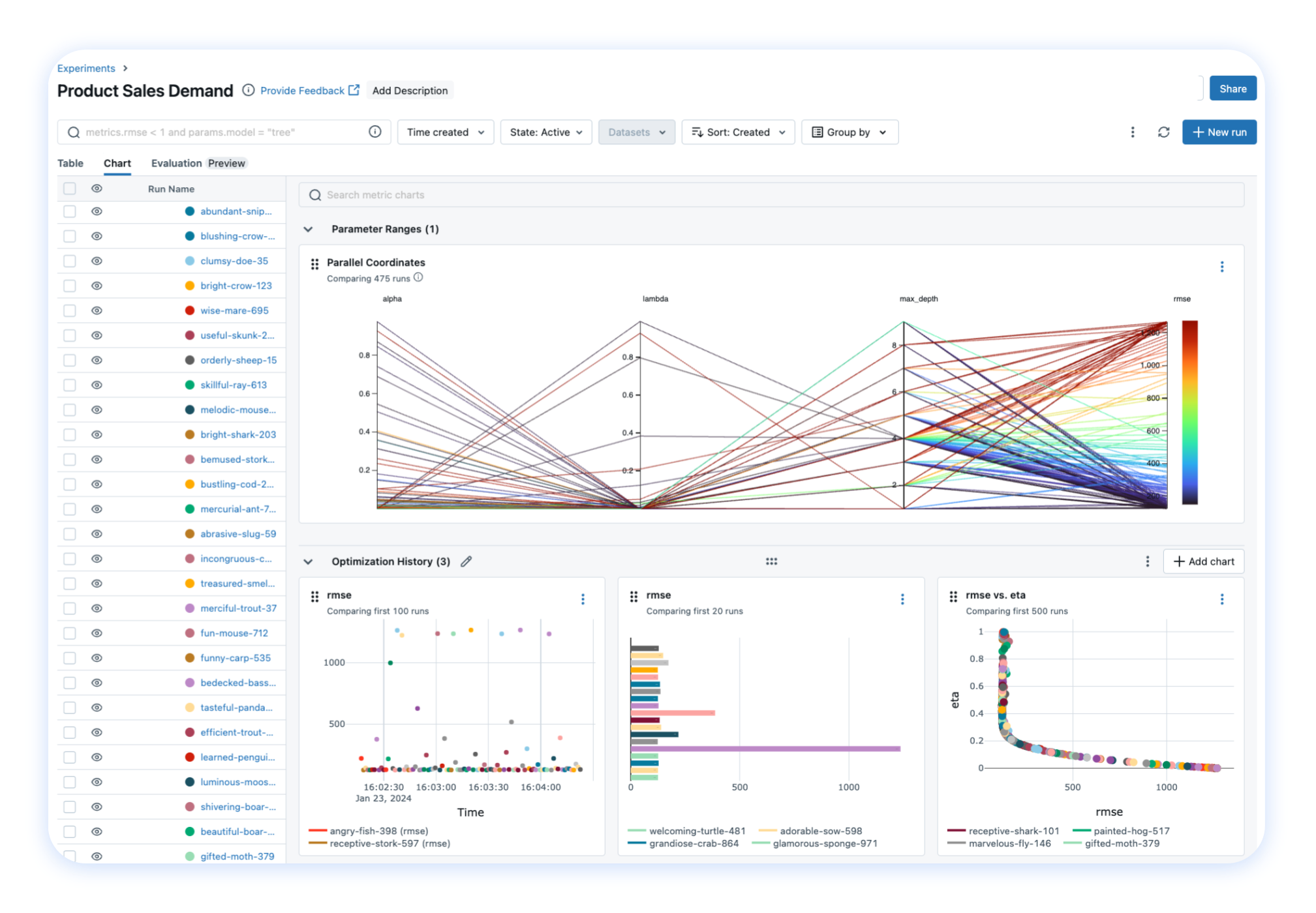

📝 Experiment Tracking: Track your models, parameters, metrics, and evaluation results in ML experiments and compare them using an interactive UI.

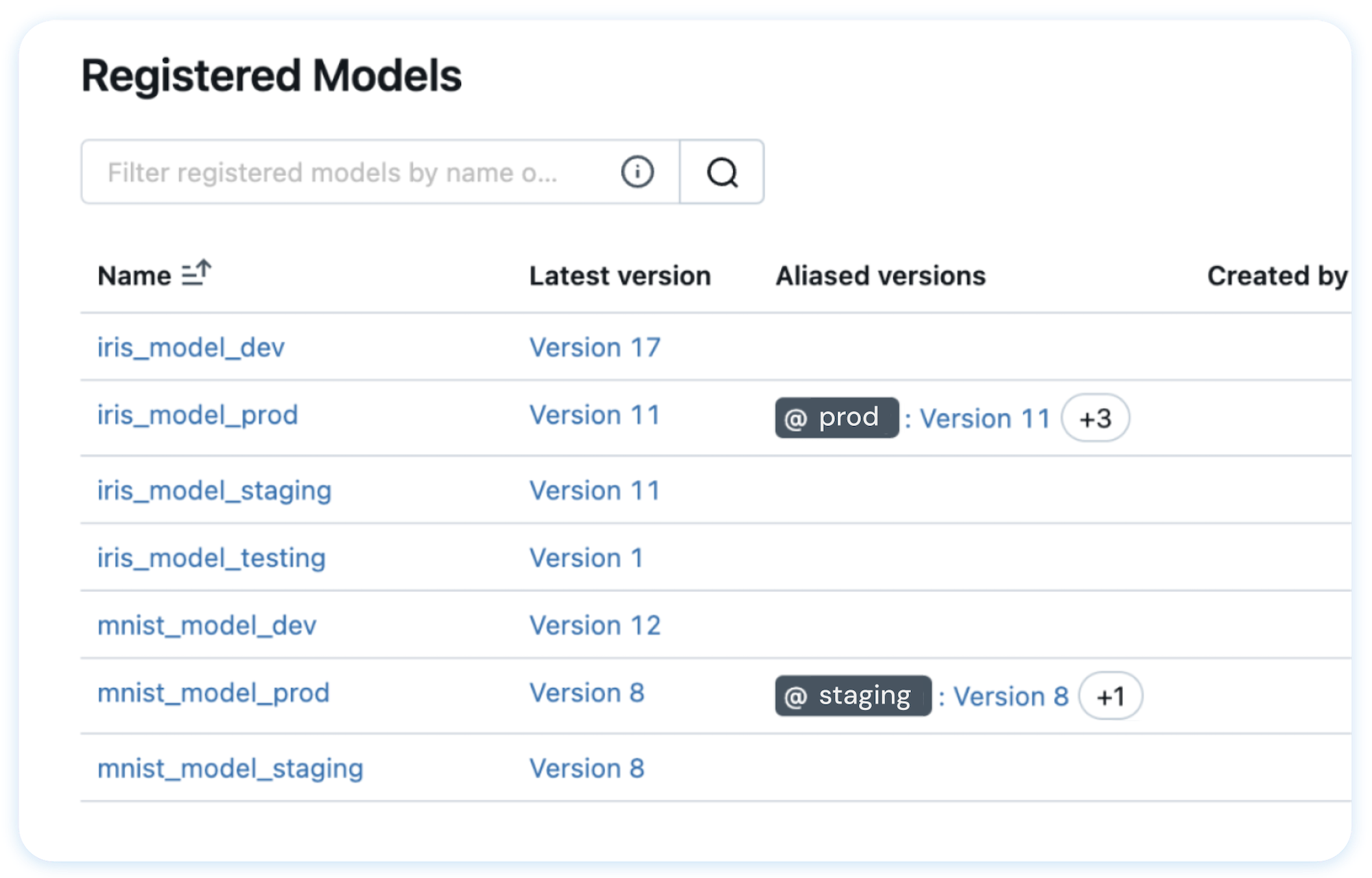

💾 Model Registry: A centralized model store designed to collaboratively manage the full lifecycle and deployment of machine learning models.

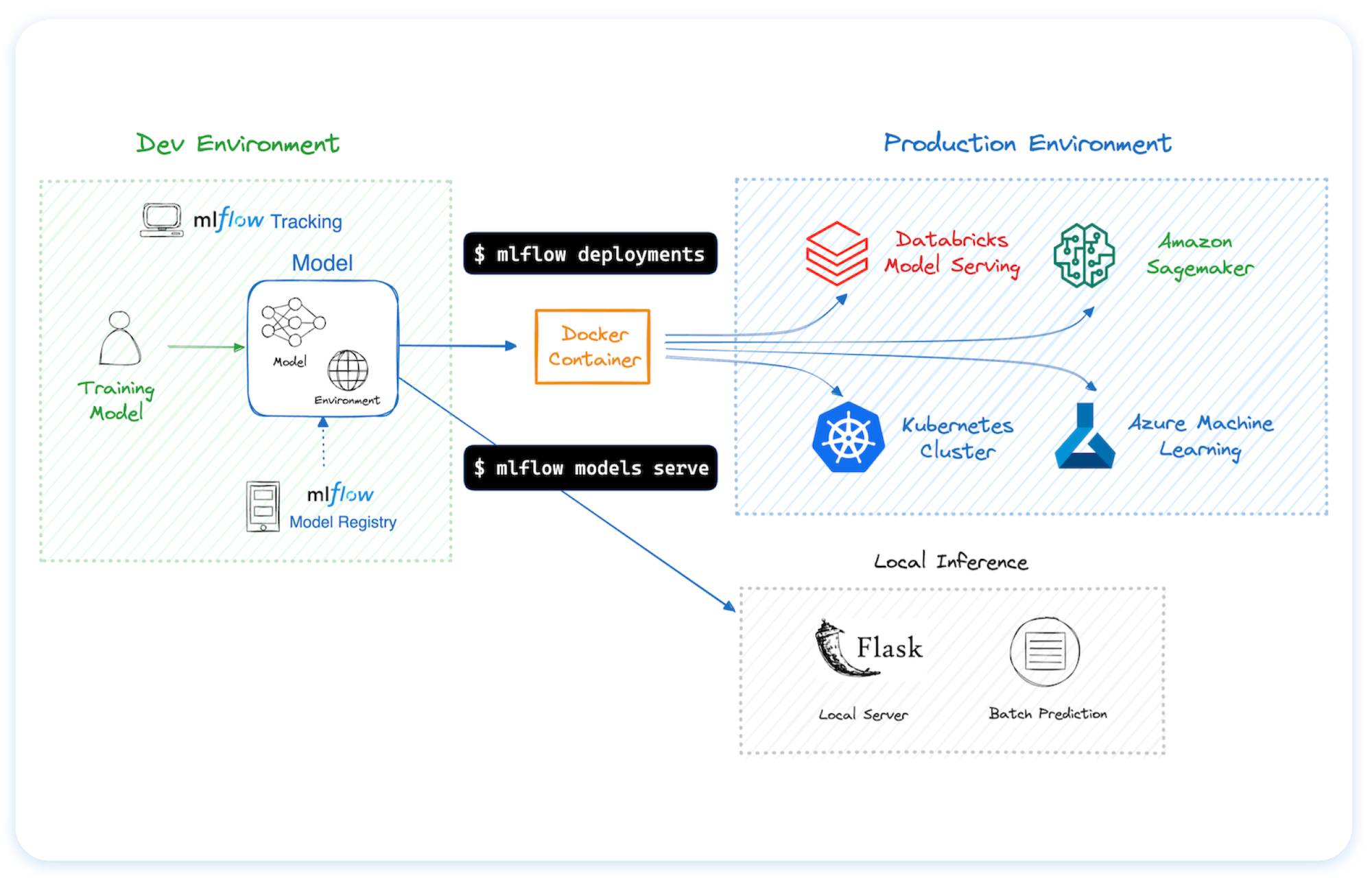

🚀 Deployment: Tools for seamless model deployment to batch and real-time scoring on platforms like Docker, Kubernetes, Azure ML, and AWS SageMaker.

🌐 Hosting MLflow Anywhere

You can run MLflow in many different environments, including local machines, on-premise servers, and cloud infrastructure.

Trusted by thousands of organizations, MLflow is now offered as a managed service by most major cloud providers.

For hosting MLflow on your own infrastructure, please refer to this guidance.
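When the same script has to run against a local server in development and a hosted one elsewhere, it can help to resolve the tracking server address in one place. The sketch below reads the `MLFLOW_TRACKING_URI` environment variable and falls back to a local address. `resolve_tracking_uri` is a hypothetical helper, and the fallback assumes an `mlflow server` listening on its default port 5000.

```python
import os

# Assumption: a local `mlflow server` listening on its default port.
DEFAULT_TRACKING_URI = "http://127.0.0.1:5000"


def resolve_tracking_uri(env=None) -> str:
    """Pick the tracking URI from the environment, or fall back to the local default."""
    env = os.environ if env is None else env
    uri = env.get("MLFLOW_TRACKING_URI", "").strip()
    return uri or DEFAULT_TRACKING_URI


print(resolve_tracking_uri())
```

The result can be passed to `mlflow.set_tracking_uri(...)`. MLflow also reads `MLFLOW_TRACKING_URI` on its own, so the helper mainly makes the fallback explicit.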

🗣️ Supported Programming Languages

🔗 Integrations

MLflow is natively integrated with many popular machine learning frameworks and GenAI libraries.

Usage Examples

Tracing (Observability) (Doc)

MLflow Tracing provides LLM observability for various GenAI libraries such as OpenAI, LangChain, LlamaIndex, DSPy, AutoGen, and more. To enable auto-tracing, call mlflow.xyz.autolog() before running your models, where xyz is the name of the library (for example, mlflow.openai.autolog()). Refer to the documentation for customization and manual instrumentation.

    import mlflow
    from openai import OpenAI

    # Enable tracing for OpenAI
    mlflow.openai.autolog()

    # Query OpenAI LLM normally
    response = OpenAI().chat.completions.create(
        model="gpt-4o-mini",
        messages=[{"role": "user", "content": "Hi!"}],
        temperature=0.1,
    )

Then navigate to the "Traces" tab in the MLflow UI to find the trace records for the OpenAI query.
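For code that no autolog integration covers, manual instrumentation is one option. The sketch below decorates plain functions with `mlflow.trace`, so each call is recorded as a span with its inputs and outputs. `build_prompt` and `answer` are hypothetical helpers, and the no-op fallback is an assumption added so the snippet still runs where MLflow is not installed.

```python
import functools

try:
    import mlflow

    # Real decorator: records the call's inputs and outputs as a span.
    trace = mlflow.trace
except ImportError:
    def trace(func):
        """Fallback used only when mlflow is not installed: call through unchanged."""
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            return func(*args, **kwargs)
        return wrapper


@trace
def build_prompt(question: str) -> str:
    # Hypothetical helper: each call becomes one span when mlflow is available.
    return f"Answer briefly: {question.strip()}"


@trace
def answer(question: str) -> str:
    # Traced calls made inside a traced function appear as child spans.
    prompt = build_prompt(question)
    return prompt.upper()


print(answer(" hi "))
```

The decorated functions return the same values as the undecorated ones, so tracing can be added without changing call sites.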

Evaluating LLMs, Prompts, and Agents (Doc)

The following example runs automatic evaluation for question-answering tasks with several built-in metrics.

    import os

    import openai
    import mlflow
    from mlflow.genai.scorers import Correctness, Guidelines

    client = openai.OpenAI(api_key=os.getenv("OPENAI_API_KEY"))

    # 1. Define a simple QA dataset
    dataset = [
        {
            "inputs": {"question": "Can MLflow manage prompts?"},
            "expectations": {"expected_response": "Yes!"},
        },
        {
            "inputs": {"question": "Can MLflow create a taco for my lunch?"},
            "expectations": {
                "expected_response": "No, unfortunately, MLflow is not a taco maker."
            },
        },
    ]

    # 2. Define a prediction function to generate responses
    def predict_fn(question: str) -> str:
        response = client.chat.completions.create(
            model="gpt-4o-mini", messages=[{"role": "user", "content": question}]
        )
        return response.choices[0].message.content

    # 3. Run the evaluation
    results = mlflow.genai.evaluate(
        data=dataset,
        predict_fn=predict_fn,
        scorers=[
            # Built-in LLM judge
            Correctness(),
            # Custom criteria using LLM judge
            Guidelines(name="is_english", guidelines="The answer must be in English"),
        ],
    )

Navigate to the "Evaluations" tab in the MLflow UI to find the evaluation results.
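In the example above, the keys of each record's "inputs" dictionary line up with the parameter names of predict_fn. A quick pre-flight check can catch a mismatch before any LLM calls are made. This is a sketch using only the standard library. `check_dataset` is a hypothetical helper, not an MLflow API, and it assumes the inputs are passed to predict_fn as keyword arguments.

```python
import inspect


def check_dataset(dataset, predict_fn):
    """Return a list of human-readable problems; an empty list means the shapes line up."""
    params = inspect.signature(predict_fn).parameters
    accepts_any_kwargs = any(
        p.kind is inspect.Parameter.VAR_KEYWORD for p in params.values()
    )
    # Parameters that must be supplied because they have no default value.
    required = {
        name
        for name, p in params.items()
        if p.default is inspect.Parameter.empty
        and p.kind
        in (inspect.Parameter.POSITIONAL_OR_KEYWORD, inspect.Parameter.KEYWORD_ONLY)
    }
    problems = []
    for i, record in enumerate(dataset):
        inputs = record.get("inputs")
        if not isinstance(inputs, dict):
            problems.append(f"record {i}: 'inputs' must be a dict")
            continue
        missing = required - set(inputs)
        unexpected = set() if accepts_any_kwargs else set(inputs) - set(params)
        if missing:
            problems.append(f"record {i}: missing inputs {sorted(missing)}")
        if unexpected:
            problems.append(f"record {i}: unexpected inputs {sorted(unexpected)}")
    return problems
```

Calling `check_dataset(dataset, predict_fn)` on the dataset above should return an empty list, since both records supply exactly the `question` argument.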

Tracking Model Training (Doc)

The following example trains a simple regression model with scikit-learn, while enabling MLflow's autologging feature for experiment tracking.

    import mlflow
    from sklearn.model_selection import train_test_split
    from sklearn.datasets import load_diabetes
    from sklearn.ensemble import RandomForestRegressor

    # Enable MLflow's automatic experiment tracking for scikit-learn
    mlflow.sklearn.autolog()

    # Load the training dataset
    db = load_diabetes()
    X_train, X_test, y_train, y_test = train_test_split(db.data, db.target)

    rf = RandomForestRegressor(n_estimators=100, max_depth=6, max_features=3)

    # MLflow triggers logging automatically upon model fitting
    rf.fit(X_train, y_train)

Once the above code finishes, run the following command in a separate terminal and access the MLflow UI via the printed URL. An MLflow Run should be created automatically, tracking the training dataset, hyperparameters, performance metrics, the trained model, dependencies, and more.

mlflow server

💭 Support

- For help or questions about MLflow usage (e.g. "how do I do X?") visit the documentation.

- In the documentation, you can ask our AI-powered chatbot questions. Click the "Ask AI" button in the bottom-right corner.

- Join virtual events such as office hours and meetups.

- To report a bug, file a documentation issue, or submit a feature request, please open a GitHub issue.

- For release announcements and other discussions, please subscribe to our mailing list (mlflow-users@googlegroups.com) or join us on Slack.

🤝 Contributing

We happily welcome contributions to MLflow!

- Submit bug reports and feature requests

- Contribute to good-first-issues and help-wanted issues

- Write about MLflow and share your experience

Please see our contribution guide to learn more about contributing to MLflow.


✏️ Citation

If you use MLflow in your research, please cite it using the "Cite this repository" button at the top of the GitHub repository page, which will provide you with citation formats including APA and BibTeX.

👥 Core Members

MLflow is currently maintained by the following core members with significant contributions from hundreds of exceptionally talented community members.


Download files


File details

Details for the file mlflow-3.9.0.tar.gz.

File metadata

- Download URL: mlflow-3.9.0.tar.gz

- Upload date:

- Size: 9.1 MB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/6.2.0 CPython/3.9.24

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 47a41fa22107b0ceee1f91e2184759ebfaffa31d7913b70318b78fb5369e52ec |
| MD5 | 7a31faf4f52da69b8a0d42ede59d31ce |
| BLAKE2b-256 | 95e59f8481b879329ed0f5317eda459fb7eebe9eb9bae7d99ba6a7d68074f619 |

File details

Details for the file mlflow-3.9.0-py3-none-any.whl.

File metadata

- Download URL: mlflow-3.9.0-py3-none-any.whl

- Upload date:

- Size: 9.7 MB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/6.2.0 CPython/3.9.24

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 280f94854e5ece42fc5538180b276661c62dbfb2c848a98e8873e78915379ac6 |
| MD5 | 12d322a58e4c8d2bd9064cf8e0c9f060 |
| BLAKE2b-256 | e9ce871d1168206164242856b5cbf327693b708d6a63c3163d90f89ad5e70807 |
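The SHA256 digests listed above can be used to check a downloaded file before installing it. The following is a minimal sketch with Python's standard hashlib module. `sha256_of` and `matches` are hypothetical helper names, and the file is assumed to sit in the current directory under the name shown in the file details.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20) -> str:
    """Compute the SHA256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with Path(path).open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches(path, expected_hex: str) -> bool:
    """Compare a file's SHA256 digest with a published value, ignoring case and whitespace."""
    return sha256_of(path) == expected_hex.strip().lower()
```

For example, `matches("mlflow-3.9.0-py3-none-any.whl", "280f94854e5ece42fc5538180b276661c62dbfb2c848a98e8873e78915379ac6")` should return True for an intact copy of the wheel.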