Nebulae

A deep learning framework built on PyTorch and widely used image processing libraries. It aims to offer a set of useful tools and functions.

🚀 Spotlight

Several frequently used features are integrated with simple interfaces.

🛠️ Integrated Features - EMA module, multi-source datasets, training log plotting, timer, etc.

🎯 Simplified API - Unified API for distributed and single-GPU training.

⚡️ Efficiency - Data augmentations are reimplemented in NumPy, which is faster than PIL.

🧩 High Compatibility - Networks can be built by mixing Nebulae components with plain PyTorch seamlessly.
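As a rough illustration of what an EMA module does, the sketch below keeps an exponential moving average of named parameters. It is not Nebulae's implementation: the `EMA` class, its `shadow` attribute and the `decay` argument are hypothetical names chosen for this example, and plain floats stand in for tensors so that it runs without PyTorch.

```python
class EMA:
    """Track an exponential moving average of named parameters.

    Hypothetical helper for illustration only; plain floats stand in
    for tensors so the sketch runs without PyTorch.
    """

    def __init__(self, params, decay=0.99):
        if not 0.0 <= decay < 1.0:
            raise ValueError("decay must be in [0, 1)")
        self.decay = decay
        # The shadow copy starts out equal to the current parameters.
        self.shadow = dict(params)

    def update(self, params):
        """Blend the latest parameter values into the shadow copy."""
        for name, value in params.items():
            self.shadow[name] = (
                self.decay * self.shadow[name] + (1.0 - self.decay) * value
            )


params = {"w": 1.0, "b": 0.0}
ema = EMA(params, decay=0.9)

params["w"] = 2.0  # pretend an optimizer step changed the weight
ema.update(params)
print(ema.shadow)  # "w" has moved one tenth of the way from 1.0 toward 2.0
```

A larger `decay` makes the averaged weights change more slowly, which is why values close to 1 are common when the average is used for evaluation.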

⚡ Quick Start

📸 Utility

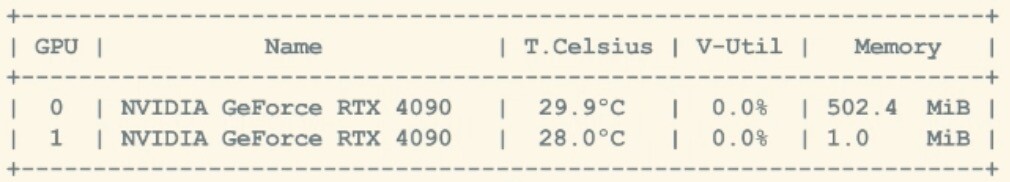

Obtain GPU stats after some training epochs.

    import nebulae as neb
    from nebulae import *

    gu = kit.GPUtil()
    gu.monitor()
    for epoch in range(10):
        # --- training code --- #
        pass  # placeholder so the loop body is valid Python
    gu.status()
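The monitor-then-report pattern above can be sketched with a background thread that polls a reading while training runs. This is only a guess at the general idea, not how `kit.GPUtil` works internally: `PeakMonitor`, `sample_fn` and the fake readings are hypothetical, and the method names merely mirror `monitor()` and `status()` from the snippet above.

```python
import threading
import time


class PeakMonitor:
    """Poll sample_fn in a background thread and remember the peak value."""

    def __init__(self, sample_fn, interval=0.01):
        self._sample_fn = sample_fn
        self._interval = interval
        self._stop = threading.Event()
        self._thread = None
        self.peak = None
        self.num_samples = 0

    def _run(self):
        while not self._stop.is_set():
            value = self._sample_fn()
            self.peak = value if self.peak is None else max(self.peak, value)
            self.num_samples += 1
            # Sleep until the next poll, but wake up early if asked to stop.
            self._stop.wait(self._interval)

    def monitor(self):
        """Start polling in the background."""
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def status(self):
        """Stop polling and return a summary of what was observed."""
        self._stop.set()
        self._thread.join()
        return {"peak": self.peak, "samples": self.num_samples}


# Hypothetical readings standing in for real GPU memory queries (in MiB).
fake_readings = iter([100, 300, 200])


def fake_sample():
    return next(fake_readings, 0)


monitor = PeakMonitor(fake_sample, interval=0.001)
monitor.monitor()
while monitor.num_samples < 3:  # stand-in for the training loop
    time.sleep(0.001)
summary = monitor.status()
print(summary["peak"])
```

In a real setting `sample_fn` would query the device, so the training loop itself stays free of bookkeeping code.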

Automatically select unoccupied GPUs, which is useful on a shared machine.

    import nebulae as neb
    from nebulae import *

    # select 4 GPUs that each have 2GB or more memory left
    engine = npe(device=power.GPU, ngpu=4, least_mem=2048)
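For readers curious how such a selection could work, here is a minimal sketch based on the output of `nvidia-smi --query-gpu=index,memory.free --format=csv,noheader,nounits`. It is an assumption about the general approach, not Nebulae's actual logic: `parse_free_memory`, `select_gpus` and `query_gpus` are hypothetical helpers, the sample text is made up, and `least_mem` is assumed to be in MiB as reported by `nvidia-smi`.

```python
import subprocess

QUERY = [
    "nvidia-smi",
    "--query-gpu=index,memory.free",
    "--format=csv,noheader,nounits",
]


def parse_free_memory(csv_text):
    """Parse 'index, free_mib' lines into a list of (index, free_mib) tuples."""
    gpus = []
    for line in csv_text.strip().splitlines():
        index, free = (field.strip() for field in line.split(","))
        gpus.append((int(index), int(free)))
    return gpus


def select_gpus(gpus, ngpu, least_mem):
    """Pick ngpu device indices with at least least_mem MiB free, emptiest first."""
    eligible = [gpu for gpu in gpus if gpu[1] >= least_mem]
    if len(eligible) < ngpu:
        raise RuntimeError(
            f"only {len(eligible)} GPU(s) have {least_mem} MiB free, need {ngpu}"
        )
    eligible.sort(key=lambda gpu: gpu[1], reverse=True)
    return [index for index, _ in eligible[:ngpu]]


def query_gpus():
    """Run nvidia-smi and parse its output (requires an NVIDIA driver)."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_free_memory(result.stdout)


# Made-up sample output, so the sketch runs on machines without a GPU.
sample = "0, 512\n1, 8000\n2, 4096\n3, 1024\n"
chosen = select_gpus(parse_free_memory(sample), ngpu=2, least_mem=2048)
print(chosen)  # → [1, 2]
```

On a machine with NVIDIA drivers installed, `select_gpus(query_gpus(), ngpu=4, least_mem=2048)` would apply the same filter to live readings.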

The complete distributed training and test code is in ./examples/demo_core.py

📦 Installation

Install Nebulae from PyPI with pip:

    pip install nebulae

For development, you can also build an image from the provided Dockerfile. It is recommended to adjust the library versions in the Dockerfile as needed and to have nvidia-docker installed on your machine.

    sudo docker build -t nebulae:std -f Dockerfile.std .
    sudo docker run -it --gpus all --ipc=host --ulimit memlock=-1 nebulae:std

The latest version supports PyTorch 1.6 and above.
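To check an environment against that requirement, a small helper along these lines may be handy. It is a sketch, not part of Nebulae: `meets_minimum` is a hypothetical name, and it only compares the major and minor components of a version string such as the one in `torch.__version__`.

```python
import re


def meets_minimum(version, minimum=(1, 6)):
    """Return True if a version string such as '1.13.1+cu117' is >= minimum."""
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognised version string: {version!r}")
    found = (int(match.group(1)), int(match.group(2)))
    # Compare as integer tuples so that 1.10 correctly ranks above 1.6.
    return found >= tuple(minimum)


print(meets_minimum("1.10.0"))  # → True
print(meets_minimum("1.5.1"))   # → False
```

With PyTorch installed, the call would be `meets_minimum(torch.__version__)`.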

❤️ Support

If you find Nebulae helpful, consider giving it a ⭐ on GitHub! ▶️ https://github.com/SeriaQ/Nebulae

File details

Details for the file nebulae-0.6.27.tar.gz.

File metadata

- Download URL: nebulae-0.6.27.tar.gz

- Upload date:

- Size: 58.8 kB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/6.1.0 CPython/3.10.12

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 6146874aa34fe99038a172f9928a76d081227bce75e21ba9a2358a1ef4d370ae |
| MD5 | 5b0f9b625d0a0c1154db1a98d987c04a |
| BLAKE2b-256 | bd03f9243677fc3d74dd946d87d74be2645c9d0b18ae58bd6866cb537818d541 |

File details

Details for the file nebulae-0.6.27-py3-none-any.whl.

File metadata

- Download URL: nebulae-0.6.27-py3-none-any.whl

- Upload date:

- Size: 64.3 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/6.1.0 CPython/3.10.12

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 9c629e93caf5a6947efa45d08af51deee6590196495194f40dd18d922fcfdec1 |
| MD5 | 9e067a0aa9b54f6212307fbec972ba33 |
| BLAKE2b-256 | 8cc85a8a5a9d1ad68f518c4be1a4ac03389cb68d075d98d53baf552482432bd0 |
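A downloaded file can be checked against the SHA256 digests listed above with Python's standard `hashlib` module. The helper names `sha256_of` and `verify` are hypothetical, and the file path in the usage comment assumes the wheel was saved to the current directory.

```python
import hashlib


def sha256_of(path, chunk_size=1 << 20):
    """Return the hex SHA256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify(path, expected_hex):
    """Return True if the file's SHA256 digest matches expected_hex."""
    return sha256_of(path) == expected_hex.strip().lower()


# Example usage (assumes the wheel is in the current directory):
# verify(
#     "nebulae-0.6.27-py3-none-any.whl",
#     "9c629e93caf5a6947efa45d08af51deee6590196495194f40dd18d922fcfdec1",
# )
```

Reading in chunks keeps memory use flat regardless of file size, which matters little for a package of this size but costs nothing.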