Time series forecasting suite using deep learning models


Neural 🧠 Forecast

User friendly state-of-the-art neural forecasting models.

NeuralForecast offers a large collection of neural forecasting models focused on usability and robustness. The models range from classic networks like MLP and RNNs to novel proven contributions like NBEATS and TFT, among other architectures.

💻 Installation

PyPI

You can install NeuralForecast's released version from the Python Package Index (PyPI) with pip:

pip install neuralforecast

(Installing inside a Python virtual environment or a conda environment is recommended.)

Conda

You can also install NeuralForecast's released version from conda with:

conda install -c conda-forge neuralforecast

(Installing inside a Python virtual environment or a conda environment is recommended.)

Dev Mode

If you want to make some modifications to the code and see the effects in real time (without reinstalling), follow the steps below:

git clone https://github.com/Nixtla/neuralforecast.git

cd neuralforecast

pip install -e .
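
To confirm which installation Python is actually picking up (the released package or the editable clone), a quick check along these lines may help. This is a minimal sketch using only the standard library; the helper name `describe_install` is hypothetical and not part of NeuralForecast.

```python
from importlib import metadata, util


def describe_install(package="neuralforecast"):
    """Report the installed version and import location of a package.

    Hypothetical helper for illustration; it only uses the standard library.
    """
    try:
        version = metadata.version(package)
    except metadata.PackageNotFoundError:
        version = None  # no distribution with this name in the environment

    try:
        spec = util.find_spec(package)
    except (ImportError, ValueError):
        spec = None
    # For an editable install, the location should point into the cloned repo.
    location = spec.origin if spec is not None else None
    return {"package": package, "version": version, "location": location}


info = describe_install()
print(info)
```

If `version` and `location` are both `None`, the package is not visible from the active environment.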

🏃🏻♀️🏃 Getting Started

To get started, follow this guide, where we explore NBEATS and extend it towards probabilistic predictions and exogenous variables.

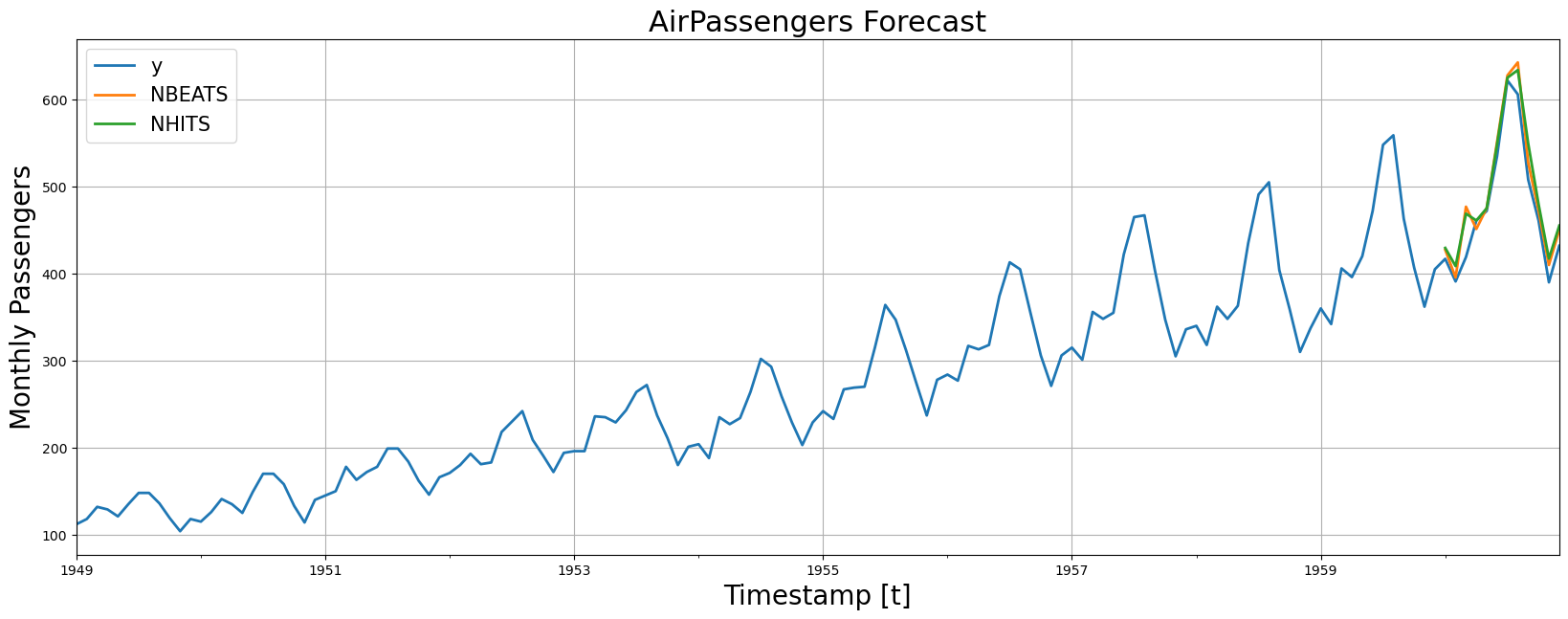

Or follow this simple example where we train the NBEATS model and predict the classic Box-Jenkins air passengers dataset.

import numpy as np

import pandas as pd

from IPython.display import display, Markdown

import matplotlib.pyplot as plt

from neuralforecast import NeuralForecast

from neuralforecast.models import NBEATS, NHITS

from neuralforecast.utils import AirPassengersDF

# Split data and declare panel dataset

Y_df = AirPassengersDF

Y_train_df = Y_df[Y_df.ds<='1959-12-31'] # 132 train

Y_test_df = Y_df[Y_df.ds>'1959-12-31'] # 12 test

# Fit and predict with NBEATS and NHITS models

horizon = len(Y_test_df)

models = [NBEATS(input_size=2 * horizon, h=horizon, max_steps=50),

          NHITS(input_size=2 * horizon, h=horizon, max_steps=50)]

nf = NeuralForecast(models=models, freq='M')

nf.fit(df=Y_train_df)

Y_hat_df = nf.predict().reset_index()

# Plot predictions

fig, ax = plt.subplots(1, 1, figsize = (20, 7))

Y_hat_df = Y_test_df.merge(Y_hat_df, how='left', on=['unique_id', 'ds'])

plot_df = pd.concat([Y_train_df, Y_hat_df]).set_index('ds')

plot_df[['y', 'NBEATS', 'NHITS']].plot(ax=ax, linewidth=2)

ax.set_title('AirPassengers Forecast', fontsize=22)

ax.set_ylabel('Monthly Passengers', fontsize=20)

ax.set_xlabel('Timestamp [t]', fontsize=20)

ax.legend(prop={'size': 15})

ax.grid()
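
The example above works on a long-format panel with `unique_id`, `ds`, and `y` columns and holds out the final 12 months as the test set. The split logic can be sketched without any library, as below. The values are made-up placeholders rather than the real AirPassengers data, and the helper names `month_ends` and `split_by_date` are hypothetical.

```python
from datetime import date, timedelta


def month_ends(start_year, n_months):
    """Return n_months consecutive month-end dates, starting in January."""
    ends = []
    for i in range(n_months):
        year, month = start_year + i // 12, i % 12 + 1
        # The day before the first day of the following month is the month end.
        if month == 12:
            first_of_next = date(year + 1, 1, 1)
        else:
            first_of_next = date(year, month + 1, 1)
        ends.append(first_of_next - timedelta(days=1))
    return ends


def split_by_date(rows, cutoff):
    """Split long-format rows into train (ds <= cutoff) and test (ds > cutoff)."""
    train = [row for row in rows if row["ds"] <= cutoff]
    test = [row for row in rows if row["ds"] > cutoff]
    return train, test


# 144 monthly placeholder observations for a single series (1949 to 1960).
rows = [
    {"unique_id": "series_1", "ds": ds, "y": float(i)}
    for i, ds in enumerate(month_ends(1949, 144))
]
train, test = split_by_date(rows, date(1959, 12, 31))

horizon = len(test)       # forecast horizon equals the held-out length
input_size = 2 * horizon  # same window sizing as in the example above
print(len(train), horizon, input_size)  # 132 12 24
```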

🎉 New!

Quick Start: Minimal usage example of the NeuralForecast library on Box's AirPassengers Data.

Multi Quantile NBEATS Example: Produce accurate and efficient probabilistic forecasts in long-horizon settings, outperforming AutoARIMA's accuracy in a fraction of the time.

Long Horizon NHITS Example: Load, train, and tune hyperparameters to achieve Long-Horizon SoTA. Outperform Award Winning Transformers by 25% in 50x less time.

Hierarchical Forecasting HINT Example: Obtain accurate probabilistic coherent predictions for hierarchical datasets.
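
"Coherent" here means that the forecast for every aggregate series equals the sum of the forecasts of its children. The sketch below illustrates that property with plain bottom-up aggregation of point forecasts. It is not the HINT method, and the hierarchy, series names, and numbers are made up for illustration.

```python
def bottom_up(bottom_forecasts, hierarchy):
    """Aggregate bottom-level forecasts so each parent equals the sum of its children.

    bottom_forecasts maps leaf names to lists of per-step forecasts;
    hierarchy maps each parent name to the list of its children.
    """
    coherent = {name: list(values) for name, values in bottom_forecasts.items()}

    def resolve(node):
        # Leaves and already-aggregated parents are returned directly.
        if node in coherent:
            return coherent[node]
        children = [resolve(child) for child in hierarchy[node]]
        coherent[node] = [sum(step) for step in zip(*children)]
        return coherent[node]

    for parent in hierarchy:
        resolve(parent)
    return coherent


# Hypothetical two-level hierarchy with a two-step horizon.
hierarchy = {"total": ["north", "south"], "north": ["north_a", "north_b"]}
bottom = {"north_a": [10.0, 12.0], "north_b": [5.0, 6.0], "south": [20.0, 18.0]}

forecasts = bottom_up(bottom, hierarchy)
print(forecasts["north"])  # [15.0, 18.0]
print(forecasts["total"])  # [35.0, 36.0]
```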

🔥 Highlights

- Unified StatsForecast interface NeuralForecast().fit(Y_df).predict(h=7).

- Industry/Competition proven ESRNN, NBEATS, and TFT implementations.

- Improve accuracy and speed over classic ARIMA/ETS in two lines of code. Check the experiments here.

- Predict Series with little to no history, using Transfer learning. Check the experiments here.

🎊 Features

- Exogenous Variables: Static, lagged and future exogenous support.

- Forecast Interpretability: Plot trend, seasonality and exogenous NBEATS, NHITS, TFT, ESRNN prediction components.

- Probabilistic Forecasting: Simple model adapters for quantile losses and parametric distributions.

- Train and Evaluation Losses: Scale-dependent, percentage and scale-independent errors, and parametric likelihoods.

- Automatic Model Selection: Parallelized automatic hyperparameter tuning that efficiently searches for the best validation configuration.

- Simple Interface: Unified SKLearn interface for StatsForecast and MLForecast compatibility.

- Model Collection: Out-of-the-box implementation of MLP, LSTM, RNN, TCN, DilatedRNN, NBEATS, NHITS, ESRNN, TFT, Informer, PatchTST and HINT. See the entire collection here.
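
The loss families named in the list above can be made concrete with a small sketch. The functions below are textbook definitions written in plain Python, not the library's own implementations: MAE (scale-dependent), MAPE (percentage), MASE (scale-independent), and the quantile (pinball) loss used for probabilistic forecasts. All names and numbers are illustrative assumptions.

```python
def mae(y, y_hat):
    """Mean absolute error: scale-dependent, in the units of the series."""
    return sum(abs(a - b) for a, b in zip(y, y_hat)) / len(y)


def mape(y, y_hat):
    """Mean absolute percentage error: undefined when any actual value is zero."""
    return sum(abs(a - b) / abs(a) for a, b in zip(y, y_hat)) / len(y)


def mase(y, y_hat, y_train, season=1):
    """MAE scaled by the in-sample MAE of a seasonal naive forecast."""
    naive_errors = [
        abs(y_train[i] - y_train[i - season]) for i in range(season, len(y_train))
    ]
    scale = sum(naive_errors) / len(naive_errors)
    return mae(y, y_hat) / scale


def quantile_loss(y, y_hat, q):
    """Pinball loss: under-forecasts are weighted by q, over-forecasts by 1 - q."""
    total = 0.0
    for a, b in zip(y, y_hat):
        diff = a - b
        total += max(q * diff, (q - 1) * diff)
    return total / len(y)


# Made-up values for illustration only.
y_train = [10.0, 12.0, 14.0, 16.0]
y, y_hat = [18.0, 20.0], [17.0, 22.0]

print(mae(y, y_hat))                 # 1.5
print(mase(y, y_hat, y_train))       # 0.75
print(quantile_loss(y, y_hat, 0.5))  # 0.75
```

At `q=0.5` the quantile loss is half the MAE, which is why the median forecast is the one that minimizes absolute error.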

Missing something? Please open an issue or write us in

📖 Why?

There is a shared belief in Neural forecasting methods' capacity to improve our pipeline's accuracy and efficiency.

Unfortunately, available implementations and published research are yet to realize neural networks' potential. They are hard to use and continuously fail to improve over statistical methods while being computationally prohibitive. For this reason, we created NeuralForecast, a library favoring proven accurate and efficient models focusing on their usability.

🔬 Accuracy & ⏲ Speed

Industry/Competition Proven Methods

An extensive empirical evaluation is critical to generate confidence and promote the adoption and development of novel methods. For this reason, we replicate and verify the results of our implementation of the following industry/competition-proven methods: ESRNN, NBEATS, NHITS, and TFT. If you are interested in reproducing the results, check the experiments here.

Simple and Efficient Method Comparison

Like core.StatsForecast, the core.NeuralForecast wrapper class allows us to easily compare any model in the collection to select or ensemble the best performing methods. Additionally, it offers a high-end interface that operates with (potentially large) sets of time series data stored in pandas DataFrames. The core.NeuralForecast class efficiently parallelizes computation across GPU resources. Check the experiments here.
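
Selecting or ensembling the best performing methods can be sketched as follows, assuming each model's forecasts for a validation window are already available as plain lists. The model names and numbers are made-up placeholders, not results from any real model, and the helper functions are hypothetical rather than part of the library.

```python
def mean_absolute_error(y, y_hat):
    """Average absolute difference between actuals and forecasts."""
    return sum(abs(a - b) for a, b in zip(y, y_hat)) / len(y)


def select_best(y_val, forecasts):
    """Return the name of the model with the lowest validation MAE, plus all scores."""
    scores = {
        name: mean_absolute_error(y_val, pred) for name, pred in forecasts.items()
    }
    best = min(scores, key=scores.get)
    return best, scores


def mean_ensemble(forecasts):
    """Average the forecasts of all models, step by step."""
    return [sum(step) / len(step) for step in zip(*forecasts.values())]


# Placeholder validation data and forecasts for two hypothetical models.
y_val = [100.0, 110.0, 120.0]
forecasts = {
    "model_a": [98.0, 112.0, 121.0],
    "model_b": [101.0, 109.0, 124.0],
}

best, scores = select_best(y_val, forecasts)
ensemble = mean_ensemble(forecasts)
print(best)      # model_a
print(ensemble)  # [99.5, 110.5, 122.5]
```

In this toy case the simple average has a lower validation error than either model alone, which is the usual motivation for trying an ensemble before committing to a single method.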

📖 Documentation (WIP)

The documentation page contains the models' code documentation, methods, utils, and other tutorials. Docstrings accompany most code.

🔨 How to contribute

If you wish to contribute to the project, please refer to our contribution guidelines.

📚 References

This work is highly influenced by the fantastic work of previous contributors and other scholars on the neural forecasting methods presented here. We want to highlight the work of Boris Oreshkin, Slawek Smyl, Bryan Lim, and David Salinas. We refer to Benidis et al. for a comprehensive survey of neural forecasting methods.

Contributors ✨

Thanks goes to these wonderful people (emoji key):

- fede 💻 🐛 📖

- Greg DeVos 🤔

- Cristian Challu 💻

- mergenthaler 📖 💻

- Kin 💻 🐛 🔣

- José Morales 💻

- Alejandro 💻

- stefanialvs 🎨

- Ikko Ashimine 🐛

- vglaucus 🐛

- Pietro Monticone 🐛

This project follows the all-contributors specification. Contributions of any kind welcome!
