Perceptual similarity embeddings for non-matching reference audio quality assessment and speech enhancement


NOMAD: Non-Matching Audio Distance

NOMAD is a perceptual similarity metric for non-matching reference speech quality assessment. NOMAD embeddings can be used to:

- Measure quality with any clean reference, including both paired and unpaired speech

- Serve as a loss function to improve speech enhancement models


Installation

NOMAD is hosted on PyPI. It can be installed in your Python environment with the following command:

```bash
pip install nomad_audio
```

The model works with a 16 kHz sampling rate. If your wav files have a different sampling rate, they are automatically downsampled or upsampled to 16 kHz so that the model can be used correctly.
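Resampling happens automatically, but it can still be useful to know in advance which files are not at 16 kHz. Below is a minimal sketch using only Python's standard-library wave module, which reads uncompressed PCM WAV files only; the helper name find_non_16k_files is hypothetical and not part of nomad_audio.

```python
import wave
from pathlib import Path

TARGET_RATE = 16_000  # sampling rate used by the NOMAD model


def find_non_16k_files(directory):
    """Return {file name: sample rate} for .wav files not sampled at 16 kHz.

    Hypothetical helper, not part of nomad_audio. The standard-library wave
    module only reads uncompressed PCM WAV files.
    """
    mismatched = {}
    for path in sorted(Path(directory).glob("*.wav")):
        with wave.open(str(path), "rb") as wav:
            rate = wav.getframerate()
        if rate != TARGET_RATE:
            mismatched[path.name] = rate
    return mismatched
```

For example, find_non_16k_files("data/test-data") returns an empty dict when every file is already at 16 kHz.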

NOMAD was built with Python 3.9.16.

Using NOMAD similarity score

The .wav files can be passed in two modes:

- In mode=='dir', you need to indicate two directories, one for the reference and one for the degraded .wav files.

- In mode=='csv', you need to indicate two csv files, one for the reference and one for the degraded .wav files.

Reference files can be any clean speech.

Using NOMAD from the command line

To predict perceptual similarity of all .wav files between two directories:

```bash
python -m nomad_audio --mode dir --nmr /path/to/dir/non-matching-references --deg /path/to/dir/degraded
```

To predict perceptual similarity of all .wav files listed in two csv files:

```bash
python -m nomad_audio --mode csv --nmr /path/to/csv/non-matching-references --deg /path/to/csv/degraded
```

Both csv files should include a column named filename containing the absolute path of each wav file.
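If your files are spread across folders, the csv files for csv mode can be generated with the standard library. A minimal sketch: the only requirement taken from this README is a filename column holding absolute paths; the helper name write_file_list is hypothetical.

```python
import csv
from pathlib import Path


def write_file_list(wav_dir, csv_path):
    """Write a csv with a single 'filename' column of absolute .wav paths.

    Hypothetical helper, not part of nomad_audio. Searches wav_dir
    recursively and returns the number of rows written.
    """
    wav_paths = sorted(Path(wav_dir).resolve().rglob("*.wav"))
    with open(csv_path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["filename"])  # column name expected in csv mode
        for path in wav_paths:
            writer.writerow([str(path)])
    return len(wav_paths)
```

Call it once for the reference directory and once for the degraded directory, then pass the two resulting files in csv mode as shown above.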

In both modes, the script creates two csv files in results-csv, named with a date-time prefix:

- DD-MM-YYYY_hh-mm-ss_nomad_avg.csv includes the average NOMAD score of each degraded file with respect to all the references in nmr_path.

- DD-MM-YYYY_hh-mm-ss_nomad_scores.csv includes the pairwise scores between the degraded speech samples in test_path and the references in nmr_path.

You can choose where to save the csv files by setting results_path.
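Because every run writes a new timestamped pair of files, a small helper can pick out the most recent run. A sketch, assuming the DD-MM-YYYY_hh-mm-ss prefix described above uses a 24-hour clock; the default directory results-csv and the helper name latest_results are assumptions for illustration.

```python
from datetime import datetime
from pathlib import Path

STAMP_FORMAT = "%d-%m-%Y_%H-%M-%S"  # DD-MM-YYYY_hh-mm-ss, assuming a 24-hour clock


def latest_results(results_dir="results-csv"):
    """Return (avg_csv, scores_csv) paths for the most recent NOMAD run.

    Hypothetical helper, not part of nomad_audio. Timestamps are parsed
    rather than sorted as strings, because DD-MM-YYYY strings do not sort
    chronologically.
    """
    runs = {}
    for path in Path(results_dir).glob("*_nomad_avg.csv"):
        stamp = path.name[: -len("_nomad_avg.csv")]
        try:
            runs[datetime.strptime(stamp, STAMP_FORMAT)] = stamp
        except ValueError:
            continue  # skip files that do not follow the naming scheme
    if not runs:
        raise FileNotFoundError(f"no *_nomad_avg.csv files in {results_dir}")
    stamp = runs[max(runs)]
    base = Path(results_dir)
    return base / f"{stamp}_nomad_avg.csv", base / f"{stamp}_nomad_scores.csv"
```

Parsing the prefix matters here: compared as plain strings, 31-12-2023 would wrongly sort after 01-01-2024.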

You can run this example using some .wav files that are provided in the repo:

```bash
python -m nomad_audio --mode dir --nmr_path data/nmr-data --test_path data/test-data
```

The resulting csv file DD-MM-YYYY_hh-mm-ss_nomad_avg.csv shows the mean computed using the 4 non-matching reference files:

| Test File | NOMAD |
|---|---|
| 445-123860-0012_NOISE_15 | 1.587 |
| 6563-285357-0042_OPUS_64k | 0.294 |

The other csv file DD-MM-YYYY_hh-mm-ss_nomad_scores.csv includes the pairwise scores between the degraded and the non-matching reference files:

| Test File | MJ60_10 | FL67_01 | FI53_04 | MJ57_01 |
|---|---|---|---|---|
| 445-123860-0012_NOISE_15 | 1.627 | 1.534 | 1.629 | 1.561 |
| 6563-285357-0042_OPUS_64k | 0.23 | 0.414 | 0.186 | 0.346 |
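The two files are consistent: each value in the average file is the mean of the corresponding row of pairwise scores over the 4 non-matching references. A quick check with the numbers from the example (a difference in the last digit for the noisy file is presumably due to the displayed pairwise scores being rounded):

```python
from statistics import mean

# Pairwise NOMAD scores copied from the example nomad_scores.csv
# (references MJ60_10, FL67_01, FI53_04, MJ57_01)
pairwise = {
    "445-123860-0012_NOISE_15": [1.627, 1.534, 1.629, 1.561],
    "6563-285357-0042_OPUS_64k": [0.23, 0.414, 0.186, 0.346],
}

# Averaging over the non-matching references gives the nomad_avg.csv values
averages = {name: mean(scores) for name, scores in pairwise.items()}
for name, avg in averages.items():
    print(f"{name}: {avg:.3f}")
```

Since NOMAD is a distance, the lower score of the OPUS 64k file indicates that it is closer to the clean references than the noisy file.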

Using NOMAD inside Python

You can import NOMAD as a module in Python. Here is an example:

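```python
from nomad_audio import nomad

nmr_path = 'data/nmr-data'    # directory of clean non-matching references
test_path = 'data/test-data'  # directory of degraded files to score

# Returns the average scores and the pairwise scores
nomad_avg_scores, nomad_scores = nomad.predict('dir', nmr_path, test_path)
```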

Using NOMAD loss function

NOMAD has been evaluated as a loss function to improve speech enhancement models.

NOMAD loss can be used as a PyTorch loss function as follows:

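```python
from torch.nn.functional import mse_loss
from nomad_audio import nomad

# Inside your training loop: estimate is the enhanced output, clean is the
# clean target, and weight scales the NOMAD term (see NOMAD loss weighting)
loss = mse_loss(estimate, clean) + weight * nomad.forward(estimate, clean)
```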

A full example of using NOMAD loss for speech enhancement with a Wave-U-Net architecture is provided in src/nomad_audio/nomad_loss_test.py.

In this example, using NOMAD as an auxiliary loss improves quality as measured by PESQ:

- MSE -> PESQ = 2.39

- MSE + NOMAD loss -> PESQ = 2.60

Steps to reproduce this experiment:

- Download the Valentini speech enhancement dataset here

- In src/nomad_audio/se_config.yaml, change the following parameters:
  - noisy_train_dir: path to noisy_trainset_28spk_wav
  - clean_train_dir: path to clean_trainset_28spk_wav
  - noisy_valid_dir: path to noisy_validset_28spk_wav
  - clean_valid_dir: path to clean_validset_28spk_wav
  - noisy_test_dir: path to noisy_testset_wav
  - clean_test_dir: path to clean_testset_wav
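Before training, it can help to confirm that each parameter points at the intended Valentini folder. A minimal sketch: the parameter-to-folder mapping is copied from the list above, while the helper check_se_paths and passing the parsed config as a plain dict are assumptions (loading the YAML file itself is left out).

```python
from pathlib import Path

# Expected Valentini folder for each se_config.yaml parameter
EXPECTED_FOLDERS = {
    "noisy_train_dir": "noisy_trainset_28spk_wav",
    "clean_train_dir": "clean_trainset_28spk_wav",
    "noisy_valid_dir": "noisy_validset_28spk_wav",
    "clean_valid_dir": "clean_validset_28spk_wav",
    "noisy_test_dir": "noisy_testset_wav",
    "clean_test_dir": "clean_testset_wav",
}


def check_se_paths(config):
    """Return a list of problems found in a dict of se_config.yaml values.

    Hypothetical helper, not part of nomad_audio. An empty list means every
    parameter is set, ends in the expected folder name, and exists.
    """
    problems = []
    for key, folder in EXPECTED_FOLDERS.items():
        value = config.get(key)
        if value is None:
            problems.append(f"{key}: missing")
        elif Path(value).name != folder:
            problems.append(f"{key}: expected a path ending in {folder}, got {value}")
        elif not Path(value).is_dir():
            problems.append(f"{key}: directory {value} does not exist")
    return problems
```

Checking the folder name catches the most common mistake with this dataset: swapping a noisy and a clean directory.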

Notice that the Valentini dataset does not explicitly provide a validation partition. We created one by using speech samples from speakers p286 and p287 from the training set.
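The validation partition described above can be reproduced by filtering the training file names by speaker ID. A sketch, assuming Valentini's speaker-prefixed file naming (e.g. p286_001.wav); split_by_speaker is a hypothetical helper, and copying the selected files into the *_validset_28spk_wav folders is left to you.

```python
from pathlib import Path

VALID_SPEAKERS = {"p286", "p287"}  # speakers held out for validation


def split_by_speaker(filenames, valid_speakers=VALID_SPEAKERS):
    """Split Valentini training file names into (train, valid) lists.

    Hypothetical helper. Assumes names of the form
    <speaker>_<utterance>.wav, e.g. p286_001.wav.
    """
    train, valid = [], []
    for name in filenames:
        speaker = Path(name).name.split("_", 1)[0]
        (valid if speaker in valid_speakers else train).append(name)
    return train, valid
```

Apply the same split to both the noisy and the clean training folders so that noisy/clean pairs stay aligned.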

See the paper for more details on speech enhancement results obtained with the DEMUCS model and evaluated with subjective listening tests.

NOMAD loss weighting

We recommend tuning the weight of the NOMAD loss. The paper results with the DEMUCS model were obtained with the weight set to 0.1.

The U-Net model provided in this repo uses a weight equal to 0.001.

Training

Package dependencies

After cloning the repo, you can either pip install nomad_audio as above or install the required packages from requirements.txt. Installing the pip package also gives you the nomad_audio module, which is only needed for using NOMAD, not for training it.

Dataset generation

NOMAD is trained on degraded samples from the Librispeech dataset.

Download the dataset to train the model.

In addition, we provide instructions to generate the dataset above. Since the process can be time-consuming, we recommend downloading the dataset from the link instead.

Training the model

The following steps are required to train the model:

- Download wav2vec from this link and save it into pt-models. If you already ran pip install nomad_audio in your working directory, you can skip this step.

- In src/config/train_triplet.yaml, set root to the path of the degraded Librispeech dataset.

- From the working directory, run:

```bash
python main.py --config_file train_triplet.yaml
```

This creates a directory out-models/train-triplet/dd-mm-yyyy_hh-mm-ss in your working directory, containing the best model and the configuration parameters used to train it.
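The configuration name train_triplet.yaml reflects that NOMAD embeddings are learned with a triplet objective. For intuition, here is a generic triplet margin loss on Euclidean distances in plain Python; the margin value and toy vectors are illustrative assumptions, and the exact formulation used to train NOMAD (including how triplets are built) is described in the paper.

```python
import math


def triplet_margin_loss(anchor, positive, negative, margin=1.0):
    """Generic triplet margin loss: max(0, d(a, p) - d(a, n) + margin).

    Illustrative only: d is the Euclidean distance and margin=1.0 is an
    arbitrary default, not necessarily the value used to train NOMAD.
    """
    d_pos = math.dist(anchor, positive)  # pushed to be small
    d_neg = math.dist(anchor, negative)  # pushed to be large
    return max(0.0, d_pos - d_neg + margin)


# Toy 2-D embeddings: the positive is closer to the anchor than the negative
anchor, positive, negative = (0.0, 0.0), (0.0, 1.0), (3.0, 4.0)
print(triplet_margin_loss(anchor, positive, negative))
```

The loss is zero once the negative is at least margin farther from the anchor than the positive, so only triplets that violate this ordering contribute gradients.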

Performance

We evaluated NOMAD for ranking degradation intensity, speech quality assessment, and as a loss function for speech enhancement. See the paper for more details. As clean non-matching references, we extracted 899 samples from the TSP speech database.

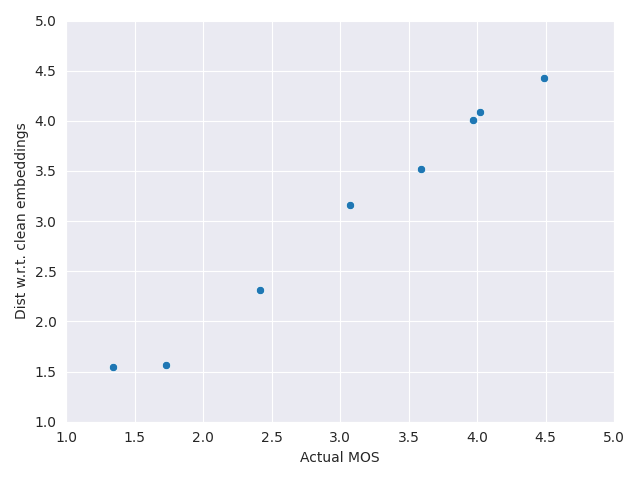

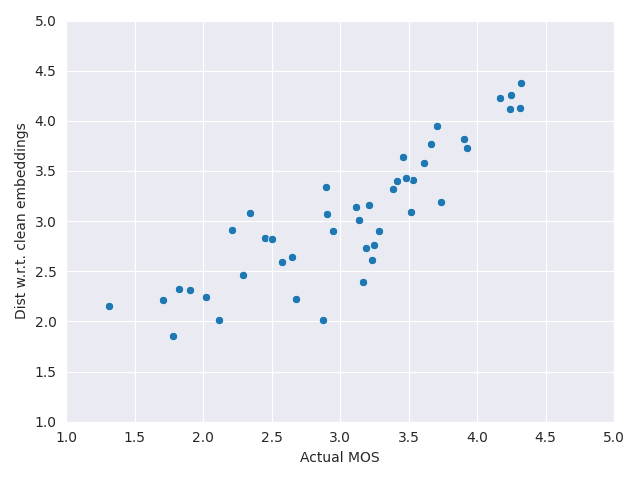

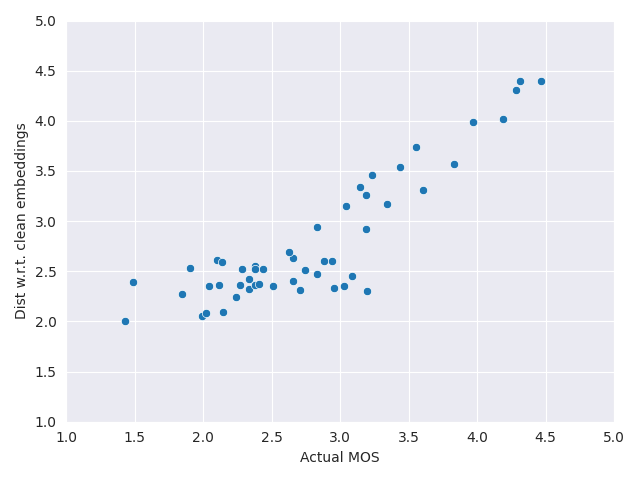

Here we show the scatter plot between NOMAD scores (computed with unpaired speech) and MOS quality labels. For each database we mapped NOMAD scores to MOS using a third order polynomial. Notice that performances are reported without mapping in the paper.

Genspeech

P23 EXP1

P23 EXP3
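The third-order polynomial mapping from NOMAD scores to MOS can be sketched with numpy.polyfit. The scores and MOS values below are synthetic placeholders, not data from the paper, and fit_cubic_mapping is a hypothetical helper.

```python
import numpy as np


def fit_cubic_mapping(nomad_scores, mos):
    """Fit MOS ~ a*x**3 + b*x**2 + c*x + d and return a callable mapping."""
    coeffs = np.polyfit(nomad_scores, mos, deg=3)  # highest power first
    return np.poly1d(coeffs)


# Synthetic example in which a lower NOMAD distance goes with a higher MOS
rng = np.random.default_rng(0)
nomad_scores = rng.uniform(0.0, 2.0, size=50)
mos = 4.5 - 1.5 * nomad_scores + 0.2 * nomad_scores**2 + rng.normal(0.0, 0.1, size=50)

to_mos = fit_cubic_mapping(nomad_scores, mos)
print(np.round(to_mos([0.3, 1.6]), 2))
```

A separate mapping is fitted per database, so the polynomial only rescales scores for plotting; the correlations in the paper are computed on the raw scores.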

Paper and license

If you use NOMAD or the training corpus for your research, please cite this pre-print.

Ragano, A., Skoglund, J. and Hines, A., 2023. NOMAD: Unsupervised Learning of Perceptual Embeddings for Speech Enhancement and Non-matching Reference Audio Quality Assessment. arXiv preprint arXiv:2309.16284.

The NOMAD code is licensed under the MIT license.

Copyright © 2023 Alessandro Ragano


Hashes for nomad_audio-0.0.9-py3-none-any.whl

| Algorithm | Hash digest |
|---|---|
| SHA256 | f1471acd58632708c53af52def044674b6678b3cebd68b30b1840588867e0f20 |
| MD5 | d7595411f1f316d97b943bb0594c75ee |
| BLAKE2b-256 | 79c9842e1295fda9b9c8904f671ac4a22164ce20bced8d8553c3fde2998451b3 |