ETL processes for medical and scientific papers

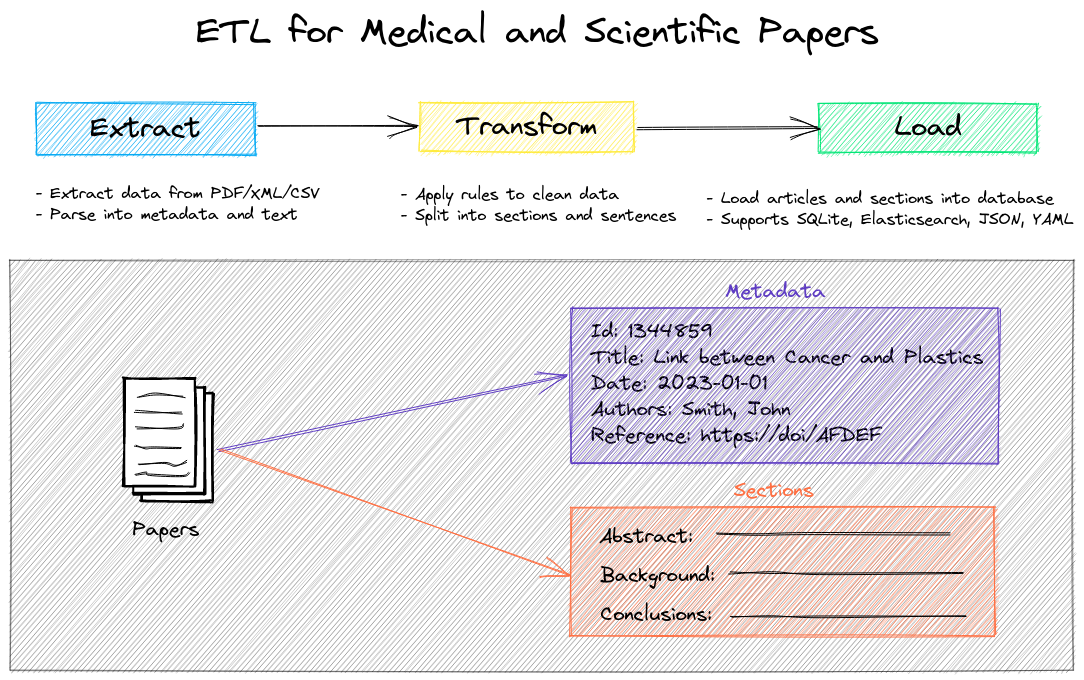

paperetl is an ETL library for processing medical and scientific papers.

paperetl supports the following sources:

- Full PDF articles

- PubMed XML

- ArXiv XML

- Text Encoding Initiative (TEI) XML

- CSV with article metadata

paperetl supports the following datastores for parsed articles:

- SQLite

- JSON files

- YAML files

Additional optional datastores are available:

- Elasticsearch

Installation

The easiest way to install is via pip and PyPI:

pip install paperetl

Python 3.10+ is supported. Using a Python virtual environment is recommended.

paperetl can also be installed directly from GitHub to access the latest, unreleased features.

pip install git+https://github.com/neuml/paperetl

Additional dependencies

PDF parsing requires a running GROBID instance, which is assumed to be running locally on the ETL server. GROBID is only necessary for PDF files.

Note: In some cases, the GROBID engine pool can be exhausted, resulting in a 503 error. This can be fixed by increasing concurrency and/or poolMaxWait in the GROBID configuration file.
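Besides tuning the GROBID configuration, a client can be made more tolerant of occasional 503 responses by retrying with a short backoff. The following is a minimal sketch, not part of paperetl: `call_with_retry` and `grobid_is_alive` are hypothetical helper names, and the health check assumes GROBID is listening on its default local port (8070) and exposes the `/api/isalive` endpoint.

```python
import time
import urllib.error
import urllib.request


def call_with_retry(request, attempts=5, delay=1.0, sleep=time.sleep):
    """Call request() and retry with exponential backoff on HTTP 503.

    Hypothetical helper, not part of paperetl or GROBID.
    """
    for attempt in range(attempts):
        try:
            return request()
        except urllib.error.HTTPError as error:
            # Only retry "service unavailable"; re-raise anything else,
            # and give up after the final attempt.
            if error.code != 503 or attempt == attempts - 1:
                raise
            sleep(delay * 2 ** attempt)


def grobid_is_alive(base_url="http://localhost:8070"):
    """Assumed health check: GET <base_url>/api/isalive returns 'true'."""
    url = f"{base_url}/api/isalive"
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8").strip() == "true"


# Example usage (requires a running GROBID instance):
# print("GROBID alive:", call_with_retry(grobid_is_alive))
```

Retrying only helps with short bursts of load. If 503 errors are frequent, the configuration change described in the note above is the more appropriate fix.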

Docker

A Dockerfile with commands to install paperetl, all dependencies and scripts is available in this repository.

wget https://raw.githubusercontent.com/neuml/paperetl/master/docker/Dockerfile

docker build -t paperetl -f Dockerfile .

docker run --name paperetl --rm -it paperetl

This will bring up a paperetl command shell. Standard Docker commands can be used to copy files over, or commands can be run directly in the shell to retrieve input content.

Examples

Notebooks

| Notebook | Description |
|---|---|
| Introducing paperetl | Overview of the functionality provided by paperetl |

Load Articles into SQLite

The following example shows how to use paperetl to load a set of medical/scientific articles into a SQLite database.

- Download the desired medical/scientific articles in a local directory. For this example, it is assumed the articles are in a directory named paperetl/data.

- Build the database:

python -m paperetl.file paperetl/data paperetl/models

Once complete, there will be an articles.sqlite file in paperetl/models.
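To sanity-check the result, the database can be opened with Python's built-in `sqlite3` module. The sketch below makes no assumption about paperetl's schema: it lists whatever tables exist in the file and counts their rows. `table_counts` is a hypothetical helper name, and the path in the usage comment follows the example above.

```python
import sqlite3


def table_counts(path):
    """Return {table name: row count} for every table in a SQLite file."""
    connection = sqlite3.connect(path)
    try:
        names = [
            row[0]
            for row in connection.execute(
                "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
            )
        ]
        # Table names come from the database itself; quote them as identifiers.
        return {
            name: connection.execute(
                'SELECT COUNT(*) FROM "{}"'.format(name.replace('"', '""'))
            ).fetchone()[0]
            for name in names
        }
    finally:
        connection.close()


# Example usage (path taken from the steps above):
# print(table_counts("paperetl/models/articles.sqlite"))
```

Note that `sqlite3.connect` creates an empty database if the file does not exist, so an empty result may simply mean the path is wrong.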

Load into Elasticsearch

Elasticsearch is a supported datastore. It is an optional feature, installed via the elasticsearch extra.

pip install paperetl[elasticsearch]

This example assumes Elasticsearch is running locally. Change the URL to point to a remote server as appropriate.

python -m paperetl.file paperetl/data http://localhost:9200

Once complete, there will be an articles index in Elasticsearch with the metadata and full text stored.
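One quick way to confirm the load is to ask Elasticsearch how many documents the articles index holds, using its standard `_count` REST endpoint. This is a minimal sketch that assumes an unsecured local server at the URL used above (no authentication or TLS); `count_documents` and its `fetch` parameter are hypothetical names introduced for illustration.

```python
import json
import urllib.request


def count_documents(base_url="http://localhost:9200", index="articles",
                    fetch=urllib.request.urlopen):
    """Return the document count for an index via Elasticsearch's _count API."""
    url = f"{base_url.rstrip('/')}/{index}/_count"
    with fetch(url) as response:
        # The _count response is a JSON object with a top-level "count" field.
        return json.loads(response.read())["count"]


# Example usage (requires a running Elasticsearch server):
# print(count_documents())
```

The `fetch` parameter only exists so the function can be exercised without a live server. In normal use the default is sufficient.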

Convert articles to JSON/YAML

paperetl can also be used to convert articles into JSON or YAML files. This is useful if the data is to be fed into another system or for manual inspection/debugging of a single file.

JSON:

python -m paperetl.file paperetl/data json://paperetl/json

YAML:

python -m paperetl.file paperetl/data yaml://paperetl/yaml

Converted files will be stored in paperetl/(json|yaml).
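For manual inspection, the converted JSON files can be read back with the standard library. The sketch below assumes the output files carry a `.json` extension and makes no assumption about the fields inside each file. `load_json_files` is a hypothetical helper name.

```python
import json
from pathlib import Path


def load_json_files(directory):
    """Yield (file name, parsed content) for each .json file in a directory."""
    for path in sorted(Path(directory).glob("*.json")):
        with path.open(encoding="utf-8") as handle:
            yield path.name, json.load(handle)


# Example usage (directory taken from the JSON command above):
# for name, document in load_json_files("paperetl/json"):
#     keys = sorted(document) if isinstance(document, dict) else type(document).__name__
#     print(name, keys)
```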

File details

Details for the file paperetl-2.5.2.tar.gz.

File metadata

- Download URL: paperetl-2.5.2.tar.gz

- Upload date:

- Size: 24.3 kB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/6.1.0 CPython/3.10.19

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | e03fa265564ff7533bde018ebdba2c165fa1247eb9fab0f683d10030498bccec |
| MD5 | 3fba7a6f4c40162db43f6664905b584c |
| BLAKE2b-256 | 5570b2850b9edcba403185c29c1f9709edd63283d5357c53a08d23b9c30e8b7e |

File details

Details for the file paperetl-2.5.2-py3-none-any.whl.

File metadata

- Download URL: paperetl-2.5.2-py3-none-any.whl

- Upload date:

- Size: 26.9 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/6.1.0 CPython/3.10.19

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 199a44be7e4f82fc08e0720110f3abc3ba17b5d8e1d7577892dd432567fc1819 |
| MD5 | 804bb78a1fdd20bd10971eb7147eb7b3 |
| BLAKE2b-256 | 2be8db69bf35fac87b7d051770208294dd32a680070c3b129892adf3727d6744 |