Turn AML cluster into Ray


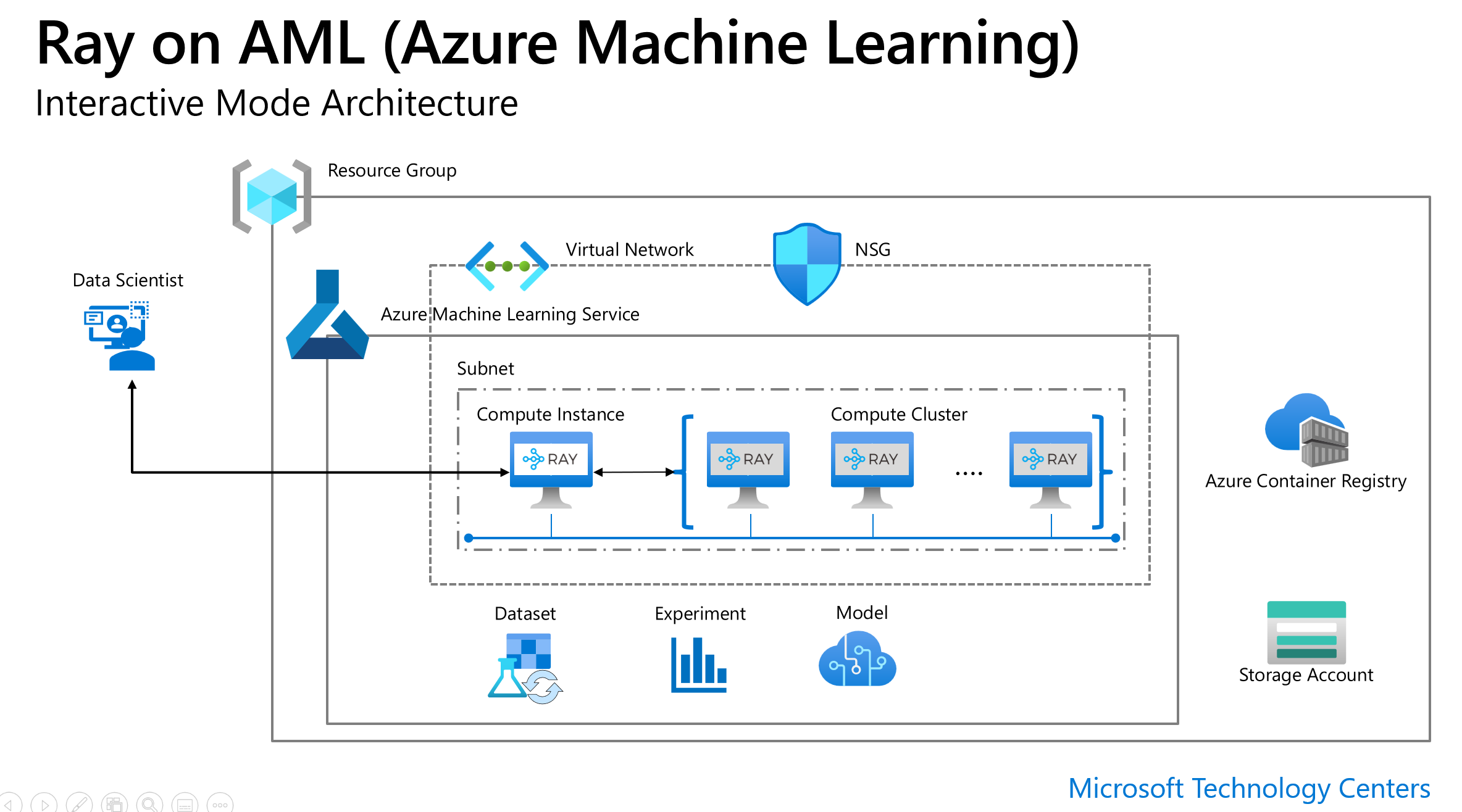

Ray on Azure ML

- ray-on-aml version 0.1.8 or later supports Spark from raydp with Delta Lake, Synapse JDBC and the latest pyspark 3.2.1. Check out the Spark examples.

- GPU & custom base image for interactive use: if you have a GPU compute cluster, either use `ray_on_aml.getRay(gpu_support=True)`, which internally uses `mcr.microsoft.com/azureml/openmpi4.1.0-cuda11.1-cudnn8-ubuntu18.04:20211221.v1` as the base image, or supply your own base image with `ray_on_aml.getRay(base_image="YOUR_OWN_BASE_IMAGE")`.

- VSCode is now supported: you can run interactive notebooks from VSCode.

This package simplifies the setup of core Ray and Ray's components, such as Dask on Ray, Ray Tune, Ray RLlib, Ray Serve and Spark, in Azure ML. It also comes with support for high-performance data access to Azure data sources such as Azure Storage, Delta Lake and Synapse SQL. It supports both interactive use and Azure ML jobs.

Architecture

Setup & Quick Start Guide

1. Prepare Azure ML environment

For interactive mode, set up a compute cluster and a compute instance in the same virtual network (VNET).

Checklist

[ ] Azure Machine Learning Workspace

[ ] Virtual network/Subnet

[ ] Create Compute Instance in the Virtual Network

[ ] Create Compute Cluster in the same Virtual Network

2. Select kernel

Use a Python 3.7+ conda environment as the kernel of a (Jupyter) notebook in Azure Machine Learning Studio.

3. Install library

```bash
pip install --upgrade ray-on-aml
```

Installing this library also installs the following packages: `ray[tune]==1.12.0`, `ray[serve]==1.12.0`, `pyarrow>=5.0.0`, `dask[complete]==2021.12.0`, `adlfs==2021.10.0`, `fsspec==2021.10.1`, `xgboost_ray==0.1.8`, `fastparquet==0.7.2`.
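To see whether your selected kernel already matches these pins (this matters when the compute instance acts as the Ray head and must agree with the cluster), a small standard-library check like the one below can help. It is a hypothetical helper, not part of ray-on-aml; it only handles exact `==` pins, skips `>=` constraints such as pyarrow, and needs Python 3.8+ for `importlib.metadata`.

```python
from importlib.metadata import PackageNotFoundError, version

# Exact pins copied from the dependency list above; ">=" constraints are skipped.
PINS = [
    "ray[tune]==1.12.0",
    "ray[serve]==1.12.0",
    "dask[complete]==2021.12.0",
    "adlfs==2021.10.0",
    "fsspec==2021.10.1",
    "xgboost_ray==0.1.8",
    "fastparquet==0.7.2",
]


def parse_pin(pin):
    """Split 'name[extras]==X.Y.Z' into ('name', 'X.Y.Z'); return None for non-exact pins."""
    if "==" not in pin:
        return None
    name, wanted = pin.split("==", 1)
    name = name.split("[", 1)[0].strip()  # drop extras such as [tune]
    return name, wanted.strip()


def check_pins(pins):
    """Return {distribution: (wanted, installed_or_None)} for every pin that does not match."""
    mismatches = {}
    for pin in pins:
        parsed = parse_pin(pin)
        if parsed is None:
            continue
        name, wanted = parsed
        try:
            installed = version(name)
        except PackageNotFoundError:
            installed = None  # package is not installed in this environment
        if installed != wanted:
            mismatches[name] = (wanted, installed)
    return mismatches


if __name__ == "__main__":
    for name, (wanted, installed) in check_pins(PINS).items():
        print(f"{name}: wanted {wanted}, installed {installed or 'not installed'}")
```

An empty report means every exact pin matches what is installed in the current kernel.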

4. Run ray-on-aml

Run in interactive mode in a Compute Instance notebook

```python
from azureml.core import Workspace
from ray_on_aml.core import Ray_On_AML

ws = Workspace.from_config()

ray_on_aml = Ray_On_AML(ws=ws, compute_cluster="Name_of_Compute_Cluster", maxnode=3)

ray = ray_on_aml.getRay()

# May take 7 minutes or longer. Check the AML run under the ray_on_aml experiment for cluster status.
```

Note that, by default, the library sets up your current compute instance as the Ray head and all nodes in the remote compute cluster as workers.

If you want to use one of the nodes in the remote AML compute cluster as the head node and the remaining ones as worker nodes, simply pass `ci_is_head=False` to `ray_on_aml.getRay()`.

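To install additional libraries, use the `additional_pip_packages` and `additional_conda_packages` parameters.

The Ray cluster will request 5 nodes from AML if `maxnode` is not specified.

```python
# maxnode is omitted here, so 5 nodes are requested from AML.
# "scikit-learn" is the installable PyPI name; the "sklearn" alias package is deprecated.
ray_on_aml = Ray_On_AML(ws=ws, compute_cluster="Name_of_Compute_Cluster",
                        additional_pip_packages=['torch==1.10.0', 'torchvision', 'scikit-learn'])
```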

For use in an Azure ML job, include ray-on-aml as a pip dependency and, inside your script, get Ray as follows. Remember to use `RunConfiguration(communicator='OpenMpi')` in your AML job's `ScriptRunConfig` so that ray-on-aml can work correctly.

```python
from ray_on_aml.core import Ray_On_AML

ray_on_aml = Ray_On_AML()
ray = ray_on_aml.getRay()

if ray:  # in the head node
    # Logic to use Ray for distributed ML training, tuning or distributed data transformation with Dask
    pass
else:
    print("in worker node")
```

5. Ray Dashboard

The easiest way to view the Ray dashboard is through the VSCode connection to Azure ML.

Open VSCode connected to your compute instance, open a terminal, type http://127.0.0.1:8265/ and then Ctrl+click it to open the Ray Dashboard.

This trick makes VSCode forward the port to your local machine through its extension on the CI, so you don't have to set up SSH port forwarding yourself.
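If the link does not open, you can first confirm from the compute instance that something is serving on the dashboard port. The helper below is a hypothetical standard-library sketch, not part of Ray or ray-on-aml; it assumes the default address 127.0.0.1:8265 given above and deliberately bypasses any configured HTTP proxy, since the address is local.

```python
import urllib.error
import urllib.request

# Default Ray dashboard address used in this guide.
DASHBOARD_URL = "http://127.0.0.1:8265/"


def dashboard_reachable(url=DASHBOARD_URL, timeout=3.0):
    """Return True if any HTTP server answers at `url`, False if the connection fails."""
    # An empty ProxyHandler disables proxies picked up from environment variables.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        return True  # the server replied with an error status, but something is listening
    except OSError:
        return False  # connection refused, timed out, or the host could not be resolved


if __name__ == "__main__":
    print("dashboard reachable:", dashboard_reachable())
```

A `False` result usually means the Ray head is not running yet on this machine, for example while `getRay()` is still provisioning the cluster.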

6. Shutdown ray cluster

To shut down the cluster, run the following:

```python
ray_on_aml.shutdown()
```

7. Customize Ray version and the library's base configurations

Interactive cluster: there are two arguments in the `Ray_On_AML()` class initialization that specify the library's base configuration, with the following default values:

```python
Ray_On_AML(ws=ws, compute_cluster="Name_of_Compute_Cluster",
           base_conda_dep=['adlfs==2021.10.0', 'pip==21.3.1'],
           base_pip_dep=['ray[tune]==1.12.0', 'ray[rllib]==1.12.0', 'ray[serve]==1.12.0',
                         'xgboost_ray==0.1.6', 'dask==2021.12.0', 'pyarrow >= 5.0.0',
                         'fsspec==2021.10.1', 'fastparquet==0.7.2', 'tabulate==0.8.9'])
```
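To override only the Ray pins while keeping the other defaults, you could rewrite the default list before passing it as `base_pip_dep`. This is a hypothetical helper, not part of ray-on-aml; the default list is copied from the signature above, and any target version you pass is your own choice, not one this guide has verified.

```python
import re

# Default base_pip_dep copied from the Ray_On_AML() defaults shown above.
DEFAULT_BASE_PIP_DEP = [
    "ray[tune]==1.12.0", "ray[rllib]==1.12.0", "ray[serve]==1.12.0",
    "xgboost_ray==0.1.6", "dask==2021.12.0", "pyarrow >= 5.0.0",
    "fsspec==2021.10.1", "fastparquet==0.7.2", "tabulate==0.8.9",
]

# Matches "ray==X" or "ray[extras]==X", but not packages like "xgboost_ray".
_RAY_PIN = re.compile(r"^ray(\[[^\]]*\])?\s*==")


def with_ray_version(deps, ray_version):
    """Return a copy of `deps` with every ray pin rewritten to `ray_version`."""
    out = []
    for dep in deps:
        match = _RAY_PIN.match(dep)
        if match:
            extras = match.group(1) or ""  # keep [tune], [rllib], [serve], ...
            out.append(f"ray{extras}=={ray_version}")
        else:
            out.append(dep)
    return out
```

The result would be passed as `Ray_On_AML(ws=ws, compute_cluster="Name_of_Compute_Cluster", base_pip_dep=with_ray_version(DEFAULT_BASE_PIP_DEP, "<your version>"))`. Companion packages such as `xgboost_ray` keep their default pins, so check that they are compatible with the Ray version you choose.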

You can change the versions of Ray and other libraries. Do this with extreme care, as it may cause conflicts that break intended features of the package. If you change the Ray version here and the compute instance is the head node, you need to manually reinstall Ray on the compute instance to match the cluster's custom version.

AML Job cluster: if you need to customize your Ray version, add the Ray dependency after ray-on-aml. This is because ray-on-aml comes with a recent Ray version that needs to be overridden. For example, if you need Ray 0.8.7, add the following to your job's env.yml file:

```yaml
dependencies:
  - pip:
    - ray-on-aml==0.1.8
    - ray[rllib,tune,serve]==0.8.7
```

Check out the RLlib example with a customized Ray version to learn more.

8. Quick start examples

Check out the quick start examples to learn more.

Contributing

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.opensource.microsoft.com.

When you submit a pull request, a CLA bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., status check, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repos using our CLA.

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.

Trademarks

This project may contain trademarks or logos for projects, products, or services. Authorized use of Microsoft trademarks or logos is subject to and must follow Microsoft's Trademark & Brand Guidelines. Use of Microsoft trademarks or logos in modified versions of this project must not cause confusion or imply Microsoft sponsorship. Any use of third-party trademarks or logos are subject to those third-party's policies.

Security

Microsoft takes the security of our software products and services seriously, which includes all source code repositories managed through our GitHub organizations, which include Microsoft, Azure, DotNet, AspNet, Xamarin, and our GitHub organizations.

If you believe you have found a security vulnerability in any Microsoft-owned repository that meets Microsoft's definition of a security vulnerability, please report it to us as described below.

Reporting Security Issues

Please do not report security vulnerabilities through public GitHub issues.

Instead, please report them to the Microsoft Security Response Center (MSRC) at https://msrc.microsoft.com/create-report.

If you prefer to submit without logging in, send email to secure@microsoft.com. If possible, encrypt your message with our PGP key; please download it from the Microsoft Security Response Center PGP Key page.

You should receive a response within 24 hours. If for some reason you do not, please follow up via email to ensure we received your original message. Additional information can be found at microsoft.com/msrc.

Please include the requested information listed below (as much as you can provide) to help us better understand the nature and scope of the possible issue:

- Type of issue (e.g. buffer overflow, SQL injection, cross-site scripting, etc.)

- Full paths of source file(s) related to the manifestation of the issue

- The location of the affected source code (tag/branch/commit or direct URL)

- Any special configuration required to reproduce the issue

- Step-by-step instructions to reproduce the issue

- Proof-of-concept or exploit code (if possible)

- Impact of the issue, including how an attacker might exploit the issue

This information will help us triage your report more quickly.

If you are reporting for a bug bounty, more complete reports can contribute to a higher bounty award. Please visit our Microsoft Bug Bounty Program page for more details about our active programs.

Preferred Languages

We prefer all communications to be in English.

Policy

Microsoft follows the principle of Coordinated Vulnerability Disclosure.


Download files


File details

Details for the file ray-on-aml-0.2.4.tar.gz.

File metadata

- Download URL: ray-on-aml-0.2.4.tar.gz

- Upload date:

- Size: 17.8 kB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.1 CPython/3.11.1

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 288ab970692b845de6574f88ce3389a8a91006e704a765bb7c9cbb365fdca7e9 |
| MD5 | 9cd3cc040b585ea6014d9b7063fc407c |
| BLAKE2b-256 | 8268db3857bc19fdbf6de828d9059707280c4db003c6c15d346cb3304c144b68 |

File details

Details for the file ray_on_aml-0.2.4-py3-none-any.whl.

File metadata

- Download URL: ray_on_aml-0.2.4-py3-none-any.whl

- Upload date:

- Size: 15.1 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.1 CPython/3.11.1

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 146b0c15957b1b22d2e1f864bf26d7a822adb6f524e752d4f87b338fdcdfc659 |
| MD5 | 7380cb1c02a7d58301e3dec19d0e96ec |
| BLAKE2b-256 | 9b459e4a6241d4ce474be9bded5f69fc22a52050a87c8f1632c46b807b57f80c |
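To check a manually downloaded 0.2.4 file against the SHA256 digests listed for it, a minimal standard-library sketch could look like the following. The function names are hypothetical; the digests are copied from the file hashes tables for the two 0.2.4 files.

```python
import hashlib

# SHA256 digests listed for the two ray-on-aml 0.2.4 files.
EXPECTED_SHA256 = {
    "ray-on-aml-0.2.4.tar.gz": "288ab970692b845de6574f88ce3389a8a91006e704a765bb7c9cbb365fdca7e9",
    "ray_on_aml-0.2.4-py3-none-any.whl": "146b0c15957b1b22d2e1f864bf26d7a822adb6f524e752d4f87b338fdcdfc659",
}


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in 1 MiB chunks so large archives never have to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify(path, expected_hex):
    """Return True if the file at `path` has the expected SHA256 digest (case-insensitive)."""
    return sha256_of(path) == expected_hex.lower()
```

For example, `verify("ray-on-aml-0.2.4.tar.gz", EXPECTED_SHA256["ray-on-aml-0.2.4.tar.gz"])` should return `True` for an intact download. pip's hash-checking mode (`--hash=sha256:...` entries in a requirements file) is an alternative that performs the same check at install time.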