a lib for sourcing actionpacked entities capable of getting the job done


recruitment


Overview

This code provides abstractions (most of them housed here at the time of writing) that support unified and robust interaction with cloud services.

The Broker concept allows methods provided by cloud integration SDKs (e.g. boto) to be recast into an interface of your choosing. The Commlink concept houses the method bindings defined by Broker.interface, while the Consumer, Publisher, and Agent entities implement the bound interface with actionpacked resilience 💥
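To make the Broker and Commlink roles concrete, here is a minimal sketch of the recasting idea in plain Python. It is not recruitment's implementation: FakeStorageClient stands in for an SDK client such as boto's S3 client (whose real get_object returns a response dict, not raw bytes), and INTERFACE and SketchCommlink are hypothetical names.

```python
from typing import Any, Callable, Dict


class FakeStorageClient:
    """Hypothetical stand-in for an SDK client (e.g. a boto S3 client)."""

    def __init__(self) -> None:
        self._objects: Dict[str, bytes] = {}

    def put_object(self, Bucket: str, Key: str, Body: bytes) -> None:
        self._objects[f"{Bucket}/{Key}"] = Body

    def get_object(self, Bucket: str, Key: str) -> bytes:
        return self._objects[f"{Bucket}/{Key}"]


# Hypothetical "Broker interface": verbs of your choosing mapped onto SDK method names.
INTERFACE: Dict[str, str] = {
    "publish": "put_object",
    "consume": "get_object",
}


class SketchCommlink:
    """Binds each interface verb to the matching method on the SDK client."""

    def __init__(self, client: Any, interface: Dict[str, str]) -> None:
        self._bindings: Dict[str, Callable[..., Any]] = {
            verb: getattr(client, sdk_method)
            for verb, sdk_method in interface.items()
        }

    def __getattr__(self, verb: str) -> Callable[..., Any]:
        # Only called for attributes not found normally, i.e. the interface verbs.
        try:
            return self._bindings[verb]
        except KeyError:
            raise AttributeError(verb) from None


link = SketchCommlink(FakeStorageClient(), INTERFACE)
link.publish(Bucket="my-bucket", Key="greeting.txt", Body=b"hello")
print(link.consume(Bucket="my-bucket", Key="greeting.txt"))  # b'hello'
```

The point of the pattern is that callers only see the verbs you picked (publish, consume), so swapping the underlying SDK means changing the mapping rather than the calling code.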

Some Terms

When dealing with AWS data storage services, data is either published or consumed.

This library presents a flexible API for doing just that.

The primary entities are each a type of Job:

- Consumer
- Publisher

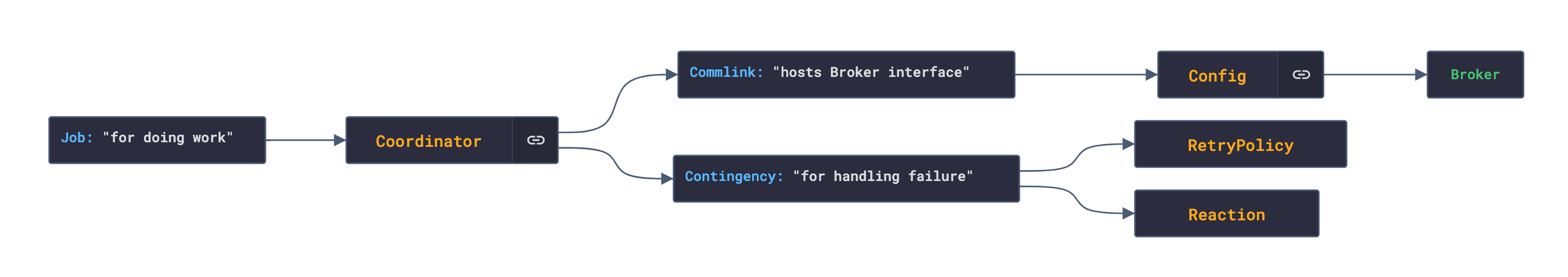

A Job leverages a Coordinator to do work.

A Coordinator is constructed using a Commlink for making external calls and an optional Contingency for responding to failures when making those external calls.

Each Commlink hosts the communication interface provided by a Config.

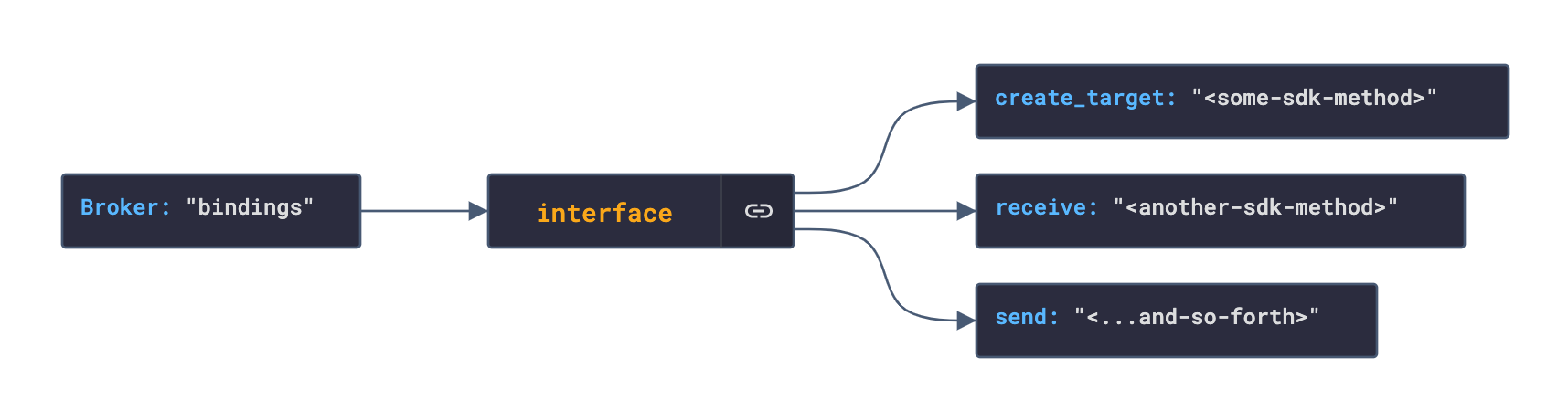

Instantiating a Config with a different service name binds a different interface.

The following diagram shows the relationship between the types:

This one zooms in on the Broker:

There is also an Agent type (not pictured) that can both consume and publish; it requires both of the aforementioned Job types to be injected upon construction.
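A minimal sketch of that composition follows, assuming (hypothetically) that an Agent simply delegates to the injected jobs. StubConsumer, StubPublisher, and SketchAgent are illustrative stand-ins backed by in-memory lists, not recruitment's Consumer, Publisher, or Agent.

```python
from typing import Any, List


class StubConsumer:
    """Hypothetical consumer job that reads from an in-memory queue."""

    def __init__(self, queue: List[Any]) -> None:
        self.queue = queue

    def consume(self) -> Any:
        return self.queue.pop(0)


class StubPublisher:
    """Hypothetical publisher job that appends to an in-memory queue."""

    def __init__(self, queue: List[Any]) -> None:
        self.queue = queue

    def publish(self, message: Any) -> None:
        self.queue.append(message)


class SketchAgent:
    """Consumes and publishes by delegating to the jobs injected at construction."""

    def __init__(self, consumer: StubConsumer, publisher: StubPublisher) -> None:
        self.consumer = consumer
        self.publisher = publisher

    def consume(self) -> Any:
        return self.consumer.consume()

    def publish(self, message: Any) -> None:
        self.publisher.publish(message)


inbox: List[Any] = ["task-1"]
outbox: List[Any] = []
agent = SketchAgent(StubConsumer(inbox), StubPublisher(outbox))
agent.publish(agent.consume().upper())  # move a transformed message from inbox to outbox
print(outbox)  # ['TASK-1']
```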

Work done (say, via .consume or .publish) is encapsulated in an Effort type.

The culmination of that work can be found under the eponymous attribute of an Effort instance.

| Effort attribute | Description |
|---|---|
| .culmination | outcome from retrying |
| .initial_attempt | first attempt |
| .final_attempt | last attempt |
| .attempts | all attempts |
| .retries | attempts minus the initial_attempt |

Attempts are returned as Result types for convenience (see here for more info).
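The relationships in the table can be sketched with a small data structure. SketchEffort and SketchResult are hypothetical stand-ins (the real Effort and Result types may carry more), and treating .culmination as the outcome of the final attempt is our assumption; the sketch only shows that .retries is every attempt after the first.

```python
from dataclasses import dataclass
from typing import Generic, List, Optional, TypeVar

T = TypeVar("T")


@dataclass(frozen=True)
class SketchResult(Generic[T]):
    """Hypothetical Result: holds either a value or the exception that was raised."""

    value: Optional[T] = None
    error: Optional[Exception] = None

    @property
    def successful(self) -> bool:
        return self.error is None


@dataclass
class SketchEffort(Generic[T]):
    """Hypothetical Effort: every attempt is recorded in order."""

    attempts: List[SketchResult[T]]

    @property
    def initial_attempt(self) -> SketchResult[T]:
        return self.attempts[0]

    @property
    def final_attempt(self) -> SketchResult[T]:
        return self.attempts[-1]

    @property
    def retries(self) -> List[SketchResult[T]]:
        # All attempts minus the initial one.
        return self.attempts[1:]

    @property
    def culmination(self) -> SketchResult[T]:
        # Assumption: the outcome of retrying is whatever the final attempt produced.
        return self.final_attempt


effort = SketchEffort(
    attempts=[
        SketchResult(error=TimeoutError("first try timed out")),
        SketchResult(value="file contents"),
    ]
)
print(len(effort.retries), effort.culmination.value)  # 1 file contents
```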

Usage

Say you'd like to pull files from S3; just follow these steps:

- Define a Config

```python
config = Config(
    service_name='s3',  # can also pass Broker.s3
    region_name='somewhere-in-the-world',
    access_key_id='s3curityBadge!',  # placeholder credentials
    secret_access_key='p@ssw0rd!',
    endpoint_url='https://some-computer.com',  # include the URL scheme
)
```

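- Build the Job

```python
consumer = Consumer(
    Coordinator(
        Commlink(config),  # makes the external calls bound by the Config
        Contingency,       # optional; passing the class alone gives default behavior
    )
)
```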

Simple as that.

Give it a try.

Since a Consumer was built above, its .consume method is available.

The same can be done with a Publisher, which provides .publish.

Contingencies

Things can go wrong, and when they do, it may help to try again.

Passing a Contingency to a Coordinator is how you do that.

The class alone can be passed for default behavior, or it can be instantiated with the max_retries and/or reaction params.

The max_retries param is self-explanatory: it governs the maximum number of retries that will be attempted.

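```python
from actionpack.actions import Call
from actionpack.utils import Closure

# Contingency is provided by recruitment (its import is not shown here)
callback = Call(Closure(print, 'did a thing!'))  # an Action that prints when performed

Contingency(max_retries=3, reaction=callback)
```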

The reaction param is a bit more nuanced.

If an Action is passed, it's guaranteed to be performed after the original job is completed.

This feature is great for logging or notifying other processes of what has occurred.
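The retry-then-react behavior described above can be sketched as a plain function. This is an approximation under stated assumptions, not recruitment's Contingency: retry_with_contingency is a hypothetical name, the reaction is modeled as a zero-argument callable run in a finally clause (so it runs whether the job eventually succeeds or exhausts its retries), and the default of max_retries=0 is our own choice.

```python
from typing import Callable, List, Optional, TypeVar

T = TypeVar("T")


def retry_with_contingency(
    job: Callable[[], T],
    max_retries: int = 0,
    reaction: Optional[Callable[[], None]] = None,
) -> T:
    """Run job, retrying up to max_retries more times if it raises.

    Hypothetical sketch: the reaction (if any) runs once after the job is
    finished, whether it ultimately succeeded or ran out of retries.
    """
    if max_retries < 0:
        raise ValueError("max_retries must be non-negative")
    try:
        retries_used = 0
        while True:
            try:
                return job()
            except Exception:
                if retries_used >= max_retries:
                    raise  # out of retries: surface the last error
                retries_used += 1
    finally:
        if reaction is not None:
            reaction()


# Usage: a job that fails twice with a transient error, then succeeds.
calls: List[str] = []


def flaky_download() -> str:
    calls.append("attempt")
    if len(calls) < 3:
        raise ConnectionError("transient failure")
    return "file contents"


result = retry_with_contingency(
    flaky_download, max_retries=3, reaction=lambda: print("did a thing!")
)
print(result, len(calls))  # printed after "did a thing!": file contents 3
```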

Development

Setup

Build scripting is managed via a noxfile.

Execute nox -l to see the available commands (set the USEVENV environment variable to view virtualenv-oriented commands).

To get started, simply run nox.

Doing so will install recruitment on your PYTHONPATH.

Using the USEVENV environment variable, a virtualenv can be created in the local ".nox/" directory with something like: USEVENV=virtualenv nox -s recruitment-venv-install-3.10.

All tests can be run with nox -s test and a single test can be run with something like the following:

```shell
TESTNAME=<tests-subdir>.<test-module>.<class-name>.<method-name> nox -s test
```

Coverage reports are optional and can be disabled using the COVERAGE environment variable set to a falsy value like "no".

Coming Soon...

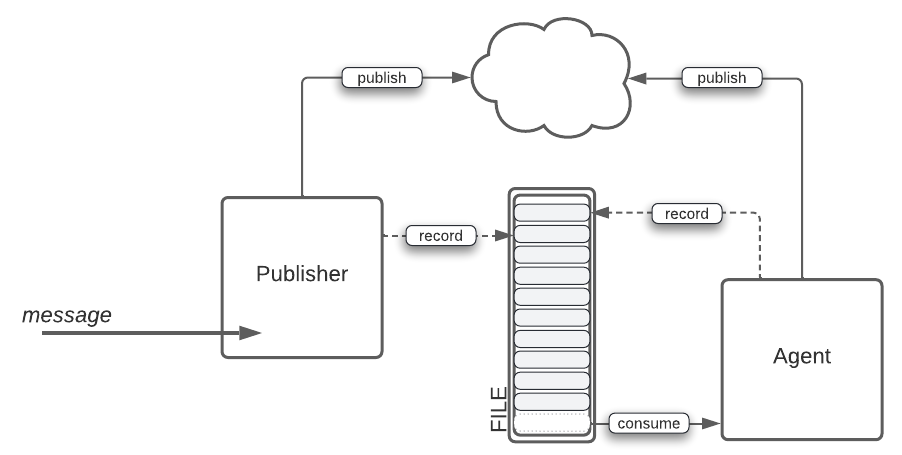

Sometimes you'd like to resume work or automate remediation. In such cases, you could serialize progress and persist it locally for another process to work from later. This sort of design closes the loop, ensuring that whatever work was tasked eventually gets done without error.

The picture above demonstrates a fail-safe apparatus in which a Publisher publishes messages to some cloud backend and records any failures to local disk. The Agent lives in a separate execution context and can re-publish the failed messages.
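Since this is a planned feature, the following is only a sketch of the idea under our own assumptions: failed messages are appended to a local JSON Lines file, and a separate step later re-publishes them. publish_or_record, republish_failures, the file name, and the file format are all hypothetical and not part of recruitment.

```python
import json
import tempfile
from pathlib import Path
from typing import Callable, Dict, List

Message = Dict[str, str]


def publish_or_record(
    message: Message, publish: Callable[[Message], None], failures: Path
) -> None:
    """Try to publish; on failure, append the message to a local JSON Lines file."""
    try:
        publish(message)
    except Exception:
        with failures.open("a", encoding="utf-8") as f:
            f.write(json.dumps(message) + "\n")


def republish_failures(
    publish: Callable[[Message], None], failures: Path
) -> List[Message]:
    """Re-publish recorded messages, keeping on disk only those that fail again."""
    if not failures.exists():
        return []
    pending = [
        json.loads(line)
        for line in failures.read_text(encoding="utf-8").splitlines()
        if line
    ]
    still_failing: List[Message] = []
    for message in pending:
        try:
            publish(message)
        except Exception:
            still_failing.append(message)
    failures.write_text(
        "".join(json.dumps(m) + "\n" for m in still_failing), encoding="utf-8"
    )
    return still_failing


# Simulated cloud backend: offline at first, back online later.
backend = {"online": False}
delivered: List[Message] = []


def publish(message: Message) -> None:
    if not backend["online"]:
        raise ConnectionError("backend unavailable")
    delivered.append(message)


with tempfile.TemporaryDirectory() as tmp:
    failures = Path(tmp) / "failed-messages.jsonl"
    publish_or_record({"id": "1", "body": "hello"}, publish, failures)  # recorded
    backend["online"] = True  # later, a separate process retries the backlog
    leftover = republish_failures(publish, failures)

print(delivered, leftover)  # [{'id': '1', 'body': 'hello'}] []
```

Rewriting the file with only the still-failing messages keeps the backlog idempotent: running the re-publish step again never resends a message that already went through.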
