Tensor Network Library with Symbolic Autograd


Tensorgrad and Tensor Cookbook

Tensorgrad

A Tensor & Deep Learning framework - It's like PyTorch meets SymPy.

Tensorgrad is an open-source Python package for symbolic tensor manipulation. It automatically performs the simplifications described in the Tensor Cookbook (draft), and can also be used as a machine learning framework.

Quickstart

Install tensorgrad via pip:

uv pip install tensorgrad

(Optional) For diagram visualizations (LaTeX/TikZ), install:

apt-get install texlive-luatex texlive-latex-extra texlive-fonts-extra poppler-utils

Examples

To run the examples for yourself, use the playground or see this notebook.

Derivative of L2 Loss

import sympy as sp

import tensorgrad as tg

import tensorgrad.functions as F

# Define sizes for the tensor edges and variables

b, x, y = sp.symbols("b x y")

X = tg.Variable("X", b, x)

Y = tg.Variable("Y", b, y)

W = tg.Variable("W", x, y)

# Define the error

error = X @ W - Y

# The Frobenius norm squared is just the contraction

# of all the edges of the tensor with itself

loss = error @ error

# Compute the derivative of the loss wrt W

expr = tg.Derivative(loss, W)

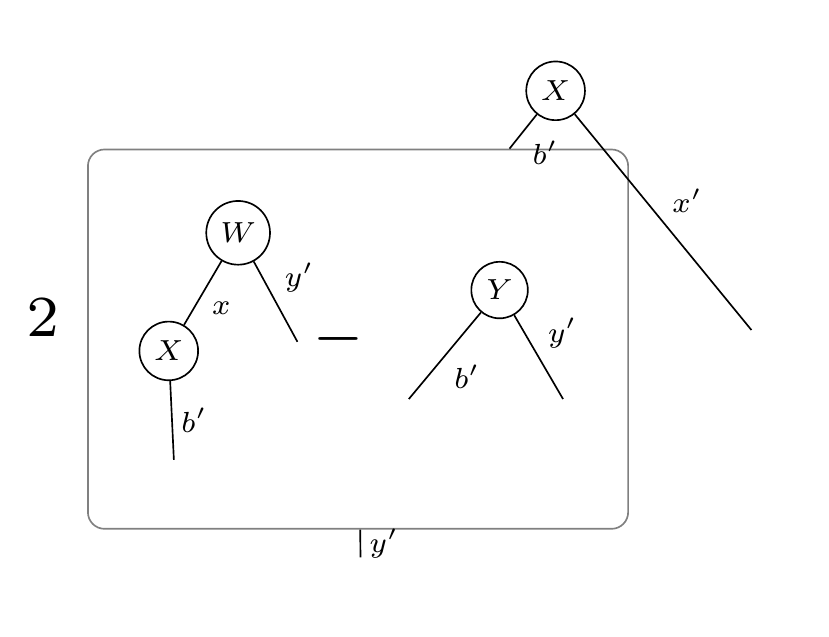

This will output the tensor diagram:

Tensorgrad can also output pytorch code for numerically computing the gradient with respect to W:

>>> to_pytorch(expr)

"""

import torch

WX = torch.einsum('xy,bx -> by', W, X)

subtraction = WX - Y

X_subtraction = torch.einsum('bx,by -> xy', X, subtraction)

final_result = 2 * X_subtraction

"""

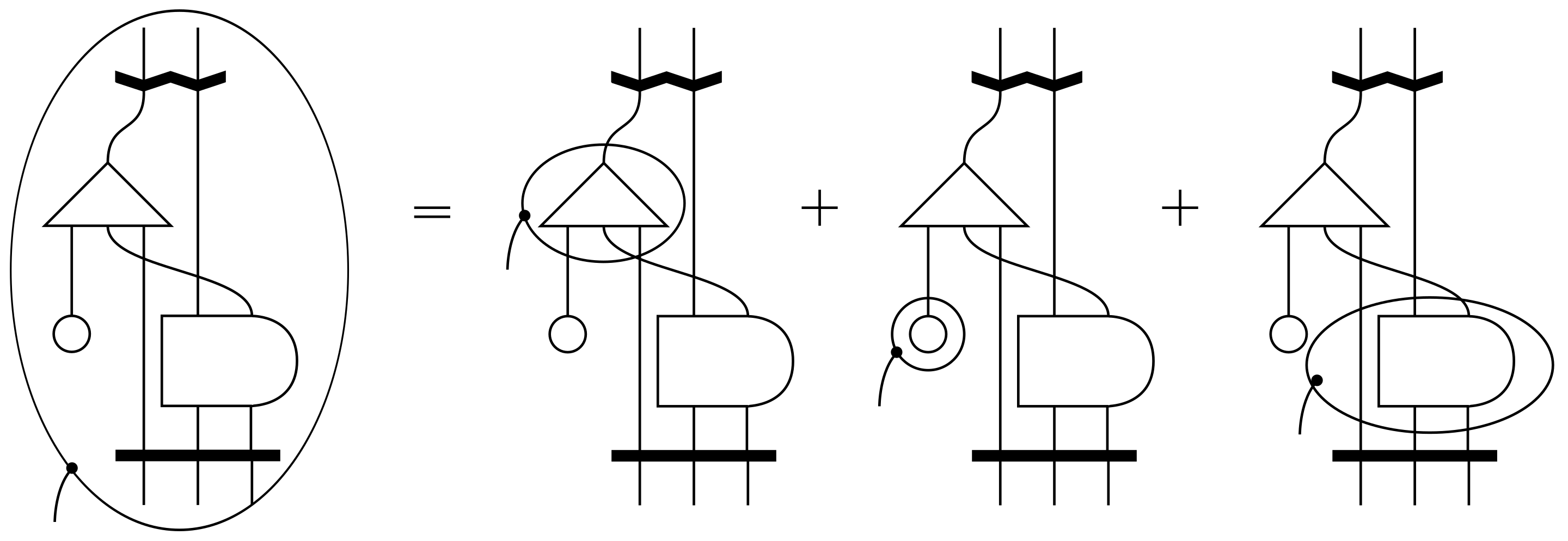
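As a sanity check on the generated code above, the following minimal sketch compares the closed form 2 Xᵀ(XW − Y) against central finite differences. It uses plain NumPy rather than tensorgrad or PyTorch, and the tensor sizes and random inputs are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
b, x, y = 5, 4, 3  # illustrative sizes for the batch, input and output edges
X = rng.normal(size=(b, x))
Y = rng.normal(size=(b, y))
W = rng.normal(size=(x, y))


def loss(W):
    """Squared Frobenius norm of the error X @ W - Y."""
    error = X @ W - Y
    return np.sum(error * error)


# Same contractions as the generated code, written with np.einsum.
WX = np.einsum("xy,bx->by", W, X)
grad = 2 * np.einsum("bx,by->xy", X, WX - Y)

# Central finite differences, one entry of W at a time.
eps = 1e-6
numeric = np.zeros_like(W)
for i in range(x):
    for j in range(y):
        step = np.zeros_like(W)
        step[i, j] = eps
        numeric[i, j] = (loss(W + step) - loss(W - step)) / (2 * eps)

max_abs_diff = np.max(np.abs(grad - numeric))
print(max_abs_diff)
```

Because the loss is quadratic in W, the central difference is exact up to floating-point rounding, so the two results should agree closely.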

Hessian of CE Loss

For a more complicated example, consider the following program for computing the Hessian of Cross Entropy Loss:

# Define the logits and targets as vectors

i = sp.symbols("i")

logits = tg.Variable("logits", i)

target = tg.Variable("target", i)

# Define the softmax cross-entropy loss

e = F.exp(logits)

softmax = e / F.sum(e)

ce = -target @ F.log(softmax)

# Compute the Hessian by taking the gradient of the gradient

H = ce.grad(logits).grad(logits)

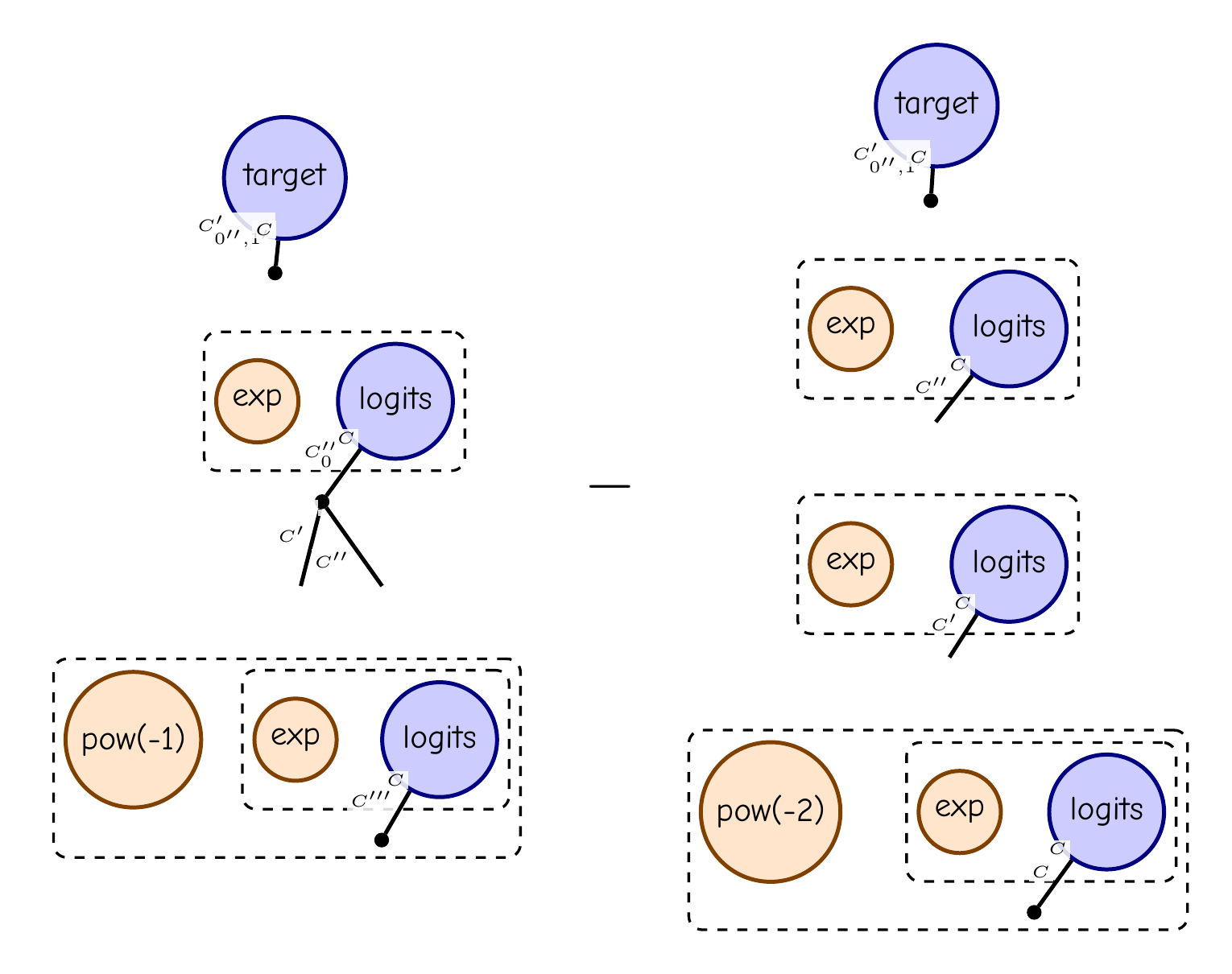

display_pdf_image(to_tikz(H.full_simplify()))

This is tensor diagram notation for (diag(p) - pp^T) sum(target), where p = softmax(logits).

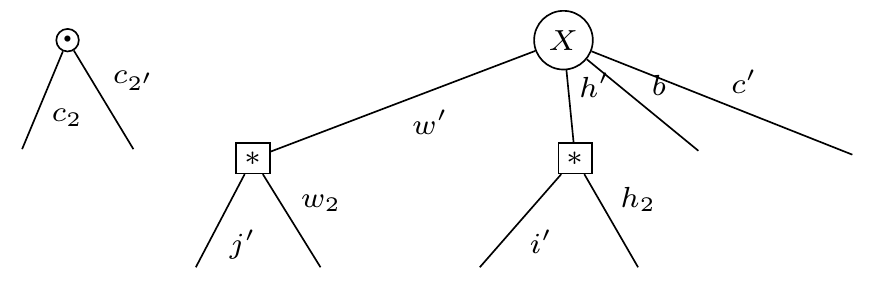
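The closed form (diag(p) - pp^T) sum(target) can be checked numerically. The sketch below is a plain NumPy illustration, not tensorgrad output: it builds the closed-form matrix and compares it with a finite-difference Hessian of the same loss. The vector length and the random inputs are assumptions made for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 4  # illustrative number of classes
logits = rng.normal(size=n)
target = rng.random(size=n)  # deliberately not normalized


def softmax(z):
    e = np.exp(z - z.max())  # shift for numerical stability
    return e / e.sum()


def ce(z):
    """Softmax cross-entropy: -target . log(softmax(z))."""
    return -target @ np.log(softmax(z))


p = softmax(logits)
H_closed = (np.diag(p) - np.outer(p, p)) * target.sum()

# Finite-difference Hessian using the standard four-point stencil.
eps = 1e-4
I = np.eye(n)
H_numeric = np.zeros((n, n))
for i in range(n):
    for j in range(n):
        H_numeric[i, j] = (
            ce(logits + eps * I[i] + eps * I[j])
            - ce(logits + eps * I[i] - eps * I[j])
            - ce(logits - eps * I[i] + eps * I[j])
            + ce(logits - eps * I[i] - eps * I[j])
        ) / (4 * eps**2)

print(np.max(np.abs(H_closed - H_numeric)))
```

Each row of the closed-form Hessian sums to zero, which reflects that the loss does not change when a constant is added to every logit.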

Expectations

Tensorgrad can also take expectations of arbitrary functions with respect to Gaussian tensors.

As an example, consider the L2 Loss program from before:

# Define sizes for the tensor edges and variables

b, x, y = sp.symbols("b x y")

X = tg.Variable("X", b, x)

Y = tg.Variable("Y", b, y)

W = tg.Variable("W", x, y)

# Define the mean and covariance variables of the distribution

mu = tg.Variable("mu", x, y)

# The covariance of a matrix is a 4-tensor with two symmetries

C = tg.Variable("C", x, y, x2=x, y2=y).with_symmetries("x x2, y y2")

# Take the expectation of the L2 loss, assuming W ~ Normal(mu, C)

l2 = F.sum((X @ W - Y)**2)

E = tg.Expectation(l2, W, mu, C, covar_names={"x": "x2", "y": "y2"})

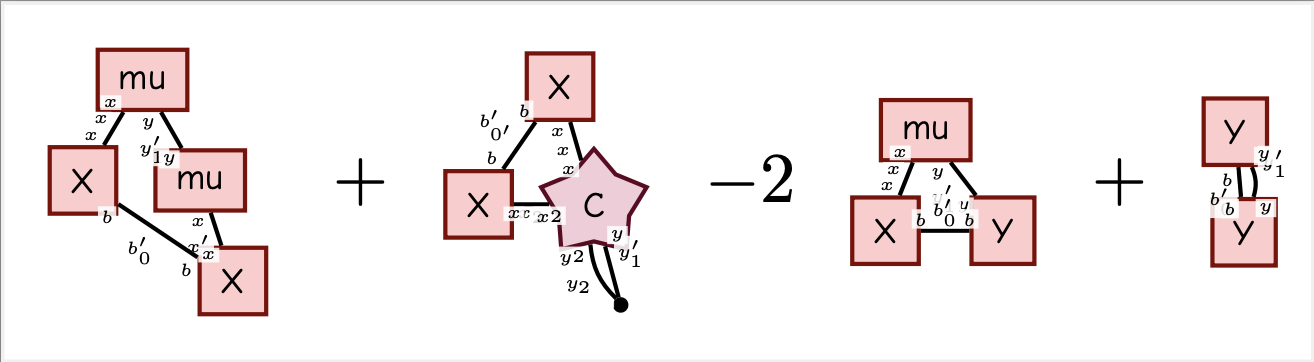

display_pdf_image(to_tikz(E.full_simplify()))

Note that the covariance is a rank-4 tensor (illustrated with a star) since we take the expectation with respect to a matrix. This is different from the normal "matrix shaped" covariance you get if you take expectation with respect to a vector.
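To make the role of the rank-4 covariance concrete, here is a minimal NumPy sketch of the standard identity E‖XW − Y‖² = ‖X·mu − Y‖² + Σ X[b,x] X[b,x2] C[x,y,x2,y], checked against a Monte Carlo estimate. This is not tensorgrad output, and the simplified diagram may be arranged differently. The factor `L` used to build a valid covariance, the sizes, and the sample count are all assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
b, x, y, k = 4, 3, 2, 6  # illustrative sizes; k is the rank of the factor L
X = rng.normal(size=(b, x))
Y = rng.normal(size=(b, y))
mu = rng.normal(size=(x, y))

# Build a valid covariance C[x, y, x2, y2] = sum_k L[x, y, k] * L[x2, y2, k].
L = 0.5 * rng.normal(size=(x, y, k))
C = np.einsum("xyk,zwk->xyzw", L, L)

# Closed form: squared error at the mean plus a contraction of C with X twice.
mean_term = np.sum((X @ mu - Y) ** 2)
cov_term = np.einsum("bx,bz,xyzy->", X, X, C)
closed_form = mean_term + cov_term

# Monte Carlo: sample W = mu + L z with z standard normal, so Cov(W) = C.
n = 200_000
z = rng.normal(size=(n, k))
W = mu + np.einsum("xyk,nk->nxy", L, z)
residual = np.einsum("bx,nxy->nby", X, W) - Y
estimate = np.mean(np.sum(residual**2, axis=(1, 2)))

print(closed_form, estimate)
```

Note how the covariance term contracts both `y` edges of C with each other, which is only possible because C carries four edges rather than two.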

Evaluation

Tensorgrad can evaluate your diagrams using PyTorch Named Tensors. It uses graph isomorphism detection to eliminate common subexpressions.

Code Generation

Tensorgrad can convert your diagrams back into PyTorch code. This gives an efficient way to compute gradients and higher-order derivatives in neural networks.

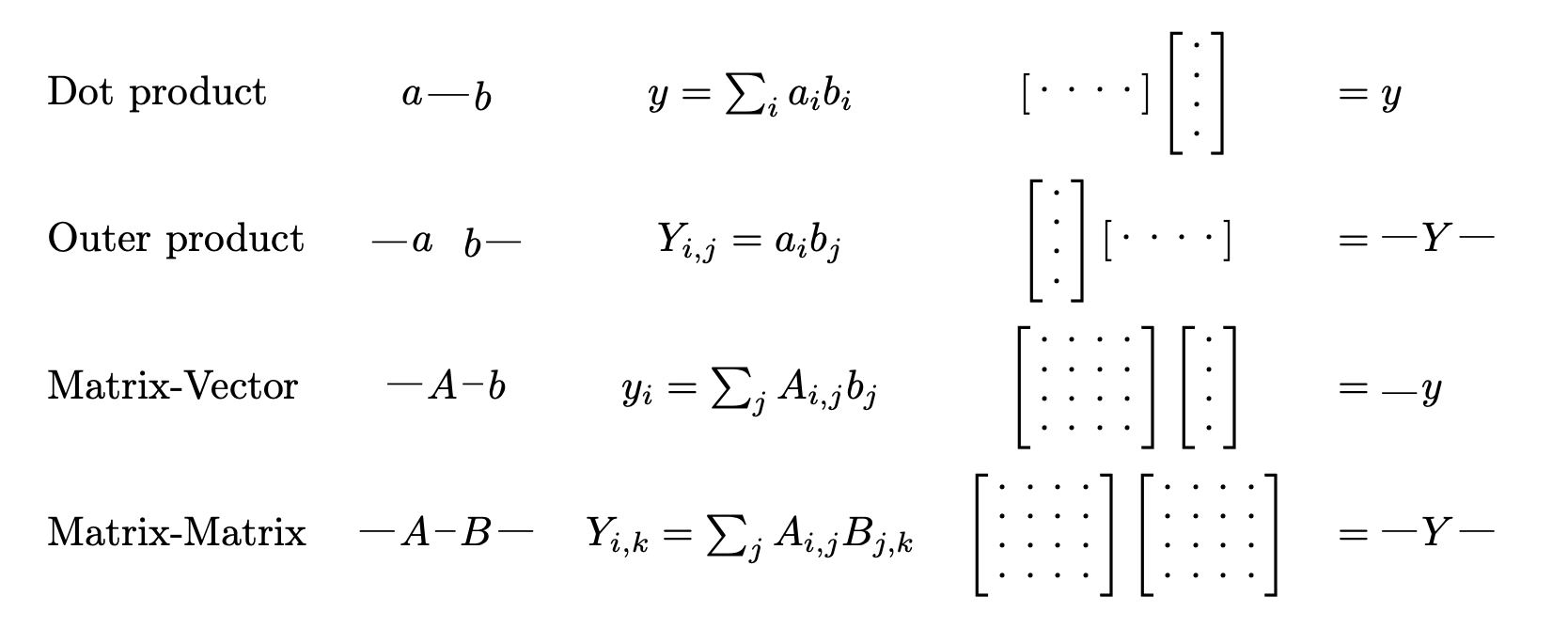

Matrix Calculus

In Penrose's book, The Road to Reality: A Complete Guide to the Laws of the Universe, he introduces a notation for taking derivatives on tensor networks. In this library we try to follow Penrose's notation, expanding it as needed to handle a full "chain rule" on tensor functions.

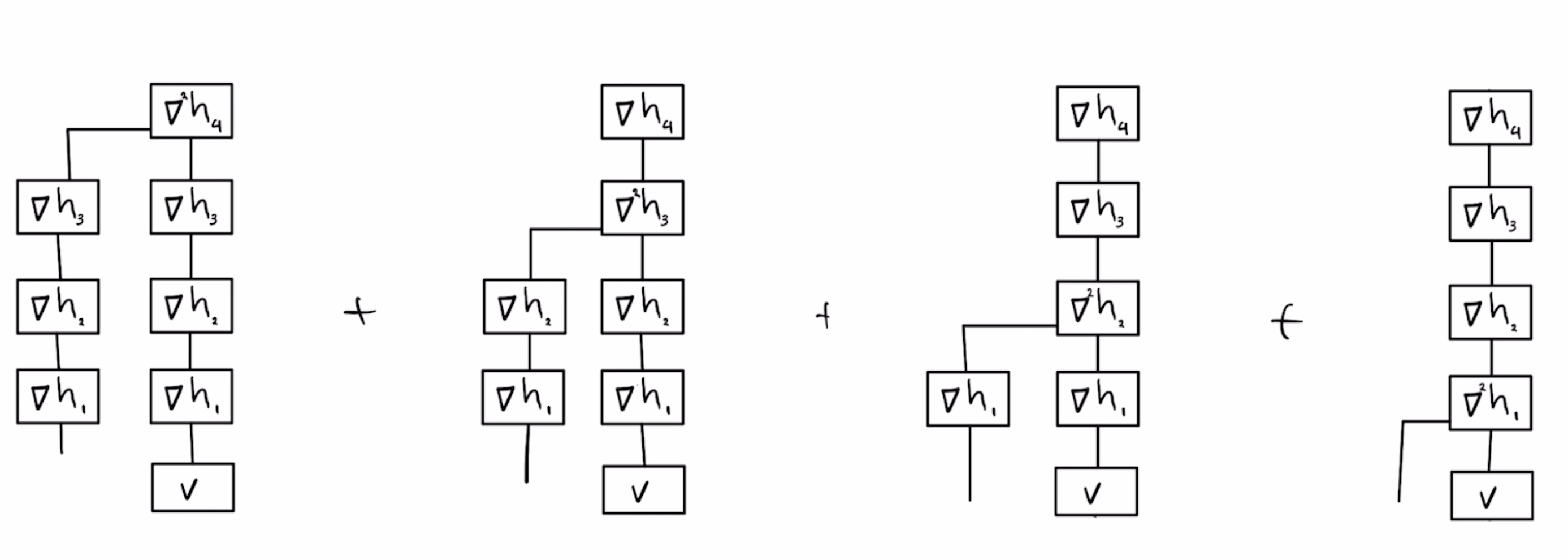

Another source of inspiration was Yaroslav Bulatov's derivation of the Hessian of neural networks:

More stuff

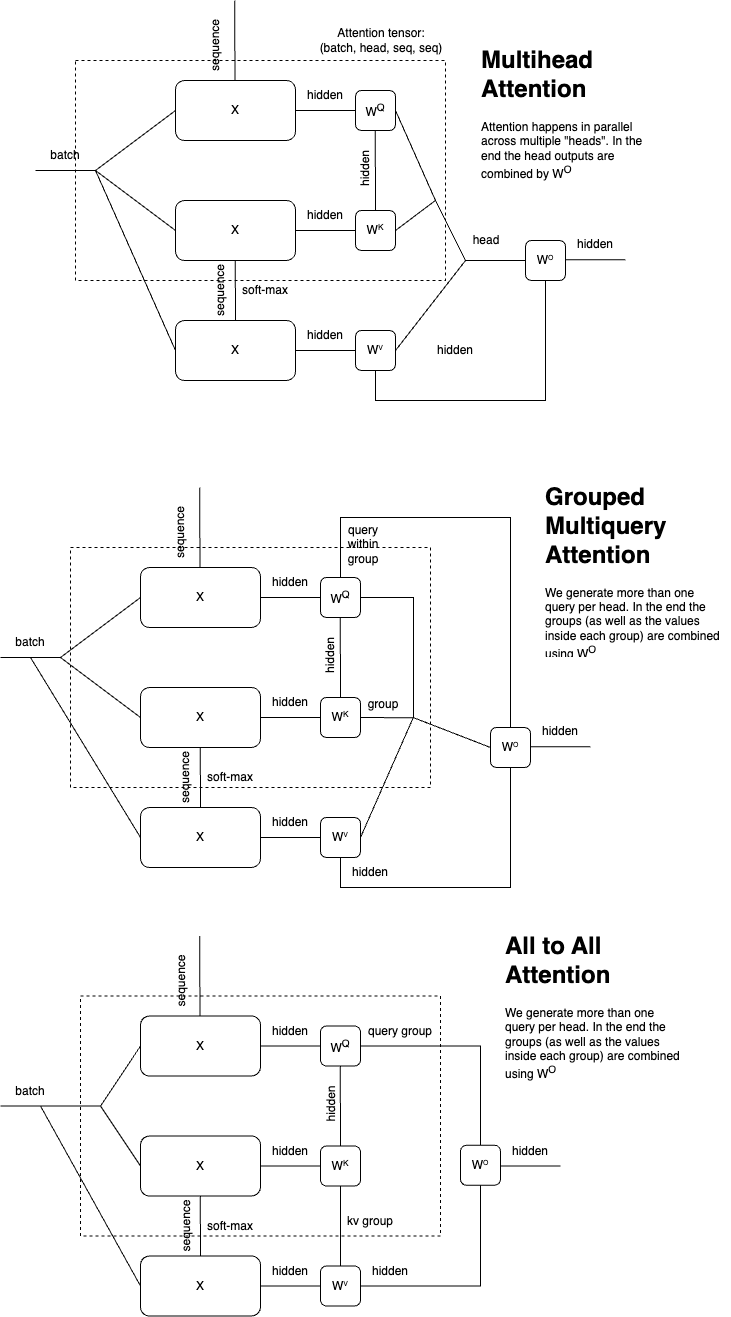

Transformers

Convolutional Neural Networks

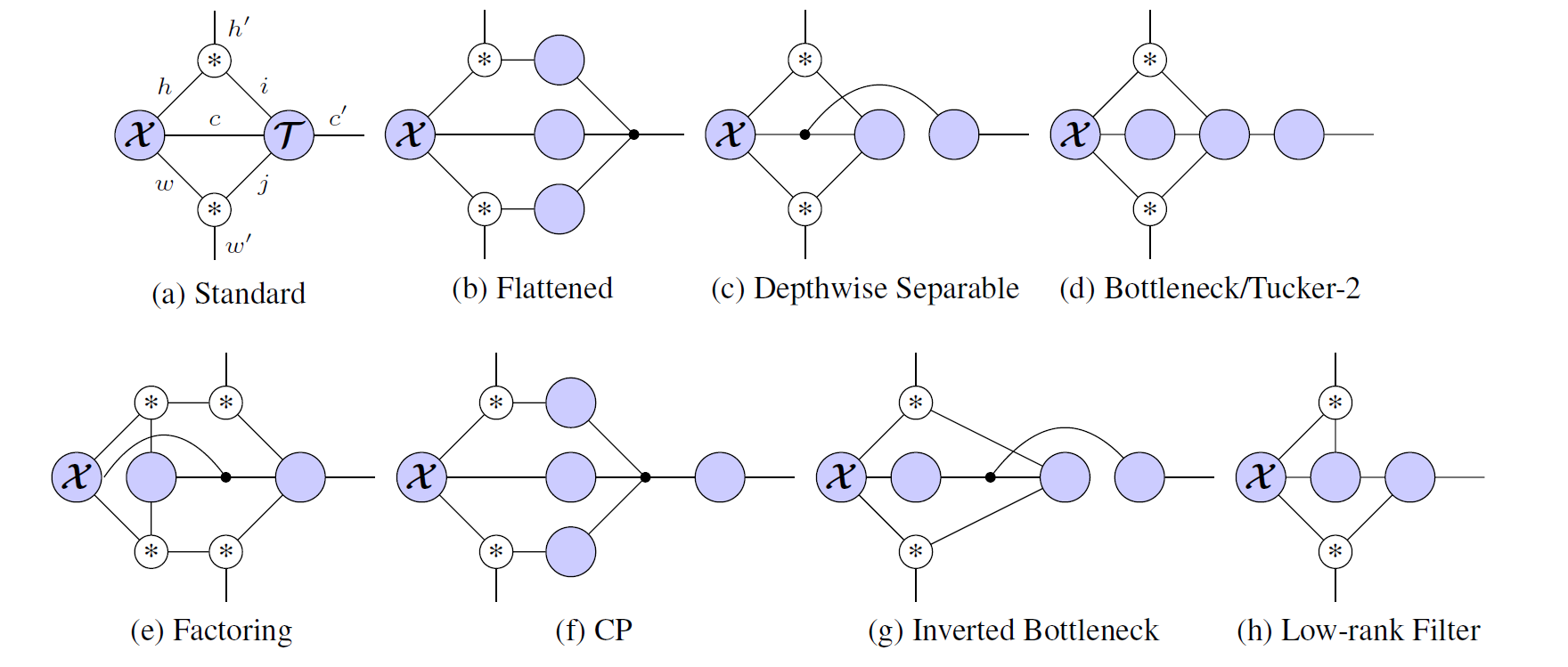

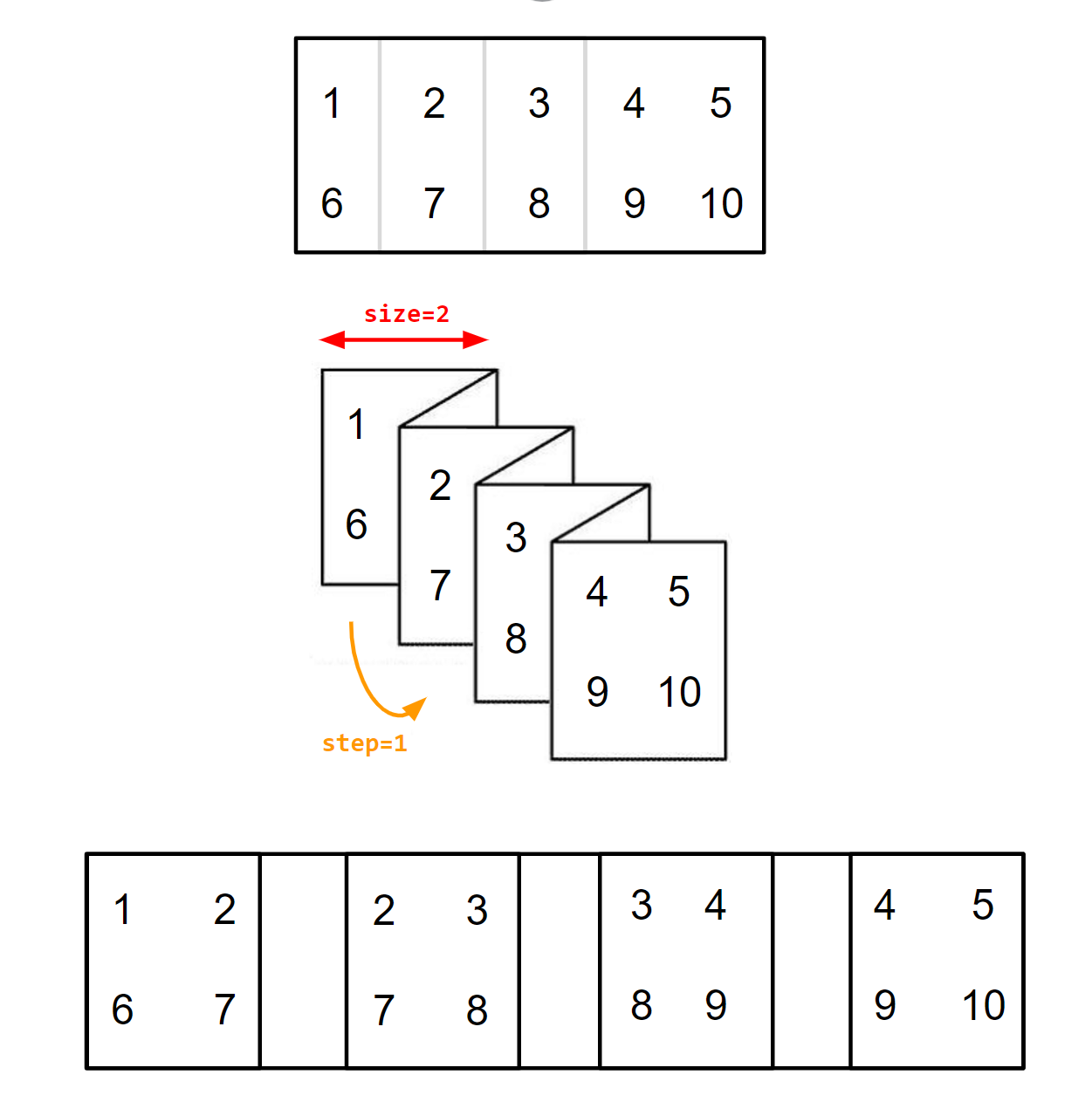

The main ingredients in CNNs are the linear operations Fold and Unfold. Unfold takes an image with dimensions HxW and outputs P "patches" of size K^2, where K is the kernel size. Fold is the reverse operation. Since both are linear (they consist only of copying and adding), we can express them as a tensor with shape (H, W, P, K^2).
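The following NumPy sketch builds such a 0/1 tensor explicitly and uses it for both operations. It assumes stride 1 and no padding, and the sizes are illustrative. It is not how tensorgrad represents the operator internally.

```python
import numpy as np

H, W, K = 5, 6, 3  # illustrative image height, width and kernel size
out_h, out_w = H - K + 1, W - K + 1  # stride 1, no padding (assumption)
P = out_h * out_w

# unfold[h, w, p, q] = 1 if pixel (h, w) is entry q of patch p, else 0.
unfold = np.zeros((H, W, P, K * K))
for ph in range(out_h):
    for pw in range(out_w):
        for kh in range(K):
            for kw in range(K):
                unfold[ph + kh, pw + kw, ph * out_w + pw, kh * K + kw] = 1

image = np.arange(H * W, dtype=float).reshape(H, W)

# Unfold: contract the image edges (h, w), leaving (P, K^2) patches.
patches = np.einsum("hw,hwpq->pq", image, unfold)

# Fold: contract the patch edges (p, q) with the same tensor.
# Pixels covered by several patches are summed.
folded = np.einsum("pq,hwpq->hw", patches, unfold)

# Number of patches covering each pixel.
coverage = unfold.sum(axis=(2, 3))
print(patches.shape, folded.shape)
```

In this sketch Fold is the transpose of Unfold rather than a strict inverse: folding the unfolded image returns each pixel multiplied by the number of patches that cover it.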

Hayashi et al. show that if you define a tensor (∗)_{i,j,k} = [i=j+k], then the "Unfold" operator factors along the spatial dimensions, and you can write a bunch of different convolutional neural networks easily as tensor networks:

With tensorgrad you can write the "standard" convolutional neural network like this:

data = Variable("data", ["b", "c", "w", "h"])

unfold = Convolution("w", "j", "w2") @ Convolution("h", "i", "h2")

kernel = Variable("kernel", ["c", "i", "j", "c2"])

expr = data @ unfold @ kernel

And then easily find the Jacobian symbolically with expr.grad(kernel):

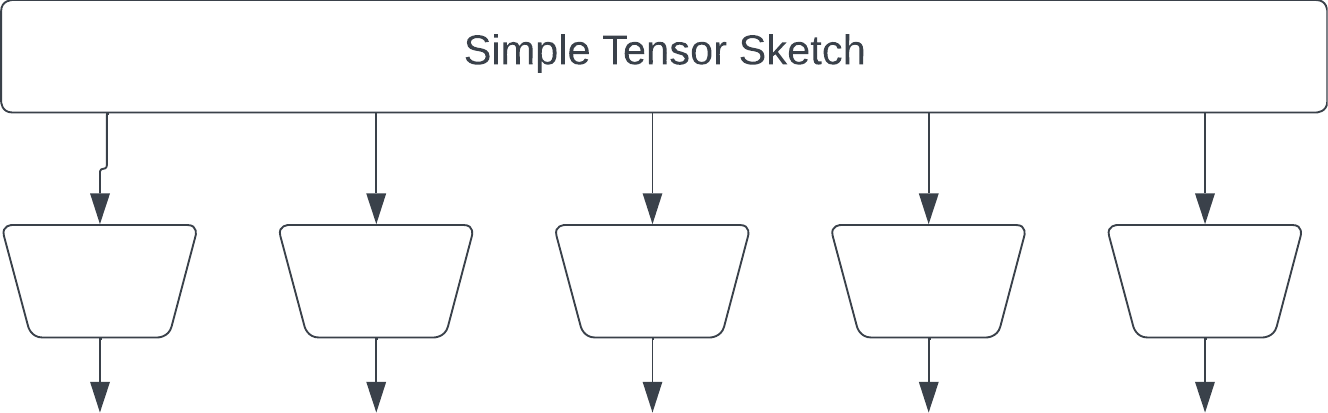
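The same contraction can be sketched in plain NumPy to show what the [i=j+k] tensor does. The helper `conv_tensor` below is a hypothetical stand-in for the library's `Convolution`, assuming stride 1 and no padding; the sizes are illustrative. The result is compared with a direct sliding-window computation.

```python
import numpy as np


def conv_tensor(n_in, n_kernel):
    """Return T[i, j, k] = 1 if i == j + k else 0 (stride 1, no padding)."""
    n_out = n_in - n_kernel + 1
    i = np.arange(n_in)[:, None, None]
    j = np.arange(n_kernel)[None, :, None]
    k = np.arange(n_out)[None, None, :]
    return (i == j + k).astype(float)


rng = np.random.default_rng(0)
B, C, W, H, K, C2 = 2, 3, 7, 6, 3, 4  # illustrative sizes
data = rng.normal(size=(B, C, W, H))     # edges (b, c, w, h)
kernel = rng.normal(size=(C, K, K, C2))  # edges (c, i, j, c2)

Tw = conv_tensor(W, K)  # edges (w, j, w2)
Th = conv_tensor(H, K)  # edges (h, i, h2)

# data @ unfold @ kernel, written as one einsum; v = w2, u = h2, d = c2.
out = np.einsum("bcwh,wjv,hiu,cijd->bdvu", data, Tw, Th, kernel)

# Reference: slide a K x K window over the image explicitly.
ref = np.zeros((B, C2, W - K + 1, H - K + 1))
for v in range(W - K + 1):
    for u in range(H - K + 1):
        patch = data[:, :, v:v + K, u:u + K]  # axes (b, c, j, i)
        ref[:, :, v, u] = np.einsum("bcji,cijd->bd", patch, kernel)

print(out.shape)
```

Since the expression is linear in `kernel`, its Jacobian with respect to the kernel is the same network with the kernel removed, that is, the contraction of `data` with the two convolution tensors.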

Tensor Sketch

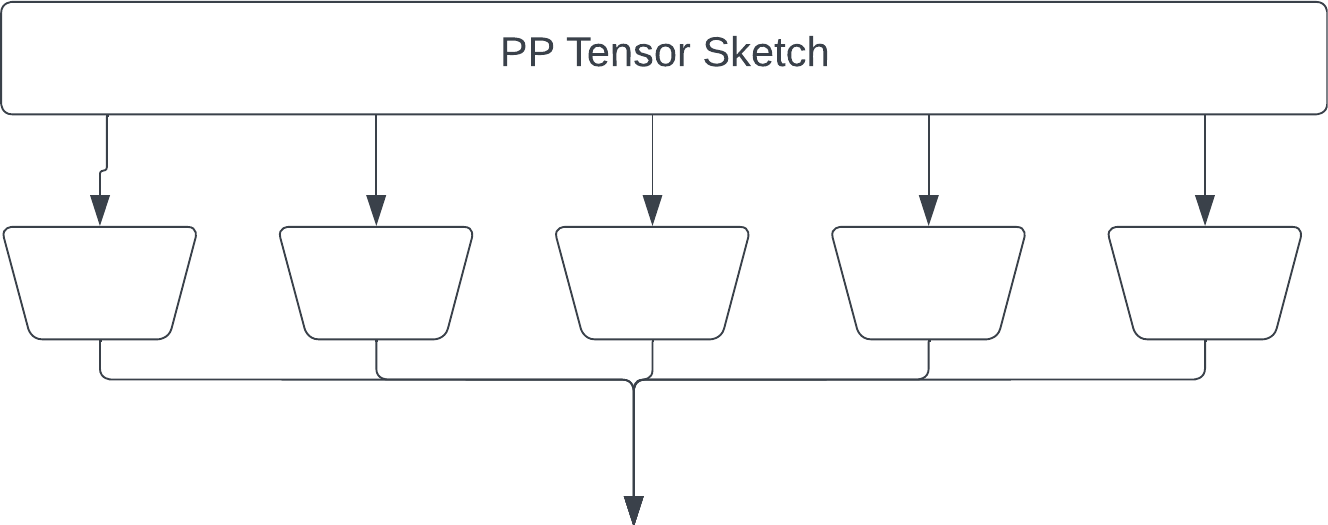

Taken from this Twitter thread: I wish I had known about Tensor Graphs back when I worked on Tensor-sketching. Let me correct this now and explain dimensionality reduction for tensors using Tensor Networks:

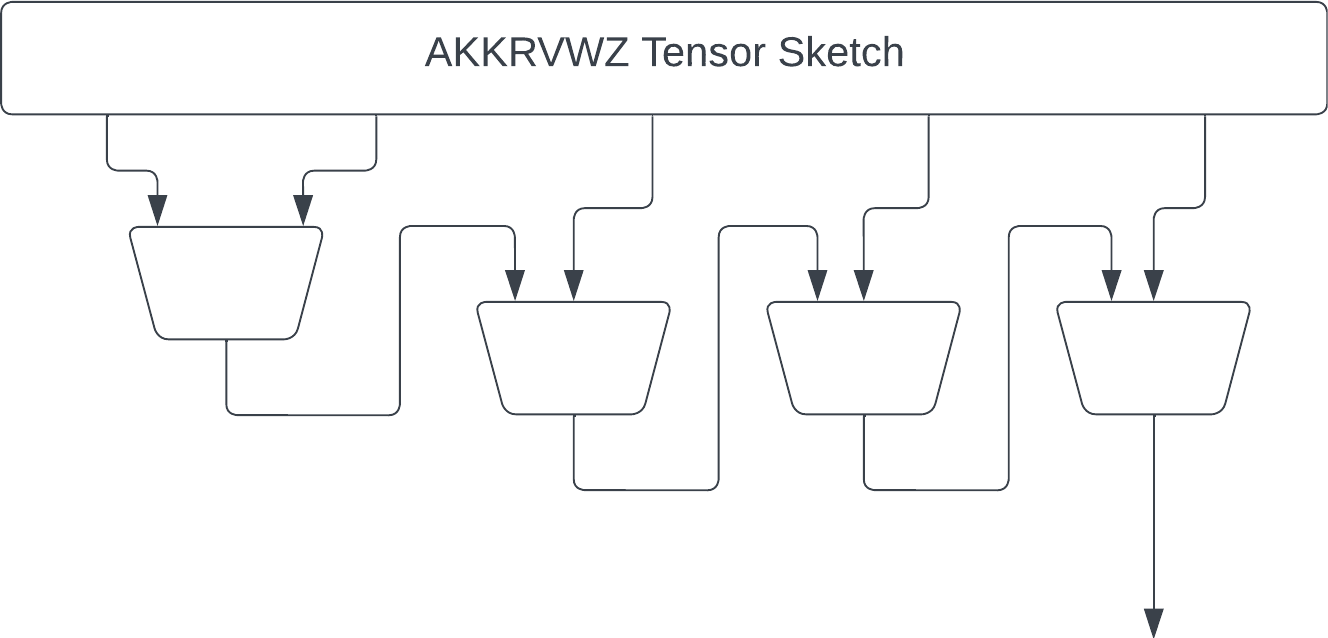

The second version is the "original" Tensor Sketch by Rasmus Pagh and Ninh Pham. (https://rasmuspagh.net/papers/tensorsketch.pdf) Each fiber is reduced by a JL sketch, and the result is element-wise multiplied. Note the output of each JL is larger than in the "simple" sketch to give the same output size.
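A minimal sketch of the "sketch each fiber, then multiply element-wise" idea, for a rank-1 tensor x ⊗ y. It uses dense Gaussian matrices as the JL sketches, which is an assumption made for brevity and not necessarily the construction used in the paper. The dimensions are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
d1, d2, m = 8, 9, 2000  # illustrative input dimensions and sketch size

# One independent JL matrix per mode (dense Gaussian here, by assumption).
A = rng.normal(size=(m, d1))
B = rng.normal(size=(m, d2))


def sketch(u, v):
    """Sketch the rank-1 tensor u (x) v without ever forming it."""
    return (A @ u) * (B @ v) / np.sqrt(m)


x = rng.normal(size=d1)
y = rng.normal(size=d2)
fast = sketch(x, y)

# Equivalent dense sketch: row i of M is kron(A[i], B[i]), applied to vec(x (x) y).
M = np.einsum("ia,ib->iab", A, B).reshape(m, d1 * d2) / np.sqrt(m)
explicit = M @ np.outer(x, y).ravel()

# The squared norm of the sketch estimates |x|^2 * |y|^2.
ratio = (fast @ fast) / ((x @ x) * (y @ y))
print(ratio)
```

The point of the construction is that `sketch` costs O(m(d1 + d2)) operations, while the equivalent dense matrix `M` has m·d1·d2 entries.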

Next we have the "recursive" sketch by myself and coauthors in https://thomasahle.com/#paper-tensorsketch-joint. In the paper we sometimes describe this as a tree, but it doesn't really matter. We just had already created the tree-graphic when we realized.

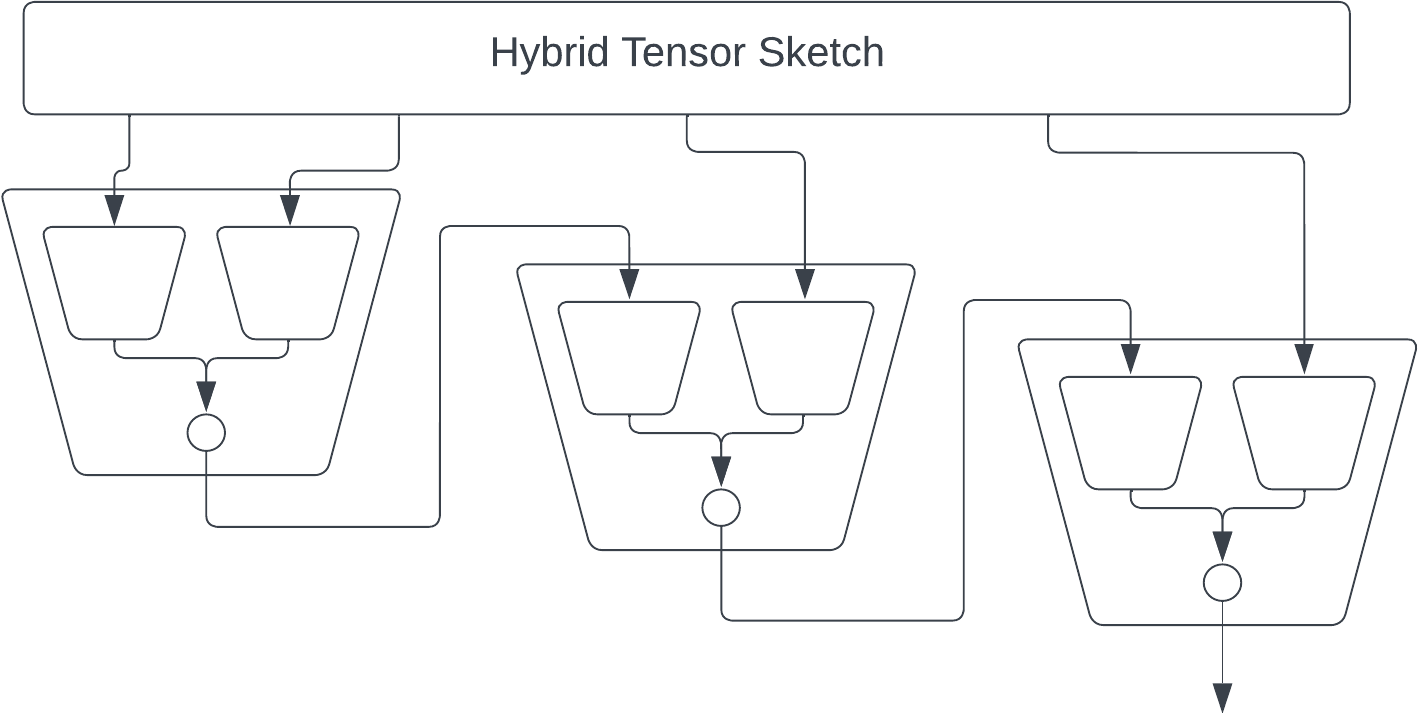

The main issue with the AKKRVWZ-sketch was that we used order-3 tensors internally, which require more space/time than simple random matrices in the PP-sketch. We can mitigate this issue by replacing each order-3 tensor with a simple order-2 PP-sketch.

Finally we can speed up each matrix multiplication by using FastJL, which is itself basically an outer product of a bunch of tiny matrices. But at this point my picture is starting to get a bit overwhelming.
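The "outer product of tiny matrices" structure can be illustrated with the Hadamard matrix, the dense part of a Fast JL transform. The sketch below only shows that part; the random sign flips and subsampling that a full Fast JL transform also uses are omitted, and the size 8 is an illustrative choice.

```python
import numpy as np

H2 = np.array([[1.0, 1.0], [1.0, -1.0]])

# The 8x8 Hadamard matrix is a Kronecker product of three 2x2 matrices.
H8 = np.kron(H2, np.kron(H2, H2))

rng = np.random.default_rng(0)
v = rng.normal(size=8)

# Dense application: one 8x8 matrix-vector product.
dense = H8 @ v

# Structured application: view v as a 2x2x2 tensor and
# contract each edge with its own tiny 2x2 matrix.
t = v.reshape(2, 2, 2)
fast = np.einsum("ai,bj,ck,ijk->abc", H2, H2, H2, t).ravel()

print(np.max(np.abs(dense - fast)))
```

In tensor-network terms, the large matrix is replaced by three small nodes, one on each edge of the reshaped vector, which is what makes the transform cheap to apply.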

See also

- Tool for creating tensor diagrams from einsum by Thomas Ahle

- Ideograph: A Language for Expressing and Manipulating Structured Data by Stephen Mell, Osbert Bastani, Steve Zdancewic
