Torch Dimensionality Reduction Library


Torch Dimensionality Reduction

TorchDR is a high-performance dimensionality reduction (DR) library built on PyTorch. It provides GPU- and multi-GPU-accelerated DR methods in a unified framework with a simple, scikit-learn-compatible API.

Key Features

| Feature | Description |
|---|---|
| High Performance | Engineered for speed with GPU acceleration, torch.compile support, and optimized algorithms leveraging sparsity and negative sampling. |
| Multi-GPU Support | Scale to massive datasets with built-in distributed computing. Use the torchdr CLI or torchrun for easy multi-GPU execution of compatible modules and methods. |
| Modular by Design | Every component is designed to be easily customized, extended, or replaced to fit your specific needs. |
| Memory-Efficient | Natively handles sparsity and memory-efficient symbolic operations. Supports PyTorch DataLoader for streaming large datasets. |
| Seamless Integration | Fully compatible with the scikit-learn and PyTorch ecosystems. Use familiar APIs and integrate effortlessly into your existing workflows. |
| Minimal Dependencies | Requires only PyTorch, NumPy, and scikit-learn; optionally add Faiss for fast k-NN or KeOps for symbolic computation. |

Getting Started

TorchDR offers a user-friendly API similar to scikit-learn where dimensionality reduction modules can be called with the fit_transform method. It seamlessly accepts both NumPy arrays and PyTorch tensors as input, ensuring that the output matches the type and backend of the input.

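```python
from sklearn.datasets import fetch_openml
from torchdr import UMAP

# as_frame=False returns a NumPy array rather than a pandas DataFrame
x = fetch_openml("mnist_784", as_frame=False).data.astype("float32")

z = UMAP(n_neighbors=30).fit_transform(x)
```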

GPU Acceleration: Set device="cuda" to run on GPU. By default (device="auto"), TorchDR uses the input data's device.

```python
z = UMAP(n_neighbors=30, device="cuda").fit_transform(x)
```

Multi-GPU: Use the torchdr CLI to parallelize across GPUs with no code changes:

```shell
torchdr my_script.py            # Use all available GPUs
torchdr --gpus 4 my_script.py   # Use 4 GPUs
```

torch.compile: Enable compile=True for additional speed on PyTorch 2.0+.

Backends: The backend parameter controls k-NN and memory-efficient computations:

| Backend | Description |
|---|---|
| "faiss" | Fast approximate k-NN via Faiss (recommended) |
| "keops" | Exact symbolic computation via KeOps with linear memory |
| None | Raw PyTorch |
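For intuition about what the k-NN step computes, the NumPy sketch below performs an exact brute-force k-NN search. This is presumably close in spirit to what a raw-PyTorch computation has to do, while Faiss answers the same query approximately through an index. It is not TorchDR's implementation: the function name knn_bruteforce and its chunk_size parameter are hypothetical. Queries are processed in chunks, so only a chunk_size × n block of distances is held in memory at a time instead of the full n × n matrix.

```python
import numpy as np


def knn_bruteforce(X, k, chunk_size=1024):
    """Exact k nearest neighbors (excluding self) by squared Euclidean distance.

    Hypothetical helper for illustration: queries are processed in chunks so the
    distance block held in memory is at most chunk_size x n, never n x n.
    """
    X = np.asarray(X, dtype=np.float64)
    n = X.shape[0]
    sq_norms = (X ** 2).sum(axis=1)
    indices = np.empty((n, k), dtype=np.int64)
    distances = np.empty((n, k), dtype=np.float64)
    for start in range(0, n, chunk_size):
        stop = min(start + chunk_size, n)
        # ||x_i - x_j||^2 = ||x_i||^2 - 2 <x_i, x_j> + ||x_j||^2
        block = sq_norms[start:stop, None] - 2.0 * X[start:stop] @ X.T + sq_norms[None, :]
        np.maximum(block, 0.0, out=block)  # clip tiny negatives caused by round-off
        # Exclude each point from its own neighbor list.
        rows = np.arange(stop - start)
        block[rows, start + rows] = np.inf
        # argpartition selects the k smallest per row without a full sort.
        part = np.argpartition(block, k - 1, axis=1)[:, :k]
        part_d = np.take_along_axis(block, part, axis=1)
        # Sort only those k candidates by distance.
        order = np.argsort(part_d, axis=1)
        indices[start:stop] = np.take_along_axis(part, order, axis=1)
        distances[start:stop] = np.take_along_axis(part_d, order, axis=1)
    return distances, indices
```

The cost is still O(n²) distance evaluations overall; chunking only bounds peak memory, which is why an approximate index becomes attractive as n grows.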

DataLoader for Large Datasets: Pass a PyTorch DataLoader instead of a tensor to stream data batch-by-batch. Requires backend="faiss".

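```python
import torch
from torch.utils.data import DataLoader, TensorDataset
from torchdr import UMAP

X = torch.randn(100_000, 50)  # placeholder data; substitute your own tensor
dataloader = DataLoader(TensorDataset(X), batch_size=10000, shuffle=False)
z = UMAP(backend="faiss").fit_transform(dataloader)  # streams X batch by batch
```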

Methods

Neighbor Embedding

TorchDR provides a suite of neighbor embedding methods, well suited to data visualization.

| Method | Complexity | Multi-GPU |
|---|---|---|
| UMAP | O(n) | ✅ |
| LargeVis | O(n) | ✅ |
| InfoTSNE | O(n) | ✅ |
| PACMAP | O(n) | ❌ |
| SNE | O(n²) | ❌ |
| TSNE | O(n²) | ❌ |
| TSNEkhorn | O(n²) | ❌ |
| COSNE | O(n²) | ❌ |

Note: Quadratic methods support backend="keops" for exact computation with linear memory usage.
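A common reason neighbor embedding methods can scale linearly is that, instead of normalizing over all n² pairs, each point interacts only with its k-NN edges plus a fixed number of randomly drawn "negative" points. The NumPy sketch below shows a simplified LargeVis/UMAP-style objective using a Student-t similarity q = 1 / (1 + d²). The function name, the uniform sampling of negatives, and the exact loss form are assumptions for illustration, not TorchDR's code. Each call touches n · (k + n_negatives) pairs, which grows linearly in n.

```python
import numpy as np


def negative_sampling_loss(Z, neighbors, n_negatives, rng):
    """Simplified neighbor-embedding loss evaluated on n * (k + n_negatives) pairs.

    Z: (n, d) embedding; neighbors: (n, k) k-NN indices computed in input space;
    rng: a numpy.random.Generator. Attraction pulls each point toward its k-NN;
    repulsion pushes it away from uniformly sampled non-self points (an
    illustrative assumption, not TorchDR's sampling scheme).
    """
    n, k = neighbors.shape
    eps = 1e-12

    def q(i, j):
        # Student-t (Cauchy) similarity between embedded points, in (0, 1].
        d2 = ((Z[i] - Z[j]) ** 2).sum(axis=-1)
        return 1.0 / (1.0 + d2)

    # Attractive term over the k-NN edges of every point.
    rows = np.repeat(np.arange(n), k)
    attractive = -np.log(q(rows, neighbors.ravel()) + eps).sum()

    # Repulsive term: offsets in [1, n-1] guarantee a negative is never the point itself.
    neg_rows = np.repeat(np.arange(n), n_negatives)
    neg_cols = (neg_rows + rng.integers(1, n, size=n * n_negatives)) % n
    repulsive = -np.log(1.0 - q(neg_rows, neg_cols) + eps).sum()

    n_pairs = rows.size + neg_rows.size  # n * (k + n_negatives), linear in n
    return (attractive + repulsive) / n, n_pairs
```

An exact method such as TSNE instead needs the normalization constant over all pairs, which is where the O(n²) entries come from.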

Spectral Embedding

TorchDR provides various spectral embedding methods: PCA, IncrementalPCA, ExactIncrementalPCA, KernelPCA, PHATE. PCA and ExactIncrementalPCA support multi-GPU distributed training via the distributed="auto" parameter.
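One way an incremental PCA can remain exact is to stream over batches while accumulating the sample count, the feature sums, and the Gram matrix XᵀX, then eigendecompose the resulting covariance once at the end. The NumPy sketch below illustrates that idea. That ExactIncrementalPCA works this way is an assumption based on its name, and the function name exact_incremental_pca is hypothetical. Memory depends only on the number of features d (O(d²)), not on the number of samples.

```python
import numpy as np


def exact_incremental_pca(batches, n_components):
    """PCA from streamed batches via accumulated first and second moments.

    Hypothetical helper: the result matches full-batch PCA up to floating-point
    error (and the sign of each component), since the covariance is exact.
    """
    n, total, gram = 0, None, None
    for batch in batches:
        batch = np.asarray(batch, dtype=np.float64)
        if total is None:
            d = batch.shape[1]
            total, gram = np.zeros(d), np.zeros((d, d))
        n += batch.shape[0]
        total += batch.sum(axis=0)
        gram += batch.T @ batch
    mean = total / n
    # Cov = (X^T X - n * mean mean^T) / (n - 1)
    cov = (gram - n * np.outer(mean, mean)) / (n - 1)
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1][:n_components]
    return mean, eigvecs[:, order].T, eigvals[order]
```

Subtracting n · mean meanᵀ at the end can lose precision when the mean is large relative to the spread of the data; centering with a rough preliminary mean is a common remedy.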

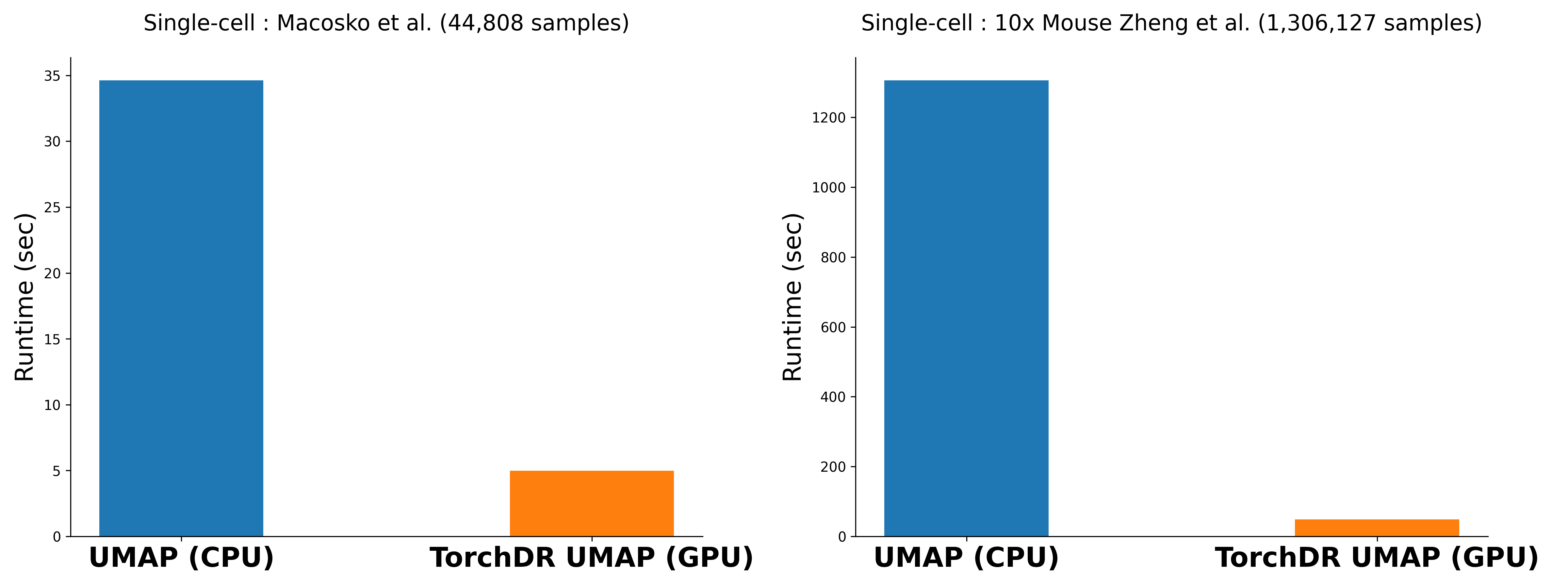

Benchmarks

Relying on TorchDR enables an orders-of-magnitude improvement in runtime performance compared to CPU-based implementations. See the code.

Examples

See the examples folder for all examples.

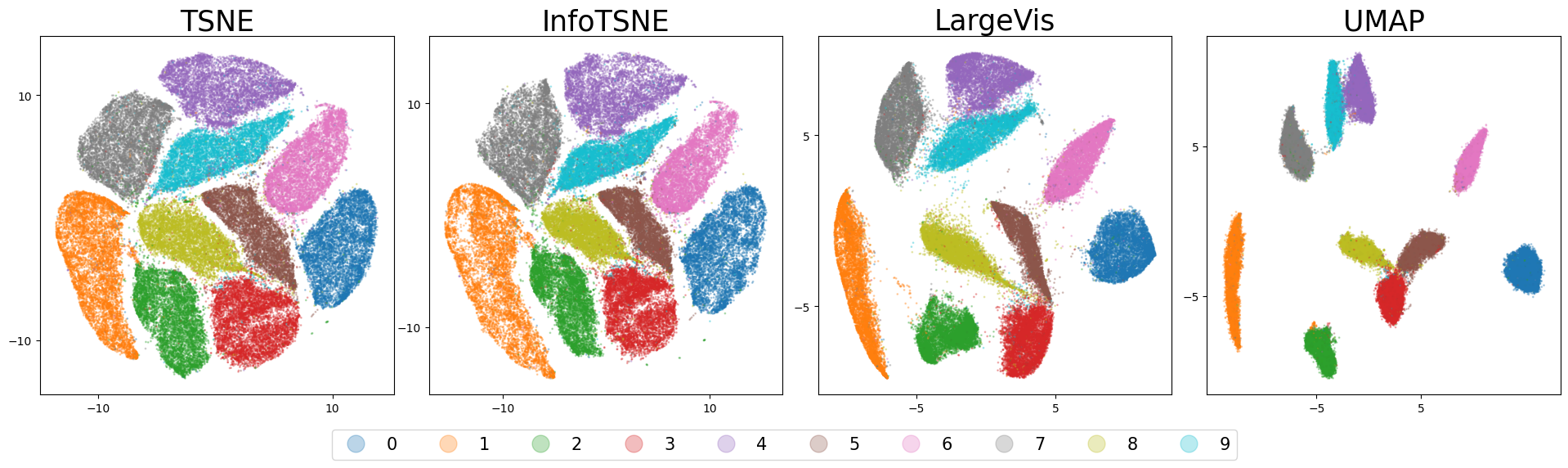

MNIST. (Code) A comparison of various neighbor embedding methods on the MNIST digits dataset.

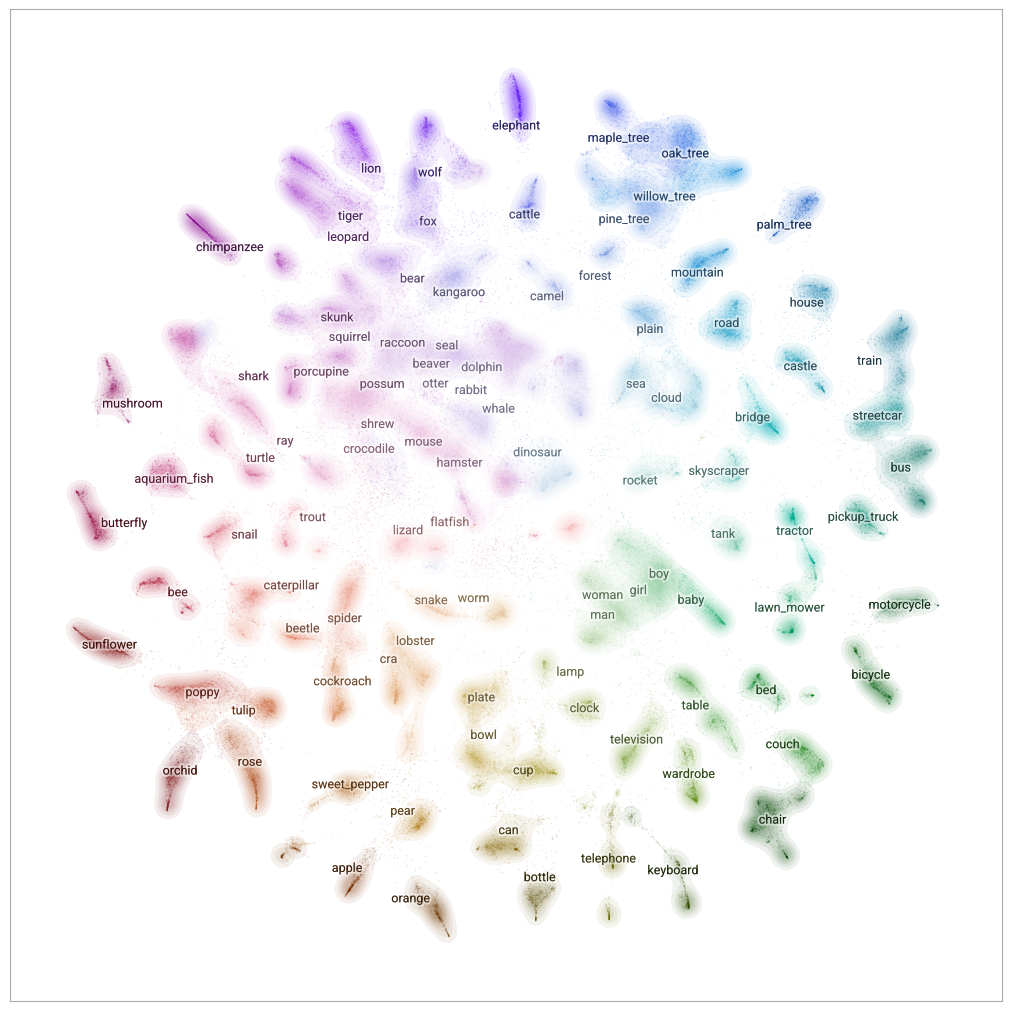

CIFAR100. (Code) Visualizing the CIFAR100 dataset using DINO features and TSNE.

Advanced Features

Affinities

TorchDR features a wide range of affinities that can be used as building blocks for DR algorithms. These include:

- Affinities based on k-NN normalizations: SelfTuningAffinity, MAGICAffinity, UMAPAffinity, PHATEAffinity, PACMAPAffinity.
- Doubly stochastic affinities: SinkhornAffinity, DoublyStochasticQuadraticAffinity.
- Adaptive affinities with entropy control: EntropicAffinity, SymmetricEntropicAffinity.
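To make the last two families concrete, here is a NumPy sketch of (a) an entropic affinity, where each row's bandwidth ε_i is found by bisection so that the row's perplexity exp(H) matches a target, as in t-SNE, and (b) symmetric Sinkhorn scaling of a Gaussian kernel into a doubly stochastic matrix. The function names, bisection bounds, tolerances, and iteration counts are illustrative assumptions; TorchDR's EntropicAffinity and SinkhornAffinity may differ in parameterization and numerical details.

```python
import numpy as np


def entropic_affinity(D, perplexity, n_iter=100):
    """Row-stochastic affinity P_ij ∝ exp(-D_ij / eps_i) with exp(H(P_i)) = perplexity.

    D: (n, n) squared distances. The diagonal is excluded (no self-affinity).
    """
    n = D.shape[0]
    target = np.log(perplexity)
    P = np.zeros_like(D, dtype=np.float64)
    mask = ~np.eye(n, dtype=bool)
    for i in range(n):
        d = D[i, mask[i]]
        lo, hi = -20.0, 20.0  # bisection bounds on log(eps_i)
        for _ in range(n_iter):
            log_eps = 0.5 * (lo + hi)
            logits = -d / np.exp(log_eps)
            logits -= logits.max()  # numerical stability before exponentiating
            p = np.exp(logits)
            p /= p.sum()
            H = -(p * np.log(p + 1e-300)).sum()
            # Entropy grows with eps: shrink eps if the row is too spread out.
            if H > target:
                hi = log_eps
            else:
                lo = log_eps
        P[i, mask[i]] = p
    return P


def sinkhorn_affinity(D, eps=1.0, n_iter=1000, tol=1e-10):
    """Symmetric doubly stochastic P = diag(u) K diag(u) with K = exp(-D / eps)."""
    K = np.exp(-D / eps)
    np.fill_diagonal(K, 0.0)  # no self-affinity (an illustrative choice)
    u = np.ones(D.shape[0])
    for _ in range(n_iter):
        # Geometric-mean (damped) symmetric Sinkhorn update; fixed point: u * (K u) = 1.
        u = np.sqrt(u / (K @ u))
        P = u[:, None] * K * u[None, :]
        if np.abs(P.sum(axis=1) - 1.0).max() < tol:
            break
    return P
```

The entropic rows each have the same effective number of neighbors, while the Sinkhorn matrix balances total affinity across both rows and columns.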

Evaluation Metrics

TorchDR provides efficient GPU-compatible evaluation metrics: silhouette_score, knn_label_accuracy, neighborhood_preservation, kmeans_ari.
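As a rough guide to what two of these metrics measure, here is a NumPy sketch of a k-NN label agreement score (the fraction of each point's k embedding neighbors sharing its label, averaged over points) and a neighborhood overlap score (the average fraction of each point's input-space k-NN that remain k-NN in the embedding). These definitions and the function names knn_label_agreement and neighborhood_overlap are assumptions for illustration; TorchDR's knn_label_accuracy and neighborhood_preservation may be defined differently and have different signatures.

```python
import numpy as np


def _knn_indices(X, k):
    # Exact k-NN by squared Euclidean distance, excluding each point itself.
    sq = (X ** 2).sum(axis=1)
    D = sq[:, None] - 2.0 * X @ X.T + sq[None, :]
    np.fill_diagonal(D, np.inf)
    return np.argsort(D, axis=1)[:, :k]


def knn_label_agreement(Z, labels, k=10):
    """Mean fraction of each point's k embedding neighbors with the same label."""
    labels = np.asarray(labels)
    nbrs = _knn_indices(np.asarray(Z, dtype=np.float64), k)
    return float((labels[nbrs] == labels[:, None]).mean())


def neighborhood_overlap(X, Z, k=10):
    """Mean |kNN_X(i) ∩ kNN_Z(i)| / k over all points i."""
    nx = _knn_indices(np.asarray(X, dtype=np.float64), k)
    nz = _knn_indices(np.asarray(Z, dtype=np.float64), k)
    overlaps = [len(set(a) & set(b)) for a, b in zip(nx.tolist(), nz.tolist())]
    return float(np.mean(overlaps)) / k
```

Both scores lie in [0, 1], with 1 meaning labels, or input-space neighborhoods, are perfectly preserved in the embedding.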

Installation

Install the core torchdr library from PyPI:

```shell
pip install torchdr  # or: uv pip install torchdr
```

Note: torchdr does not install faiss-gpu or pykeops by default. You need to install them separately to use the corresponding backends.

- Faiss (recommended): For the fastest k-NN computations, install Faiss. Please follow their official installation guide. A common method is using conda:

  ```shell
  conda install -c pytorch -c nvidia faiss-gpu
  ```

- KeOps: For memory-efficient symbolic computations, install PyKeOps:

  ```shell
  pip install pykeops
  ```

Installation from Source

If you want to use the latest, unreleased version of torchdr, you can install it directly from GitHub:

```shell
pip install git+https://github.com/torchdr/torchdr
```

Finding Help

If you have any questions or suggestions, feel free to open an issue on the issue tracker or contact Hugues Van Assel directly.


Download files


Source Distribution: torchdr-0.4.tar.gz

Built Distribution: torchdr-0.4-py3-none-any.whl


File details

Details for the file torchdr-0.4.tar.gz.

File metadata

- Download URL: torchdr-0.4.tar.gz

- Upload date:

- Size: 3.9 MB

- Tags: Source

- Uploaded using Trusted Publishing? Yes

- Uploaded via: uv/0.9.29 (uv publish, CI on Ubuntu 24.04)

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 1da342e2a46670ec939e3239828f6e67a944124888db159f29328f3e1aa743a0 |
| MD5 | e389b9a5bc717a49b061ddcd4206d6e8 |
| BLAKE2b-256 | ea75ac974c233bc6df0c3fae56190e16d3e42a44cc2f84dd1ba90ff769bcbb2c |

File details

Details for the file torchdr-0.4-py3-none-any.whl.

File metadata

- Download URL: torchdr-0.4-py3-none-any.whl

- Upload date:

- Size: 178.8 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? Yes

- Uploaded via: uv/0.9.29 (uv publish, CI on Ubuntu 24.04)

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 97b07f0f88ff46dddc7e6bd218bec9d367e2cf9a0488775843e3780acc77b888 |
| MD5 | 04376a371da84f6b68b2b41a9001c91d |
| BLAKE2b-256 | 070b80261313c9dcc092dc45463dfafffffa26e5c9864dc76c976b677878ec4f |
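To check a downloaded file against the SHA256 digests listed for each file, a streaming computation with Python's standard hashlib module is enough. The helper names sha256_of and verify and the 1 MiB chunk size are illustrative choices; the expected digest below is the SHA256 value published for torchdr-0.4-py3-none-any.whl.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Return the hex SHA256 digest of a file, reading it in 1 MiB chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify(path, expected_hex):
    """True if the file's SHA256 digest matches the published one (case-insensitive)."""
    return sha256_of(path) == expected_hex.lower()


# Published SHA256 for the wheel.
WHEEL_SHA256 = "97b07f0f88ff46dddc7e6bd218bec9d367e2cf9a0488775843e3780acc77b888"

if __name__ == "__main__" and Path("torchdr-0.4-py3-none-any.whl").exists():
    print(verify("torchdr-0.4-py3-none-any.whl", WHEEL_SHA256))
```

Reading in chunks keeps memory constant regardless of file size, which matters little for a 178.8 kB wheel but is the usual pattern for larger artifacts.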