
xgboost-distribution

XGBoost for probabilistic prediction. Like NGBoost, but faster, and in the XGBoost scikit-learn API.

Installation

```
$ pip install xgboost-distribution
$ pip install xgboost-distribution[gpu]  # for GPU support
```

Dependencies:

```
python_requires = >=3.10
install_requires =
    scikit-learn
    xgboost>=3.0.0
```

Usage

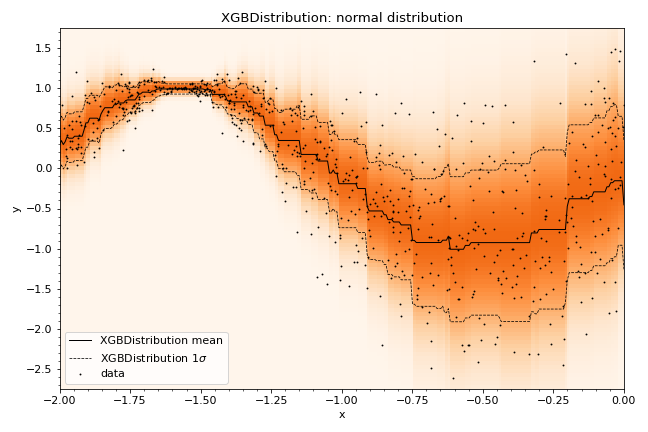

XGBDistribution follows the XGBoost scikit-learn API, with an additional keyword argument specifying the distribution, which is fit via Maximum Likelihood Estimation:

```python
from sklearn.datasets import fetch_california_housing
from sklearn.model_selection import train_test_split

from xgboost_distribution import XGBDistribution

data = fetch_california_housing()
X, y = data.data, data.target
X_train, X_test, y_train, y_test = train_test_split(X, y)

model = XGBDistribution(
    distribution="normal",
    n_estimators=500,
    early_stopping_rounds=10
)
model.fit(X_train, y_train, eval_set=[(X_test, y_test)])
```

See the documentation for all available distributions.

Note: We recommend using early stopping to avoid overfitting, as otherwise the determined distribution parameters can become unreliable.
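To make the early-stopping rule concrete, here is a minimal sketch of the logic, assuming XGBoost's usual semantics: training stops once the validation metric (for XGBDistribution, presumably the negative log-likelihood on `eval_set`) has not improved for `early_stopping_rounds` consecutive rounds, and the best round is the one kept. The helper `early_stopping_round` is hypothetical and not part of either library.

```python
def early_stopping_round(val_losses, early_stopping_rounds):
    """Hypothetical helper: return (best_iteration, stopped_at), both 0-based,
    for a sequence of per-round validation losses (lower is better)."""
    best_iter = 0
    best_loss = float("inf")
    for i, loss in enumerate(val_losses):
        if loss < best_loss:
            # Strict improvement: remember this round as the best so far.
            best_loss, best_iter = loss, i
        elif i - best_iter >= early_stopping_rounds:
            # No improvement for `early_stopping_rounds` rounds: stop here.
            return best_iter, i
    # Ran out of rounds (e.g. n_estimators reached) without triggering a stop.
    return best_iter, len(val_losses) - 1


# Validation loss bottoms out at round 2, then rises for two rounds.
best, stopped = early_stopping_round([5.0, 4.0, 3.0, 3.5, 3.6, 3.7], 2)
```

Here training would stop at round 4 and keep round 2 as the best model; without such a cutoff, the later rounds keep fitting the training data while the held-out likelihood gets worse.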

After fitting, we can predict the parameters of the distribution:

```python
preds = model.predict(X_test)
mean, std = preds.loc, preds.scale
```

Note that this returns a namedtuple of numpy arrays, one for each parameter of the distribution (we use the scipy stats naming conventions for the parameters, see e.g. scipy.stats.norm for the normal distribution).
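Because the prediction follows scipy's `loc`/`scale` convention, turning it into a prediction interval is straightforward. The sketch below uses a hypothetical `NormalPreds` namedtuple as a stand-in for the object returned by `model.predict` with `distribution="normal"`, and the standard library's `statistics.NormalDist` instead of scipy, so it runs without a fitted model; `central_interval` is an illustrative helper, not part of xgboost-distribution.

```python
from collections import namedtuple
from statistics import NormalDist

# Hypothetical stand-in for the namedtuple returned by model.predict(X_test)
# with distribution="normal"; the real object holds numpy arrays.
NormalPreds = namedtuple("NormalPreds", ["loc", "scale"])


def central_interval(preds, coverage=0.9):
    """Return (lower, upper) bounds of the central `coverage` interval
    of each predicted normal distribution."""
    # Standard-normal quantile, e.g. ~1.96 for coverage=0.95.
    z = NormalDist().inv_cdf(0.5 + coverage / 2)
    lower = [m - z * s for m, s in zip(preds.loc, preds.scale)]
    upper = [m + z * s for m, s in zip(preds.loc, preds.scale)]
    return lower, upper


preds = NormalPreds(loc=[2.0, 1.5], scale=[0.5, 0.2])
lower, upper = central_interval(preds, coverage=0.95)
```

The interval width scales with the predicted `scale`, so inputs the model is less certain about get wider intervals.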

NGBoost performance comparison

XGBDistribution follows the method shown in the NGBoost library, using natural gradients to estimate the parameters of the distribution.
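For the normal distribution, the natural gradient has a simple closed form. The sketch below assumes the parameterisation NGBoost uses for its normal distribution, (loc, log scale), whose Fisher information is diag(1/σ², 2), so the natural gradient is the ordinary gradient of the negative log-likelihood premultiplied by the inverse Fisher information. Whether xgboost-distribution uses exactly this parameterisation internally is an assumption here, and all function names are hypothetical.

```python
import math


def normal_nll(y, loc, log_scale):
    """Negative log-likelihood of y under N(loc, exp(log_scale)**2)."""
    scale = math.exp(log_scale)
    return log_scale + 0.5 * math.log(2 * math.pi) + (y - loc) ** 2 / (2 * scale**2)


def normal_grad(y, loc, log_scale):
    """Ordinary gradient of the NLL w.r.t. (loc, log_scale)."""
    var = math.exp(2 * log_scale)
    d_loc = -(y - loc) / var
    d_log_scale = 1.0 - (y - loc) ** 2 / var
    return d_loc, d_log_scale


def normal_natural_grad(y, loc, log_scale):
    """Natural gradient F^{-1} g, with Fisher information F = diag(1/var, 2)."""
    var = math.exp(2 * log_scale)
    g_loc, g_log_scale = normal_grad(y, loc, log_scale)
    return g_loc * var, g_log_scale / 2.0
```

Note how the natural-gradient component for `loc` reduces to the negative residual, -(y - loc), so it no longer shrinks or blows up with the current variance the way the ordinary gradient -(y - loc)/σ² does.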

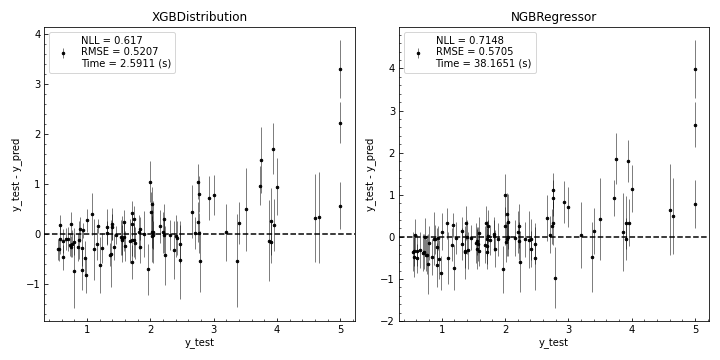

Below, we show a performance comparison of XGBDistribution and the NGBoost NGBRegressor, using the California Housing dataset, estimating normal distributions. While the performance of the two models is fairly similar (measured on negative log-likelihood of a normal distribution and the RMSE), XGBDistribution is around 15x faster (timed on both fit and predict steps):

Please see the experiments page for results across various datasets.
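The two metrics in the comparison can be computed from predicted `loc`/`scale` arrays roughly as follows. This is a sketch only: the exact evaluation code behind the comparison is not shown here, and using `loc` as the point prediction for RMSE is an assumption. The function names are hypothetical.

```python
import math


def normal_mean_nll(y_true, loc, scale):
    """Mean negative log-likelihood of observations under per-sample normals."""
    total = 0.0
    for y, m, s in zip(y_true, loc, scale):
        total += math.log(s) + 0.5 * math.log(2 * math.pi) + (y - m) ** 2 / (2 * s**2)
    return total / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error of point predictions (here: the predicted loc)."""
    return math.sqrt(sum((y - p) ** 2 for y, p in zip(y_true, y_pred)) / len(y_true))


y_true = [1.0, 2.0]
loc = [1.0, 4.0]
scale = [1.0, 2.0]
nll = normal_mean_nll(y_true, loc, scale)
err = rmse(y_true, loc)
```

Unlike RMSE, the NLL also penalises a badly calibrated `scale`: being overconfident (too small a scale) on a large residual is punished heavily.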

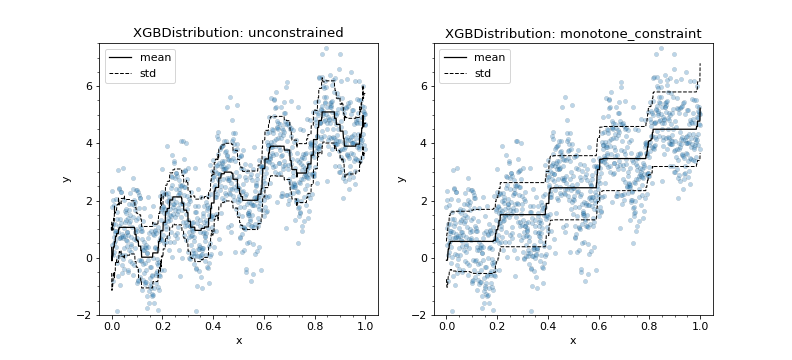

Full XGBoost features

XGBDistribution offers the full set of XGBoost features available in the XGBoost scikit-learn API, allowing, for example, probabilistic regression with monotonic constraints:

Acknowledgements

This package would not exist without the excellent work from:

NGBoost - which demonstrated how gradient boosting with natural gradients can be used to estimate the parameters of distributions. Much of the gradient calculation code was adapted from there.

XGBoost - which provides the gradient boosting algorithms used here; in particular, its sklearn APIs were taken as a blueprint.

Note

This project has been set up using PyScaffold 4.0.1. For details and usage information on PyScaffold see https://pyscaffold.org/.


File details

Details for the file xgboost_distribution-0.4.0.tar.gz.

File metadata

- Download URL: xgboost_distribution-0.4.0.tar.gz

- Upload date:

- Size: 212.7 kB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/6.1.0 CPython/3.10.16

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | b6f86225f17cf207d4bbf76c7ed1f60549ad9afc74e243741d8f9f2c29c4dbe8 |
| MD5 | 680adf76998952ec4aca290cecef8fe9 |
| BLAKE2b-256 | 1ec083683038b36c7c31c4b3f290978c2b69076bfe774be8b50fb959f820c764 |

File details

Details for the file xgboost_distribution-0.4.0-py2.py3-none-any.whl.

File metadata

- Download URL: xgboost_distribution-0.4.0-py2.py3-none-any.whl

- Upload date:

- Size: 19.2 kB

- Tags: Python 2, Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/6.1.0 CPython/3.10.16

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 934083f1b51e6ff13ff443b9fb4a226f75628b2e3cfb2af3f7694bc68ac464d4 |
| MD5 | 55447ce2b41fbdb0862c0aed51426f45 |
| BLAKE2b-256 | adf75b7b62d43b6426b0b3dc21ff8757dc452df189431e4811d38391b740bc21 |