Aggregator tool for multiple Amplicon Architect outputs

Amplicon Suite Aggregator

Description

Aggregates the results from AmpliconSuite

- Takes in zip files (completed results of individual or grouped Amplicon Suite runs)

- Aggregates results

- Packages results into a new file

- Outputs an aggregated .html and .csv file of results.

- The result file (.tar.gz) contains the aggregated results of all individual AmpliconSuite runs. It can be loaded directly onto AmpliconRepository.

- Can also take additional files along with the upload, provided the directory they are in contains a file named AUX_DIR.
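
As a minimal sketch of the AUX_DIR convention above, the helper below places a marker file named AUX_DIR in a directory of additional files. The helper name `mark_aux_dir` is hypothetical, and the assumption that an empty marker file is sufficient is not confirmed by this README.

```python
from pathlib import Path


def mark_aux_dir(directory):
    """Create a marker file named AUX_DIR inside `directory`.

    Assumption: an empty file is enough to flag the directory; the README
    only states that a file with this name must be present.
    """
    marker = Path(directory) / "AUX_DIR"
    marker.touch(exist_ok=True)
    return marker
```

Calling `mark_aux_dir("/path/to/extra_files")` before packaging would leave the other files in that directory untouched.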

Parameters available on GenePattern Server

- Available at: https://genepattern.ucsd.edu/gp/pages/index.jsf?lsid=urn:lsid:genepattern.org:module.analysis:00429:4.1

- Amplicon Architect Results (required)

  - Compressed (.tar.gz, .zip) files of the results from individual or grouped Amplicon Architect runs.

- project_name (required)

  - Prefix for the output .tar.gz. The result will be named: output_prefix.tar.gz

- Amplicon Repository Email

  - To transfer results directly to AmpliconRepository.org, enter your email.

- run amplicon classifier

  - Option for users to re-run Amplicon Classifier.

- reference genome

  - Reference genome used for the Amplicon Architect results in the input.

- upload only

  - If 'Yes', skip aggregation / classification and upload the file to AmpliconRepository as is.

- name map

  - A two-column file providing the current identifier for each sample (col 1) and a replacement name (col 2). Enables batch renaming of samples.
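
A name map file of the kind described above could be written with a short script such as the following sketch. The column delimiter is not stated in this README, so the tab separator here is an assumption, and the function name and sample identifiers are hypothetical.

```python
import csv


def write_name_map(mapping, path):
    """Write {current_id: replacement_name} as a two-column text file.

    Assumption: columns are tab-separated; adjust `delimiter` if the
    aggregator expects something else.
    """
    with open(path, "w", newline="") as handle:
        writer = csv.writer(handle, delimiter="\t", lineterminator="\n")
        for current_id, new_name in mapping.items():
            writer.writerow([current_id, new_name])


if __name__ == "__main__":
    # Hypothetical identifiers, for illustration only.
    write_name_map(
        {"sample_A": "patient01_tumor", "sample_B": "patient02_tumor"},
        "name_map.txt",
    )
```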

Installation

- Option 1: Git Clone

  - Step 1:

    git clone https://github.com/genepattern/AmpliconSuiteAggregator.git

  - Step 2: Install the Python package dependencies from the list below.

  - If you run into dependency issues, use the Docker method instead.

- Option 2: Docker

  - Step 1:

    docker pull genepattern/amplicon-suite-aggregator

Dependencies

- List of python package dependencies used: intervaltree, matplotlib, numpy, pandas, Pillow, requests, scipy, urllib3
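
Before running from a git clone, it can help to check which of the listed packages are missing. The sketch below uses only the standard library; the function name `missing_modules` is hypothetical, and note that Pillow is imported under the module name PIL.

```python
import importlib.util

# Import names for the packages listed above; Pillow is imported as "PIL".
MODULES = [
    "intervaltree", "matplotlib", "numpy", "pandas",
    "PIL", "requests", "scipy", "urllib3",
]


def missing_modules(module_names):
    """Return the top-level module names that cannot be found."""
    return [
        name for name in module_names
        if importlib.util.find_spec(name) is None
    ]


if __name__ == "__main__":
    missing = missing_modules(MODULES)
    print("Missing:", ", ".join(missing) if missing else "none")
```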

Options when running locally

Amplicon Suite Aggregator related options

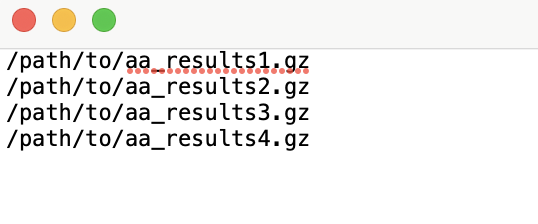

- -flist/--filelist: Text file with files to use (one per line).

  - Create an input_list.txt file in which each line is a filepath to compressed aa_results.

  - Use this file as the input to the -flist flag.

- --files: List of files or directories to use. Can specify multiple paths of (.tar.gz, .zip) of Amplicon Architect results.

- -o/--output_name: Output prefix. Will be used as the project name for Amplicon Repository upload.

- --name_map: A two-column file providing the current identifier for each sample (col 1) and a replacement name (col 2). Enables batch renaming of samples.

- -c/--run_classifier (Yes, No): If 'Yes', run Amplicon Classifier on AA samples. If Amplicon Classifier results are already included in the inputs, they will be removed and the samples will be re-classified.

- --ref (hg19, GRCh37, GRCh38, GRCh38_viral, mm10): Reference genome name used for alignment.
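
The input_list.txt file used with -flist can be generated rather than typed by hand. The following is a minimal sketch, assuming all compressed results sit directly in one directory; the function name `write_input_list` and the choice to write absolute paths are assumptions, not part of the tool.

```python
from pathlib import Path


def write_input_list(results_dir, list_path="input_list.txt"):
    """Write one path per line for each .tar.gz or .zip file in results_dir.

    Only the top level of results_dir is scanned; subdirectories are ignored.
    """
    root = Path(results_dir)
    archives = sorted(
        p.resolve()
        for p in root.iterdir()
        if p.is_file() and p.name.endswith((".tar.gz", ".zip"))
    )
    Path(list_path).write_text("".join(f"{p}\n" for p in archives))
    return archives
```

The resulting file can then be passed to the aggregator with -flist.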

AmpliconRepository related options

- -u/--username: Email address for Amplicon Repository. If specified, will trigger an attempt to upload the aggregated file to AmpliconRepository.org.

- --upload_only (Yes, No): If 'Yes', skip aggregation / classification and upload the file to AmpliconRepository as is.

- -s/--server (dev, prod, local-debug): Which server to send results to. 'prod' is what most users want; 'dev' and 'local-debug' are for development and debugging.

How to run

- If using local CLI:

  - Running aggregator:

    python3 /path/to/AmpliconSuiteAggregator/path/src/AmpliconSuiteAggregator.py **options**

  - Using API to upload directly to AmpliconRepository without aggregation:

    python3 /path/to/AmpliconSuiteAggregator/path/src/AmpliconSuiteAggregator.py --files /path/to/aggregated/file.tar.gz -o projname -u your.amplicon.repository.username@gmail.com --upload_only Yes -s prod

  - Log into Amplicon Repository. You should see a new project with the output prefix you specified.

- If using Docker:

  - Running aggregator:

    docker run --rm -it -v PATH/TO/INPUTS/FOLDER:/inputs/ genepattern/amplicon-suite-aggregator python3 /opt/genepatt/AmpliconSuiteAggregator.py **options**

  - Using API to upload directly to AmpliconRepository without aggregation:

    docker run --rm -it -v PATH/TO/INPUTS/FOLDER:/inputs/ genepattern/amplicon-suite-aggregator python3 /opt/genepatt/AmpliconSuiteAggregator.py -flist /path/to/input_list.txt -o projname -u your.amplicon.repository.username@gmail.com --upload_only Yes -s prod

  - Log into Amplicon Repository. You should see a new project with the output prefix you specified.
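
When the aggregator is called from another Python script, the command line can be assembled from the options documented above. This is a sketch only: `build_command` is a hypothetical helper, the script path is a placeholder, and passing several paths after a single --files flag is an assumption about how the option is parsed.

```python
import shlex
import sys


def build_command(script, files, output_name, username=None, server=None,
                  upload_only=None, run_classifier=None, ref=None,
                  name_map=None):
    """Return an argv list for the aggregator using the documented flags."""
    # Assumption: multiple input paths follow one --files flag.
    cmd = [sys.executable, script, "--files", *files, "-o", output_name]
    optional = [
        ("--name_map", name_map),
        ("-c", run_classifier),
        ("--ref", ref),
        ("-u", username),
        ("--upload_only", upload_only),
        ("-s", server),
    ]
    for flag, value in optional:
        if value is not None:  # only add flags the caller actually set
            cmd += [flag, value]
    return cmd


if __name__ == "__main__":
    cmd = build_command(
        "/path/to/AmpliconSuiteAggregator/path/src/AmpliconSuiteAggregator.py",
        ["/path/to/aggregated/file.tar.gz"],
        "projname",
        username="your.amplicon.repository.username@gmail.com",
        upload_only="Yes",
        server="prod",
    )
    # Print the command for inspection; pass `cmd` to subprocess.run to execute.
    print(shlex.join(cmd))
```

Building the arguments as a list avoids shell quoting problems with paths that contain spaces.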

Programming Language

- Python

Contact

For any issues

- Edwin Huang, edh021@cloud.ucsd.edu

Download files

File details

Details for the file ampliconsuiteaggregator-0.0.1.tar.gz.

File metadata

- Download URL: ampliconsuiteaggregator-0.0.1.tar.gz

- Upload date:

- Size: 22.6 kB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/5.1.1 CPython/3.10.2

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 12da381cd09a7bc843e9bcd50d082b4a11172123762d2062bd8c33436df51415 |
| MD5 | 2307b2d4b2b9f8a8acc83b4c89fd004f |
| BLAKE2b-256 | 835a85dd2748179ae3b85b9942f5c646480523378a0a4a1d6c08265b96b51815 |

File details

Details for the file AmpliconSuiteAggregator-0.0.1-py3-none-any.whl.

File metadata

- Download URL: AmpliconSuiteAggregator-0.0.1-py3-none-any.whl

- Upload date:

- Size: 25.5 kB

- Tags: Python 3

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/5.1.1 CPython/3.10.2

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | e6b701c249c2c2f40529a1a179394b7c622cdc22d85882da8a052e6fcfdb76c8 |
| MD5 | 9c4cc51a01a4de8d6b0b549b2780f4c6 |
| BLAKE2b-256 | b2da6dd769703aa9713b5176757ba08bb3d7651c538c8dbc4bda62370aef2403 |
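
A downloaded distribution file can be checked against the SHA256 digests listed above. The sketch below uses the standard hashlib module; the helper names `sha256_of` and `matches` are hypothetical, and the local file path is whatever location the file was downloaded to.

```python
import hashlib


def sha256_of(path, chunk_size=1 << 20):
    """Return the hex SHA256 digest of the file at `path`, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches(path, expected_hex):
    """Compare a file's SHA256 digest with an expected hex string."""
    return sha256_of(path) == expected_hex.strip().lower()
```

For example, `matches("ampliconsuiteaggregator-0.0.1.tar.gz", "12da381cd09a7bc843e9bcd50d082b4a11172123762d2062bd8c33436df51415")` should be true for an intact copy of the source distribution.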