Ease working with neural networks

Project description

This project aims to simplify working with neural networks. I will update the code as I learn.

I am currently pursuing a bachelor's degree in data science, and I am interested in machine learning and statistics.

GitHub Profile

Medium Profile

Website

GitHub Repository of the source code

PyPI link of the project

The code contains two classes: one for the Layer and one for the Network.

Layer Class

This class creates a layer of the neural network that can be used for further calculations.

def __init__(self,inputs:np.array,n,activation = 'sigmoid',weights=None,bias=None,random_state=123,name=None) -> None:

The constructor of the Layer class takes the following arguments:

- name - The name of the layer, defaults to None

- inputs - The inputs for the layer, shape = (n_x,m)

- n - The number of neurons you would like to have in the layer

- weights - The weights for the layer, initialized to random values if not given, shape = (n[l], n[l-1])

- bias - The bias for the layer, initialized to random values if not given, shape = (n[l],1)

- activation- The activation function you would like to use, defaults to sigmoid

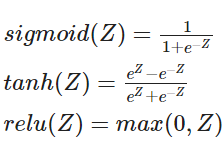

Can chose from ['sigmoid','tanh','relu']

Raises ValueError if the activaion function is not among the specified functions

Equations of activation functions for reference - random_state - The numpy seed you would like to use, defaults to 123

Returns: None
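The argument list above implies two behaviours: the activation name is validated, and missing weights and bias are drawn at random with the documented shapes. The following is a minimal sketch of that idea, not the package's actual code. The helper name `init_layer_params`, the constant `SUPPORTED_ACTIVATIONS`, and the use of `np.random.RandomState` with a standard normal draw are assumptions made for illustration.

```python
import numpy as np

# Assumed constant for this sketch; mirrors the documented choices.
SUPPORTED_ACTIVATIONS = ("sigmoid", "tanh", "relu")


def init_layer_params(n_prev, n, activation="sigmoid", random_state=123):
    """Hypothetical helper: validate the activation name, then draw parameters."""
    if activation not in SUPPORTED_ACTIVATIONS:
        raise ValueError(
            f"activation must be one of {SUPPORTED_ACTIVATIONS}, got {activation!r}"
        )
    # A local generator keeps the draw reproducible without touching global state.
    rng = np.random.RandomState(random_state)
    weights = rng.randn(n, n_prev)  # shape (n[l], n[l-1])
    bias = rng.randn(n, 1)          # shape (n[l], 1)
    return weights, bias


weights, bias = init_layer_params(n_prev=5, n=3, activation="relu")
print(weights.shape, bias.shape)  # (3, 5) (3, 1)
```

Calling the helper twice with the same `random_state` returns identical arrays, which is the practical point of exposing a seed.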

Example

# goal - create a layer of 5 neurons with the relu activation function, named 'First hidden layer', with randomly initialized weights

import numpy as np

x = np.random.randn(5,5)

layer1 = Layer(name='First hidden layer',inputs=x,n=5,activation='relu')

Fit function

def fit(self)->np.array:

Computes the output of the layer using the activation function given to that layer

It first calculates Z according to the equation

$Z^{[l]} = W^{[l]} \times X^{[l-1]} + b^{[l]}$

It then applies the activation function to Z using the formula

$a^{[l]} = g^{[l]}(Z^{[l]})$

Returns: np.array - Numpy array of the outputs of the activation function applied to Z
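The two equations above can be sketched in a few lines of NumPy. This is an illustration rather than the package's implementation: `layer_forward` and `sigmoid` are hypothetical names, and the product in the first equation is read as a matrix product, which is what the documented shapes (n[l], n[l-1]) and (n_x, m) suggest.

```python
import numpy as np


def sigmoid(z):
    """Element-wise logistic function."""
    return 1.0 / (1.0 + np.exp(-z))


def layer_forward(W, X, b, g=sigmoid):
    """Compute Z = W X + b, then return A = g(Z)."""
    Z = W @ X + b  # b has shape (n, 1) and broadcasts across the m columns
    return g(Z)


W = np.zeros((3, 2))                    # 3 neurons, 2 input features
X = np.array([[1.0, 2.0], [3.0, 4.0]])  # shape (n_x, m) = (2, 2)
b = np.zeros((3, 1))
A = layer_forward(W, X, b)
print(A.shape)  # (3, 2)
```

With all-zero weights and bias every entry of Z is 0, so every entry of A is sigmoid(0) = 0.5, which makes the example easy to check by hand.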

Example

# goal - you want to fit the layer to the activation function

outputs = layer1.fit()

Derivative function

def derivative(self)->np.array:

Calculates the derivative of the layer's activation function

Returns: np.array - Numpy array containing the derivative of the activation function
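For reference, the derivatives of the three supported activation functions have simple closed forms. The sketch below evaluates them at a pre-activation array `z`. The function name `activation_derivative` is hypothetical, and whether the package differentiates with respect to Z or reuses the stored activations is not stated in this document, so treat this as one plausible reading.

```python
import numpy as np


def sigmoid(z):
    """Element-wise logistic function."""
    return 1.0 / (1.0 + np.exp(-z))


def activation_derivative(z, activation):
    """Hypothetical helper: derivative g'(z) for the named activation."""
    if activation == "sigmoid":
        s = sigmoid(z)
        return s * (1.0 - s)            # g'(z) = g(z) (1 - g(z))
    if activation == "tanh":
        return 1.0 - np.tanh(z) ** 2    # g'(z) = 1 - tanh(z)^2
    if activation == "relu":
        return (z > 0).astype(float)    # 1 where z > 0, else 0 (0 at z = 0 by convention)
    raise ValueError(f"unsupported activation: {activation!r}")


z = np.array([-1.0, 0.0, 2.0])
print(activation_derivative(z, "relu"))  # [0. 0. 1.]
```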

Example

# goal - You want to calculate the derivative of the activation function of the layer

derivatives = layer1.derivative()

Network Class

This class creates a neural network from the list of layers passed to it

def __init__(self,layers:list) -> None:

The constructor of the Network class takes the following arguments:

- layers - The list of layer objects

Raises TypeError if any element in the layers list is not a Layer instance
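A type check like the one described above is usually a short loop over the list. The sketch below uses stand-in classes, `SketchLayer` and `SketchNetwork`, which are hypothetical names chosen so they are not mistaken for the package's own `Layer` and `Network`.

```python
class SketchLayer:
    """Stand-in for a layer object, used only in this illustration."""


class SketchNetwork:
    """Stand-in network that only performs the type check."""

    def __init__(self, layers):
        for i, layer in enumerate(layers):
            if not isinstance(layer, SketchLayer):
                raise TypeError(
                    f"layers[{i}] is {type(layer).__name__}, expected SketchLayer"
                )
        self.layers = list(layers)


nn_ok = SketchNetwork([SketchLayer(), SketchLayer()])
print(len(nn_ok.layers))  # 2
```

Reporting the index of the offending element in the error message makes it easier to find the bad entry in a long list.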

Example

# goal - To create a network with the following structure

# Input layer - 2 neurons

# First Hidden layer - 6 neurons with sigmoid activation function

# Second Hidden Layer - 6 neurons with tanh activation function

# Output Layer - 1 neuron with sigmoid activation function

X = np.random.randn(2,400)

layer1 = Layer(name='First hidden layer',n=6,inputs=X,activation='sigmoid')

layer2 = Layer(name='Second Hidden layer',n=6,activation='tanh',inputs=layer1.fit())

layer3 = Layer(name='Output layer',n=1,inputs=layer2.fit(),activation='sigmoid')

nn = Network([layer1,layer2,layer3])

Fit function

def fit(self)->np.array:

Propagates through the network and calculates the output of the final layer, i.e., the output of the network

Returns: np.array - The numpy array containing the output of the network
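Forward propagation amounts to feeding each layer's output into the next layer. The following sketch shows the idea for the 2-6-6-1 architecture used in this section. It does not use the package's classes: the `forward` function, the `ACTIVATIONS` table, and the representation of a layer as a `(W, b, activation_name)` tuple are all assumptions made for illustration.

```python
import numpy as np

# Assumed lookup table for this sketch.
ACTIVATIONS = {
    "sigmoid": lambda z: 1.0 / (1.0 + np.exp(-z)),
    "tanh": np.tanh,
    "relu": lambda z: np.maximum(0.0, z),
}


def forward(X, params):
    """params: list of (W, b, activation_name) tuples, first layer first."""
    A = X
    for W, b, name in params:
        A = ACTIVATIONS[name](W @ A + b)  # output of one layer feeds the next
    return A


rng = np.random.RandomState(123)
X = rng.randn(2, 400)  # 2 input features, 400 examples
params = [
    (rng.randn(6, 2), rng.randn(6, 1), "sigmoid"),
    (rng.randn(6, 6), rng.randn(6, 1), "tanh"),
    (rng.randn(1, 6), rng.randn(1, 1), "sigmoid"),
]
out = forward(X, params)
print(out.shape)  # (1, 400)
```

Because the last layer uses sigmoid, every entry of `out` lies between 0 and 1.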

Example

# Goal- to propagate and find out the outputs of the network

outputs = nn.fit()

Summary function

def summary(self)->pd.DataFrame:

Returns the summary of the network which is a pandas dataframe containing the following columns:

- Layer Name: The name of the layer

- Weights: The shape of the weights

- Bias: The shape of the bias

- Total Parameters: Total number of parameters initialized in the layer

Example

#Goal - To print the summary of the network

summary = nn.summary()

print(summary)

Output

| Layer Name | Weights | Bias | Total Parameters |
|---|---|---|---|
| First hidden layer | (6,2) | (6,1) | 18 |
| Second Hidden layer | (6,6) | (6,1) | 42 |
| Output layer | (1,6) | (1,1) | 7 |

Attributes of a network

- params - The list containing total number of parameters initialized at each layer of the network
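The per-layer totals follow directly from the documented shapes: a layer with n neurons and n_prev inputs has n × n_prev weights plus n biases. The sketch below reproduces the counts for the 2-6-6-1 network from this section. `count_params` is a hypothetical helper, not part of the package.

```python
def count_params(layer_sizes):
    """layer_sizes: [n_x, n_1, ..., n_L]; returns the parameter count per layer."""
    return [
        n * n_prev + n  # weights of shape (n, n_prev) plus bias of shape (n, 1)
        for n_prev, n in zip(layer_sizes, layer_sizes[1:])
    ]


print(count_params([2, 6, 6, 1]))  # [18, 42, 7]
```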

